{Update November 2015: Google rolled out a significant algorithm update on November 19, 2015 that had a strong connection to Phantom 2 from May 2015. Many sites that were impacted during Phantom 2 in May were also impacted on November 19 during Phantom 3. And a number of companies working to rectify problems saw recovery and partial recovery. You can learn more about Google’s Phantom 3 Update in my post covering a range of findings.}

Two years ago on May 8, 2013, I began receiving emails from webmasters that saw significant drops in Google organic traffic overnight. I’m not talking about small drops… I’m referring to huge drops like 60%+. As more emails came in, and I checked more of the data I have access to across websites, it was apparent that Google had pushed a big update.

I called it the Phantom update, since it initially flew under the radar (which gave it a mysterious feel). Google would not confirm Phantom, but I didn’t really need them to. I had a boatload of data that already confirmed that a massive change occurred. Again, some sites reaching out to me saw a 60%+ decrease in Google organic traffic overnight.

Also, Phantom rolled out while the SEO community was waiting for Penguin 2.0, so all attention was on unnatural links. But, after digging into the Phantom update from 5/8/13, it was clear that it was all about content quality and not links.

Phantom 2 – The Sequel Might Be Scarier Than The Original

Almost two years later to the day, we have what I’m calling Phantom 2. There was definitely a lot of chatter the week of April 27 that some type of update was going on. Barry Schwartz was the first to document the chatter on Search Engine Roundtable as more and more webmasters explained what they were seeing.

Now, I have access to a lot of Panda data, but I didn’t initially see much movement. And the movement I saw wasn’t Panda-like. For example, a 10-20% increase or decrease for some sites without any spikes or huge drops doesn’t set off any Panda alarms at G-Squared Interactive. With typical Panda updates, there are always some big swings with either recoveries or fresh hits from new companies reaching out to me. I didn’t initially see movement like that.

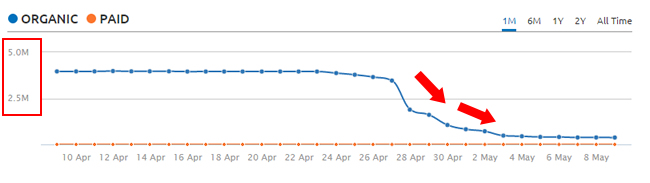

But that weekend (5/1 through 5/3), the movement seemed to increase. And on Monday, after having a few days of data to sift through, I saw the first real signs of the update. For example, check out the screenshot below of a huge hit:

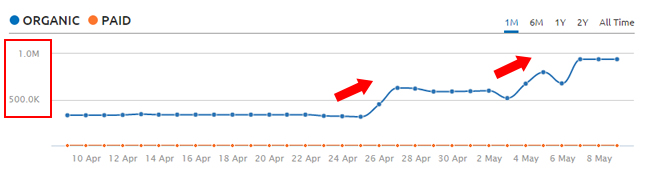

And as more chatter hit the Twitterverse, more emails from companies started hitting my inbox. Some websites had experienced significant changes in Google organic traffic starting on 4/29 (or even earlier). For example, here’s a huge surge starting the week of 4/27:

I dug into my Panda data, and now that I had almost a full week of Google organic traffic to analyze, I saw a lot of moderate movement across the sites I have access to. Many swung 10-20% either up or down starting around 4/29. As of today, I have an entire sheet of domains that were impacted by Phantom 2. So yes, there was an update. But was it Panda? How about Penguin? Or was this some other type of ranking adjustment that Google implemented? It was hard to tell, so I decided to dig into websites impacted by Phantom 2 to learn more.
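To make the size of those swings concrete, here is a minimal Python sketch of how you might compare a week of Google organic sessions before and after 4/29 and bucket the change. The domains and session counts are made up, and the cutoffs (10%, 20%, 60%) are only loosely based on the ranges mentioned in this post. Treat it as an illustration, not the tooling behind the analysis.

```python
def percent_change(before: float, after: float) -> float:
    """Percent change in sessions from the 'before' period to the 'after' period."""
    if before <= 0:
        raise ValueError("baseline traffic must be positive")
    return (after - before) / before * 100.0


def classify_swing(change_pct: float) -> str:
    """Bucket a swing. The cutoffs are illustrative assumptions, not official thresholds."""
    magnitude = abs(change_pct)
    direction = "surge" if change_pct > 0 else "drop"
    if magnitude >= 60:
        return f"huge {direction}"
    if magnitude >= 20:
        return f"large {direction}"
    if magnitude >= 10:
        return f"moderate {direction}"
    return "little movement"


# Hypothetical weekly Google organic sessions: (week before 4/29, week after 4/29).
sites = {
    "site-a.example": (50_000, 19_000),
    "site-b.example": (12_000, 13_800),
    "site-c.example": (8_000, 8_200),
}

for domain, (before, after) in sites.items():
    change = percent_change(before, after)
    print(f"{domain}: {change:+.1f}% ({classify_swing(change)})")
```

Comparing full weeks (rather than single days) helps avoid mistaking normal weekday and weekend fluctuation for an algorithm hit.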

Google Denies Panda and Penguin:

With significant swings in traffic, many webmasters automatically think about Panda and Penguin. And that’s for good reason. There aren’t many updates that can rock a website like those two characters. Google came out and explained that it definitely wasn’t Panda or Penguin, and that they push changes all the time (and that this was “normal”).

OK, I get that Google pushes ~500 updates a year, but most do not cause significant impact. Actually, many of those updates get pushed and nobody even picks them up (at all). Whatever happened starting on 4/29 was bigger than a normal “change”.

A Note About Mobile-Friendly:

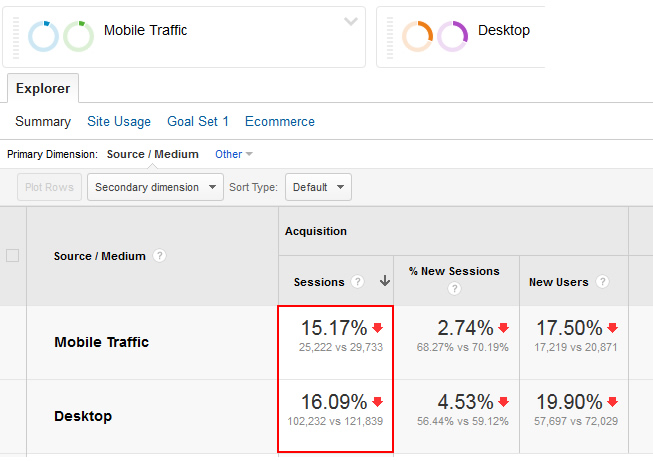

So, was this part of the mobile algorithm update from 4/21? No, it doesn’t look that way. Many of the sites impacted are mobile-friendly and the impact was to both desktop and mobile rankings. I don’t believe this had anything to do with the mobile-friendly update. You can read more about some of the mobile rankings changes I’ve seen due to that update in a recent post of mine.

Understanding The Signature of Phantom 2:

If you know me at all, then you know I tend to dig into algorithm updates. If there’s enough data to warrant heavy analysis, then I’m in. So I collected many domains impacted by the 4/29 update and started to analyze the decrease or increase in Google organic traffic. I analyzed lost keywords, landing pages from organic search, link profiles, link acquisition or loss over the past several months, etc. My hope was that I would surface findings that could help those impacted. Below, I have documented what I found.

Phantom 2 Findings – Content Quality Problems *Galore*

It didn’t take long to see a trend. Just like with Phantom 1 in 2013, the latest update seemed to focus on content quality problems. I found many examples of serious quality problems across sites heavily impacted by Phantom 2. Checking the lost queries and the destination landing pages that dropped out revealed problems that were extremely Panda-like.

Note, I tend to heavily check pages that used to receive a lot of traffic from Google organic. That’s because Google has a ton of engagement data for those urls and it’s smart to analyze pages that Google was driving a lot of traffic to. You can read my post about running a Panda report to learn more about that.
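If you want to try something similar on your own data, the rough idea can be sketched in a few lines of Python. This is only a simplified illustration, not the full Panda report process: it assumes you have exported Google organic sessions per landing page for a period before and after the hit, and the function name, URLs, numbers, and the `min_sessions` cutoff are all hypothetical.

```python
from typing import Dict, List, Tuple


def biggest_losers(
    before: Dict[str, int],
    after: Dict[str, int],
    min_sessions: int = 100,
) -> List[Tuple[str, int, int, float]]:
    """Return (url, before, after, pct_change) rows, largest session loss first."""
    rows = []
    for url, old in before.items():
        if old <= 0 or old < min_sessions:
            continue  # skip pages that never received much Google organic traffic
        new = after.get(url, 0)  # a page missing from 'after' received no visits
        if new < old:
            pct = (new - old) / old * 100.0
            rows.append((url, old, new, pct))
    # Sort by absolute sessions lost so formerly high-traffic pages surface first.
    rows.sort(key=lambda row: row[1] - row[2], reverse=True)
    return rows


# Hypothetical Google organic sessions per landing page.
before_hit = {
    "/tag/widgets": 9000,
    "/guides/widget-care": 4000,
    "/about": 50,
    "/blog/top-10": 2500,
}
after_hit = {
    "/tag/widgets": 1200,
    "/guides/widget-care": 4300,
    "/blog/top-10": 900,
}

report = biggest_losers(before_hit, after_hit)
for url, old, new, pct in report:
    print(f"{url}: {old} -> {new} ({pct:+.1f}%)")
```

The output is just a starting list. The real work is visiting those pages and judging content quality and user experience for yourself.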

Did Panda Miss These Sites?

If there were serious content quality problems, then you might be wondering why Panda hadn’t picked up on these sites in the past. Great question. Well, Panda did notice these sites in the past. Many of the sites impacted by Phantom 2 have battled Panda in the past. Again, I saw a number of sites I’m tracking swing 10-20% either up or down (based on the large amount of Panda data I have access to). And the big hits or surges during Phantom 2 also reveal previous Panda problems.

Below, I’ll take you through some of the issues I encountered while analyzing the latest update. I can’t take you through all of the problems I found, or this post would be massive. But, I will cover some of the most important content quality problems I came across. I think you’ll get the picture pretty quickly. Oh, and I’ll touch on links as well later in the post. I wanted to see if new(er) link problems or gains could be causing the ranking changes I was witnessing.

Content Quality Problems and Phantom 2

Tag Pages Ranking – Horrible Bamboo

One of the biggest hits I saw revealed many tag pages that were ranking well for competitive keywords prior to the update. The pages were horrible. Like many tag pages, they simply provided a large list of links to other content on the site. And when there were many links on the page, infinite scroll was used to automatically supply more and more links. This literally made me dizzy as I scrolled down the page.

And to make matters worse, there were many related tags on the page. So you essentially had the perfect spider trap. Send bots from one horrible page to another, then to another, and another. I’m shocked these pages were ranking well to begin with. User happiness had to be rock-bottom with these pages (and they were receiving a boatload of traffic too). And if Phantom is like Panda, then poor user engagement is killer (in a bad way).

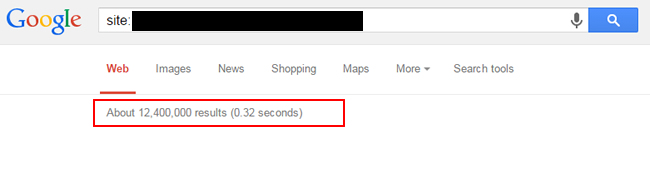

So how bad of a problem was this on the site I was analyzing? Bad, really bad. I found over twelve million tag pages on the site that were indexed by Google. Yes, twelve million.
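For a rough sense of how much of your own site is made up of tag pages, you could run something like the following Python sketch over a list of URLs from a crawl or sitemap export. Note the assumptions: it guesses that tag pages live under `/tag/` or `/tags/` (your site may use a different pattern), the URLs are invented, and it counts URLs in your export, not what Google has actually indexed.

```python
from typing import Iterable, Tuple
from urllib.parse import urlparse

# Assumed URL prefixes for tag pages; adjust to match your own site structure.
TAG_PREFIXES = ("/tag/", "/tags/")


def is_tag_page(url: str) -> bool:
    """True if the URL path starts with one of the assumed tag prefixes."""
    path = urlparse(url).path.lower()
    return path.startswith(TAG_PREFIXES)


def tag_page_share(urls: Iterable[str]) -> Tuple[int, float]:
    """Return (number of tag URLs, tag URLs as a percentage of all URLs)."""
    url_list = list(urls)
    if not url_list:
        return 0, 0.0
    tag_count = sum(1 for url in url_list if is_tag_page(url))
    return tag_count, tag_count / len(url_list) * 100.0


# Hypothetical URLs from a crawl export.
crawled = [
    "https://www.example.com/tag/widgets",
    "https://www.example.com/tags/blue-widgets?page=2",
    "https://www.example.com/guides/widget-care",
    "https://www.example.com/",
]

count, share = tag_page_share(crawled)
print(f"{count} tag pages ({share:.1f}% of crawled URLs)")
```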

Also, the site was triggering popups as I hit new landing pages from organic search. So if the horrible tag pages weren’t bad enough, now you had horrible popups in your face. I guess Phantoms don’t like that. I know I don’t. :)

Thin, Click-Bait Articles, Low Quality Supplementary Content

Another major hit I analyzed revealed serious content quality problems. Many of the top landing pages from organic search that dropped revealed horrible click-bait articles. The pages were thin, the articles were only a few paragraphs, and the primary content was surrounded by a ton of low quality supplementary content.

If you’ve read some of my previous Panda posts, then you know Google understands and measures the level of supplementary content on the page. You don’t want a lot of low quality supplementary content that can detract from the user experience. Well on this site, the supplementary content was enough to have me running and screaming from the site. Seriously, it was horrible.

I checked many pages that had dropped out of the search results and there weren’t many I would ever want to visit. Thin content, stacked videos (which I’ve mentioned before in Panda posts), poor quality supplementary content, etc.

Low quality pages with many stacked videos can have a strong negative impact on user experience:

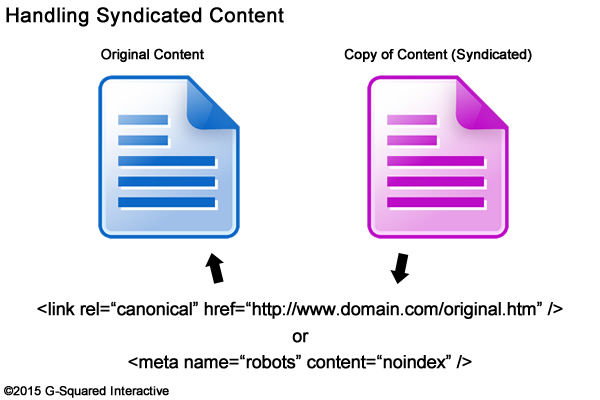

I also saw this site had a potential syndication issue. It was referencing third party sites often from its own pages. When checking those third party pages, you can see some of the content was pulled from those sites. I covered syndication after Panda 4.0 rolled out and this situation fit perfectly into some of the scenarios I explained.

Navigational Queries, Poor Design, and Low Quality User Generated Content

Another big hit I analyzed revealed even more content quality problems, plus the first signs of impact based on Google SERP changes. First, the site design was out of 1998. It was really tough to get through the content. The font was small, there was a ton of content on each page, there were many links on each page, etc. I’m sure all of this was negatively impacting the user experience.

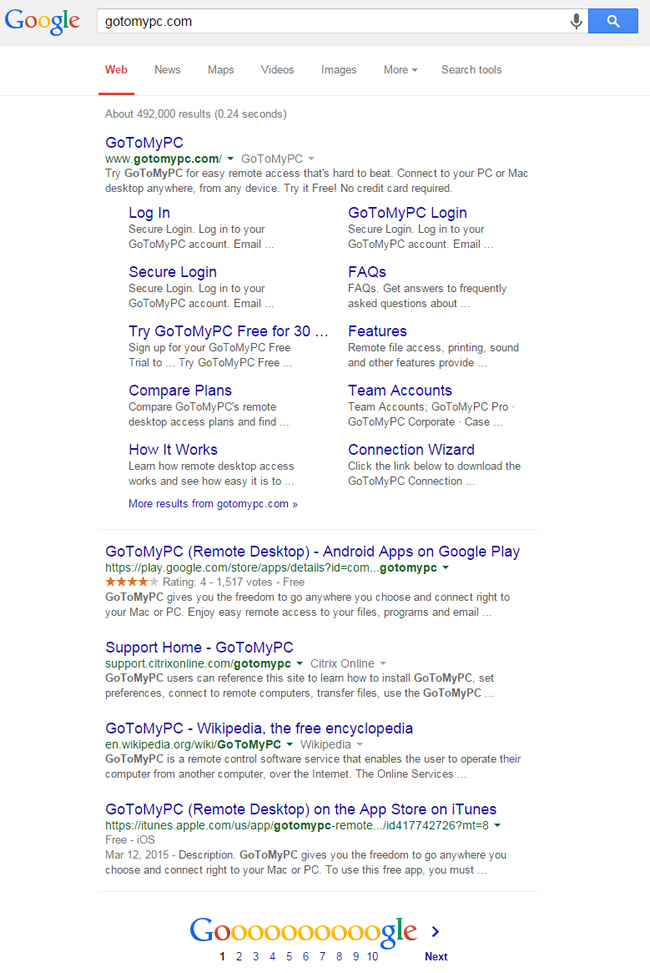

When checking lost rankings, it was clear to see that many queries were navigational. For example, users entering domain names or company names in Google. This site used to rank well for those, but checking the SERPs revealed truncated results. For example, there were only five listings now for some of those queries. There were times that the site in question dropped to page two, but there were times it dropped much more. And for some queries, there were only three pages listed in the SERPs.

An example of just five listings for a navigational query:

So when you combine giant sitelinks, truncated SERPs, limited SERP listings, and then some type of major ranking adjustment, you can see why a site like this would get hammered.

There were also user-generated content problems on the site. Each page had various levels of user comments, but they were either worthless or just old. I found comments from years ago that had nothing to do with the current situation. And then you had comments that simply provided no value at all (from the beginning). John Mueller explained that comments help make up the content on the page, so you definitely don’t want a boatload of low quality comments. You can check 8:37 in the video to learn more. So when you add low quality comments to low quality content you get… a Phantom hit, apparently. :)

Content Farms, Thin Content, Popups, and Knowledge Graph

Another interesting example of a domain heavily impacted by the 4/29 update involved a traditional content farm. If you’re familiar with the model, then you already know the problems I’m about to explain. The pages are relatively thin, don’t heavily cover the content at hand, and have ads all over the place.

In addition, the user experience gets interrupted by horrible popups, there’s low quality supplementary content, ads that blend with the results, and low quality user-generated content. Yes, all of this together on one site.

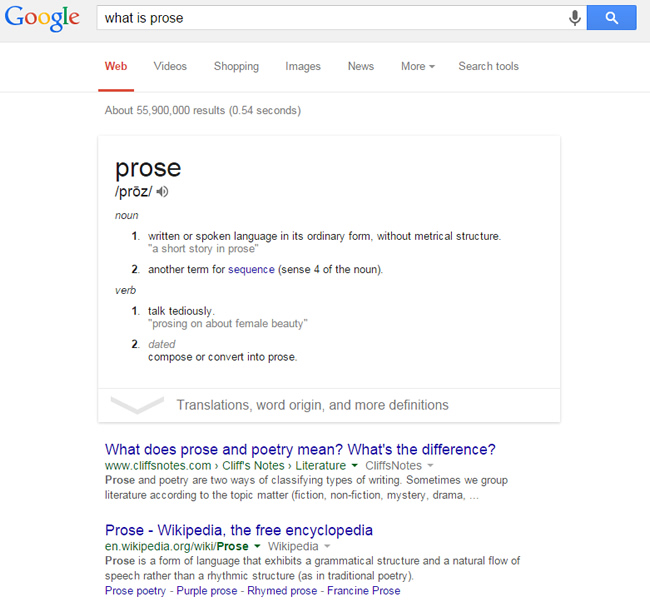

Also, when checking the drop in rankings across keywords, I often came across queries that yielded knowledge graph answers. It’s an interesting side note. The site has over 100K pages with content targeting “what is” queries. And many of those queries now yield KG answers. When you combine a ranking shift with a knowledge graph result taking up a large portion of the SERP, you’ve got a big problem for sure. Just ask lyrics websites how that works.

Driving Users To Heavy Ad Pages, Spider Trap

One thing I saw several times while analyzing sites negatively impacted by the 4/29 update related to ad-heavy pages. For example, the landing page that used to rank well had prominent links to pages that simply provided a boatload of text ads (they contained sponsored ads galore). And often, those pages linked to more ad-heavy pages (like a spider trap). Those pages are low quality and negatively impact the user experience. That’s a dangerous recipe for sure.

Directories – The Same Old Problems

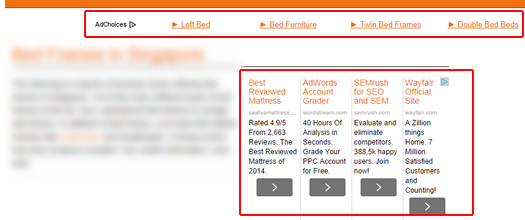

I reviewed some directory sites that were impacted by the 4/29 update and saw some of the classic problems that directories face. For example, disorganized content, thin content, and low quality supplementary content. I also saw deceiving ads that blended way too much with the content, which could cause users to mistakenly click those ads and be driven off the site (deception). And then there were pages indexed that should never be indexed (search results-like pages). Many of them…

An example of ads blending with content (deceiving users):

It’s also worth noting the truncated SERP situation I mentioned earlier. For example, SERPs of only five or seven listings for navigational queries and then there were some SERPs with only three pages of listings again.

I could keep going here, but I’ll stop due to the length of the post. I hope you see the enormous content quality problems riddling sites impacted by Phantom 2. To be thorough, though, I wanted to check links as well. I cover that next.

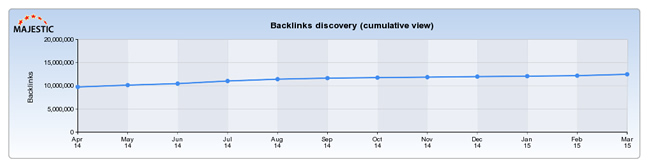

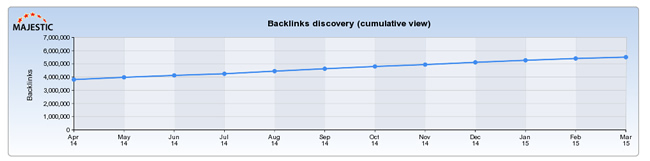

The Impact of Links – Possible, But Inconclusive

Now what about links? We know that many sites impacted had serious content quality problems, but did links factor into the update? It’s extremely hard to say if that was the case… I dug into the link profiles for a number of the sites both positively and negatively impacted and came out with mixed findings.

First, a number of the sites I analyzed have huge link profiles. I’m not talking about a few thousand links. I’m talking about millions and tens of millions of links per domain. That makes it much harder to nail down a link problem that could have contributed to the recent impact. There were definitely red flags for some domains, but not across every site I analyzed.

For example, some sites I analyzed definitely had a surge of inbound links since January of 2015, and you could see a certain percentage seemed unnatural. Those included strange inbound links from low quality sites, partner links (followed), and company-owned domains (also followed links). But again, the profiles were so large that it’s hard to say if those new(er) links caused enough of a problem to cause a huge drop in rankings during Phantom 2.

On the flip side, I saw some sites that were positively impacted gain many powerful inbound links over the past six to twelve months. Those included links from large publishers, larger brands, and other powerful domains. But again, there’s a lot of noise in each link profile. It’s very hard to say how much those links impacted the situation for this specific update.

Example of domains that were impacted by Phantom 2 but had relatively stable link profiles over the past year:

And to make matters even more complex, there were some sites that gained during the 4/29 update that had lower quality link profiles overall. So if links were a driving force here, then the sites with lower quality profiles should not have gained like they did.

My money is on content quality, not links. But hey, anything is possible. :)

Next Steps for Phantom 2 Victims:

If you have been impacted by the 4/29 update, here is what I recommend doing:

- I would take a hard look at content quality problems riddling your website. Just like Phantom 1 in 5/2013, I would audit your site through a content quality lens. Once you thoroughly analyze your content, then you should form a remediation plan for tackling those problems as quickly as possible.

- Understand the queries that dropped, the landing pages from Google organic that used to receive a lot of traffic, find engagement problems on the site, and address those problems. Try to improve content quality across the site and then hope you can recover like previous Phantom victims did.

- From a links standpoint, truly understand the links you’ve built over the past six to twelve months. Were they manually built, naturally received, etc? Even though my money is on content quality, I still think it’s smart to tackle any link problems you can surface. That includes removing or nofollowing unnatural links, and disavowing what you can’t get to.
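For the links step above, a simple way to narrow down what to review is to filter your link export to followed links first seen in the past twelve months. Here is a minimal Python sketch of that idea. The `Link` fields, domains, and dates are hypothetical, and real link tools name and format their export columns differently, so adapt accordingly.

```python
from dataclasses import dataclass
from datetime import date, timedelta
from typing import Iterable, List


@dataclass
class Link:
    """One inbound link row; field names are assumptions, not a real tool's schema."""
    source_domain: str
    first_seen: date
    nofollow: bool


def recent_followed_links(
    links: Iterable[Link], as_of: date, window_days: int = 365
) -> List[Link]:
    """Keep followed links first seen within the last `window_days` days."""
    cutoff = as_of - timedelta(days=window_days)
    return [
        link
        for link in links
        if cutoff <= link.first_seen <= as_of and not link.nofollow
    ]


# Hypothetical link export rows.
links = [
    Link("partner.example", date(2015, 2, 10), nofollow=False),
    Link("old-directory.example", date(2013, 6, 1), nofollow=False),
    Link("blog.example", date(2015, 3, 1), nofollow=True),
]

to_review = recent_followed_links(links, as_of=date(2015, 5, 11))
for link in to_review:
    print(link.source_domain, link.first_seen.isoformat())
```

A filter like this only tells you which links are new and followed. Deciding whether they are unnatural still requires a manual review.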

Summary – The Phantom Lives

It was fascinating to analyze Phantom 2 starting on 4/29 and to see the similarities with the original Phantom from 5/8/13. After digging into a number of sites impacted by the latest update, it was clear to see major content quality problems across the domains. I don’t know if Phantom is cleaning up where Panda missed out, or if it’s something completely separate, but there’s a lot of crossover for sure.

And remember, Penguin 2.0 rolled out just a few weeks after Phantom 1. It’s going to be very interesting to see if the next Penguin update follows that model. I guess we’ll find out soon enough. :)

GG

Valuable research and examples you’ve shared here Glenn. Thank you!

Thank you Donna. It was fascinating to analyze the sites heavily impacted. By Monday, 5/4, it was extremely apparent that the update was big. And it was interesting to see the similarities to the 5/8/13 update (almost 2 years ago to the day).

Hello Glenn,

This was a strange update, affecting the long tail in our case, another -10%.

As I told you ~6 months ago, we are running out of traffic.

Panda/Phantom affected us terribly, and even though we deleted 2/3 of the site (as possible thin content), the problem still exists, like a slow poison.

You should be aware of another strange phenomenon, which seems to be related to this quality update!

Since the last week of April, the CPC for many AdSense publishers has plummeted to a level unseen until now. We are talking about -40-60% for medium CPC, which was relatively stable for years.

The Webmasterworld AdSense forum (May thread) is screaming in terror.

Check with your affected and non-affected sites to see the trend of earnings.

I suspect we see the first quality update for adsense publishers!

Hi Bogdan. I’m sorry to hear you were negatively impacted by Phantom 2. And that’s really interesting about AdSense. I’ll have to check that out later today when I have some time. Thanks for the heads-up!

Ya Glenn I am seeing a crazy jump in traffic after the last week of April. And mobile only accounts for a small part of it. So definitely something else going on besides the mobile update. And no I have never been hit with a penalty. But I’ll take the extra traffic! :)

Great to see your numbers jump Brian. I’m glad Phantom liked your site. :) And I definitely agree about the mobile-friendly update. This was definitely not part of it… Like your site, every one I analyzed saw movement across both desktop and mobile traffic.

By far the best analysis on that update.

Thank you Valentin! I appreciate it. I’ll try and publish more about the update as I continue to dig into sites that were impacted. Stay tuned.

Hey, guys.

First off, good analysis Glenn. Kudos.

The more I read into the article, the clearer it became to me that we’re talking not about a Phantom update, but more about the Doorway pages update. Especially with the tag pages being hit. The doorway pages update is supposed to be acting quite similar to Panda, so that’s how people can mistake that for it. Google said in March that they are going to launch it:

http://googlewebmastercentral.blogspot.com/2015/03/an-update-on-doorway-pages.html, and apparently they did.

So, to me there is no mystery there.

Thanks for your comment Evgeni, but this was not the doorway page update. Many of the sites impacted did not employ doorway pages. And on that note, I’ve been monitoring known doorway pages across domains to see the impact of the doorway algo update. And they are still ranking as well now as before the update rolled out! So I’ve been calling that update a “dud”. :)

Phantom 2 was different. It impacted many sites across the web and heavily targeted a range of content quality issues (beyond what the doorway page algo would target). I’ll try and publish more information as I continue to dig into sites that were impacted.

Well, thank you for clarifying that. It makes more sense that way.

Thanks David!

Great to hear you weren’t impacted by Phantom 2, even after being impacted by the first Phantom in May of 2013. This was a big update… bigger than I initially thought. :)

Hmm – my main site doubled its traffic after this update! Great analysis – thank you sincerely.

Hey, great to hear you’ve surged. “When there is down, there is always up.” :)

Thank you Glenn! This has just confirmed what I relayed to a client about an hour ago so now I have a nice meaty analysis to share.

Same thing… drop was around the mobile update, but affected desktop more…. and they have a long standing thin content/duplicate content issue which I hope to see corrected by addressing it at a technical level and then creating richer content hubs to support the thin product page content.

Thanks Ralph. I’m glad my post could help! After checking many sites now impacted by Phantom 2, it was clear that content quality was the problem. I would definitely tackle the issues you mentioned… Good luck with everything.

Do you have a newsletter? I’d love to subscribe!

LOL, great question. I’ve been meaning to put one together, but I haven’t yet. You can subscribe to my blog, follow me on Twitter, G+, and Facebook. I’m extremely active across all three. :)

I totally agree with you. In the last few weeks my blog has been climbing the SERPs and getting more organic traffic, exactly because it is clean and has good content.

Finally I’m starting to see Google giving value to what matters: a good experience for visitors and good content, and not the SEO experts’ work, like before.

Great to hear you saw positive impact based on the latest update. I plan to publish additional findings as I analyze more sites impacted by Phantom 2 (both positive and negative), so stay tuned. But again, great to hear you saw an increase.

Thanks for your comment. That is interesting regarding direct traffic spiking, but I’m not seeing that across other sites impacted by Phantom. It must be something happening with your own traffic! Have you dug into which landing pages are receiving that traffic? That might help you figure out the spike.

Excellent article Glenn. Best one I have seen to date.

Thank you Kevin! I appreciate it. I plan to publish more findings as I dig into more data, so stay tuned.

Thanks Eric. I’m glad you found my post helpful. It was a big update… a lot bigger than I originally thought. There were some major hits and surges. I’m still digging into more impact and will probably write additional posts about Phantom 2. Stay tuned. :)

Hey Glenn, I’m seeing some sites that temporarily lost organic traffic and then got it back. They are smaller niche blogs/sites.

Example 1: http://screencast.com/t/UuN6aEq5jo

Example 2: http://screencast.com/t/VixFsiCPEAen

The content is pretty darn good on both of them. 99% squeaky clean. Are you seeing this as well? It’s like they got caught in the algo at first and then released.

Hi Dan. Thanks for posting this. I have definitely seen sites hit at various points after 4/29, but haven’t seen any bounce back like that. But, if we are seeing “tremors” like we did after Panda 4.0, then that’s entirely possible.

John Mueller explained to me back then that bigger changes can be followed by smaller tweaks to ensure things are working like they should be. That could be happening here. I hope that helps (and great to hear they bounced back). :)

Great analysis of the update, thanks Glenn. Looking forward to any follow-up posts!

Thanks Dustin. It’s been a fascinating update to analyze. Definitely subscribe to my feed and follow me on Twitter. I plan to publish more about this update soon based on additional sites I’m analyzing.

Great work Glenn

Very interesting analysis

Regards

Alan

Great article! I stumbled across this via an e-mail I received from Moz.com (I believe) and found it well worth my time.

I shared a link to it on twitter via my followers @BrooksClifford

Thanks for taking the time to not only compile the information but share it.

– Brooks

Thanks Brooks! I’m glad you found my post via Moz. It was a big update for sure.

Really impressive article. Great analysis of the impact of the Google algorithm updates on search and certain groups of websites. Thanks for providing such important insight. I particularly appreciate the data analysis making a distinction between Google mobile friendly update and the current trends in search.

Right, based on the timing of this update, many thought the mobile-friendly update was impacting them. It wasn’t. It was Phantom 2. :)

Thanks for the great post. Question: what do you mean by “horrible popups”?

I notice many popular websites using overlay popups where people subscribe to newsletters. Are you referring to these?

Hey, no problem. I was referring to disrupting the user experience as soon as someone hits the page via organic search. As soon as you land on the page, a giant popup hits you in the face. Not good, and can send horrible user engagement signals to the engines. For example, if someone gets angry and jumps back to the search results. That yields low dwell time.

Hi Glenn, great analysis and some really useful insights – thank you!

I’m an in-house SEO and we saw around a 20% drop in traffic and some noticeable ranking hits during Phantom 2.

We recently introduced cross-selling hover banners which appear 5 seconds after a user has been on specific landing pages. These have actually decreased our bounce rate and increased time on site, but do you think they could be seen as a negative quality signal by Phantom as we’re funnelling traffic away to a different section of our site from where Google has originally sent it?

Thanks,

Pete.

Thanks for your comment Pete. First, I’m sorry to hear you were negatively impacted by Phantom 2. The 20% drop lines up with many that were impacted BTW. There were definitely big hits and surges, but many sites fell into the 10-20% range. Just an interesting side note.

Regarding the banners, I would have to see them in action to know if they were a problem. Many popups are engagement killers, but it depends on the specific sites, how they were implemented, etc.

Also, I highly doubt one thing would cause problems from a Phantom standpoint. Almost every site I analyzed had a combination of quality issues. Sure, popups can be problematic, but they were usually combined with other problems (like the ones I listed above).

I have now reviewed many more sites impacted by Phantom and hope to write another post soon. It has been a fascinating update to analyze. Definitely stay tuned.

Hi Glenn, thanks very much for the response. I look forward to seeing another post on Phantom with your updated research, would be great to see!

Explains what I’m seeing. Thanks for all the work.

This is fantastic. I’ve been looking for some insight into the algorithm changes that hit us beginning of May. Several sites took a hit and I can agree with some of your reasons why. Thank you for this great work.

You bet, and I have more to share. I’ve been heavily analyzing the update and hope to publish another post soon. Stay tuned.

Very good & informative post Glenn. I saw an initial drop on one of my clients who’s had a history of great positions followed by a slow climb back up.

Have you seen any drops based on increased back link counts over your collection of data?

Thanks

Martin

This definitely didn’t look like a link issue. I analyzed the link profiles (top-level) of many sites impacted by Phantom 2. It focused on content quality. I hope that helps.

WOW! Outstanding analysis. Neither gaining, nor losing any traffic for the websites I am monitoring although some fluctuations on rankings took place the past few days. Thank you for your effort. Totally worth sharing ;)

I’m glad my post could clarify what’s been going on. It was a big update, much bigger than I originally thought.

I have seen our site’s rankings increase as Amazon affiliate sites were being dropped in the rankings. The last update (Penguin/Panda, I cannot remember which one) last fall, which was supposed to affect affiliate sites, did not affect anything in our industry until this week. Could it have been a slow rollout that they just finally expanded?

The last Panda update was on 10/24/14. It was a big update that I detailed in my post on Moz. http://moz.com/blog/the-danger-of-crossing-algorithms-panda-update-during-penguin-3

This is a separate update, although there does seem to be a lot of overlap from a content quality standpoint. So my guess is that the levers Google is pulling now with Phantom are impacting those affiliate sites. Very interesting. Thanks for your comment.

Hi Glenn,

I have noticed a sudden rise (200% growth in impressions) in the GWT impressions report from 15 April to 30 April, and then it went back to normal.

How should I take it ?

Thanks

James

Hard to say. This latest update didn’t really start rolling out until the week of the 27th. Google could have been testing the update… but it’s hard to say.

I’ve seen similar behavior prior to more than one past update. We came to the conclusion (theory, really) that prior to rolling out a major update, Google temporarily switches off many of their algorithmic filters, so they can watch it launch with less noise. As a result, some sites will shoot back up temporarily (which may allow them to overtake competitors, which appears like a false hit). When the filters are switched back on, things may then return to their previous state. Just a theory, but we’ve seen it so many times that it seems very likely, and it seems to make sense that they might do it. What’s your take on it, Gabe?

Interesting theory Doc. After Panda 4.0, I noticed a lot of additional movement (up or down for sites impacted by P4.0). So I reached out to John Mueller about it. I called them “Panda tremors” btw.

John said that larger changes or updates can be followed by smaller tweaks to make sure the algo is doing what it needs to do. I’ve seen tremors with Phantom as well, so my guess is that the ups and downs are tweaks that Google is making to the algo. Here’s more information about Panda tremors -> http://www.hmtweb.com/marketing-blog/google-panda-near-real-time-since-p40/

Hi Mate,

Firstly, great write up. Very detailed, so thanks!

I have to ask though, how sure are you that this isn’t the doorway update? Google updated the definition of doorway pages, and it isn’t what it used to be. I feel the doorway guideline update much more closely reflects content quality, and how the content is being linked to internally. So very similar to Panda essentially.

The reason I am questioning it, is essentially that we haven’t seen anyone (that I can find) that was hit by the doorway page update. The only update people have significantly been hit by is this phantom one. Obviously, Google doesn’t announce everything but they have been announcing quite a bit lately. And the only thing announced is the doorway update.

Looking at the questions they posted (http://googlewebmastercentral.blogspot.com.au/2015/03/an-update-on-doorway-pages.html), surely you can see that there could be a crossover?

My biggest one is this:

—-

Do these pages exist as an “island?” Are they difficult or impossible to navigate to from other parts of your site? Are links to such pages from other pages within the site or network of sites created just for search engines?

—-

The way I am interpreting this is content that isn’t heavily linked to from other parts of the site, i.e., if a crawler hits the homepage, how many levels does it need to go through to get to the specified page? Clickbaity style articles are like this because they would barely be linked to from high-value internal pages.
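To make the "how many levels" idea concrete, here is a minimal sketch in Python. It assumes you already have an internal link graph from a crawl (the `site_links` data and the URLs in it are hypothetical) and uses a plain breadth-first search to count clicks from the homepage. It only illustrates click depth and "island" pages; it is not a description of how Google evaluates doorway pages.

```python
from collections import deque


def click_depths(links, start):
    """Return the minimum number of clicks needed to reach each page from `start`.

    `links` maps a page URL to the list of internal URLs it links to.
    Pages that cannot be reached from `start` are left out of the result.
    """
    depths = {start: 0}
    queue = deque([start])
    while queue:
        page = queue.popleft()
        for target in links.get(page, []):
            if target not in depths:
                depths[target] = depths[page] + 1
                queue.append(target)
    return depths


# Hypothetical internal link graph, e.g. exported from a site crawl.
site_links = {
    "/": ["/category/", "/about/"],
    "/category/": ["/category/page-2/"],
    "/category/page-2/": ["/deep-article/"],
    "/orphan-article/": ["/"],  # links out, but nothing links to it
}

depths = click_depths(site_links, "/")

# Pages that exist in the crawl but cannot be reached from the homepage.
islands = sorted(set(site_links) - set(depths))
```

Pages with a large depth, or pages that land in `islands`, would be the ones worth a closer look under this interpretation.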

—-

Do the pages duplicate useful aggregations of items (locations, products, etc.) that already exist on the site for the purpose of capturing more search traffic?

—-

This to me, is the definition of a tag page.

So yeah, while it smells of something panda related, it also smells like the questions Google posed.

Your thoughts?

Cheers,

Sam.

Great article Glenn, thanks for the hard work & insights. Around the time of this update we lost traffic, but the home page seemed to take the hit; our content still ranks well and hasn’t changed much. We basically fell off the map over the course of a week. We were ranking on the first page for our high-level queries, and now we’re not in the top 50. As a result of this update, are you seeing internal pages take the hit, or is the update just identifying these problem sites and the home page is taking the hit?

Thanks for your question Bill. The update definitely looks like a domain-level demotion, but I am seeing different sections take different hits (percentage-wise).

Also, there are some sites that saw an increase in certain areas, where other areas got pummeled. I recommend running a Panda report like I explained in my post and digging into your top landing pages from Google organic (prior to the hit). That may reveal some content quality issues that you need to deal with. I hope that helps.
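As a rough illustration of that kind of landing page comparison, here is a minimal Python sketch. It assumes you have exported Google organic sessions per landing page for an equal-length period before and after the hit; the function name, the `min_sessions` threshold, and the sample numbers are all hypothetical, and this is not the exact report described in the post.

```python
def landing_page_changes(before, after, min_sessions=100):
    """Compare organic sessions per landing page before vs. after an update.

    `before` and `after` map landing page URLs to session counts.
    Only pages with at least `min_sessions` before the hit are included.
    Returns (url, sessions_before, sessions_after, percent_change) tuples,
    sorted so the biggest drops come first.
    """
    rows = []
    for url, old in before.items():
        if old < min_sessions:
            continue  # skip pages that had too little traffic to judge
        new = after.get(url, 0)
        change = (new - old) / old * 100
        rows.append((url, old, new, round(change, 1)))
    return sorted(rows, key=lambda row: row[3])


# Hypothetical exports: sessions from Google organic by landing page.
before_hit = {"/guide/": 1000, "/tag/widgets/": 400, "/tiny-page/": 20}
after_hit = {"/guide/": 900, "/tag/widgets/": 100}

report = landing_page_changes(before_hit, after_hit)
```

The pages at the top of `report` are the ones to review first for content quality problems.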

This is fantastic – thanks for the thoughtful analysis. I’m looking forward to any additional insights you have on this, since it looks like you’ve been able to put together data where others have none. Particularly, I’m interested in anything you’re seeing about how subdomains interact – for the sites that had a blog.site.com subdomain impacted, is the root domain also affected?

Thanks Chris. I plan to write another post soon based on analyzing more sites impacted by Phantom. I’ll share that post on Twitter, G+, and Facebook once it’s ready.

I have analyzed some sites using subdomains, and similar to what I explained in other comments, the impact ranges per subdomain. In other words, I’ve seen some subdomains get hit hard, others less, and then some with either no impact (or an increase). It really depends on the problems impacting the site, where those problems reside, etc. I hope that helps.

I enjoyed reading this great and worthy article, but my question is: if my website is hit by Google’s “Phantom” update, what would be my tactics to face the problem?

Perform a content audit, identify content quality problems, and fix those problems as quickly as possible. That might require nuking content, enhancing the content, breaking down engagement barriers, etc. Just like with Panda, “content quality problems” can mean many things.

This is what I was looking for. I got it, sir, and I really appreciate the time and effort you took to answer me.

Thank you, sir.

—> “And remember, Penguin 2.0 rolled out just a few weeks after Phantom 1.”

I’m looking forward to the latest iteration of Penguin to roll out :-)

We’ll see! It does sound like Google is close to pushing another Panda update (and possibly Penguin). John Mueller mentioned that in the latest Webmaster Hangout. :)

Outstanding analysis, Glenn! None of my clients saw any significant up or down that might be Phantom-driven, but a friend’s site has seen some mysterious traffic drops (80-85%) in this time-frame that I’ve promised to look into. Your write-up gives some interesting things to look at, so thanks for the time you put into this.

Looking forward to seeing any follow-up.

Hey, I’m glad my post could help Doc. 80-85% is obviously a severe hit. I’ve also seen some big hits and surges due to Phantom, so your friend isn’t alone. Look for another post from me soon. I have more data to share. :)

Hi Gabe, thank you for your analysis. I’ve seen traffic to some of our PBN sites drop sharply since last week. It hit young domains that had a lot of organic traffic. I was only focused on Mobilegeddon and Panda, but overlooked other “moves” from Google.

Interesting information Yudhie. Thanks for sharing. I don’t believe this had to do with age as much as overall quality. If you want to share any of the domains, feel free to email me. Thanks again.

Thanks for this Gabe… I’m still scratching my head since we did see a huge drop in traffic. From close to 15K per month down to maybe 2K per month. Even after reading your post I’m still confused. We don’t have panda issues, we don’t have thin content, we are not (actively) link building so I’ll continue to dig around. But it does explain how some of our clients lost traffic. LOL just not ours… *sigh

Thanks for explaining your situation Gabriella. I haven’t analyzed any sites impacted by Phantom that didn’t have content quality problems, so I would love to see the domain you are referring to. If you want to email me the domain, I can take a look. That’s really interesting… Thanks again.

Hi Glenn,

I have 2 clients with non-responsive, non-mobile friendly websites whose rankings on page one dropped around 4 to 5 places for each search term over 2 days May 10 & 11.

1 client is ranking for local UK city terms, 1 is ranking for global search terms.

My clients with responsive mobile friendly sites have not been affected thus far.

Funny thing was that the rankings for the 2 clients with old fashioned static sites were not affected after April 21 until May 10th.

It’s weird that it should take so long for the algo to hit these sites.

But now I’m going to check the other issues you mentioned in this post to see if they may be contributory factors.

Many thanks for your helpful observations. :-)

No problem Mike. I’m glad my post could point you in the right direction. If they were impacted by Phantom, then absolutely check the sites through a content quality lens. That’s definitely what this update focused on. Good luck!

Hey Glenn,

I have noticed an increase of traffic to my web hosting review site. I guess you can say I am on the good end of the phantom 2 update. For an entire year now I have been just focusing on creating pages with high quality content and only building a couple of authority backlinks to internal pages.

My advice to anyone that noticed a drop in traffic would be to axe pages with thin content, 301 them, and then just focus on building high quality content. None of my SEO clients have noticed any kind of drop either. All are increasing too.

I have been using “Post Admin Word Count” for WordPress to make sure all posts are at least 1,000+ words. Then I check to make sure the content is of some real value; otherwise I axe the content. Have you used this plugin to help you with this update?

Garen

Great to hear you were positively impacted by Phantom. And I agree about nuking low-quality content. It’s important to identify, and quickly deal with, content problems riddling a website.

Regarding word count, I wouldn’t focus on that. It’s not really about word count. It’s more about meeting user expectations based on their query. If you write a thorough piece based on the topic at hand, you should be fine. For some posts, that might be a lot of content, where other posts might need less. It depends on the topic.

Again, great to hear you are doing well after the update.

Hey Glenn,

Yeah, I would agree not to get caught up on the word count, as you say. As long as it’s good content and provides some real value, I would keep the piece of content. However, one thing I have also been doing is combining blog posts and 301 redirecting them. This works great for similar topics.

Do you have any advice for pages that I might consider 301ing? For instance, going to Google Webmaster Tools and looking at pages that barely get any views at all?

It will be interesting to see if Panda is rolled out this week. I always look forward to these Google updates because I am doing stuff right on my end :).

Thank you for this. It has answered a lot of questions for myself and someone else I work with. We had concluded that having loads of content on a site is not the answer, but quality content that’s worth a read and gets shared is going to hold a lot more weight. So your analysis is quite accurate, and confirms our suspicions. Back to the drawing board, I think.

Excellent, I’m glad my findings back up your strategy. It’s definitely not about the amount of content… it’s about the quality of content. Keep doing what you’re doing. :)

Thanks Syed! I’m glad my post was helpful. I have more to share soon, so stay tuned. :)

Interesting. I haven’t analyzed a site hit by Phantom that didn’t have a combination of content quality issues. Can you email me your domain? Thanks.

Glenn, are you still thinking this “Quality Update” is a domain-level penalty, or perhaps directory-level penalty? Jennifer Slegg is reporting that it’s a page-level penalty. Apparently she reached out to Google and got confirmation: http://www.thesempost.com/googles-quality-algo-update-what-we-know-about-it/.

I find it hard to believe it’s a pure page-level algo. I think it’s way more complex than that. In addition, I think it could be very dangerous for webmasters to think they could tweak a page and bounce back in real-time. That could lead to a lot of spinning wheels.

I have analyzed sites impacted by Phantom that saw specific sections get hammered, while others only decreased slightly, or were untouched. And then there were some that got completely hammered (losing traffic throughout the site). But I’ve seen Panda situations that were similar (and that’s domain-level). For example, hitting certain areas harder than others.

I plan to cover this in more detail as I gather more data based on analyzing more sites impacted by Phantom. So stay tuned.

One other note. John Mueller explained in a recent webmaster hangout video that there is no silver bullet for Phantom. He recommended taking a long-term approach to increasing quality. That’s much different than tweaking a page to see a result. :) I guess we’ll learn more soon as webmasters make changes based on the impact. https://www.youtube.com/watch?v=oMCwvBmD2Hk#t=2130

From what I’m seeing with client websites, and even one of my own, I’m in agreement with you that this is not a pure page-level update. It’s appearing to me that this is closer to an 80/20 approach of sorts… 80% of the sites’ pages saw a dip downward around 15-20% in organic traffic…and 20% of the pages saw a slight dip upward…but not nearly as much as the downward dips.

I’m also seeing more clear examples of competitors’ “higher quality” content ranking higher than some client content…indicating that the client wasn’t penalized per se but instead leapfrogged by a better content page that was even more useful to users.

Right, the change to the core ranking algorithm assesses quality signals differently, which can either result in a stronger or weaker score. So, based on the changes, pages could leapfrog others.

Note, I have seen horrible pages leapfrog others too… So clearly Phantom has some work to do. :)

But…you are still thinking this algorithm update is affecting sites more than just at a page-level?

And that’s the rub, Dan. The core ranking algorithm takes many factors into account. That’s why it’s complex. We obviously know the page is taken into account (based on many dimensions), but I think it’s even more complex than that. I do think there is a domain-level component to this (just like there’s a domain-level component to how any page on a site ranks).

And I think that’s part of the reason that John answered the question the way he did. There’s no silver bullet, not one technical problem you can overcome, etc. in order to bounce back. Work on quality over the long-term (on your *site*).

So to me, I would work on fixing quality problems overall with the site, and not just focus on specific urls. I think that’s dangerous (and can lead to a lot of spinning wheels). Just my 2 cents. :)

Great analysis Glenn and thanks for sharing your findings and advice. This post was very similar in content to Panda posts you have made in the past. If we didn’t know you were talking about Phantom 2 we could easily assume you are talking about another Panda.

I know you were covering the changes that have happened but it also fills in the blanks to how I’ve been thinking SEO works. I’ve done some of the more technical SEO changes on my site and read a lot into things but this article really hits home on how important well written, useful and readable content is. Thanks for doing the research and sharing it. Really helpful.

Do you recommend adding nofollow noindex to tag pages and also leaving them out of the sitemaps?

Depends on the tag pages per site. But for most, I would recommend using “noindex, follow”. And yes, remove them from your xml sitemap. You should only include canonical urls in your sitemap. I hope that helps.
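Here is a minimal Python sketch of that setup. It assumes tag pages live under a `/tag/` path and uses example.com URLs; both are assumptions for illustration, so adjust them to your own site. The sketch shows the robots meta tag that tag pages would carry and filters tag URLs out of the list that feeds the xml sitemap.

```python
from urllib.parse import urlparse

# Robots meta tag to place in the <head> of each tag page:
# keep the page out of the index, but let crawlers follow its links.
ROBOTS_META_FOR_TAG_PAGES = '<meta name="robots" content="noindex, follow">'


def is_tag_page(url, tag_prefix="/tag/"):
    """Return True if the URL path starts with the (assumed) tag prefix."""
    return urlparse(url).path.startswith(tag_prefix)


def sitemap_urls(urls, tag_prefix="/tag/"):
    """Return only the URLs that should be listed in the xml sitemap."""
    return [url for url in urls if not is_tag_page(url, tag_prefix)]


# Hypothetical list of URLs on a site.
all_urls = [
    "https://www.example.com/",
    "https://www.example.com/blog/phantom-update/",
    "https://www.example.com/tag/google/",
    "https://www.example.com/tag/panda/",
]

urls_for_sitemap = sitemap_urls(all_urls)
```

This only handles the tag page case; the sitemap should still be limited to canonical urls overall.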

Great and super thorough post! I just noticed that my traffic significantly dropped on May 1, as you can see. As I’ve eliminated all other possibilities, seems like it was clearly affected by this update. But I’m still puzzled. It’s an ecommerce site with only 1 product. There are no ads. There is a blog, but it’s extremely focused, seasonal (only in spring/summer to correspond with niche) and is built around images. Did you come across any domains with a similar issue? This traffic tank was brutal and obliterated my revenue for May.

I’m sorry to hear you were hit so hard. I would have to analyze the site to better-understand the problems content quality-wise. If you want to email me the domain, I can take a quick look.

Thanks so much Glenn! That would really be helpful. The only thing I can think of is keyword concentration, but haven’t seen any comments on that as part of this update. I’ll send over the domain now.

By far the most detailed analysis I’ve come across, and it replicates my findings/thoughts on what might be the culprit – thanks for taking the time to share this informative blog!

Excellent Article. In my agency, we do a lot of blogging, with long and original posts for each customer. For all of them, the traffic spike was +20%, minimum. My own blog had an increase of 60% in organic traffic in one month. All my articles are carefully loaded, with clean HTML, descriptive images, no keyword stuffing, good spacing, calls-to-action, over 500+ words. This month I am at 40%+ in traffic growth when compared to May. So, top quality content has been rewarded a lot.

Hi Glenn, this is a fantastic analysis of this Google Update. Thank you. I’m not actually involved with SEO for my company but rather I’m on the digital advertising side. What brought me to this piece is that in discussing the placement of ad banners on our site our SEO person suggested that our organic search results might be negatively affected by adding a top leaderboard to additional pages on our site. Note that all of our interior article pages have a top 728×90 banner, 300×250 medium rectangle on right rail and a 728×90 bottom banner. A good number of our landing pages have only the 300×250 medium rectangle. From a revenue generating perspective we are looking to add the top banner to our landing pages to exponentially increase revenue. Seems like really low-hanging fruit. However, adding this one top banner has been eschewed based on the potential impact on search from this Google update. I don’t agree that our site would be negatively impacted as a top banner seems like industry standard and thus we wouldn’t be dinged by this Phantom Update. I was hoping perhaps you could lend your expert opinion to our discussion. You can find pages with advertising on our site in the following section http://www.arthritis.org/living-with-arthritis/. Any help or feedback you could provide would be really appreciated. Thanks!

Thanks Steve. I’m glad my post was helpful! There’s no simple answer here. There’s a lot at play with Google from a quality standpoint (including Phantom, Panda, and other quality algos). And then you have the top-heavy algorithm, which can cause problems if there are too many ads (or any secondary content) pushing down your primary content.

Without analyzing your site, your search history, etc., it’s hard to tell you what to do. But I would be careful with adding any type of aggressive advertising to the site (size and/or functionality). If you disrupt the user experience, then that definitely could impact SEO. For example, users jumping back to the search results because they can’t easily find your content. In aggregate, that can be a killer SEO-wise.

You should also read my post about aggressive advertising and Google Panda. I’ve helped a lot of companies that pushed the limits, and got hit hard. I hope that helps! http://searchenginewatch.com/sew/how-to/2377004/google-panda-and-the-high-risk-of-using-aggressive-or-deceptive-advertising

BTW, did you jump during the Phantom Update in early May? Third party tools show a big increase for your site. That would be another reason to be very careful.

Thanks Glenn. Your post about aggressive advertising and Google Panda was very helpful too. I understand what you’re saying about too much above the fold advertising becoming a possible issue. But the advertising we’re talking about is not deceptive or aggressive. And I would assume as 80% of our page views/traffic already features a top banner that we would have felt any impact that would have come from the Phantom Update in May? Unfortunately, I don’t have any of our search data/history to analyze. I will note that we had a complete site redesign take place at the beginning of May which is most likely why third party sites are showing a big jump at that time. I guess the only way to really understand if a top banner on more pages would be detrimental is to run a test. But it sounds like there are a lot of unknowns out there and finding that ad/edit balance is pretty much a big mystery :)

It’s very hard to test that, since you wouldn’t know the impact for some time. Google needs to recrawl the pages, remeasure engagement, etc. before you would see an impact. So you could roll it out fully, then see the impact months down the line. Not good.

So you are replacing the top banner with a new one (just larger)? Maybe you should email me so we can take the conversation offline. It’s really hard to say without knowing more about the situation, seeing the actual ads, etc.

Thank you Glenn! I read through this and you rock.

Hey, I’m glad my post was helpful! Phantom was a huge update.

Phantom… Google likes to change everything and say “we are changing the algo each day”. Since 2015 we’ve had many phantoms ;)

Can you please share your analysis of the Fred update? Thanks.

Sure, here you go. I posted this on 4/18 and it covers three examples of impact from the 3/7 update (AKA Fred). :) https://www.gsqi.com/marketing-blog/march-7-2017-google-algorithm-update-fred/