If you’ve read some of my case studies in the past, then you know Panda can be a real pain in the neck for large-scale websites. That includes publishers, ecommerce retailers, directories, and other websites that often have tens of thousands, hundreds of thousands, or millions of pages indexed. When sites grow that large, with many categories, directories, and subdomains, content can easily get out of control. For example, I sometimes surface problematic areas of a website that clients didn’t even know existed! There’s usually a gap of silence on the web conference when I present situations like that. But once everyone realizes that low quality content is in fact present, then we can proceed with how to rectify the problems at hand.

And that’s how you beat Panda. Surfacing content quality problems and then quickly fixing those problems. And if companies don’t surface and rectify those problems, then they remain heavily impacted by Panda. Or even more maddening, they can go in and out of the gray area of Panda. That means they can get hit, recover to a degree, get hit again, recover, etc. It’s a maddening place to live SEO-wise.

The Insidious Thin Content Problem

The definition of insidious is:

“proceeding in a gradual, subtle way, but with harmful effects”

And that’s exactly how thin content can increase over time on large-scale websites. The problem usually doesn’t rear its ugly head in one giant blast (although that can happen). Instead, it can gradually increase over time as more and more content is added and edited, technical changes are made, new updates get pushed to the website, new partnerships are formed, etc. And before you know it, boom, you’ve got a huge thin content problem and Panda is knocking on the door. Or worse, it’s already knocked down your door.

So, based on recent Panda audits, I wanted to provide three examples of how an insidious thin content problem can get out of control on larger-scale websites. My hope is that you can review these examples and then apply the same model to your own business.

Insidious Thin Content: Example 1

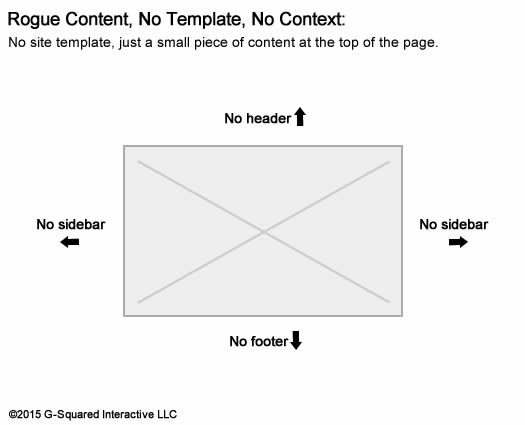

During one recent audit, I ended up surfacing a number of pages that seemed rogue. For example, they weren’t linked to from many other pages on the site, didn’t contain the full site template, and only contained a small amount of content. And the content didn’t really have any context about why it was there, what users were looking at, etc. I found that very strange.

So I dug into that issue, and started surfacing more and more of that content. Before I knew it, I was up to 4,100 pages of that content! Yes, there were over four thousand rogue, thin pages based on that one find.

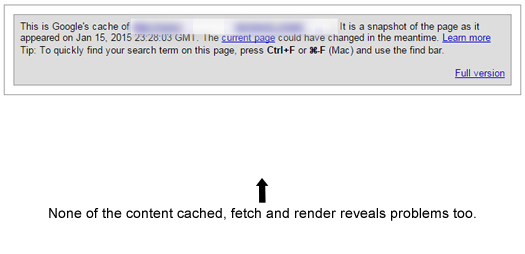

To make matters even worse, when checking how Google was crawling and indexing that content, you could quickly see major problems. Using both fetch and render in Google Webmaster Tools and checking the cache of the pages revealed Google couldn’t see most of the content. So the thin pages were even thinner than I initially thought. They were essentially blank to Google.

When bringing this up to my client, they did realize the pages were present on the site, but didn’t understand the potential impact Panda-wise. After explaining more about how Panda works, and how thin content equates to giant pieces of bamboo, they totally got it.

I explained that they should either immediately 404 that content or noindex it. And if they wanted to quicken that process a little, then 410 the content. Basically, if the pages should not be on the site for users or Google, then 404 or 410 them. If the pages are beneficial for users for some reason, then noindex the content using the meta robots tag.
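That decision can be written down as a tiny rule. Here is a minimal sketch in Python; the names `Handling` and `choose_handling` are hypothetical and only illustrate the logic described above, they are not part of any tool or CMS.

```python
from enum import Enum


class Handling(Enum):
    """Ways to take a thin page out of Google's index (illustrative names)."""
    NOT_FOUND = 404      # page is removed; the server answers 404
    GONE = 410           # page is removed; 410 signals it is gone for good
    NOINDEX = "noindex"  # page stays live for users but carries meta robots noindex


# The meta robots tag that goes in the <head> of a page that is kept but noindexed.
NOINDEX_TAG = '<meta name="robots" content="noindex">'


def choose_handling(useful_to_users: bool, speed_up_removal: bool = False) -> Handling:
    """Pick a treatment for a thin page.

    useful_to_users: the page still serves visitors for some reason.
    speed_up_removal: assumption that a 410 is preferred when the page
    should drop out a little faster.
    """
    if useful_to_users:
        return Handling.NOINDEX
    return Handling.GONE if speed_up_removal else Handling.NOT_FOUND
```

For example, `choose_handling(useful_to_users=False, speed_up_removal=True)` returns `Handling.GONE`, while any page that still helps users comes back as `Handling.NOINDEX`.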

So, with one finding, my client will nuke thousands of pages of thin content from their website (which had been hammered by Panda). That will sure help and it’s only one finding based on a number of core problems I surfaced on the site during my audit. Again, the problem didn’t manifest itself overnight. Instead, it took years of this type of content building on the site. And before they knew it, Panda came and hammered the site. Insidious.

Insidious Thin Content: Example 2

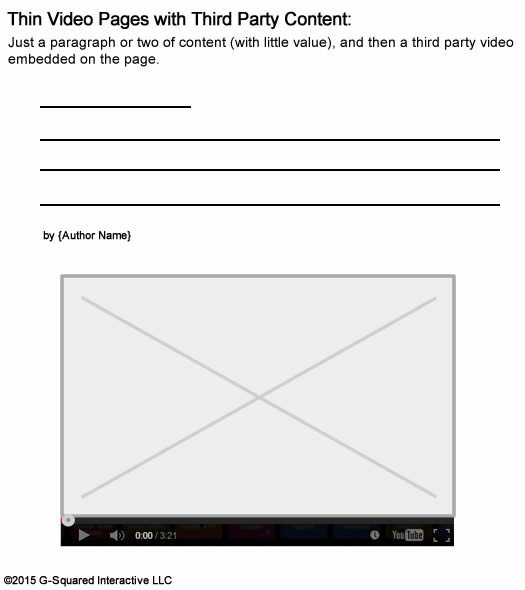

In another audit I recently conducted, I kept surfacing thin pages that basically provided third-party videos (which were often YouTube videos embedded in the page). So you had very little original content and then just a video. After digging into the situation, I found many pages like this. At this time, I estimate there could be as many as one thousand pages like this on the site. And I still need to analyze more of the site and crawl, so it could be even worse…

Now, the website has been around for a long time, so it’s not like all the thin video pages popped up overnight. The site produces a lot of content, but would continually supplement stronger content with this quick approach that yielded extremely thin and unoriginal content. And as time went on, the insidious problem yielded a Panda attack (actually, multiple Panda attacks over time).

Note, this was not the only content quality problem the site suffered from. It never is just one problem that causes a Panda attack by the way. I’ve always said that Panda has many tentacles and that low quality content can mean several things. Whenever I perform a deep crawl analysis and audit on a severe Panda hit, I often surface a number of serious problems. This was just one that I picked up during the audit, but it’s an important find.

By the way, checking Google organic traffic to these pages revealed a major decrease in traffic over time… Even Google was sending major signals to the site that it didn’t like the content. So there are many thin video pages indexed, but almost no traffic. Running a Panda report showing the largest drop in traffic to Google organic landing pages after a Panda hit reveals many of the thin video pages in the list. It’s one of the reasons I recommend running a Panda report once a site has been hit. It’s loaded with actionable data.
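The core of a Panda report is a before-and-after comparison per landing page. Below is a minimal sketch of that idea in Python. It assumes you have already exported Google organic sessions per landing page for a window before the hit and a window after it; the function name `panda_report` and the dictionary inputs are hypothetical, not an analytics API.

```python
def panda_report(before, after, top_n=10):
    """Rank landing pages by the largest drop in Google organic sessions.

    before / after: dicts mapping landing page URL -> sessions in the
    window before and after the suspected Panda hit (assumed inputs).
    Returns up to top_n tuples: (url, before, after, drop, percent_drop).
    """
    rows = []
    for url, sessions_before in before.items():
        # A page missing from the "after" export received no traffic.
        sessions_after = after.get(url, 0)
        drop = sessions_before - sessions_after
        if drop > 0:
            percent_drop = round(drop / sessions_before * 100, 1)
            rows.append((url, sessions_before, sessions_after, drop, percent_drop))
    # Largest absolute drop first.
    rows.sort(key=lambda row: row[3], reverse=True)
    return rows[:top_n]
```

Feeding it two small exports, such as `panda_report({"/video/a": 1000, "/guide": 500}, {"/video/a": 100, "/guide": 480})`, puts `/video/a` at the top of the list, which is the kind of page to review first.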

So now I’m working with my client to identify all pages on the site that can be categorized as thin video pages. Then we need to determine which are ok (there aren’t many), which are truly low quality, which should be noindexed, and which should be nuked. And again, this was just one problem… there are a number of other content quality problems riddling the site.

Insidious Thin Content: Example 3

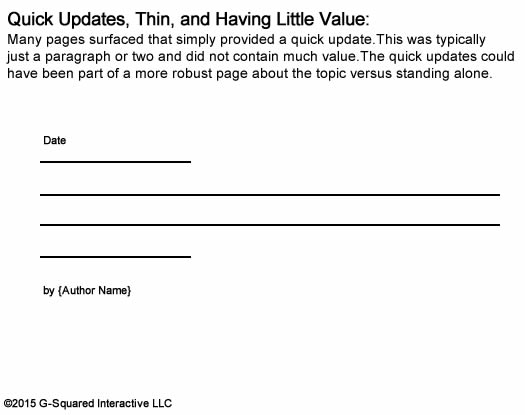

During another Panda project, I surfaced an interesting thin content problem. And it’s one that grew over time to create a pretty nasty situation. I surfaced many URLs that simply provided a quick update about a specific topic. Those updates were typically just a few lines of content all within a specific category. The posts were extremely thin… and were sometimes only a paragraph or two without any images, visuals, links to more content, etc.

Upon digging into the entire crawl, I found over five thousand pages that fit this category of thin content. Clearly this was a contributing factor to the significant Panda hit the site experienced. So I’m working with my client on reviewing the situation and making the right decision with regard to handling that content. Most of the content will be noindexed versus being removed, since there are reasons outside of SEO that need to be taken into account. For example, partnerships, contractual obligations, etc.
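To give a feel for how a crawl can be sliced to find a category like this, here is a rough sketch in Python. It assumes the crawl has been exported as (URL, extracted body text) pairs, and the 150-word cutoff is an arbitrary assumption for illustration, not a Panda threshold. Word count alone never decides quality; it only points to where to look.

```python
from collections import Counter
from urllib.parse import urlsplit


def thin_pages_by_section(pages, max_words=150):
    """Count thin pages per top-level site section.

    pages: iterable of (url, body_text) pairs from a crawl export (assumed format).
    max_words: illustrative cutoff; pages at or below it are counted as thin.
    """
    counts = Counter()
    for url, text in pages:
        if len(text.split()) <= max_words:
            segments = [s for s in urlsplit(url).path.split("/") if s]
            # Use the first directory as the section; root-level pages go under "/".
            section = "/" + segments[0] if len(segments) > 1 else "/"
            counts[section] += 1
    return counts
```

Calling `thin_pages_by_section(pages).most_common(5)` lists the sections holding the most thin pages, which helps show whether the problem is concentrated in one category.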

Over time, you can see that some of these pages actually used to rank well and drive organic search traffic from Google. That’s probably due to the authority of the site. I’ve seen that many times since 2011 when Panda first rolled out. A site builds enormous SEO power and then starts pumping out thinner, lower-quality content. And then that content ends up ranking well. And when users hit the thin content from Google, they bounce off the site quickly (and often back to the search results). In aggregate, low user engagement, high bounce rates, and low dwell time can be a killer Panda-wise. Webmasters need to avoid that situation like the plague. You can read my case study about “6 months with Panda” to learn more about that situation.

Summary – Stopping The Insidious Thin Content Problem is Key For Panda Recovery

So there you have it. Three quick examples of insidious thin content problems on large-scale websites. They often don’t pop up overnight, but instead, they grow over time. And before you know it, you’ve got a thick layer of bamboo on your site attracting the mighty Panda. By the way, there are many other examples of insidious thin content that I’ve come across during my Panda work and I’ll try and write more about this problem soon. I think it’s incredibly important for webmasters to understand how the problem can grow, the impact it can have, and how to handle the situation.

In the meantime, I’ll leave you with some quick advice. My recommendation to any large-scale website is to truly understand your content now, identify any Panda risks, and take action sooner rather than later. It’s much better to be proactive and handle thin content in the short-term versus dealing with a major Panda hit after the fact. By the way, the last Panda update was on 10/24, and I’m fully expecting another one soon. Google rolled out an update last year on 1/11/14, so we are definitely due for one soon. I’ll be sure to communicate what I’m seeing once the update rolls out.

GG

Great post Glenn, thanks! Can anyone say … Does Panda affect sites as a whole, or does Google apply a sliding scale (i.e. on a page-by-page basis) to determine the site’s visibility in SERPS?

Panda is a site-level demotion. So you will typically see a significant decrease in rankings across the site. Some pages might remain ok, while many others drop. It’s not uncommon in severe Panda hits to see a drop of 60% or greater from Google organic traffic. I even had one company reach out to me that saw a 91% decrease after Panda 4.0 (overnight). I hope that helps!

Thank you for the insight, what you have stated makes sense. Cheers!

Thanks Stephen! I’m glad you found my post valuable. You should check out my other case studies (both here on my blog and in my Search Engine Watch column). I think you’ll find them helpful too. :)

I had a Panda issue in 2011 (until 2012 when I finally fixed it). It seemed to be purely down to a Drupal install with hundreds of indexed profile pages with no content, plus a load of old orphaned pages with no content other than a title.

Thanks for your comment Jon. Yes, that’s exactly the type of issue I’m referring to. Large sites can easily build up many thin pages without even knowing it’s going on. And before you know it, you have a serious bamboo problem. Glad you figured it out and escaped the Panda filter. :)

Well, to be fair I did not figure it out. I spent ages removing shortish news posts and deleting old pages before somebody kindly pointed me in the right direction. I probably removed 400 pages of content that I did not need to in the end! I had a wonderful year after the penalty lifted, the best year ever. Then along came another P ….

Great post. Here’s my question, have you seen Panda situations where the traffic drop was more prominent on one section of the site, but the thin content was elsewhere on the site and didn’t suffer as much of a drop?

Hi Kathy. Panda is a domain-level demotion, but I have seen situations where certain areas of a site have gotten hit worse than others.

For your specific situation, it’s really hard to tell without analyzing the actual content, the drop in traffic, etc. That said, you can have low quality content problems from various areas of the site that are impacting the domain overall.

Basically, you need to identify *all areas* of low quality content across the entire site and deal with that accordingly. I hope that helps.

I love your articles and find them very helpful. If you are ever looking for new topics then I think a great topic would be how to analyze Google analytics and/or webmaster tools to find problematic pages and/or pages that need to be improved BEFORE being hit by Panda. What do you look for? If bounce rate is important as you have mentioned in other articles then do you look for the worst pages or pages over a certain % or can you find a benchmark. I would love to make a dashboard in analytics with the most important info and request updates when I reach certain targets (I think that is possible in analytics – such as an update when bounce rate is above certain %) but I’m not really sure what should be the most important things to include and what the metrics should be.

Thanks Marc. I’m glad you find my posts helpful! And that’s a great idea for a post. I’ll add it to my list of post ideas. Be sure to watch my SEW column and my blog (in case I write it soon).

Great article, thank you very much!

Just a question. I have a lot of pages blocked from crawling with robots.txt. We know that robots.txt does not work as noindex, so those pages are in Google’s index, even if without any information, because Google can’t crawl them. Do you think those pages are a problem with Panda? I suppose the best case is to have a noindex meta tag, but right now that is not possible for technical reasons.

Thanks

Yes, I would remove the pages from Google’s index via the meta robots tag. And you will need to stop disallowing those pages via robots.txt (so Google can actually get to the pages and see the meta robots tag). If they are indexed, they can hurt you.

Also, if you can remove them entirely (via 404 or 410), that’s another option. But I don’t know the site, the pages you are referring to, etc. I hope that helps.
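The robots.txt point above can be checked with a short script. This is a minimal sketch using Python’s standard `urllib.robotparser`; the helper name `noindex_can_be_seen` is hypothetical. Note that Python’s parser is only an approximation of how Googlebot matches rules (for example, wildcard handling can differ), so treat the result as a first check.

```python
from urllib.robotparser import RobotFileParser


def noindex_can_be_seen(robots_txt: str, url: str, user_agent: str = "Googlebot") -> bool:
    """Return True if robots.txt lets the crawler fetch the URL.

    If this returns False, the crawler never requests the page, so a
    meta robots noindex tag on that page cannot be read.
    """
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser.can_fetch(user_agent, url)


# Example robots.txt that blocks a directory (illustrative content).
robots = "User-agent: *\nDisallow: /private/\n"
blocked = not noindex_can_be_seen(robots, "https://example.com/private/page.html")
```

Here `blocked` is `True`, meaning the disallow rule has to be lifted before a noindex tag on that page can take effect.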

Hi, can you help me with a blog I recently created? Google Search Console sent me a message a few days ago about thin and low quality content, although I can’t find any such thing. The content there is my own creation and writing….

http://thedramasupdates.com

I’m sorry to hear that. Can you email me the manual action that was sent via GSC? I’ll try and take a quick look later. Thanks.