The Forgotten Coupling – With AI Overviews Surging in Web Search, Don’t Overlook Image Search, Video Mode, and the News Tab in Search

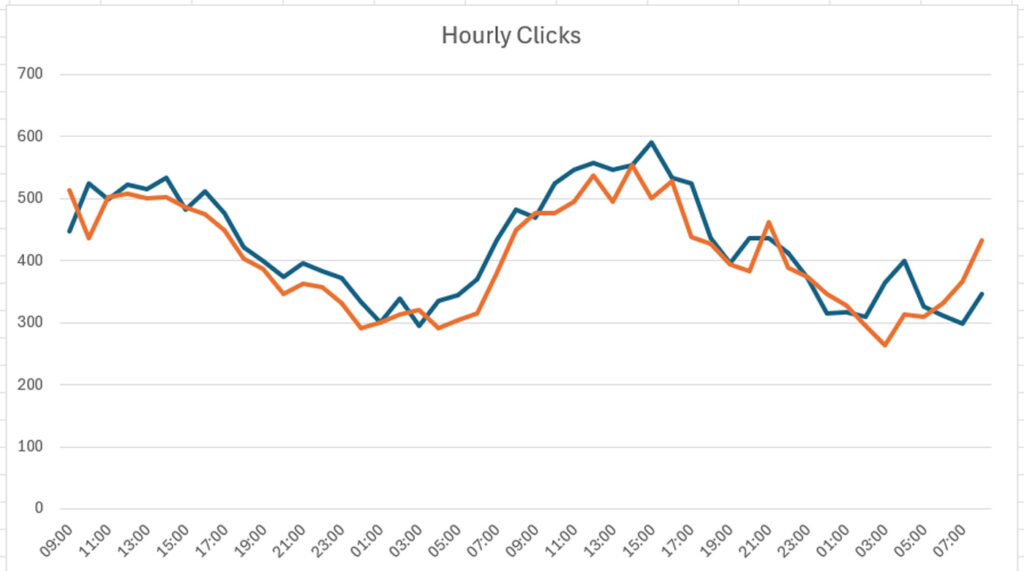

With the surge of AI Overviews, and soon AI Mode, site owners are dealing with an unprecedented situation from a search traffic standpoint. Impressions in search might be stable, or even increasing, yet clicks might be dropping. That’s often due to AI Overviews ranking in the SERPs for important queries that typically …