March 7, 2017 will not be forgotten any time soon (at least for SEOs). That’s when a major algorithm update rolled out that impacted many sites across the web. It was named Fred (unfortunately) and there was quite a bit of controversy surrounding the update. Google finally confirmed the update, which is great, but there was so much obvious impact that a confirmation almost wasn’t necessary. Some sites lost 50-60% of their Google organic traffic overnight (with some losing up to 90%). I’ve received many emails and calls from site owners that were impacted. So it was clear that Google pushed a big update.

There have been a number of posts written about the 3/7 update already, and my goal isn’t to rehash those findings. Instead, I wanted to provide three specific examples of impact from the Fred update. The examples I’m providing in this post include a major recovery (a site surging 125% overnight), a large-scale site that has been in the gray area of Google’s quality algorithms for a long time, and then a third site that got absolutely smoked by Fred (losing nearly 70% of its Google organic traffic since Fred rolled out).

We’ll jump into those cases after a quick introduction to Fred.

Fred – A Major Core Ranking Update Tied To Quality

After Fred rolled out, it was clear that it was a significant core ranking update tied to quality. If you’ve read my previous posts about Google’s major core ranking updates, you will see many mentions of aggressive monetization, advertising overload, etc. Well, Fred seemed to pump up the volume when it comes to aggressive monetization. Many sites that were aggressively monetizing content at the expense of users got hit hard.

Barry Schwartz covered that heavily in his post about Fred and I agree it was a strong factor. But as Barry pointed out, that’s not the only factor when it comes to major core ranking updates focused on quality. There are many “low quality user engagement” problems that can cause damage. For example, thin content, low quality content, UX barriers, mobile problems, aggressive monetization, aggressive affiliate setups, and more. You can read my two-part series about Phantom to learn more about what I’ve seen while helping companies with major core ranking updates focused on quality.

With that quick intro out of the way, let’s hop into specific examples of impact from the 3/7/17 update.

Example 1 – Soaring With Fred

The first example covers an amazing case study. It’s a site I’ve helped extensively over the years from a quality standpoint, since it had been impacted by Panda and Phantom in the past. It has surely seen its share of ups and downs based on major algorithm updates.

When Fred rolled out, it didn’t take long for the site owner to reach out to me in a state of Google excitement.

“Seeing big gains today. Traffic levels I haven’t seen in a long time… Is this going to last??”

Needless to say, I immediately dug in.

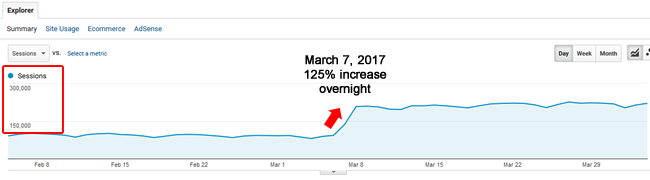

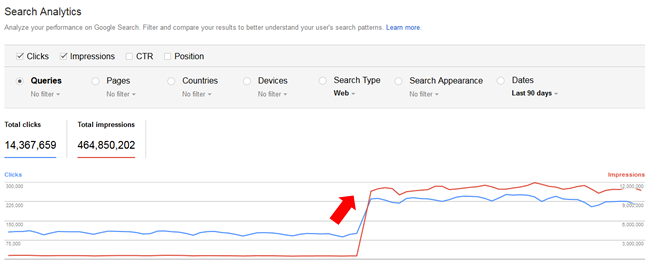

What I saw was a thing of beauty. It was a surge so strong that it would make any SEO smile. The site literally jumped 125% overnight (and this isn’t a small site with a minimal amount of traffic). Google organic surged by 110K sessions per day and has remained at that level ever since. Here are screenshots from Google Analytics and Google Search Console:

Reviewing the audits I performed for the site revealed many low quality content problems and “low quality user engagement” barriers. And that included aggressive monetization. So the remediation plan covered an anti-Panda and anti-Phantom strategy.

But it’s important to note that I helped this site over time and many of the significant changes were implemented a while ago. So it’s really interesting to see the site spike in March of 2017, when in my opinion, this should have happened sooner. It’s hard to say if the site finally crossed out of the gray area of Google’s quality algorithms or if there was something new introduced with Fred. Regardless, the site soared.

When I first started helping the site, there were thin content problems, no content problems (literally no content on a number of pages due to tech problems), aggressive advertising problems, mobile issues, performance problems, and more. Many changes were implemented over a long period of time. And then those changes STAYED in place during that time. That’s an important note. The site owners did not fall back to old ways… They stayed the course, which is a great lesson for other site owners dealing with quality problems.

On that note, when you experience a big recovery, that is NOT the time to revert to old ways from a monetization standpoint. It’s easy to have that mindset and fall victim to quick changes that can take down a site again. But don’t do it. Just because you’re back in Google’s good graces, doesn’t mean you will stay there. Do not reintroduce UX barriers, aggressive monetization tactics, etc. If you do, you could very well get hammered by the next major core ranking update focused on quality. Beware.

Example 2 – Tired of the gray area. Better late than never.

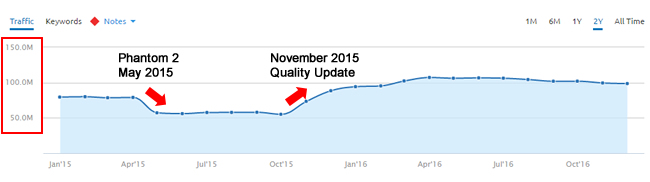

The next example I’m going to cover is a large-scale site driving a lot of traffic from Google organic historically. The site has been in the gray area of Google’s quality algorithms for a long time and has seen major drops and surges over time.

The company finally got tired of sitting in the gray area (which is maddening), and decided to conduct a full-blown quality audit to identify, and then fix, “low quality user engagement” problems. They began working on this in the fall of 2016 and a number of the changes did not hit the site until early 2017. In addition, there are still many changes that need to be implemented. Basically, they are in the beginning stages of fixing many quality problems.

As you can see below, the site has previously dealt with Google’s major core ranking updates focused on quality. Here’s a big hit during Phantom 2 in May of 2015, and then recovery during the November 2015 update:

When a site is in the gray area of Google’s quality algorithms, it can see impact with each major update. For example, it might drop by 20% during one update, only to surge 30% during the next. But then it might get smoked by another update, and then regain some of the losses during the next. I’ve always said that the gray area is a maddening place to live.
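To make the gray-area math concrete, here is a minimal sketch of how back-to-back percentage swings compound. The baseline of 100,000 daily sessions is a made-up number for illustration, and `apply_changes` is a hypothetical helper, not something from any analytics tool.

```python
def apply_changes(baseline, pct_changes):
    """Apply a sequence of percentage changes to a baseline, one update at a time."""
    level = baseline
    for pct in pct_changes:
        level *= 1 + pct / 100
    return level


baseline = 100_000  # hypothetical daily Google organic sessions

# A 20% drop followed by a 30% surge, as in the example above.
after = apply_changes(baseline, [-20, 30])
net_pct = (after / baseline - 1) * 100
print(f"{after:,.0f} sessions/day, net {net_pct:+.1f}%")

# A 20% drop needs a 25% gain (not 20%) to get back to the starting level.
recovered = apply_changes(baseline, [-20, 25])
print(f"{recovered:,.0f} sessions/day after -20% then +25%")
```

Each swing applies to the level left by the previous update, so the percentages can’t simply be added together. That’s worth keeping in mind when comparing a drop during one update with a surge during the next.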

When Fred rolled out on 3/7/17, the site dropped by approximately 150K sessions per day from Google organic. Now, the site drives a lot of traffic so that wasn’t a massive drop for them. But it also wasn’t negligible. In addition, the site increased during the early January update, so the company was expecting more upward movement, and not less.

Here’s a close-up view of the drop. More about the second half of that screenshot soon (not shown).

It can be frustrating for site owners working hard on improving quality to see a downturn, but you need to be realistic. Even though the site has a lot of high quality content, it also has a ton of quality issues. I’ve sent numerous deliverables through to this company containing problems to address and fix. Some of those changes have been rolled out, while others have not. Currently, there are still many things to fix from a quality perspective.

But the story doesn’t end here… Barry covered a possible update on 4/4 (check the comments for some interesting examples) and I tweeted shortly after that blog post was published that I was also seeing movement (specifically with sites that were impacted by the 3/7 update).

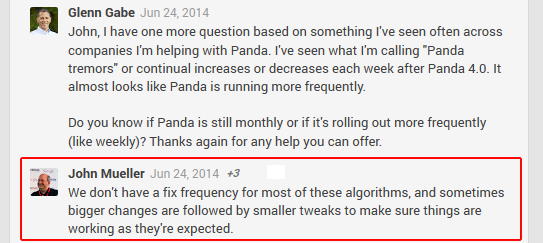

I’ve covered tremors in the past and that’s when Google can tweak an algorithm based on what they are seeing in the search results (after a major update rolls out). John Mueller explained that after I saw it happening after Panda 4.0. Here’s the conversation from G+:

Well, the 4/4 tremor looked just like that. I saw a number of sites that were impacted by the 3/7 update seeing additional movement (and several sites changed course). And one of those was the site I’m covering in this section of my post! This site, which lost ~150K sessions on 3/7, gained almost all of that back overnight. So the adjustment that Google made to the algo benefited this site greatly. And that increase has remained since 4/4.

Here’s the rest of that screenshot from above. Notice the site bounce back:

So, if you’re in the gray area of Google’s quality algorithms, work hard to exit as quickly as you can. Keep your head down and keep driving forward. Produce killer content, fix quality issues, tech SEO issues, UX barriers, advertising problems, and more. That’s how you can win during major core ranking updates focused on quality.

For this site, they are still in the process of fixing many issues. And then Google needs to recrawl those changes, and then see significant quality increases over time. They still have a long way to go, but it’s good to see them recover those lost sessions during a tremor.

Example 3 – Getting smoked.

A major hit based on UX Barriers, aggressive monetization, and low quality user experience.

The first two examples detailed a major surge and then a site dealing with the gray area of Google’s quality algorithms. Now it’s time to enter the dark side of Fred. There are many sites that got hammered by the 3/7 update, with some losing over 50% of their Google organic traffic overnight (and some lost up to 90%).

The next site I’m going to cover has dealt with Panda and major core ranking updates in the past. It’s a relatively large site, in a competitive niche, and is heavily advertising-based from a monetization standpoint.

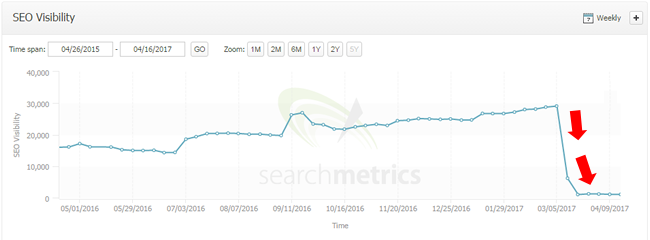

When Fred rolled through, the site lost over 60% of its Google organic traffic overnight. And with Google representing a majority of its traffic, the site’s revenue was clearly hit hard. It’s also worth noting that Google organic traffic has dropped even further since 3/7 (and is down approximately 70% since Fred rolled out).

UX Barriers Galore

If you’ve read my previous posts about Google’s major core ranking updates focused on quality, then you’ve seen many examples of what I call “low quality user engagement”. Well, this site had many problems leading up to the 3/7 update. For example, there were large video ads smack in the middle of the content, deceptive ads that looked like download buttons (which users could mistakenly click thinking they were real download buttons), Flash elements that don’t load and just display a shell, and more.

And expanding on the deception point from above, there were links in the main content that looked like internal links, but instead, those links whisked users off to third party advertiser sites. Like I’ve said a thousand times before, “hell hath no fury like a user scorned”. And it looks like Fred had their back.

To add insult to injury, the site isn’t even mobile-friendly. When testing the site on a mobile device, the content is hard to see, it’s hard to navigate around the site, etc. Needless to say, this isn’t helping matters. I can only imagine the horrible signals users are sending Google about the site after visiting from the search results.

This is why it’s so important to analyze your site through the lens of these algorithm updates. Understand all “low quality user engagement” problems riddling your site, weed them out, and improve quality significantly. That’s exactly what Google’s John Mueller has been saying when asked about these updates (including Fred). See a recent video below from John when asked about the 3/7 update (at 53:53 in the video):

Have you been impacted? Here’s what to do next.

At the risk of sounding like a broken record, you need to improve quality significantly (and keep increasing quality over the long-term). That’s what I’ve been saying for a long time, based on helping many companies deal with major core ranking updates. And it’s also what Google’s John Mueller has been saying repeatedly about core ranking updates (especially since Phantom 2 rolled out in 2015).

Here are some important bullets to consider:

- Perform a crawl analysis of your site and audit heavily through the lens of Google’s core ranking updates focused on quality.

- Identify major problems (including UX, content, and aggressive advertising), and fix them as quickly as you can. But don’t rush the changes… make sure they are implemented flawlessly. Don’t inject more problems onto your site.

- Read the Quality Rater Guidelines (QRG) thoroughly – and then read it again. Like I’ve said many times, it’s packed with amazing information directly from Google detailing what should be rated low and high quality. I’ve seen a serious connection between what I’m seeing in the field and what’s contained in the QRG.

- Don’t put band-aids on the situation. Fix as many of the problems as you can. Band-aids will keep you in the gray area of Google’s quality algorithms and you might not see much upward movement during subsequent updates.

- Work hard on publishing killer content over the long-term, building links naturally, building your brand, and improving user engagement. Again, Google needs to see significant improvement over the long term, not just over a few weeks.

- Periodically audit your site through the lens of “quality”. Don’t make changes and never revisit the situation. That’s how problems creep in. And when enough problems creep in, sites can get hammered. Don’t let that happen.

- You’ll need to wait for the next major core ranking update focused on quality in order to see movement. There’s not a set timeframe for those updates to roll out, although the past few have been almost exactly one month apart (1/6, 2/7, 3/7, and then 4/4). Google needs to refresh its quality algorithms, and when it does, you have a chance of recovering (or at least starting to recover). Keep your head down, work hard, and keep enhancing quality. If you do, you absolutely can recover. Don’t give up.
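As a starting point for the crawl analysis mentioned in the first bullet, here is a rough sketch that flags pages for manual review from a crawl export. Everything specific in it is an assumption: the column names (`url`, `word_count`, `ad_count`), the sample rows, and both cutoffs are invented for illustration. Google does not publish thresholds like these, so treat the output as a triage list, not a verdict.

```python
import csv
import io

# Hypothetical crawl export; column names and values are invented for illustration.
CRAWL_CSV = """url,word_count,ad_count
/guide-a,1850,3
/tag/misc,40,6
/empty-page,0,4
/review-b,900,2
"""

MIN_WORDS = 150              # assumed cutoff for "thin", not a Google threshold
MAX_ADS_PER_100_WORDS = 1.0  # assumed cutoff for a heavy ad load


def flag_pages(csv_text, min_words=MIN_WORDS, max_ad_rate=MAX_ADS_PER_100_WORDS):
    """Return (url, reasons) pairs for pages worth a manual quality review."""
    flagged = []
    for row in csv.DictReader(io.StringIO(csv_text)):
        words = int(row["word_count"])
        ads = int(row["ad_count"])
        reasons = []

        if words == 0:
            reasons.append("no content")
        elif words < min_words:
            reasons.append("thin content")

        # Ads per 100 words; a page with ads but no words counts as heavy.
        if words:
            ad_rate = ads / words * 100
        else:
            ad_rate = float("inf") if ads else 0.0
        if ad_rate > max_ad_rate:
            reasons.append("heavy ad load")

        if reasons:
            flagged.append((row["url"], reasons))
    return flagged


for url, reasons in flag_pages(CRAWL_CSV):
    print(url, "->", ", ".join(reasons))
```

A script like this only surfaces candidates. You still need to visit the flagged pages as a user would, since problems like deceptive ads or UX barriers won’t show up in a word count.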

Summary – Fred Likes Quality. Fred Doesn’t Like Aggressive Monetization.

Fred was a significant algorithm update that seemed heavily focused on “low quality user engagement” (just like other major core ranking updates focused on quality). But it also seemed to turn up the dial on aggressive monetization. If you’ve been impacted by the 3/7 update, then you need to analyze your site objectively, weed out “low quality user engagement” problems, and implement changes quickly. I recommend reading all of my posts about Google’s core ranking updates focused on quality and then digging into your site. Good luck.

GG

Please let me know how many ads we can run on our site. Is the number of ads also affecting quality?

Please help explain how to improve quality and UX. Many have told us to do so, but no one has explained what sort of procedure needs to be followed.

Thanks in advance

It’s definitely not about the number of ads. It’s more about “low quality user engagement”, which could include aggressive monetization. For example, are the ads weaved into the content (deception), do they push the main content down the page? Are there too many ads based on the amount of content?

Try and objectively go through your site as a user visiting from Google after searching for specific keywords. Can you find what you need? Are ads in the way? Can you tell the difference between ads and main content?

I recommend reading my two-part series about Google’s major core ranking updates focused on quality. There’s a lot of good information in those posts about what I’m seeing when helping companies address quality problems. I hope that helps.

Thanks Glenn, I’ve gone through your article and checked everything. There are just a few portions of content that are showing as duplicate or copied. Will that affect anything? Do I need to replace them with unique content?

And as far as my ads are concerned, they’ve been placed properly and aren’t pushing down my content.

Now I’m planning to add some new content and new pages. Will that be okay?

One last question:

I’ve seen lots of changes in page indexing. Even after using Fetch as Google, it’s taking too long for pages to get re-indexed. Why?

I’m glad you went through my series. It’s really hard to say what’s ok and what’s not without analyzing the site. But yes, I would definitely keep driving forward and publishing new, high-quality content. Don’t be afraid to do that. It can only help.

Regarding indexing, again, hard to say without analyzing the site, those urls, etc. But if Google deems your site as low-quality, it could take longer for those urls to get indexed. Google may not be prioritizing those urls for crawling/indexing.

And also keep in mind that it can take months (or longer) to see a recovery or upward movement. Google needs to see significant improvements in quality over the long-term. I hope that helps.

Hi Glenn, just discovered your posts and glad I did. I have an Amazon affiliate website with almost 300 posts in the kitchen niche. I want to try to audit it for quality. As I read about aggressive monetization, I ask myself if including Amazon links in the majority of the posts counts as aggressive. I mean, I have a post with FAQs, almost 7,000 words, where I answer over 150 questions in my niche (none of my competitors are doing this). It has no links to Amazon, and even so the page dropped in the SERPs on 17.05. I have a lot of posts that are not keyword-search focused, being product based, but they do have affiliate links, like 5 links to Amazon per 1,000 words.

Thanks for your thoughts

We have had over 6,000 lengthy and engaging comments on one series of posts on our site.

Do these comments have any impact on quality content analysis?

Yes, Google has explained that comments help make up the content on the page. You should make sure UGC is high quality, and if it is, then it can absolutely help with overall quality of the page, content-wise. I always recommend moderating heavily. Here’s a video of John Mueller explaining that (at 11:41 in the video): https://www.youtube.com/watch?v=Ba_qLBFlIe4&t=11m41s

Thank you

Not very Tech minded. What is UGC?

Of course 72% of our traffic is Social Media driven and the organic search is 11.2? Total acquisition 39,000 in past 18 days

No problem. UGC is user-generated content (for your situation, that’s comments).

Thank you……

Glenn, great research and good info as always. What’s your take on duplicate content in boiler plates? Have you seen anything that can give you a definitive conclusion on this issue?

Boilerplate content is typically not a problem. Google has mentioned this several times. It’s normal to have that on a site and Google can recognize that it is site-wide (and will NOT treat it as part of the main content). Here’s a video from John Mueller explaining more about how Google handles boilerplate content (at 21:54): https://www.youtube.com/watch?v=kfICh_rsOEo&t=21m54s

For major core ranking updates focused on quality, I would heavily focus on surfacing low-quality content, thin content, UX barriers, low-quality user engagement problems, etc. I hope that helps.

What is a UX barrier?

Anything that interrupts the user experience and can cause frustration. Popups, broken UI elements, navigation problems, ads, etc. They are pretty easy to pick up if you try to navigate the site with an objective in mind. I hope that helps.

Thank you Glenn!

Yes, I wrote a post covering the update. You should check it out. http://www.gsqi.com/marketing-blog/may-17-2017-google-algorithm-update/