A funny thing happened as I was finishing my last blog post about Google’s unconfirmed algorithm updates in 2015. I was getting ready to publish the post when the algo Richter scale in my office started to move, and move quickly. So I held off on publishing the post and waited for more data to come in. And I’m glad I did. As it turned out, we saw yet another significant unconfirmed algorithm update on November 19, 2015. And it was big. Really big.

I included some information about the 11/19 update in my post, but knew I would be digging in much further as I analyzed more sites that were impacted. And the more I dug in, the more movement I saw. There were many sites surging or dropping starting on 11/19 and it was hard to overlook the connection to previous “quality updates” in 2015. For example, many sites that surged or dropped during the 11/19/15 update also saw significant movement during the 2/5/15 update, Phantom 2 in early May, and then the September updates (9/2 and 9/16).

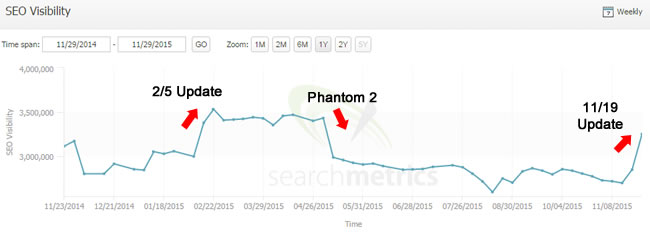

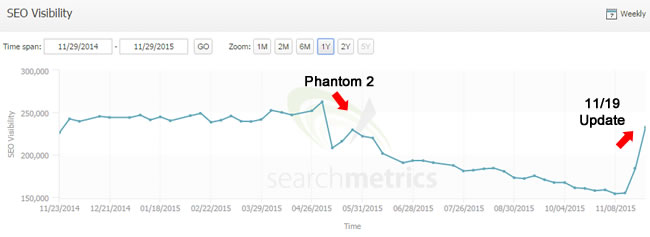

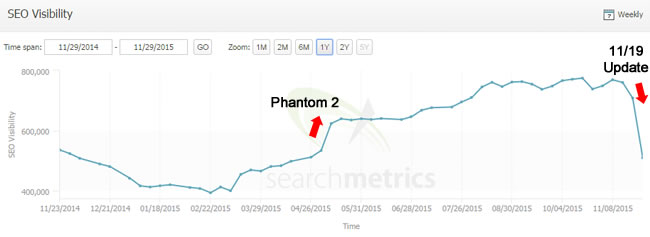

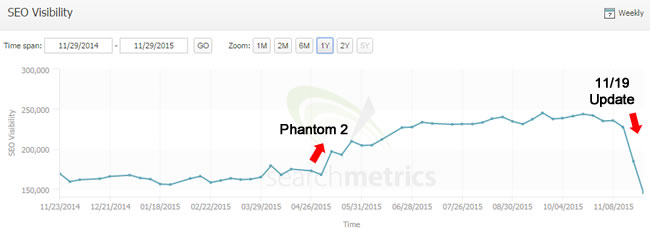

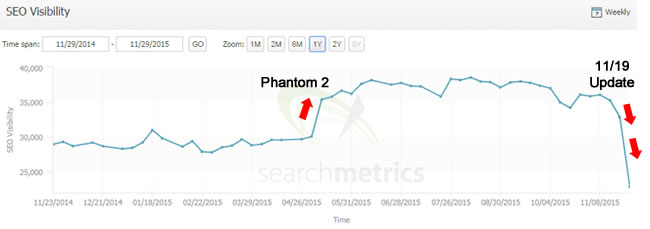

During my analysis, I saw a lot of trending that looked like the screenshot below. Notice the movement during each of the updates I mentioned above. Time and time again, I saw this type of trending (globally):

Phantom 2 and November 19 (or should I say Phantom 3, 4, etc.?)

For those of you not familiar with Phantom 2, I picked up a major algorithm update in early May of 2015. I named it Phantom based on its mysterious nature. It was a huge update that impacted many sites across the web. Google finally confirmed the update and said it was a change to how its core ranking algorithm assesses quality. Yes, that’s important to say the least. You can read more about Phantom in my post covering my findings and analysis.

After Phantom 2 rolled out, I knew it would take a lot of work for sites to recover from a major hit. That’s because I had seen a similar update before in May of 2013 (which I called Phantom 1). It took months of remediation work before sites began to recover. I believe the first recovery was about 2.5 months after the update rolled out on 5/8/13. Since Phantom 2 was eerily similar to the original Phantom update, I believed that recovery from Phantom 2 would take a similar amount of time. And it seems I was right.

The first signs of Phantom 2 recovery appeared during the September updates (9/2 and 9/16). I saw websites that had been negatively impacted by Phantom 2 in May of 2015 begin to jump during those updates. I also saw sites drop during the September updates that had seen movement during Phantom 2 and the 2/5 update. That led me to believe there was a connection between those updates. You can read more about the September updates in my post covering both 9/2 and 9/16.

November 19 – A Significant Quality Update

The more sites I dug into that were impacted by the 11/19 update, the more connections I saw to previous quality updates like the 2/5 update, Phantom 2, and the September updates. To illustrate that point, let’s take a look at some screenshots from Searchmetrics.

The Phantom Connection In Pictures:

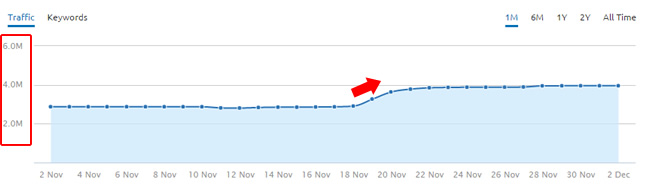

Positive Impact:

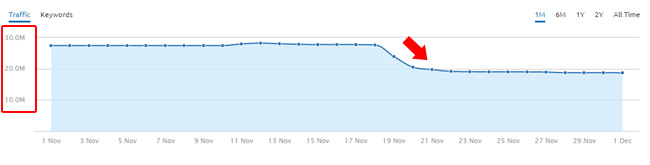

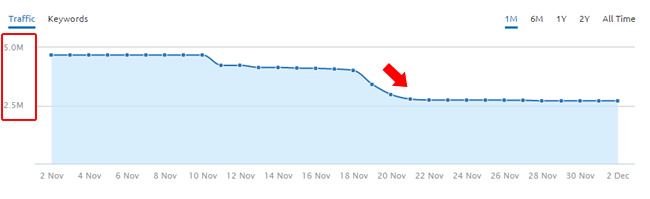

Negative Impact:

It’s crazy to see the connection to Phantom 2 in May of 2015, right?

And here are some screenshots of drops and gains from SEMrush (past 30 days):

Other Important Things We Know About The 11/19 Update

OK, so we know there was a major algorithm update on 11/19, but what else do we know about our Phantom-like friend? Well, based on my analysis over the past ten days, I do know some additional things. And they are important to note.

1. Rich Snippets Impacted

The 11/19 update seemed to be connected to the appearance or removal of rich snippets in the SERPs. Between my own clients seeing this, and new companies reaching out to me about the situation, I know of several sites where rich snippets either disappeared (for sites negatively impacted), or reappeared (for sites seeing positive movement).

To learn more about Google’s site-level quality evaluation and how it can impact rich snippets during broad core updates, read my latest post covering the topic. I provide a thorough rundown on how it works and show examples of it happening in the wild.

2. Tremor on 11/28

If you’ve read my posts about algorithm updates before, then you know that Google can roll out a significant algorithm update and then refine the algo to ensure it is seeing the best results possible. I’ve called these smaller updates “tremors” and John Mueller confirmed that Google can (and will) roll out these smaller changes. Well, there is a lot of evidence that a tremor rolled out on 11/28, and many of the sites impacted on 11/19 saw more impact starting on 11/28. Some went up more, some fell further, while others adjusted (going up after going down or vice versa). Keep this in mind while analyzing your own trending.

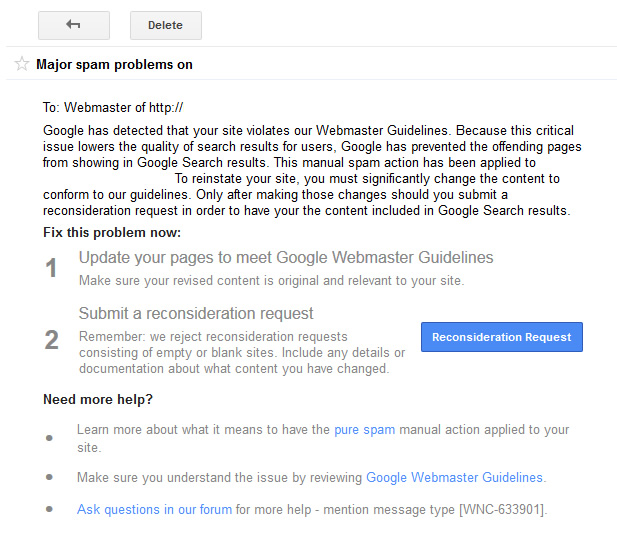

3. Pure Spam Manual Actions

I had a number of companies reach out to me after the 11/19 update explaining that they had just received a pure spam penalty (manual action). I found the timing of these manual actions very interesting. Here we have a major algorithm update targeting “quality” and at the same time Google dishes out a bunch of pure spam penalties. Coincidence? It seems like Google launched a full-blast attack on low quality content, poor user engagement, etc. It’s something to keep in mind.

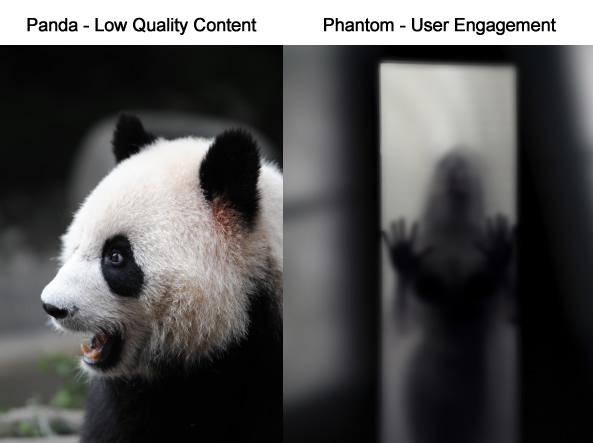

Phantom Versus Panda – User Engagement A Key Factor in 2015 Quality Updates

In my post about the unconfirmed algorithm updates in 2015, I explained that the various quality updates could have been Google deconstructing Panda and baking those elements into the core ranking algorithm. As I’ve mentioned many times before, Panda and Phantom seemed to target very similar things. For example, I found many content quality problems on sites pummeled by Phantom, even though most people would associate those problems with Panda.

But the more sites I analyzed that were impacted by the 2/5 update, Phantom 2 in May, and the 11/19 update, the more I started to realize the slight difference between the quality updates and Panda. And it came down to user engagement.

I’ll cover more about the negative impact I saw from the 11/19 update below, but I wanted to mention an overarching theme based on fresh hits. While viewing pages that dropped in rankings during the 11/19 update, and then the sites overall, it was hard to overlook the horrible user engagement problems riddling those websites. Time and time again, I was banging my head against my monitor in frustration. Sure, there were times low quality content reared its ugly head, but user engagement barriers seemed to be the major factor tying all of the fresh hits together.

So if that’s the case, then maybe Panda’s job is to focus more on “content quality” while Phantom and other quality updates focus on user engagement barriers. It’s important to cover this as we have two quality cowboys in town (Panda and Phantom).

Fresh Hits and Negative Movement

As I mentioned above, while analyzing sites that were negatively impacted by the 11/19 update, it was hard to overlook serious user engagement problems. In addition, deception reared its ugly head (just like it did during Phantom 2). For this post, I figured I would simply list some examples of problems I saw during my travels. This is by no means a final list of Phantom problems, but instead, a sampling of issues I believe can cause major problems with Google’s quality updates.

During my travels I saw:

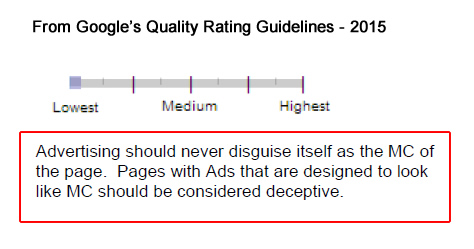

Ad Deception:

I’ve mentioned this many times before in posts about Panda and Phantom. I saw ads that looked like content woven into the page (into the primary content). The intent was clear… get as many people as possible to click the ads (even if they didn’t know they were ads). This included both display ads that matched the content and text ads that looked like part of the content or navigation.

It’s important to note that Google mentions ad deception in their freshly released Search Quality Rating Guidelines. Specifically, the guidelines state, “Pages with ads that are designed to look like MC (main content) should be considered deceptive.”

Clumsy UX

I visited a number of sites hammered by the 11/19 update that employed a clunky and clumsy user interface for accessing content, traversing the site, etc. That included horrible infinite scroll implementations, overlays that were hard to maneuver, and other extremely frustrating ways to access more information. And by the way, I have two large flat screens working in tandem in my office… If I was frustrated, I can’t imagine what the average user thinks while working on one smaller screen.

Let’s Play “Hide The Content”

I hit many sites that displayed gigantic ads at the top of the page that pushed the primary content below the fold (even on my giant monitors). And I even saw a few examples of giant ads that let you choose additional ads to play at the top of the page. Oh how nice of them. :) It was so confusing and frustrating. Think about someone searching for a topic, trying to find an answer, etc., and then hitting a page with giant ads that force them to scroll heavily to find any semblance of content. Not good. But let’s not stop there. Add video to the advertisements and you’ve got the perfect storm of user frustration. I saw that several times as well.

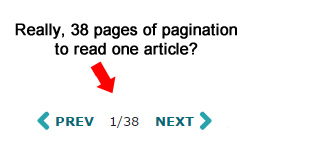

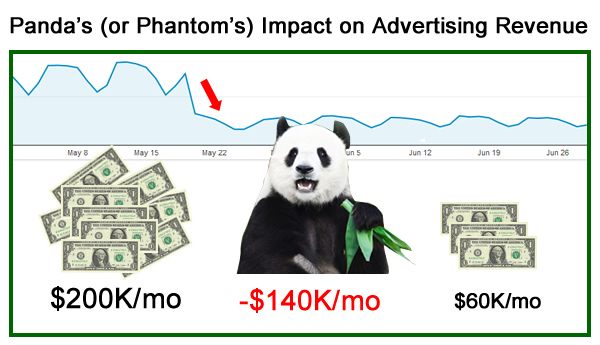

Excessive Pagination (for monetization purposes)

Forcing users to click through dozens of pages to view an article can cause serious user frustration. This is often done for monetization purposes, as each new component page can load more ads. Some sites I’ve helped with both Panda and Phantom problems were forcing users to click through 30 or more pages to view an article, with only a few paragraphs per page. A radical pagination change is a big move for many sites, since nuking excessive pagination means a drop in ad impressions. But after getting smoked by Panda and/or Phantom, there’s usually not much of a choice.

Low Quality and Disorganized Supplementary Content

High quality supplementary content can be extremely valuable for users. But low quality supplementary content can negatively impact the user experience and perception of the site. And if the supplementary content is completely disorganized, then it might not even matter how high quality it is… I saw some pages that seemed to throw supplementary content all over the page. It was very 1997-like.

For example, scattered links, thumbnails, and lists in the right sidebar, left sidebar, and all around the primary content. It looked thrown together… Needless to say, it’s frustrating for users to traverse a page like that. They could mistakenly click the wrong link (or several), and the credibility of the site in question drops quickly. Choose supplementary content wisely. Think about users first and monetization second.

Popups and Prompts As You Scroll

Another annoying issue I saw across sites impacted by the 11/19 update involved the triggering of popups or modal windows as you scrolled down the page. Some prompted you to like the page on Facebook while others asked for email addresses. And some sites mixed that with infinite scroll. Talk about annoying and frustrating. Imagine scrolling down a page through numerous articles, which then freezes to reveal a popup asking you to like a page on Facebook. Then you need to take action to close that popup, only to get back to a clumsy infinite scroll. I had to force myself to stay on those sites to analyze more of the impact. I really wanted to bolt… And that’s the core problem. I’m sure many others feel the same way.

So, if you’re Google and you pick up on those horrible signals, would you still drive traffic there? No freaking way.

And Many More Examples of User Engagement Problems…

I’ll stop here, but I hope this sampling of user engagement problems helps you better understand what the 11/19 update targeted (and previous updates like 2/5 and Phantom as well).

Side Note: Taming The Monetization Beast

You’ll notice a number of the problems listed above are tied to advertising and monetization. I’ve seen this many times in both my Panda and Phantom travels. You can read more about the impact of aggressive advertising on Panda in my Search Engine Watch column. And this problem definitely looks tied to Phantom as well. In a nutshell, don’t destroy your organic search traffic by pushing the envelope from a monetization standpoint. It won’t end well. Beware.

Phantom Recovery and Positive Movement

Now that I’ve covered a number of the user engagement problems riddling websites that were negatively impacted by the 11/19 update, let’s focus on the positive. As mentioned earlier, many sites that have been working hard to recover from Phantom 2 in May surged during the 11/19 update. Based on helping a number of companies with Phantom 2 hits, I’ll explain some of the actions they took after getting smoked.

First, review the list of negative factors above and reverse them. Seriously, although it’s just a sample of user engagement problems, it’s a healthy list to review (and has been tackled by a number of companies I’m helping with Phantom 2 hits). But to reinforce those points, here are some major items addressed by companies seeing recovery during the 11/19 update. You’ll see a lot of overlap with the negative list above.

Some changes by websites that saw recovery from Phantom 2 on 11/19:

Removed Ad Deception

As I explained earlier, ads should be clearly labeled as ads. They should not be woven into the content, and they should not be disguised as content (similar color scheme, layout, etc.). Don’t trick users into clicking ads. And this applies to affiliate links as well. Hell hath no fury like a user scorned.

Removed Annoying UX Problems

From clunky navigation to crazy infinite scroll implementations to popups and modal windows, be very careful with UX. If you frustrate users, Google will know about it. Removing user engagement barriers like this is critically important. Make it easy for users. Don’t let monetization get in the way of letting users find what they need. And don’t make traversing your website more like a warped game of whack-a-mole.

Nuked Thin Pages That Cannot Meet User Expectations

Hunting down thin and low quality content is extremely important from both a Panda and Phantom standpoint. If users searched for a solution, clicked through a search result, and found a thin piece of content that didn’t meet expectations, then that can send horrible signals back to the mothership. Once you surface thin and low quality content, you need to either nuke it or boost it. Some clients I’ve helped had hundreds of thousands of pages of thin content (or more). You need to tip the scales in the right direction.
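If you want a starting point for surfacing thin content at scale, a minimal Python sketch like the one below can triage a crawl export joined with analytics data. The `PageStats` fields and every threshold (300 words, 80% bounce rate, 50 organic entrances) are hypothetical placeholders, not values Google publishes. A flagged URL is only a candidate for manual review (nuke it or boost it), not a verdict.

```python
from dataclasses import dataclass


@dataclass
class PageStats:
    # Hypothetical record: one row per URL from a crawl joined with analytics.
    url: str
    word_count: int
    organic_entrances: int
    bounce_rate: float  # fraction between 0.0 and 1.0


def flag_thin_pages(pages, min_words=300, max_bounce=0.8, min_entrances=50):
    """Return URLs that look thin or fail searchers, for manual review.

    All thresholds are illustrative placeholders, not Google values.
    """
    flagged = []
    for page in pages:
        is_thin = page.word_count < min_words
        # Only trust the bounce rate when the page gets enough organic entrances.
        fails_users = (
            page.organic_entrances >= min_entrances
            and page.bounce_rate > max_bounce
        )
        if is_thin or fails_users:
            flagged.append(page.url)
    return flagged
```

Tune the thresholds against your own site's averages; a 300-word page can be perfectly fine if it fully answers the query.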

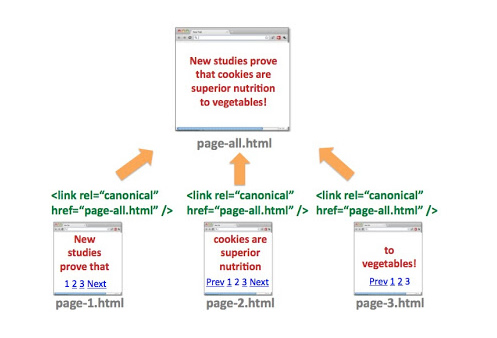

Cut Down, or Completely Removed Excessive Pagination

I mentioned earlier that some companies try to force users through many pages of pagination to read an article (for monetization purposes). Well, a number of companies I’ve helped with that problem changed their setup to reduce the amount of pagination, or completely nuked that tactic. The change resulted in higher quality content on one page plus happy users. In addition, some decided to keep the pagination, but provided a “view-all” page (and used the proper SEO setup to ensure the view-all is surfaced in the SERPs).
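For sites that keep pagination alongside a view-all page, Google's guidance at the time was to add a rel=canonical tag on each component page pointing to the view-all page. The Python sketch below simply generates those tags. The function name and example URLs are hypothetical, and it assumes the view-all page loads fast enough to serve as a good landing page.

```python
from html import escape


def view_all_canonical_tags(component_urls, view_all_url):
    """Map each paginated component URL to the canonical tag it should carry.

    Every component page points at the single view-all page, so search
    engines can consolidate signals there. Names are hypothetical.
    """
    # quote=True also escapes double quotes so the attribute stays well-formed.
    tag = f'<link rel="canonical" href="{escape(view_all_url, quote=True)}">'
    return {url: tag for url in component_urls}
```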

Provided Author and Source Information – YMYL

Google’s Quality Rating Guidelines mention content categorized as “your money or your life” or YMYL. These are pages that “impact the future happiness, health, or wealth of users”. For YMYL content, it’s important to know the author or source of the information. By providing it, you can build credibility and ensure users know the content is written by an experienced author (doctor, lawyer, industry expert, etc.). I recommend avoiding content attributed to “Author”, “Admin”, or “Anonymous”. Instead, name the actual author.

Here is information from Google’s Quality Rating Guidelines about Expertise, Authoritativeness, and Trust (E-A-T) and YMYL content.

“You should consider who is responsible for the content of the website or content of the page you are evaluating. Does the person or organization have sufficient expertise for the topic? If expertise, authoritativeness, or trustworthiness is lacking, use the Low rating.”

Revamped Content To *Exceed* User Expectations

When someone searches for a solution or answer, and then clicks through a search result, it’s critically important that they find a solid piece of content that meets (or exceeds) their expectations. There are many times that webmasters are too close to their own content to be objective. If you can’t meet or exceed user expectations, then maybe the content shouldn’t be on your site in the first place. It’s a tedious process to enhance a lot of content on a website, especially on larger sites, but it can help improve the experience for users.

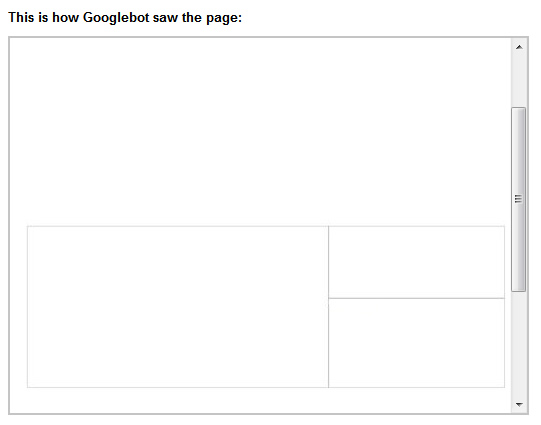

Fetch and Render in GSC – Understanding How Google Views Your Pages

Unfortunately, many still don’t know that you can use fetch and render in Google Search Console to truly understand how Google views your pages. You might be blocking important resources that help Google accurately render the page. And if Google cannot accurately render your page, then it might not be seeing everything it should see. In addition, there could be ads or content rendering in very strange ways. For example, I tested one site using fetch and render that revealed giant ads on top of their primary content. There was a technical problem causing that to happen. It was invisible to the naked eye, but Googlebot was seeing it. Not good. Fetch and render is your friend. Use it.

Below is an example of Googlebot running into major problems rendering content on a page. Run, don’t walk, to GSC to use fetch and render. You never know what you are going to find.

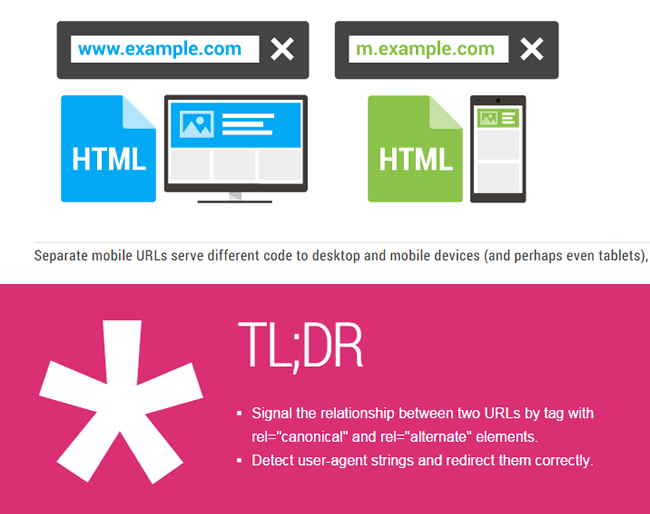

Properly Handling Mobile Pages (separate mobile urls)

I know of several sites that saw partial recovery during the 11/19 update after fixing how they handled their mobile URLs. Previously, they did not connect the desktop version of each URL to its mobile version as Google recommends. That means using rel=alternate on the desktop version pointing to the mobile version, and rel=canonical on the mobile version pointing back to the desktop version. Once those changes were implemented, Google could correctly associate the mobile version with the desktop version (and all that goes along with it from a rankings perspective).
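The bidirectional annotation described above can be sketched in Python as follows. It only builds the two link tags. The function name and the example.com URLs are illustrative assumptions, and the 640px media query mirrors the value used in Google's own separate-URLs examples, so match it to the breakpoint your site actually uses.

```python
from html import escape

# Media query used in Google's separate-URLs examples; adjust to your breakpoint.
MOBILE_MEDIA = "only screen and (max-width: 640px)"


def separate_url_annotations(desktop_url, mobile_url, media=MOBILE_MEDIA):
    """Build the bidirectional annotations for one desktop/mobile URL pair."""
    # Goes in the <head> of the desktop page: points to the mobile equivalent.
    desktop_tag = (
        f'<link rel="alternate" media="{escape(media, quote=True)}" '
        f'href="{escape(mobile_url, quote=True)}">'
    )
    # Goes in the <head> of the mobile page: points back to the desktop URL.
    mobile_tag = f'<link rel="canonical" href="{escape(desktop_url, quote=True)}">'
    return {"desktop": desktop_tag, "mobile": mobile_tag}


tags = separate_url_annotations(
    "https://www.example.com/page-1", "https://m.example.com/page-1"
)
print(tags["desktop"])
print(tags["mobile"])
```

Generating both tags from the same pair keeps the two directions in sync, which is the point of the annotation: a one-way link leaves Google guessing about the relationship.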

Reeling In, and Organizing, Supplementary Content

I mentioned earlier that low quality and disorganized supplementary content can be problematic, for example, irrelevant secondary content around (or woven into) the primary content. On the flip side, providing high quality supplementary content in an organized fashion can actually enhance the user experience. Don’t throw a boatload of “related links” all over the page. And make sure users know when they will be leaving your site (downstream to a third party website). Always think about supplementary content from a user’s perspective and not purely from a monetization perspective. That will usually lead you down the right path.

And much more… Basically, User Happiness = Win

Again, these were just some examples of what sites did to help fix their Phantom 2 problems. Each site is different and a thorough crawl analysis and audit can help surface important problems.

After analyzing many sites impacted by Google’s quality updates in 2015 (including the 11/19 update), I believe Phantom is basically looking out for users. I have yet to find one site negatively impacted by Phantom 2 or the 11/19 update that didn’t have some type of user engagement problem (and many had several problems). Be objective when reviewing your own site. That’s the only way to make the necessary changes that can lead to recovery or partial recovery.

Summary – How To Tackle Google’s Quality Updates

If you were impacted by the 11/19 update, then you should perform a deep crawl analysis and manual audit of your website (and analyze the site through the lens of Phantom). By doing so, you can surface key user engagement problems that could have caused a drop in rankings.

Also, and this is important to note, your site will probably not recover quickly. That’s because Google will need time to process all of the changes you implement (and re-measure user engagement). And of course you first need to audit your entire site (which can take a while depending on the size and complexity of the site at hand). Think about what happened with Phantom 1 in 2013 and Phantom 2 in May 2015. It took sites multiple months to see recovery after performing a ton of remediation work. Unfortunately, it’s not a quick process.

So, it looks like we had four major quality updates in 2015 (2/5, Phantom 2 in May, the September updates, and then 11/19). And I expect more quality updates to roll out in 2016 (plus a revamped Panda, which will possibly be real-time). The good news is that you can start analyzing your site today and then form a plan of attack. With the right changes, sites negatively impacted by the 11/19 update can see recovery during future quality updates. But again, that might take months of hard work. Good luck.

GG

Thanks, Glenn! What a great reminder. I immediately went to my Webmaster Tools and looked through every single aspect of it to make sure that I am making the cut :). A lot of your points are a great plan of action for me to keep in front of me when blogging: content, content, content!

Thanks again!

Thanks Elena. I’m glad my post was helpful. It was amazing to see the connection between 11/19 and previous updates (especially Phantom 2 from May). It really seems like Phantom is focused on user engagement while Panda is focused on content quality. From a user engagement perspective, there are many sites employing the tactics I listed in my post (unfortunately). It’s so important to think about user engagement and potential barriers *before* they get you in trouble. I saw those problems time and time again while analyzing fresh hits.

Impressive analysis of the Phantom and quality updates, Glenn. Question: what about the contextual targeting that advertisers mostly use on sites? That can also be a kind of ad deception. I’m not sure if what I’m suggesting here is correct. What do you think? YMYL and E-A-T are, no doubt, something that ought to be part of end-user satisfaction.

Thanks Amit. I’m glad you found my post valuable! And yes, content recommendation services fall under supplementary content (which I mentioned in my post). There are times users have no idea they are being sent to third party sites, which can be frustrating and deceptive. It really depends on how those modules are labeled, how they are presented, etc.

You also need to be very careful with downstream content (via those services). I mentioned that in previous posts about Panda. For example, driving users to sites containing malware, or pages that redirect to other dangerous sites. Some services vet the content more than others. Site owners need to be careful.

Great article!

My site’s organic traffic dropped slightly on Nov 19. Only some pages were affected, but they were popular ones. They had high quality content, but they were not very relevant to my site as a whole.

The time on page and bounce rate for those pages were worse than my sitewide metrics (whole site: 7:00 time on page and 15% advanced bounce rate; these pages: 2:30 and 50%). So user engagement on those pages was worse than on the rest of my site.

Interestingly, pages with relevant content got slightly more traffic.

What do you think about the non-relevant pages? Did you notice anything similar?

Thanks Attila. I did notice that some sites lost rankings when the content could not meet user expectations. Also, I saw some lost rankings when the content was not extremely relevant to the topics that the site mainly focused on. That seems to fit into the situation you mentioned above. I hope that helps.

Here in Germany it seems as if classifieds portals were hit particularly hard by Phantom 3. This week I’ve seen a bunch of car / job / real estate / wedding announcement sites filled with commercial content (most of them run by local newspapers) losing 1/3 or even more of their Google visibility. Most portal owners probably won’t react quickly because as long as they still earn good money with advertising, everything seems to be o.k. for them, but this is very, very short-sighted. Surprisingly, a very large aggregator site named MeineStadt.de was not affected this time.

Thanks Jacek. I’ll try and check that out today. Regarding advertising revenue, if they lose massive amounts of Google organic traffic, they will notice the drop in impressions. And in my experience with helping companies with Panda and/or Phantom, that might get them to change. Time will tell.

Great thoughts Glenn, thanks again “Phantom Man”!

How do you explain that a lot of sites that had a negative impact with Phantom 2 (may) got a positive impact with Phantom 3? Even some sites that didn’t work a lot to enhance their quality.

Judging from your screenshots, it looks like Phantom 3 reversed the action of Phantom 2.

Thanks Olivier. I’m glad you found my post interesting! It’s hard to say without analyzing the sites. They might have made changes that aren’t readily apparent. Also, since Phantom is algorithmic, there’s always a possibility of swings in rankings (to any site). As some sites go down, others go up (and vice versa). We’ve seen this with Panda updates in the past. I do know sites that recovered or saw partial recovery from Phantom 2 that have completed a lot of work since May. I hope that helps!

As always, you provide the highest quality intel on Phantom. I still owe you a beer, perhaps 2 beers after reading through this!

Thank you! A beer sounds great. Or maybe a glass of Phantom wine instead? :)

I love it!!! HAHAHAHAHAHA

Thank you for this article; it was really interesting to read!

One of our biggest websites was hit on the 19th. Looking back in time, I see:

– May 2: +15%

– September 2: -15%

– September 9: no variation

– November 19: -20%

The funny part is that we made a major decision to remove all intrusive ads on November 14, which cut the bounce rate from 60% to 15% and doubled the time per visit, but I guess it was just too late for the effect to be visible to the algorithm.

I hope there will be a follow up here on the next Phantom update, so I could check if those modifications had a good effect on this website.

We also had several websites move up on the 19th. They were smaller ones with fewer pages, so maybe the effect of the same ad reduction became visible to the algorithm more quickly.

Very interesting. So you saw movement during Phantom 2 in May, during the 9/2 update, and then a drop on 11/19? It sounds like you made some smart changes recently, so hopefully those will help you during a future quality update. Hard to say when that will be, though.

Also, I would perform a thorough analysis of the site through the lens of Phantom. Then I would make sure all user engagement and content quality problems are rectified. Make sure you are ready when another update rolls out. Good luck.

And glad to hear you have other sites that saw positive movement during the 11/19 update! That’s great news. It was a big update for sure.

Yes that’s correct, I’ve seen clear movements in those 3 dates.

The changes were made to improve the overall quality of the website (which, to be honest, was full of ads).

Now that we have reduced the ad problem (well, there is just one left!), we are starting to work on improving the content by updating or removing all pages that target long-tail queries without providing good content (bad bounce rate or time per visit).

Our websites are all video websites. Two of them were hit by the recent Phantom update, while many of the others got a boost in traffic and continue to gain more traffic day after day. The major difference between the two that got hit and the rest of the network is that the average website on the network has around 2,000 pages, while the two that got hit have 15,000 and 53,000 pages.

Panda/Penguin was really bad for our overall income, and removing the ads feels like shooting yourself in the foot. It hurts a lot (like penalizing yourself)! Hopefully, we will see good results from this in the coming months.

I’ve started to read more on your blog (I just found it today) and that will keep me busy for some hours. The content is really good! Thanks for sharing!

Wow, very good piece, I have to say. I have a pretty interesting experience from this latest update (and previous ones). I’ve always published only high-quality content. I have various sites dating all the way back to 2003, so I’ve been able to build my latest ones carefully, using good SEO practices since day 1. Well, I have a site that has never been impacted negatively by any Google update. In fact, it has gone up at just about every major update. That is, until this November update, when I lost around 25% in rankings.

Back in August or September, I implemented ads inside the content. They are not cloaked, blending in, or anything like that. It’s just a 336px ad block in the center, under the first paragraph or two. I did this because I was frustrated by the low AdSense income from the sidebar ads, even with high traffic. I immediately saw an increase in ad revenue of about 100%. I was pretty happy. My ads are in no way as aggressive as some of the stuff I’ve been seeing on the web these days, especially from awful social-driven sites.

Anyway, I took a 25%-ish hit with this update. Now, here comes the dilemma. As you would expect, I suspect the ads had something to do with it. I would like my traffic back eventually. However, I took a 25% traffic hit after a 100% income increase from those ads. Guess what that means? I’m still making more than I was before and in my opinion, I haven’t really sacrificed the usability of said site. It’s just not as clean and as pretty as it was.

So I don’t know. Part of me wants that traffic back, but part of me is happy taking the traffic hit while still having a higher income.

Very interesting situation Danny. Thanks for sharing. Since I haven’t seen the site in action, it’s hard for me to say how much the advertising impacted usability. But, I have seen many situations where ads blended into the primary content have caused problems. It sounds like that’s what you are doing.

Regarding the increase in clicks and ad revenue, I wonder how many of those clicks were by mistake. I’m not saying they definitely were, but when ads get blended into the content, it’s easy for people to mistakenly click those ads. And that’s especially the case when the ads match the content (color and style-wise). I’ve seen that a lot during my Phantom travels.

So it sounds like you aren’t making any changes, right? I would worry that future quality updates could impact the site more (negatively). Let me know how your situation progresses. It would be interesting to understand how the site is impacted during future updates. Thanks again!

Well, I’m in a kind of similar situation.

I have websites that were impacted by the last update, and my ads were very aggressive.

I removed all ads a few days before the update, and of course the money is gone too…

But I do not view this as definitive. You can remove the ads until the next update, and if nothing good happens, you can bring the ads back.

That will be a loss in income for sure, but:

– Your website can go up on the next update, so you can continue to grow

– If you do not act, your website will suffer more and more over time

– The more you wait, the more painful it will be for you to remove the ads…

Do you have data on how much your metrics have been impacted since you put this ad before the content? (time on site, bounce rate, and pages viewed per session)

What kind of traffic did you lose? (home page vs. internal pages)

I agree with you Glenn, and I think another user engagement signal that Google factored in was site speed. I manage a site that made some speed improvements (while keeping everything else relatively the same) in early November, which at first appeared to have no effect on traffic. However, after the 11/19 update its rankings shot up and have been fluctuating similar to what you point out above.

Thanks for sharing Matt. That’s interesting regarding site speed. I haven’t seen that as a factor for Phantom personally (especially since some of the sites I’ve analyzed that were positively impacted aren’t the best examples from a page speed standpoint).

Did you notice any core competitors drop heavily? It could be that you gained as they lost. That’s always the case with algorithm updates (as some lose, others win). If you want to share your domain with me via email, I can take a quick look. Thanks again for commenting!

Really insightful post. We have had a number of our sites penalised by Phantom, and as you say, these are sites that have historically suffered from the likes of Panda and other quality-based algo filters. Their issues include expandable ad units above classified ad content, ads embedded in search results listings, popups for data capture, etc.

Now the real challenge starts, persuading the client to remove these and lose (short term) revenue!

Thank you for this. Many of our most popular pages have been HAMMERED since Nov. 19. I’m at a loss to explain it, as we have none of the issues described above. The only issue I can think of is our site is not yet responsive. The site is a corporate website: Identicard.com. Anyone have any ideas on what’s going on?

Hi Eric. Thanks for your comment and I’m sorry to hear you were negatively impacted by the 11/19 update. It was a big one. It’s really hard to say without performing a deep crawl analysis and audit of the site. Looking at your trending over time, it does look like you’ve seen movement during Panda updates, and other quality updates. I would audit the site through the lens of Panda and Phantom (focusing on user engagement issues and low quality content).

You might want to start by running a Panda report (which can be used for any algorithm update). That might surface problematic pages. I hope that helps! http://www.hmtweb.com/marketing-blog/panda-report-top-landing-pages-google-organic/