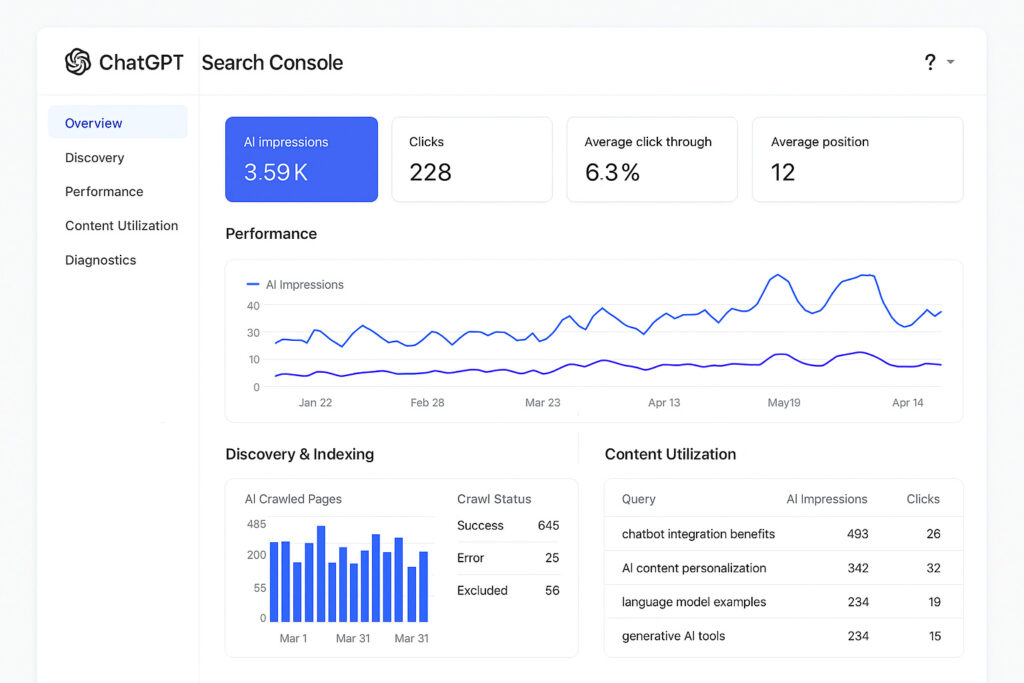

The Case for ASC: ChatGPT, Perplexity, and others need to build AI Search Console reporting ASAP to provide site owners much-needed AI Search data

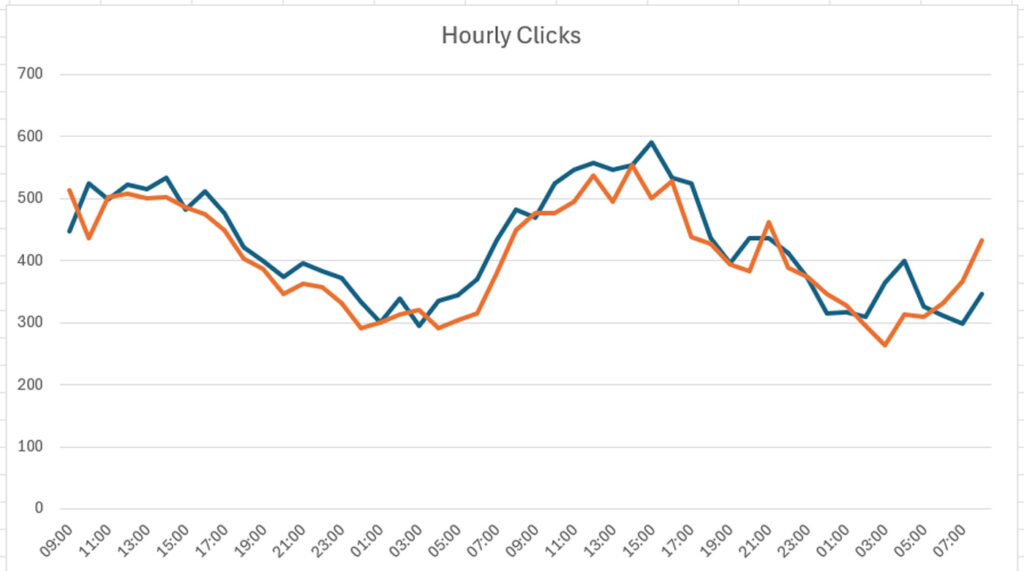

Like I said in my previous post about AI Search traffic, AI Search is here, it’s growing, and it’s important to analyze. It’s still a tiny piece of traffic for most sites (less than 1%), but again, it’s growing. Based on that growth, many site owners are extremely interested in understanding their visibility in AI …