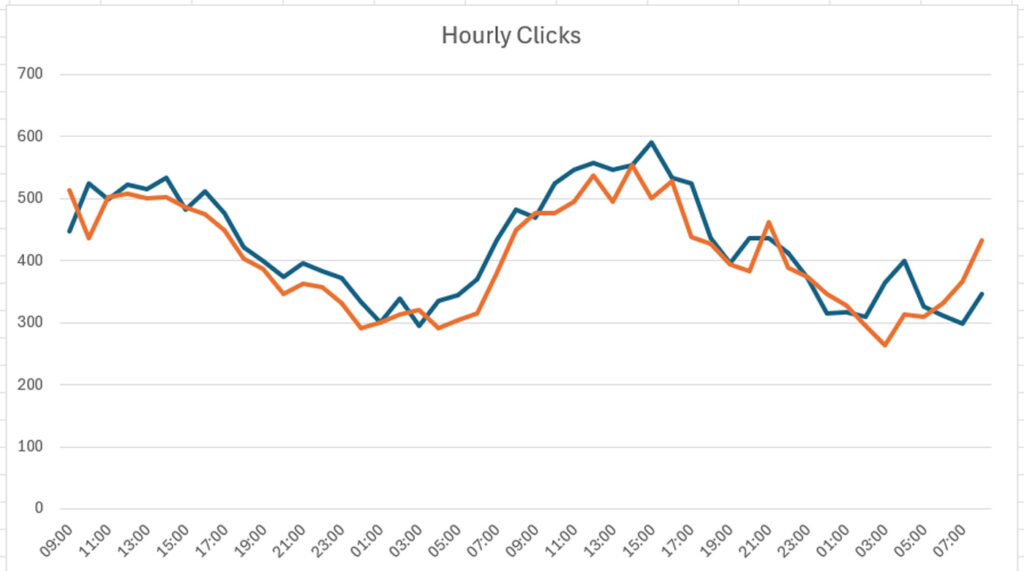

How to measure the impact of AI Overviews on clicks and click-through rate using third-party AIO data, the Google Search Console API, and Analytics Edge

In this tutorial, I’ll show you how to take AI Overview (AIO) data from Semrush, ahrefs, and Sistrix and combine it with Google Search Console (GSC) data exported via the Search Console API. The resulting spreadsheet can help reveal the true impact on clicks and click-through rate when AIOs appear in the SERPs for your queries. At I/O last …
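The tutorial itself does this join in a spreadsheet with Analytics Edge. The core comparison can also be sketched in a few lines of Python. This is not the author's workflow. It is a minimal sketch that assumes you already have query-level rows from the Search Console API, with clicks and impressions per query, plus a set of queries that a third-party tool flagged as showing an AIO. `GscRow`, `normalize`, and `ctr_by_aio_presence` are hypothetical names. The case and whitespace normalization used to match queries across tools is also an assumption.

```python
from dataclasses import dataclass


@dataclass
class GscRow:
    """Hypothetical container for one query row already fetched from the Search Console API."""
    query: str
    clicks: int
    impressions: int


def normalize(query: str) -> str:
    # Assumption: GSC and third-party tools may differ in case and spacing,
    # so both sides are lowercased and whitespace-collapsed before matching.
    return " ".join(query.lower().split())


def ctr_by_aio_presence(rows, aio_queries):
    """Split GSC rows by whether the query was flagged as showing an AI Overview,
    then return total clicks, total impressions, and aggregate CTR per group."""
    flagged = {normalize(q) for q in aio_queries}
    totals = {
        True: {"clicks": 0, "impressions": 0},
        False: {"clicks": 0, "impressions": 0},
    }
    for row in rows:
        group = totals[normalize(row.query) in flagged]
        group["clicks"] += row.clicks
        group["impressions"] += row.impressions
    for group in totals.values():
        # Aggregate CTR = total clicks / total impressions, guarding against zero.
        imps = group["impressions"]
        group["ctr"] = group["clicks"] / imps if imps else 0.0
    return {"with_aio": totals[True], "without_aio": totals[False]}


if __name__ == "__main__":
    # Hypothetical illustrative rows, not real data.
    rows = [
        GscRow("how to bake bread", 40, 2000),
        GscRow("Best Running Shoes", 150, 3000),
        GscRow("what is sourdough", 10, 1000),
    ]
    aio = {"how to bake bread", "what is sourdough"}
    print(ctr_by_aio_presence(rows, aio))
```

The sketch computes CTR from summed clicks and impressions instead of averaging per-query CTR. An average of per-query CTRs would give a query with 10 impressions the same weight as one with 10,000.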