On May 20th, 2014, Google’s Matt Cutts announced that Panda 4.0 was rolling out. Leading up to that tweet, there was a lot of chatter across the industry about an algorithm update rolling out (based on reports of rankings volatility and traffic gains/losses). I was also seeing lots of movement across clients that had been impacted by previous algorithm updates, and new companies were contacting me about massive changes in rankings and traffic. I knew something serious was happening, but didn’t know exactly what it was. For a while I thought it could be the pre-rollout and testing of Penguin, but it turned out to be a new Panda update instead.

When Panda 4.0 was officially announced, I had already been analyzing sites that were seeing an impact (starting on Saturday, May 17th, 2014). I was noticing major swings in rankings and traffic with companies I’ve been helping with previous algo trouble. And as I said above, several companies started reaching out to me via email about new hits starting that weekend.

And I was glad to hear a confirmation from Matt Cutts about Panda 4.0 rolling out. That enabled me to hone my analysis. I’ve mentioned in the past how unconfirmed Panda updates can drive webmasters insane. When you have confirmation, it’s important to analyze the impact through the lens of a specific algorithm update (when possible). In other words, content quality for Panda, unnatural links for Penguin, ad ratio and placement for Top Heavy, etc.

And by the way, since Google named this update Panda 4.0, we must assume it’s a new algorithm. That means new factors could have been added or other factors refined. Needless to say, I was eager to dig into sites that had been impacted to see if I could glean any insights about our new bamboo-eating friend.

Digging into the Panda 4.0 Data (and the Power of Human Barometers)

I’ve written before about the power of having access to a lot of Panda data, for example from working with many sites that were previously impacted by Panda. It’s often easier to spot unconfirmed Panda updates when you can analyze many sites the algorithm has hit before. I’ve helped a lot of companies with Panda hits since February 2011, when Panda first rolled out. Therefore, I can often see Panda fluctuations, even when those updates aren’t confirmed. That’s because I can analyze the Panda data set I have access to, in addition to data from new companies that reach out to me after getting hit by those Panda updates. The fresh hits enable me to line up dates with Panda recoveries to better understand when Google rolls out unconfirmed updates. I’ve documented several of the unconfirmed updates here on my blog (in case you want to go back and check the dates against your own data).
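The date-matching idea above can be sketched in a few lines of Python. This is a hypothetical illustration, not the exact process described in this post: it assumes you have complete daily Google Organic visit counts per site, compares average daily visits in a window before a candidate update date with a same-length window starting on that date, and lists the sites that moved beyond a threshold. The function names, the 7-day window, and the 20% threshold are all assumptions chosen for illustration.

```python
from datetime import date, timedelta
from statistics import mean


def percent_change(before: float, after: float) -> float:
    """Percent change from `before` to `after` (100 -> 230 is +130%)."""
    if before == 0:
        raise ValueError("baseline must be non-zero")
    return (after - before) / before * 100


def window_change(daily: dict[date, int], pivot: date, days: int = 7) -> float:
    """Compare mean visits for the `days` before `pivot` with the `days` starting at `pivot`.

    Assumes a complete daily series; a missing day raises KeyError.
    """
    before = [daily[pivot - timedelta(days=d)] for d in range(1, days + 1)]
    after = [daily[pivot + timedelta(days=d)] for d in range(days)]
    return percent_change(mean(before), mean(after))


def sites_moved(sites: dict[str, dict[date, int]], pivot: date,
                threshold: float = 20.0, days: int = 7) -> dict[str, float]:
    """Return {site: % change} for sites that moved at least `threshold` percent around `pivot`."""
    moved = {}
    for name, daily in sites.items():
        change = window_change(daily, pivot, days)
        if abs(change) >= threshold:
            moved[name] = round(change, 1)
    return moved


# Illustrative usage with made-up numbers (not data from this post).
if __name__ == "__main__":
    pivot = date(2014, 5, 17)
    flat_then_up = {pivot + timedelta(days=d): (100 if d < 0 else 230) for d in range(-14, 14)}
    flat = {pivot + timedelta(days=d): 500 for d in range(-14, 14)}
    print(sites_moved({"site-a": flat_then_up, "site-b": flat}, pivot))
```

If many sites you already track jump or drop around the same date, that date becomes a candidate for an unconfirmed update. A fixed window keeps the comparison simple, but weekday patterns or holidays can still skew it, so treat the output as a lead rather than proof.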

So, when Google announced Panda 4.0, I was able to quickly start checking all the clients I have helped with Panda recovery (in addition to the ones I was already seeing jump in the rankings). And it didn’t take long to see the impact. A number of sites were clearly being positively impacted by P4.0.

Then, I analyzed new sites that were negatively impacted, based on those companies reaching out to me after getting hit (starting on 5/17/14). Combined, that gave me a boatload of Panda 4.0 data to analyze. And it’s been fascinating.

I have now analyzed 27 websites impacted by Panda 4.0. The sites I analyzed ranged from large sites receiving a lot of Google Organic traffic (1M+ visits per month) to medium-sized ecommerce retailers and publishers (receiving tens of thousands of visits per month) to niche blogs focused on very specific topics (seeing 5K to 10K visits per month). It was awesome to be able to see how Panda 4.0 affected sites across industries, categories, volume of traffic, etc. And as usual, I was able to travel from one Panda 4.0 rabbit hole to another as I uncovered more sites impacted per category.

What This Post Covers – Key Findings Based on Heavily Analyzing Websites That Were Impacted by Panda 4.0

I could write ten different posts about Panda 4.0 based on my analysis over the past few days, but that’s not the point of this initial post. Instead, I want to provide some core findings based on helping companies with previous Panda or Phantom hits that recovered during Panda 4.0. Yes, I said Phantom recoveries. More on that soon.

In addition, I want to provide findings based on analyzing sites that were negatively impacted by Panda 4.0. The findings in this post strike a nice balance between recovery and negative impact. As many of you know, there’s a lot you can learn about the signature of an algorithm update from fresh hits.

Before I provide my findings, I want to emphasize that this is simply my first post about Panda 4.0. I plan to write several additional posts focused on specific findings and scenarios. There were several websites that were fascinating to analyze and deserve their own dedicated posts. If you are interested in learning about those cases, then definitely subscribe to my feed (and make sure you check my Search Engine Watch column). There’s a lot to cover for sure. But for now, let’s jump into some Panda 4.0 findings.

Panda 4.0 Key Findings

The Nuclear Option – The Power of Making Hard Decisions and Executing

When new companies contact me about Panda, they often want to know their chances of recovery. My answer sometimes shocks them. I explain that once the initial audit has been completed, there will be hard decisions to make. I’m talking about really hard decisions that can impact a business.

Beyond the hard decisions, they will need to thoroughly execute those changes at a rapid pace (which is critically important). I explain that if they listen to me, make those hard decisions, and execute fully, then there is an excellent chance of recovery. But not all companies make hard decisions and execute thoroughly. Unfortunately, those companies often sit in the grey area of Panda, never knowing how close they are to recovery.

Well, Panda 4.0 reinforced my philosophy (although there were some anomalies which I’ll cover later). During P4.0, I had several clients recover that implemented HUGE changes over a multi-month period. And when I say huge changes, I’m talking significant amounts of work. One of my Panda audits yielded close to 20 pages of recommendations in Word. When something like that is presented, I can tell how deflated some clients feel. I get it, but it’s at that critical juncture that you can tell which clients will win. They either take those recommendations and run, or they don’t.

To give you a feel for what I’m talking about, I’ve provided some of the challenges that those clients had to overcome below:

- Nuking low-quality content.

- Greatly improving technical SEO.

- Gutting over-optimization.

- Removing doorway pages.

- Addressing serious canonicalization problems.

- Writing great content. Read that again. :)

- Revamping internal linking structure and navigation.

- Hunting down duplicate content and properly handling it.

- Hunting down thin content and noindexing or nuking it.

- Removing manual actions (yep, I’ve included this here).

- Ending content scraping and removing the content that had been scraped.

- Creating mobile-friendly pages or going responsive.

- Dealing with risky affiliate marketing setups.

- Greatly increasing page speed (and handling bloated pages, file size-wise).

- Hunting down rogue risky pages and subdomains and properly dealing with that content.

- And in extreme cases, completely redesigning the site. And several of my clients did just that. That’s the nuclear option by the way. More about that soon.

- And even more changes.

Now, when I recommend a boatload of changes, there are various levels of client execution. Some clients implement 75% of the changes, while some can only implement 25%. As you can guess, the ones that execute more have a greater chance at a quicker recovery.

But then there are those rare cases where clients implement 100% of the changes I recommend. And that’s freaking awesome from my standpoint. But with massive effort comes massive expectations. If you are going to make big changes, you want big results. And unfortunately, that can take time.

Important Note: This is an incredibly important point for anyone dealing with a massive Panda or Penguin problem. If you’ve been spamming Google for a long time (years) by providing low-quality, over-optimized content, using doorway pages to gain Google traffic, etc., then you might have to wait a while after changes have been implemented. John Mueller is on record saying you can expect to wait 6 months or longer to see recovery. I don’t think his estimate is far off. Sure, I’ve seen some quicker recoveries, but in extreme spamming cases, recovery can take time.

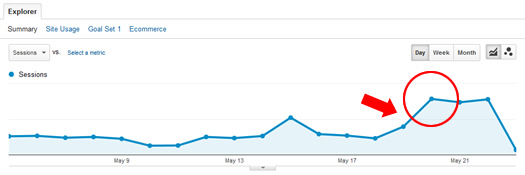

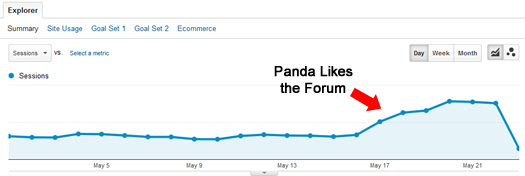

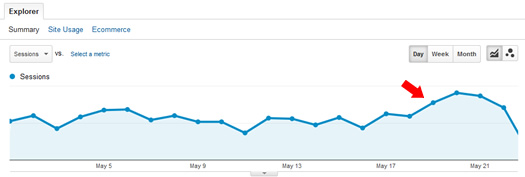

Fast forward to Panda 4.0. It was AWESOME to see clients that made massive changes see substantial recovery during P4.0. And several of those clients chose the nuclear option of completely redesigning their websites. One client is up 130% since 5/17, while another that chose the nuclear option is up 86%. Here’s a quick screenshot of the bump starting on 5/17:

Side Note: The Nuclear Option is a Smart One When Needed

For some of the companies I was helping, there were so many items to fix that a complete redesign was a smart option. And no, that doesn’t come cheap. There’s time, effort, resources, and budget involved versus just making changes to specific areas. It’s a big deal, but can pay huge dividends down the line.

One client made almost all of the changes I recommended, including going responsive. The site is so much better usability-wise, content-wise, and mobile-wise. And with Panda 4.0, they are up 110% since 5/18 (when they first started seeing improvement).

I’ve mentioned before that for Panda recovery, SEO band-aids won’t work. Well, the clients that fully redesigned their sites and are seeing big improvements underscore the point that the nuclear option may be your best solution (if you have massive changes to make). Keep that in mind if you are dealing with a massive Panda problem.

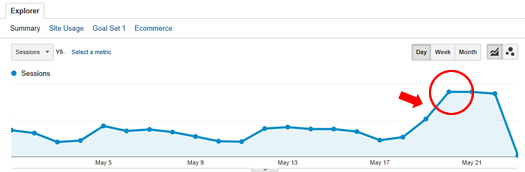

Phantom Victims Recover

On May 8th, 2013, I picked up a significant algorithm update. After analyzing a number of websites hit by the update, I decided to call it “Phantom”. It simply had a mysterious, yet powerful signature, so Phantom made sense to me. Hey, it stuck. :)

Phantom was a tough algorithm update. Some companies lost 60% of their traffic overnight. And after auditing a number of sites hit by Phantom, I often had recommendations that were tough for business owners to hear. Phantom targeted low-quality content, similar to Panda, but I also often found scraped content, over-optimized content, doorway pages, cross-linking of company-owned domains, etc. I’ve helped a number of Phantom victims recover, but there were still many out there that never saw a big recovery.

The interesting part about Panda 4.0 was that I saw six Phantom victims recover (out of the 27 sites I analyzed with previous content quality problems). It’s hard to say exactly what P4.0 took into account that led to those Phantom recoveries, but those victims clearly had a good day. It’s worth noting that 5 out of the 6 sites impacted by Phantom actively made changes to rectify their content problems.

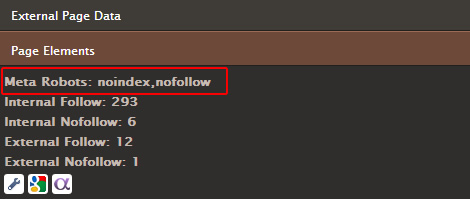

One of the sites did nothing to fix its problems and ended up recovering anyway. This could be due to the softening of Panda, which is definitely possible. I did analyze some sites that showed increases after Panda 4.0 without tackling many of the problems they were facing. In this situation, though, the site was a forum, which I cover next. Note: you can read my post about the softening of Panda and what I saw during the March 24, 2014 Panda update to learn more about the situation.

Forums Rebound During Panda 4.0

My next finding was interesting, since I’ve helped a number of forums deal with previous Panda and/or Phantom hits. I came across four different forums that recovered during Panda 4.0. Three were relatively large forums, while one was a smaller niche forum run by a category expert.

One of the larger forums (1M+ visits per month) made a boatload of changes to address thin content, spammy user-generated content, etc. They were able to gut low-quality pages, noindex thinner ones, and hunt down user-generated spam. They greatly increased the quality of the forum overall (from an SEO perspective). And they are up 24% since Panda 4.0 rolled out.

A second forum (1.5M visits per month) tackled some of the problems I picked up during an audit, but wasn’t able to tackle a number of items (due to a lack of resources). And it’s important to know that they are a leader in their niche and have some outstanding content and advice. During my audit, I found they had some serious technical issues causing duplicate and thin content, but I’m not sure they ever deserved to get hammered like they did. But after Panda 4.0, they are up 54%.

And the expert-run forum that experienced both Panda and Phantom hits rebounded nicely after Panda 4.0. The site has some outstanding content, advice, conversations, etc. Again, it’s run by an expert that knows her stuff. Sure, some of the content is shorter in nature, but it’s a forum that will naturally have some quick answers. It’s important to note that the website owner did nothing to address the previous Panda and Phantom problems. And that site experienced a huge uptick based on Panda 4.0. Again, that could be due to the softening of Panda or a fix to Panda that cut down on collateral damage. It’s hard to say for sure. Anyway, the site is up 119% since May 17th.

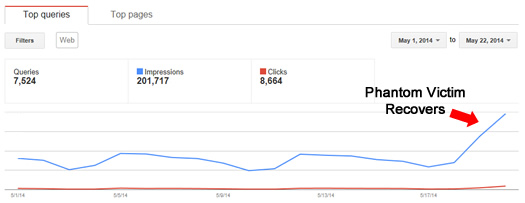

Industry Experts Rise

During my research, I saw several examples of individual bloggers who focus heavily on niche areas see nice bumps in Google Organic traffic after Panda 4.0 rolled out. Now, Matt Cutts had explained that Google was looking to boost the rankings of experts in their respective industries (an “authority” algorithm). I have no idea if what I was seeing during my research was that “expert lift”, but it sure looked like it.

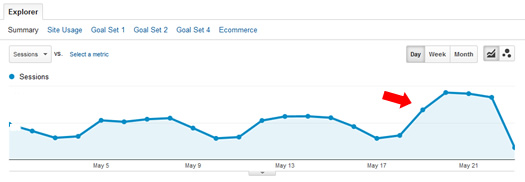

Here’s an example of a marketing professional that saw a 38% lift after Panda 4.0:

And here’s a sports medicine expert that has shown a 46% lift:

It was great to see these bloggers rise in the rankings, since their content is outstanding and they deserved to rank higher! They just didn’t have the power that some of the other blogs and sites in their industries had. But it seems Google surfaced them during Panda 4.0. I need to analyze more sites like this to better understand what’s going on, but it’s worth noting.

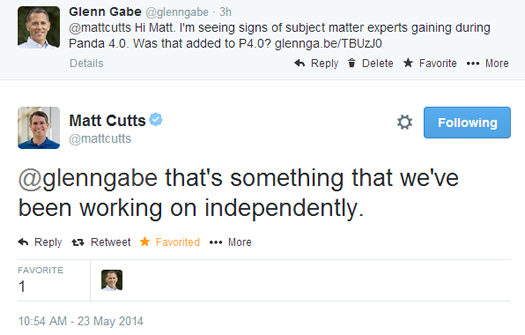

Update: I reached out to Matt Cutts via Twitter to see if Panda 4.0 incorporated the “authority” algo update I mentioned earlier. Matt replied this afternoon and explained that they are working on that independently. So, it doesn’t seem like the bloggers I analyzed benefited from the “authority” algo, but instead, benefited from overall quality signals. It was great to get a response from Matt. See screenshot below.

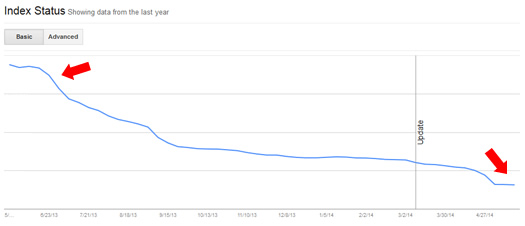

An Indexation Reality Check – It’s Not The Quantity, But the Quality That Matters

After conducting a laser-focused Panda audit, it’s not uncommon for me to recommend nuking or noindexing a substantial amount of content. That is usually an uncomfortable decision for clients to make. It’s hard to nuke content that you created, that ranked well at one point, etc. But nuking low-quality content is a strong way to proceed when you have a Panda problem.

So, it was awesome to see clients that removed large amounts of content recover during Panda 4.0. As an extreme example, one client removed 83% of their content from Google’s index. Yes, you read that correctly. And guess what, they are getting more traffic from Google than when they had all of that low-quality and risky content indexed. It’s a great example of quality versus quantity when it comes to Panda.

On the other hand, I analyzed a fresh Panda 4.0 hit, where the site has 40M+ pages indexed. And you guessed it, it has serious content quality problems. They got hammered by Panda 4.0, losing about 40% of their Google organic traffic overnight.

If you have been impacted by Panda, and you have a lot of risky content indexed by Google, then have a content audit completed now. I’m not kidding. Hunt down thin pages, duplicate pages, low-quality pages, etc. and nuke them or noindex them. Make sure Google has the right content indexed.
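A content audit like this usually starts with a crawl. As a rough, hypothetical sketch (not the audit process used in this post), the Python below assumes you already have each page’s main body text keyed by URL, flags pages under an assumed 250-word threshold as thin, and flags exact duplicates by hashing whitespace-normalized, lowercased text. Word count is only a proxy for thin content, and the threshold is an illustrative assumption.

```python
import hashlib
import re


def normalize(text: str) -> str:
    """Lowercase and collapse whitespace so trivial formatting differences don't hide duplicates."""
    return re.sub(r"\s+", " ", text).strip().lower()


def audit_pages(pages: dict[str, str], min_words: int = 250) -> dict[str, list[str]]:
    """Flag thin pages (fewer than `min_words` words) and exact duplicates of an earlier page.

    `pages` maps URL -> main body text; only URLs with at least one issue are returned.
    """
    flags: dict[str, list[str]] = {}
    first_seen: dict[str, str] = {}  # content hash -> first URL seen with that content
    for url, body in pages.items():
        text = normalize(body)
        issues = []
        if len(text.split()) < min_words:
            issues.append("thin")
        digest = hashlib.sha256(text.encode("utf-8")).hexdigest()
        if digest in first_seen:
            issues.append(f"duplicate of {first_seen[digest]}")
        else:
            first_seen[digest] = url
        if issues:
            flags[url] = issues
    return flags


# Illustrative usage with made-up pages.
if __name__ == "__main__":
    body = "word " * 300
    print(audit_pages({"/a": body, "/b": "Short page.", "/c": body.upper()}))
```

Exact hashing only catches identical copies; near-duplicates (boilerplate-heavy category pages, lightly rewritten articles) need a fuzzier comparison. Whether a flagged page gets improved, noindexed, or removed is still a judgment call.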

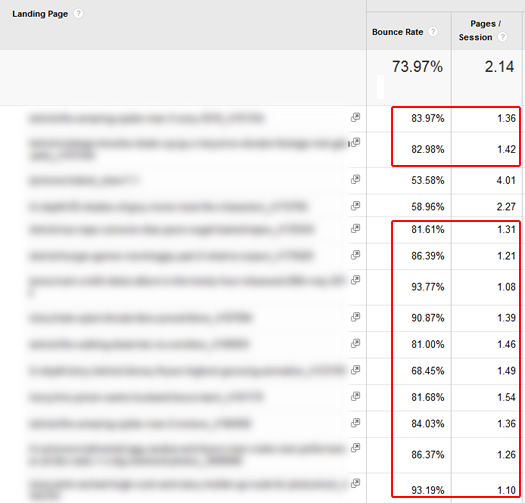

Engagement and Usability Matter

While analyzing the fresh hits, it was hard to overlook the serious engagement issues I was coming across. For example, there was stimulus overload on the pages that had been receiving a lot of Google organic traffic prior to the hit: ads that expanded into or over the content, double-serving of video ads, stacked “recommended articles” on the page, a lack of white space, a long and confusing navigation, etc. All of this made me want to bounce off the page faster than a superball on concrete. And again, high bounce rates and low dwell times can get you killed by Panda. Avoid that like the plague.

Check out the bounce rates and pages per session for a site crushed by Panda 4.0:

Side Note: To hunt down low-quality content, you can run this Panda report in Google Analytics. My post walks you through exporting data from GA and then using Excel to isolate problematic landing pages from Google Organic.

Downstream Matters

While analyzing fresh Panda 4.0 hits, it was also hard to overlook links and ads that drove me to strange and risky sites that were auto-downloading software, files, etc. You know, those sites where it feels like your browser is being taken over by hackers. This can lead to users clicking the back button twice and returning to Google’s search results. And if they do, that can send bad signals to Google about your site and content. In addition, risky downstream activity can lead to some people reporting your site to Google or to other organizations like Web of Trust (WOT).

And as I’ve said several times in this post, Panda is tied to engagement. Engagement is tied to users. Don’t anger users. It will come back to bite you (literally).

Summary – Panda 4.0 Brings Hope

As I said earlier, it was fascinating to analyze the impact of Panda 4.0. And again, this is just my first post on the subject. I plan to write several more about specific situations I’ve analyzed. Based on what I’ve seen so far, it seems Panda 4.0 definitely rewarded sites that took the time to make the necessary changes to improve content quality, engagement, usability, etc. And that’s awesome to see.

But on the flip side, there were sites that got hammered by P4.0. All I can say to them is pull yourself up by your bootstraps and get to work. It takes time, but Panda recovery is definitely possible. You just need to make hard decisions and then execute. :)

GG