{Updated on 3/28/16 to reflect the name change from Google Webmaster Tools to Google Search Console.}

In late July, Google added Index Status to Google Search Console to help site owners better understand how many pages are indexed on their websites. Index Status can also help webmasters diagnose indexation problems, which can be caused by redirects, canonicalization issues, duplicate content, or security problems. Until now, many webmasters relied on less-than-optimal methods for determining true indexation, such as running site: commands against a domain, subdomain, or subdirectory. This was a maddening exercise for many SEOs, since the number shown could change radically (and quickly).

So Index Status was a welcome addition to Search Console. That said, I’m getting a lot of questions about what the reports mean, how to analyze the data, and how to diagnose potential indexation problems, so that’s exactly what I’m going to address in this post. I’ll introduce the reports and then explain how to use that data to better understand your site’s indexation. Note that Index Status doesn’t necessarily answer questions. Instead, it might raise red flags and prompt more questions. Unfortunately, it won’t tell you where the indexation problems reside on your site. That’s up to you and your team to figure out.

Index Status

The Index Status reports are under the “Google Index” tab in Google Search Console. The default report (or “Basic” report) will show you a trending graph of total pages indexed for the past year. This report alone can signal potential problems. For most sites, you’ll see normal trending over time. If you are continually building content, the number should keep increasing. If you are weeding out content, the number might decrease. But overall, most sites shouldn’t see any crazy spikes.

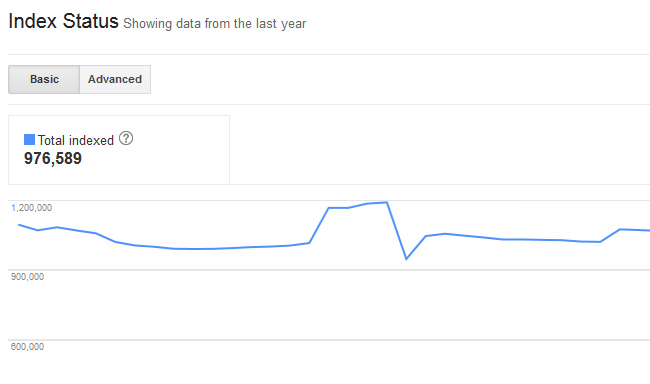

For example, this is a relatively normal indexation graph. The site might have weeded out some older content and then started building new content (which is why you see a slight decrease first, followed by an increase down the line):

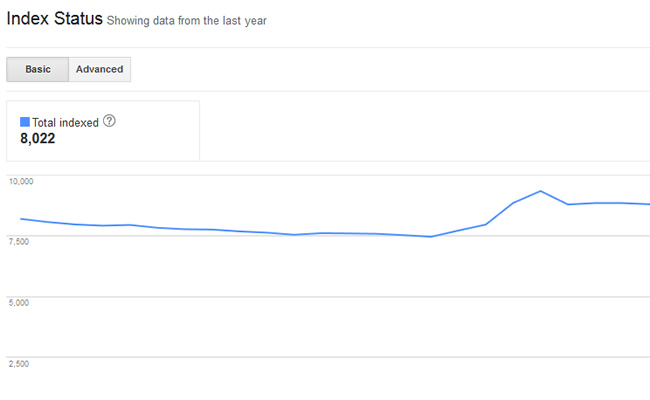

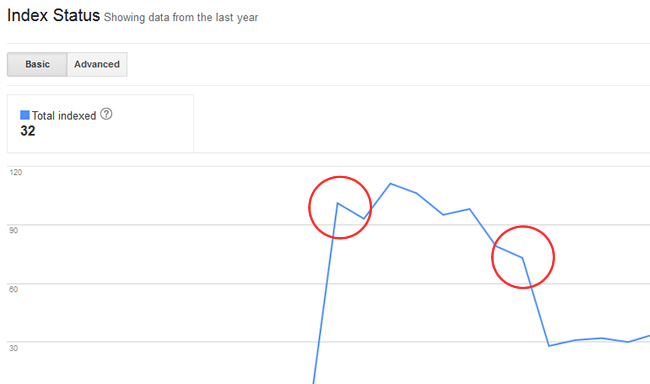

But what about a trending graph that shows spikes and valleys? If you see something like the graph below, it could very well mean you are experiencing indexation issues. Notice how the indexation graph spikes, then drops a few weeks later. There may be legitimate reasons for this, based on changes you made to your site. But if you have no idea why your indexation is spiking, you’ll need further site analysis to understand what’s going on. Once again, this is why SEO audits are so powerful.
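If you note down the Total Indexed figure from the Basic report each week, a few lines of code can flag the kind of spikes and valleys described above. This is a minimal sketch, assuming a hypothetical list of weekly readings and an arbitrary 25% week-over-week threshold; the function name `flag_swings` and the numbers are illustrative, not something Search Console provides.

```python
# Hypothetical weekly "Total indexed" readings noted down from the Basic report.
WEEKLY_INDEXED = [
    ("wk1", 10_400),
    ("wk2", 10_550),
    ("wk3", 18_900),
    ("wk4", 19_100),
    ("wk5", 10_700),
]


def flag_swings(readings, threshold=0.25):
    """Return (label, previous, current, pct_change) for every week whose
    indexed count moved by at least `threshold` (0.25 = 25%) versus the prior week.
    The 25% default is an arbitrary assumption, not a Google guideline."""
    flagged = []
    for (_, prev), (label, curr) in zip(readings, readings[1:]):
        if prev == 0:
            continue  # skip: percentage change is undefined when the prior count is zero
        change = (curr - prev) / prev
        if abs(change) >= threshold:
            flagged.append((label, prev, curr, round(change * 100, 1)))
    return flagged


if __name__ == "__main__":
    for label, prev, curr, pct in flag_swings(WEEKLY_INDEXED):
        print(f"{label}: {prev} -> {curr} ({pct:+}%)")
```

With this sample data, the sketch flags wk3 (the spike) and wk5 (the drop). Tune the threshold to your site: a small site can swing by a large percentage when only a handful of pages change.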

Advanced Report

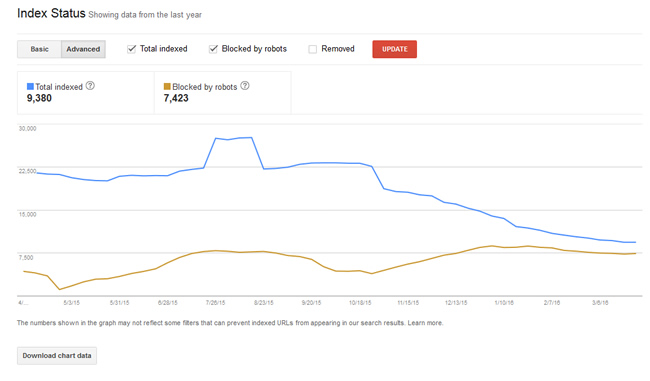

Now it’s time to dig into the advanced report, which definitely provides more data. When you click the “Advanced” tab, you’ll see two trending lines in the graph. The data includes:

- Total Indexed

- Blocked by Robots

“Total indexed” is the same data we saw in the basic report. “Blocked by robots” is just that: pages that you are choosing to block via robots.txt. Note that these are pages you are hopefully choosing to block (more about that below). You can also click “Removed” to see the number of URLs that have been removed as a result of a URL removal request. You’ll need to click “Update” to see the new trending line for removed URLs.

What You Can Learn From Index Status

When you analyze the advanced report, you might notice some strange trending right off the bat. For example, if the number of pages blocked by robots.txt spikes, then someone has likely added new directives. One of my clients had that number jump from 0 to 20,000+ URLs in a short period of time. Again, if you want this to happen, then that’s totally fine. But if it surprises you, then you should dig deeper.

Depending on how you structure a robots.txt file, you can easily block important URLs from being crawled and indexed. It would be smart to analyze your robots.txt directives to make sure they are accurate. Speak with your developers to better understand the changes that were made, and why. You never know what you are going to find.
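One quick way to sanity-check robots.txt changes is to test a list of URLs you know should stay crawlable. The sketch below uses Python’s standard `urllib.robotparser` module; the robots.txt contents, the URLs, and the helper name `blocked_urls` are hypothetical. Note that this parser applies the first matching rule in file order and does not implement Google’s wildcard (`*`, `$`) or longest-match handling, so treat the result as a rough check rather than a replica of how Googlebot reads the file.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt after a recent deploy.
ROBOTS_TXT = """\
User-agent: *
Disallow: /search
Disallow: /products/
Allow: /
"""

# Hypothetical URLs that should remain crawlable.
IMPORTANT_URLS = [
    "https://www.example.com/",
    "https://www.example.com/products/blue-widget",
    "https://www.example.com/blog/index-status",
]


def blocked_urls(robots_txt, urls, user_agent="Googlebot"):
    """Return the URLs in `urls` that `robots_txt` disallows for `user_agent`.

    Caveat: urllib.robotparser applies the first matching rule in file order
    and ignores Google's wildcard and longest-match rules, so this is a rough check.
    """
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return [url for url in urls if not parser.can_fetch(user_agent, url)]


if __name__ == "__main__":
    for url in blocked_urls(ROBOTS_TXT, IMPORTANT_URLS):
        print("Blocked:", url)
```

With this sample file, only the product page is reported as blocked, because `Disallow: /products/` matches it before `Allow: /` is reached. That is exactly the kind of surprise worth raising with your developers.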

Security Breach

Index Status can also flag potential hacking scenarios. If you notice the number of pages indexed spike or drop significantly, then it could mean that someone (or some bot) is adding or deleting pages from your site. For example, someone might be adding pages to your site that link out to a number of other websites delivering malware. Or maybe they are inserting rich anchor text links to other risky sites from newly created pages on your site. You get the picture.

Again, these reports don’t answer your questions; they prompt you to ask more questions. Take the data and speak with your developers. Find out what has changed on the site, and why. If you are still baffled, then have an SEO audit completed. As you can guess, these reports would be much more useful if the problematic URLs were listed. That would provide actionable data right within the Index Status reports in Google Search Console (GSC). My hope is that Google adds that data someday.
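Because Index Status won’t list the URLs involved, one workaround is to keep periodic snapshots of your own URL list (for example, from a crawl of the site or your XML sitemap) and compare them when the numbers jump. This sketch assumes two such snapshots as plain lists; the function name `diff_url_snapshots` and the URLs are hypothetical.

```python
# Hypothetical URL snapshots, e.g. exported from two crawls a few weeks apart.
BEFORE = ["/", "/about", "/blog/index-status", "/products/blue-widget"]
AFTER = ["/", "/about", "/blog/index-status", "/free-offer-1", "/free-offer-2"]


def diff_url_snapshots(before, after):
    """Return (added, removed): URLs only in `after`, and URLs only in `before`."""
    before_set, after_set = set(before), set(after)
    return sorted(after_set - before_set), sorted(before_set - after_set)


if __name__ == "__main__":
    added, removed = diff_url_snapshots(BEFORE, AFTER)
    print("New URLs:", added)
    print("Missing URLs:", removed)
```

New pages you don’t recognize are the first ones to inspect for injected links; unexpected removals deserve a look too.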

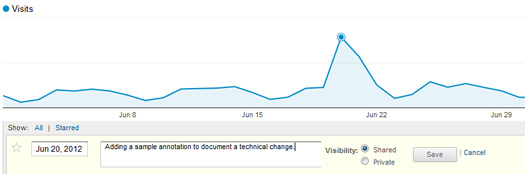

Bonus Tip: Use Annotations to Document Site Changes

For many websites, change is constant. If you roll out changes to your site on a regular basis, then you need a good way to document them. One way of doing this is by using annotations in Google Analytics. With annotations, you can add notes for a specific date that are shared across users of the GA view. I use them often when SEO-related changes are made, since they make it easier to identify why certain changes in your reporting are happening. So if you see strange trending in Index Status, double-check your annotations. The answer may be sitting right in Google Analytics. :)
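If you also keep the same notes in a simple change log, checking them against an Index Status change can be as simple as filtering by date. This is a minimal sketch, assuming a hypothetical list of (date, note) pairs and an arbitrary 14-day look-back window; it does not read anything from Google Analytics itself, and `annotations_before` is an illustrative name.

```python
from datetime import date, timedelta

# Hypothetical change log that mirrors the notes kept as GA annotations.
ANNOTATIONS = [
    (date(2016, 3, 1), "Migrated blog to /articles/"),
    (date(2016, 3, 10), "Added Disallow: /search to robots.txt"),
    (date(2016, 2, 1), "Launched new product pages"),
]


def annotations_before(annotations, change_date, window_days=14):
    """Return notes dated within `window_days` before (and including) `change_date`,
    oldest first. The 14-day default is an arbitrary assumption."""
    start = change_date - timedelta(days=window_days)
    return [note for day, note in sorted(annotations) if start <= day <= change_date]


if __name__ == "__main__":
    # Hypothetical date on which Index Status showed an unexpected jump.
    for note in annotations_before(ANNOTATIONS, date(2016, 3, 14)):
        print(note)
```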

Summary – Analyzing Your Index Status

I think the moral of the story here is that normal trending can indicate strong SEO health. You typically want to see gradual increases in indexation over time. That said, not every site will show that natural increase (as documented above in the screenshots). There may be spikes and valleys as technical changes are made to a website. So it’s important to analyze the data to better understand the number of pages that are indexed, how many are being blocked by robots.txt, and how many have been removed. What you find might be completely expected, which is good. But you might also uncover a serious issue that’s inhibiting important pages from being crawled and indexed, and that can be a killer SEO-wise.

GG