As AI Search platforms like ChatGPT, Perplexity, and Claude continue to grow, I’m seeing more and more recommendations for improving visibility through tactics that seem risky as heck. And not just risky for AI platforms, but for Search as well (Google, Bing, etc.). On that note, I wrote a post a few months ago explaining how ignoring Google and implementing tactics just for AI Search could lead to big problems in search visibility (as those tactics could yield low-quality content or even spam over time).

Although that post was focused more on the Google impact, it’s important to understand that short-term or spammy tactics to game AI Search could also yield big problems down the line with those AI platforms. The AI Search platforms are still in their infancy and will become more sophisticated over time.

First, AI platforms are signaling a move to their own search indexes, which would require building strong core systems for grounding their responses. That would obviously impact those responses, including the citations and sources presented to the user. In addition, AI platforms will need to develop strong anti-spam systems to combat the constant gaming of visibility across their platforms. That’s no small feat, and I’ll cover more about that soon.

So, if companies are implementing risky or spammy tactics to game AI Search visibility, then those sites could be impacted negatively as the various AI Search platforms continue to develop their own core systems and anti-spam systems. So what works today might not work forever (or even tomorrow). As many of us who have tracked spam in Google’s search results for a long time like to say, “It works until it doesn’t…”

The importance of AI Search platforms building their own indexes:

Like I said earlier, AI Search platforms like ChatGPT and Perplexity are signaling (some more than others) a move to their own search indexes. Let’s face it, those platforms will not want to rely on third parties for a search index, if possible. That would put them in an awkward and risky position… The third party that owns the search index has a ton of leverage over the AI platform using that index. In my opinion, the AI platforms will need to develop their own indexes and then core systems (more about core systems soon).

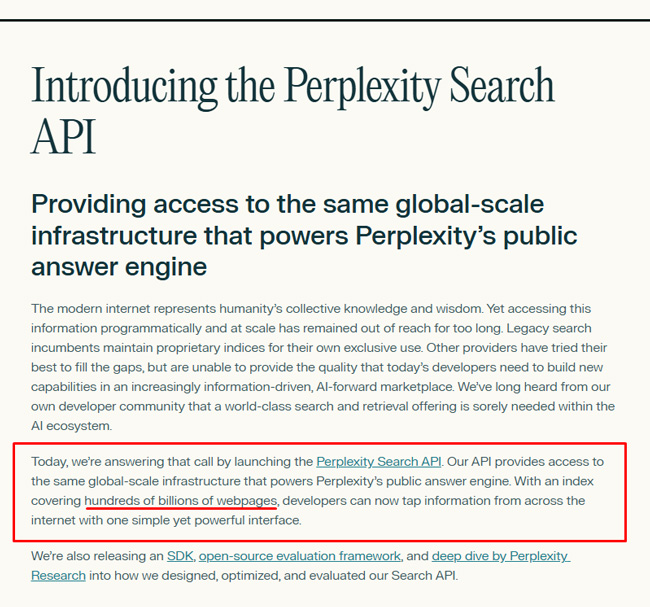

As a good example, when Perplexity recently announced its Search API, they explained that it now has hundreds of billions of documents in its own search index. Well, that escalated quickly…

So instead of relying on well-established indexes and ranking systems from Bing and Google, the AI Search platforms are indexing the web at a frantic pace and will be creating their own core systems. That’s much easier said than done, but it’s what I believe will happen. And remember, ChatGPT and Perplexity were caught scraping Google’s search results recently, which led to Google shutting down the num=100 parameter (making it much harder for companies to effectively scrape Google’s results).

AI platforms do not want to rely on third-party data like that. It’s not a long-term strategy.

The Need For Anti-Spam Systems in AI Search Platforms:

Since the AI Search platforms are so new, there are some companies trying to game those systems to gain more visibility in AI responses. I began covering this topic in an earlier post, and it’s a shortsighted approach in my opinion. As the AI platforms inevitably develop anti-spam systems, the sites trying to game those platforms could face serious trouble down the line. Those anti-spam measures could yield the AI platforms’ version of spam updates or even the equivalent of manual actions (penalties applied to sites by the AI platforms).

Google and Bing have been tackling spam for decades and have sophisticated systems for identifying and dealing with that spam. For example, Google has SpamBrain, which is an AI-based spam prevention system that can handle and neutralize many different types of webspam. The AI Search platforms haven’t communicated about any anti-spam systems they have in place now, and we have already seen spam be extremely visible in some AI responses. So the AI platforms have a long way to go on that front.

Here is more about SpamBrain from Google. The AI Search platforms will need something like this, that’s for sure.

Regarding risky tactics, here’s a short list of recommendations I have seen thrown around for gaining visibility across AI platforms. I would steer clear of these:

- Using invisible text so LLMs can pick up that content but users can’t see it.

- Cloaking content so only AI bots can pick up certain content (while users and other bots do not see it).

- Scaling pages that answer every imaginable question around a specific topic (so basically scaled content abuse).

- Along the same lines, pumping out a ton of AI-generated content to flood the LLMs (and Google/Bing) with content optimized for many different prompts.

- Other more aggressive LLM manipulation tactics like what we just saw with ASCII smuggling attacks, prompt injections to hack AI, etc.

So if sites are spamming the AI Search platforms now and those platforms start implementing stronger spam detection systems, and maybe even some type of manual action system (penalties), then those sites could be digging a large hole that will be hard to get out of.
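
To make the first tactic in the list above (invisible text) a little more concrete, here is a minimal sketch of how simple a first-pass check for it can be. To be clear, this is my own illustration and not how any AI platform or search engine actually detects hidden text. The `HIDDEN_STYLE` patterns, the `HiddenTextFinder` class, and the `find_hidden_text` helper are all hypothetical names, the check only looks at inline `style` attributes, and it assumes reasonably well-formed HTML where non-void tags are closed.

```python
import re
from html.parser import HTMLParser

# Inline-style patterns often associated with hidden text.
# Illustrative only: real systems would also need to evaluate stylesheets,
# rendering, off-screen positioning, text/background color, and more.
HIDDEN_STYLE = re.compile(
    r"display\s*:\s*none"
    r"|visibility\s*:\s*hidden"
    r"|font-size\s*:\s*0(?![.\d])"
    r"|opacity\s*:\s*0(?![.\d])",
    re.IGNORECASE,
)

# Void elements never get a closing tag, so they are not tracked on the stack.
VOID_TAGS = {
    "area", "base", "br", "col", "embed", "hr", "img",
    "input", "link", "meta", "source", "track", "wbr",
}


class HiddenTextFinder(HTMLParser):
    """Collects text that sits inside an element hidden via inline style."""

    def __init__(self):
        super().__init__()
        self.stack = []        # one bool per open element: hidden by its own style?
        self.hidden_text = []  # text found under at least one hidden ancestor

    def handle_starttag(self, tag, attrs):
        if tag in VOID_TAGS:
            return
        style = dict(attrs).get("style") or ""
        self.stack.append(bool(HIDDEN_STYLE.search(style)))

    def handle_endtag(self, tag):
        if tag not in VOID_TAGS and self.stack:
            self.stack.pop()

    def handle_data(self, data):
        text = data.strip()
        if text and any(self.stack):
            self.hidden_text.append(text)


def find_hidden_text(html):
    """Return a list of text snippets that appear inside hidden elements."""
    finder = HiddenTextFinder()
    finder.feed(html)
    finder.close()
    return finder.hidden_text
```

If a few dozen lines of standard-library Python can flag the most basic version of this tactic, it’s reasonable to assume that platforms investing in real anti-spam systems will be able to catch far more.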

I think Lily Ray summed this up nicely:

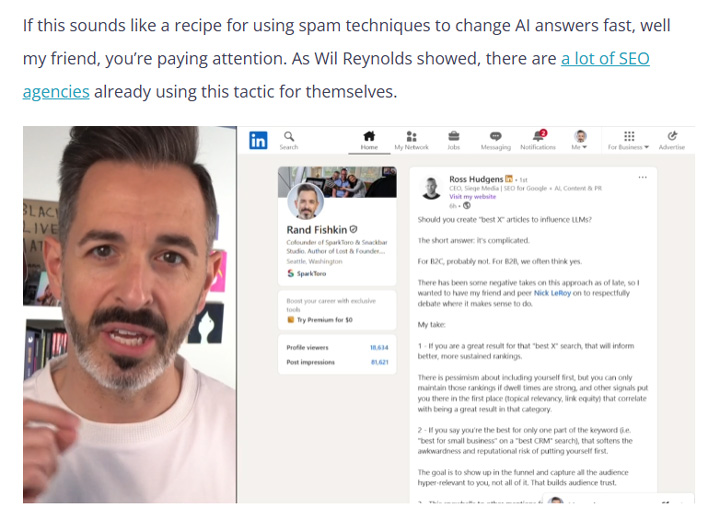

In addition, Rand Fishkin published a post and video recently covering spam in LLMs (including how the AI platforms do not have strong anti-spam systems yet). Rand specifically covers the “best product/service/provider” situation where companies are publishing many low-quality articles and plastering them everywhere in order to rank across AI Search tools. And it’s working… for now.

Here’s a good quote from Rand: “So it appears that unlike Google, these LLMs don’t sort of have the spam and manipulation and intelligence signals to figure out when they’re getting manipulated.”

But like I said earlier, I believe this will change. And you don’t want to be on the wrong end of those systems when updates get rolled out.

The Importance of Developing Site-level Quality and Trust Signals:

As AI Search platforms like ChatGPT, Perplexity, Claude, and others develop their own core systems, it’s going to be very important for them to have a strong understanding of authority, site-level quality, and trust. If not, any site out there writing about any topic could ultimately be returned via AI responses. And that could be extremely dangerous for users while also destroying trust in those AI Search platforms.

I have covered Google’s site-level quality algorithms for a very long time. You can read more about that in my mega-post about site-level quality scoring which includes every quote, video, and document that Google has shared about the topic. I used the “Gabeback Machine” to find every mention I’ve documented over time. There are quite a few…

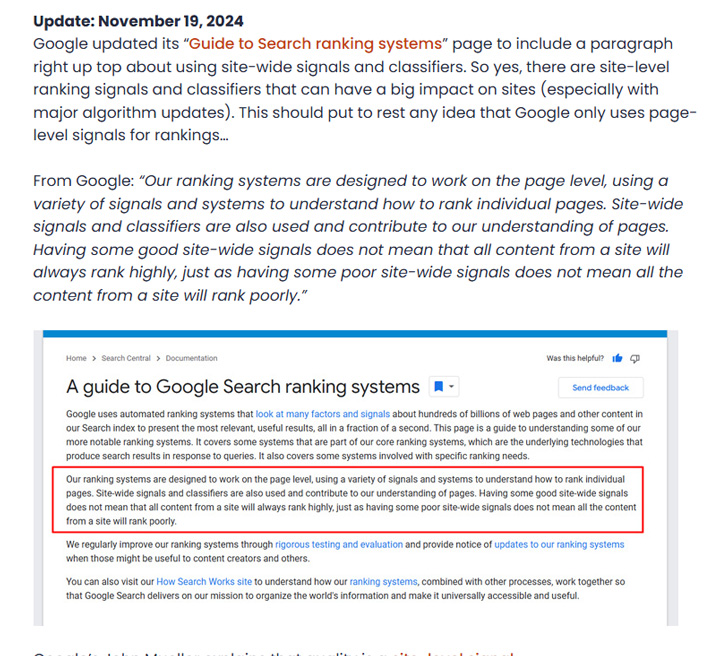

Here is just one example (of many) of Google explaining site-level quality:

Once AI Search platforms develop stronger systems for determining site-level quality, the stronger a site’s quality evaluation, the more that site’s content might be returned and cited in AI responses. The lower the site-level quality evaluation, the less it might be returned or cited. And if those systems work like Google’s and Bing’s systems, that can impact the entire site (all of the content across the site could be boosted, or dragged down, depending on the scoring). And that scoring could impact the site in various features across AI platforms like Instant Checkout, local results being returned, visual results, and more.
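
Here is a toy sketch of that site-wide effect. It is purely my own illustration under simple assumptions, not a description of how Google, Bing, or any AI platform actually scores sites. The `Page` class, the `relevance` and `site_quality` values (both assumed to be between 0 and 1), and the `citation_scores` function are all hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Page:
    url: str
    relevance: float  # hypothetical page-level relevance, between 0 and 1


def citation_scores(pages, site_quality):
    """Scale every page's relevance by one site-wide quality multiplier.

    Assumption for illustration: site_quality is a single number between
    0 and 1 that applies to all pages on the site.
    """
    if not 0.0 <= site_quality <= 1.0:
        raise ValueError("site_quality must be between 0 and 1")
    return {page.url: page.relevance * site_quality for page in pages}


pages = [Page("/great-guide", 0.9), Page("/decent-post", 0.5)]

# Same pages, same page-level relevance, different site-level evaluation.
strong_site = citation_scores(pages, site_quality=0.9)
weak_site = citation_scores(pages, site_quality=0.3)

for url in strong_site:
    print(url, round(strong_site[url], 2), round(weak_site[url], 2))
```

The point of the sketch is that nothing about the individual pages changed between the two runs. Only the site-level number moved, and every page moved with it, including the best page on the site.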

This is why getting hit by a Google broad core update can drag an entire site down rankings-wise. You can read my post about broad core updates to learn more about how site-level quality algorithms work:

So as the AI Search platforms evolve their core systems, and include site-level quality in their algorithms, sites gaming the system, scaling spam, etc., could face a very tough situation. I expect big changes to roll out across the AI Search platforms over time that feel like Panda or Penguin of the past for AI Search visibility. And if you don’t know about those updates, please read about them. They were crafted to target specific tactics that site owners were using to game Google’s algorithms, and the results were catastrophic for sites heavily impacted.

The AI Search Challenge For Understanding “Authority”:

Regarding authority, I watched a recent podcast with Mark Williams-Cook about AI Search and there was a great segment about “Authority”, how PageRank enables Google to better understand authoritative sites (and even in a given niche), and more.

He also covers how most AI Search platforms don’t have a version of the link graph like Google does and how that can cause problems when trying to build the right answer (especially when you need expert information). Note, Gemini and Copilot can always leverage Google’s and Bing’s systems, so they can benefit from a strong link graph, but other AI Search platforms don’t have that…

In addition, Mark explains how the AI platforms are supposedly buying link data from various third-party companies. That underscores the importance of that data for the AI platforms and supports the idea that they will want to build systems that partially rely on those types of signals.

You can watch Mark cover all of this below at 33:16 in the video:

And understanding authority and site-level quality will be especially important for YMYL categories (“Your Money or Your Life”) like health and medical, finance, legal, etc. You don’t want some fly-by-night site giving you financial advice or providing critical health information. This is also why Google has explained that when it determines a user is searching for a YMYL topic, it gives more weight in its ranking systems to factors supporting its understanding of E-E-A-T. And the “A” in double EAT represents authority, which is heavily influenced by PageRank. And again, listen to Mark’s comments from the video above about the importance of the link graph for understanding authority, site-level quality, etc.

Here is my X thread about this topic, which includes Google’s documentation:

Combining site-level quality scoring and anti-spam systems could yield serious volatility as the AI Search platforms grow.

Let’s quickly jump back to Panda and Penguin of the past, the product reviews updates, and more. Algorithm updates like those, which targeted loopholes in Google’s algorithms, could be coming for AI Search platforms as well. And if you’re not familiar with updates like Panda and Penguin, please spend some time reading about them. Because in my opinion, the AI Search platform versions of those updates are coming, and maybe soon.

Here is what a big Panda hit looked like in the past. Search visibility plummeted when Panda rolled out and traffic followed. I think this could happen, but in AI Search for those gaming the AI platforms:

There is no way the AI Search platforms like ChatGPT, Perplexity, and Claude can let people game visibility while destroying user trust over time. The more people get burned by seeking out valuable content and receiving lower-quality content forced into AI Search via some loophole, the more those people will find other solutions (like jumping to Google or competing AI Search platforms that are building stronger core systems and more sophisticated anti-spam measures).

Of course, tracking AI visibility across many sites is hard right now, so tracking these updates could be a challenge. Unfortunately, there aren’t strong solutions for tracking AI visibility like we’ve had for SEO. For example, Semrush, Ahrefs, and Sistrix have been key players for tracking search visibility over time (tracking thousands, hundreds of thousands, or even millions of queries per site), but no company has created that for AI Search yet (not reliably anyway). This will make it very hard to understand when sites surge or plummet in AI Search visibility over time.

And based on speaking with many companies about AI Search over the past year or two, I’m not even confident they can track their own AI visibility, let alone visibility across third-party sites. Tracking a handful of synthetic prompts won’t cut it in my opinion. There needs to be much deeper tracking to truly understand visibility changes over time, how that might drop when AI platforms push big changes, etc.

Also, the AI visibility tracking tools are very expensive for the most part. Too expensive for many marketers to invest in. I’ll cover more about that in another post, but for now just understand that tracking AI visibility at scale, across industries, countries, etc., is not really happening yet in a strong way. But it needs to happen, and quickly. Stay tuned on that front.

Summary – Big changes are coming to AI Search platforms. Don’t get yourself into trouble by spamming those platforms…

To summarize, the AI Search platforms are in their infancy now and will probably be leveraging their own indexes and search systems as time goes on. As they do, those AI platforms will need to develop strong core systems, implement some level of trust or site-quality scoring, and then implement anti-spam systems that can scale and adapt quickly. And as all of that happens, I expect we will see a lot of serious volatility in AI Search visibility across sites and verticals. Some of that might happen during very specific updates (like Panda and Penguin of the past, but for AI platforms).

So although sites can implement tactics now that try to game AI Search platforms for visibility, I highly recommend avoiding the temptation to do that. I have seen firsthand over decades how that ends up for sites pushing the limits. It’s ugly, can cause serious financial harm to those companies, and creates a giant hole that site owners need to dig themselves out of. Again, I recommend avoiding that at all costs. Beware.

GG