The mad dash is on to clean up link profiles. Penguin 4.0 is quickly approaching and many companies are heavily analyzing their link profiles in order to flag and handle unnatural links. Penguin 4.0 is supposed to be the real-time Penguin, which is great news for SEOs and webmasters who have dealt with Penguin issues in the past. That said, you never know how Google’s algorithms are going to behave until they are actually released into the wild (as we have seen before). We’ll hopefully learn more about Penguin 4 very soon.

I’ve been helping several companies prepare for the arrival of our cute, black and white, allegedly real-time, Antarctic friend. Yes, go ahead and say that five times fast. :) And while helping those clients, I’ve received a lot of questions about how to save time while analyzing links. For example, which tools, tips, and resources can help as you go through the process of analyzing a link profile? So, based on those questions, I’ve decided to write this post to cover six time-saving tips. My hope is they can make your SEO life a little easier as you fight unnatural links.

Below, I’ll cover plugins, resources, SEO tools, and Excel tips. Here we go… the icy waters of Penguin await. :)

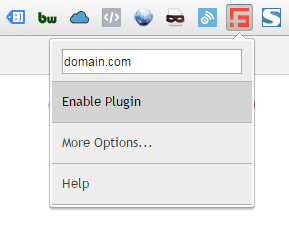

SpamFlag Chrome Extension

I was part of the beta when SpamFlag first launched, and I’ve loved the plugin ever since. SpamFlag is a Chrome extension that enables you to quickly identify a link on a webpage that’s pointing to a specific domain. You can enter the domain in the plugin settings, along with additional project domains (so you can list several to surface while analyzing a webpage). Then when you visit a url, SpamFlag will highlight links to the domain(s) in question. It’s a huge timesaver while analyzing unnatural links.

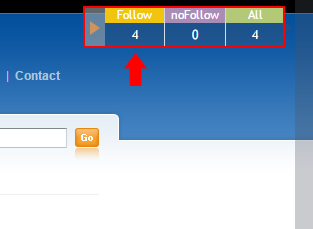

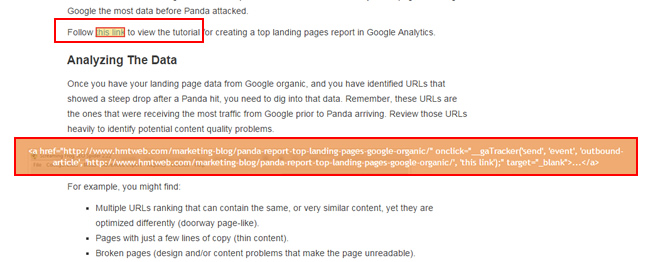

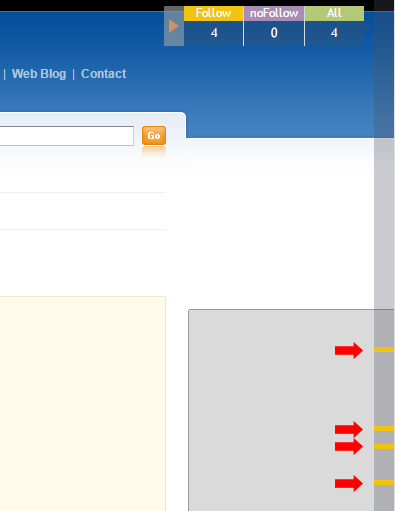

SpamFlag provides a main notification menu at the top, which shows you if there is a link to the domain you are analyzing, how many of the links are nofollowed, how many are followed, and then a total number of links found (based on the domain you are analyzing). You can also click the link-type in the menu to hop down to the first link on the page that was flagged. For example, by clicking the “followed links” category in the top menu, you will be taken to the first followed link on the page that’s pointing to the domain you are analyzing.

Each link is highlighted in yellow, so you can easily spot them on the page. That’s very helpful, especially when you are digging through thousands of links. When you hover over a highlighted link, you can view the html code for the link (which enables you to truly see if it’s nofollowed, the destination url on the domain you are analyzing, etc.). Again, it’s a big time saver versus choosing “view source” and then digging through the source code.

And last, but not least, you can view small highlighted bars on the right side of the page (near the scrollbar in the browser) that show you where the links are located on the page. And you can click those highlighted bars to jump to that specific link. Awesome.

So, if you are working on many link analysis projects, then I highly recommend checking out SpamFlag. You might love it as much as I do.
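To make the idea concrete, here is a minimal Python sketch of the kind of check described above: find links on a page that point to a domain you are analyzing, and split them into followed and nofollowed. This is not how SpamFlag itself is built (I have no insight into its code). The names `LinkFlagger` and `flag_links` are hypothetical, and the sketch assumes you already have the page’s html as a string.

```python
from html.parser import HTMLParser
from urllib.parse import urlparse


class LinkFlagger(HTMLParser):
    """Collects links pointing at a target domain, split by rel="nofollow"."""

    def __init__(self, target_domain):
        super().__init__()
        self.target = target_domain.lower()
        self.followed = []
        self.nofollowed = []

    def handle_starttag(self, tag, attrs):
        if tag != "a":
            return
        attr = dict(attrs)
        href = attr.get("href") or ""
        try:
            host = (urlparse(href).hostname or "").lower()
        except ValueError:
            # Malformed urls are skipped rather than guessed at.
            return
        # Match the domain itself or any subdomain of it (e.g. www.).
        if host != self.target and not host.endswith("." + self.target):
            return
        rel_tokens = (attr.get("rel") or "").lower().split()
        if "nofollow" in rel_tokens:
            self.nofollowed.append(href)
        else:
            self.followed.append(href)


def flag_links(html, target_domain):
    """Return (followed, nofollowed) lists of hrefs pointing at target_domain."""
    parser = LinkFlagger(target_domain)
    parser.feed(html)
    return parser.followed, parser.nofollowed
```

Calling `flag_links(page_html, "example.com")` returns two lists, so the lengths give you the followed, nofollowed, and total counts. Relative links are ignored because they have no hostname, which is an assumption that fits auditing external pages.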

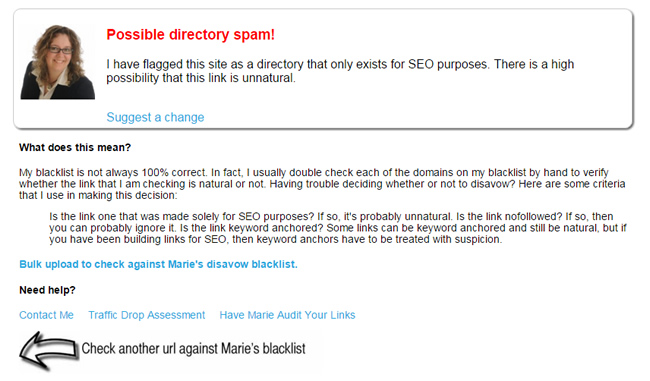

Marie Haynes Disavow Blacklist

If you’re neck deep in SEO, and you have read about unnatural links, then you are probably familiar with Marie Haynes. Marie has been heavily involved with both manual actions and Penguin work and has completed a boatload of link audits.

Given what I just explained, it was great of her to build a tool that enables anyone to check a domain against her own blacklist. Just enter a domain and click “Check it!” Marie’s tool will return a message explaining more about the domain, what her recommendation is, etc. There are several classifications of links that Marie covers in her responses.

When performing link audits, you’ll come across both highly spammy domains and clean domains. But in SEO, there are often many shades of gray. For example, you might come across a domain that seems a little spammy, but you’re not sure if you should use the domain operator or just disavow the url. For times like that, checking Marie’s blacklist can be very helpful. Again, Marie has come across many domains during her Penguin travels, so there’s a good chance she has flagged many you are coming across too. And I know she continually updates the database, which is great.

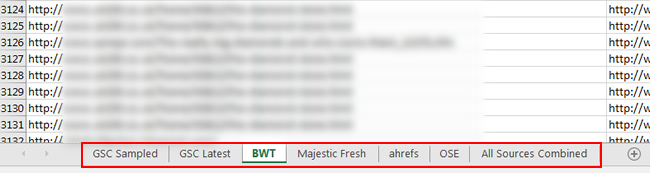

Which Sources Of Links Should I Use?

Whenever link analysis comes up, I’m always asked which sources of links to use. Is it ok to just use Google Search Console (GSC)? Should you include link analysis tools like Majestic? How much is too much? These are all great questions and it’s important to make sure you cover your bases.

First, you definitely don’t want to miss any unnatural links. That can happen if you simply use one or two tools for collecting links. Also, Google Search Console (GSC) only provides up to 100K of your links per “site” verified in GSC, which isn’t sufficient when you are analyzing a site with hundreds of thousands, or millions, of links. Sure, there are ways to squeeze more out of GSC, but it’s a little tedious. For example, you can verify directories in GSC and then export links by directory. That’s totally doable, but will require some additional work. And it’s still limited to 100K per “site” in GSC.

So, I usually recommend exporting links from the following sources:

- Google Search Console (GSC)

  Hey, it’s Google, definitely start here! Just make sure to export both sampled links and latest links. Then dedupe those. I’ll explain how to do that soon.

- Bing Webmaster Tools (BWT)

  Yes, Bing is a search engine too, so don’t overlook it.

- Majestic

  To me, it’s the best link analysis tool on the market and provides a wealth of link data.

- ahrefs

  A close second to Majestic. It’s a great link analysis tool that also provides a wealth of link data.

- Open Site Explorer (OSE)

  Typically, it doesn’t contain the most in-depth view of your link profile, but it’s a good supplemental source of links. Remember, you’re trying to cover as many links to your site as possible. Adding OSE is a good idea.

Note, I recommend keeping a spreadsheet that contains all sources of links, but keep each source in its own worksheet. So you should have six different worksheets in your spreadsheet (one per source, including one for GSC sampled and one for GSC latest). Then you’ll want to create a master list in another worksheet (more about that shortly).

By gathering links from all of the sources listed above, you can rest assured you are covering your bases. There shouldn’t be many link surprises like there can be if you simply choose one or two of those sources. Now, once you download your links, you’ll likely be staring at a boatload of link data. Don’t worry, you can cut that list down pretty quickly. I’ll cover a required link tactic next – deduplication.

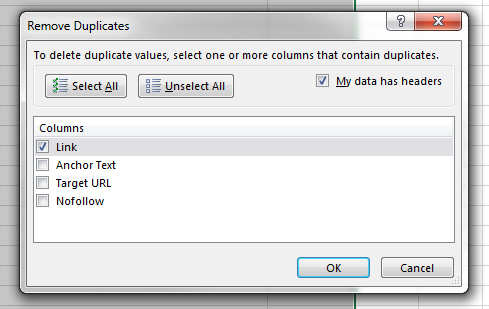

Dedupe In Excel

Once you gather all of your links from across sources (as documented above), you’ll definitely want to create a master list to analyze. For example, there will probably be many overlapping links across sources, so you don’t want to waste your time analyzing the same links over and over.

Enter Excel, the Swiss Army knife for SEOs, less the bottle opener. Once you create a worksheet that contains all links from across sources, simply click the Data tab, and then Remove Duplicates. Once you do, Excel will prompt you to choose the column to base the deduplication on. Once you choose the column containing your links, and click OK, Excel will quickly remove any duplicates and inform you how many it found. Voila. :)
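If you would rather script this step than click through Excel, the same idea can be sketched in a few lines of Python. This is only an illustration under some assumptions: the function name `build_master_list` is hypothetical, each source is assumed to already be loaded as a list of url strings, and two links count as duplicates only when the strings match exactly after trimming whitespace.

```python
def build_master_list(sources):
    """Merge link lists from several sources, keeping the first copy of each url.

    `sources` maps a source name (e.g. "gsc_sampled") to a list of url strings.
    """
    seen = set()
    master = []
    for urls in sources.values():
        for url in urls:
            key = url.strip()
            # Skip blank cells and anything already in the master list.
            if not key or key in seen:
                continue
            seen.add(key)
            master.append(key)
    return master
```

The result keeps the order in which links were first seen, so a link that appears in several exports shows up once in the master list.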

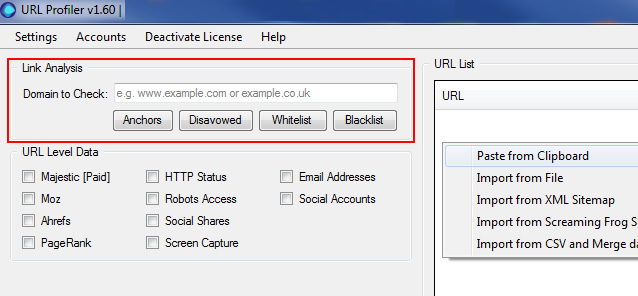

URL Profiler

URL Profiler is one of my favorite SEO tools, especially when performing link analyses. You can do many things with URL Profiler, but I’ll focus on how it helps with analyzing and sorting links.

After gathering links from across data sources, you’ll notice that the data from Google Search Console does not contain any information beyond the actual link. For example, you don’t have anchor text, target url, or any other piece of valuable data that comes from link analysis tools like Majestic, ahrefs, and Open Site Explorer. That makes it tougher to analyze links from GSC.

Enter URL Profiler. Once I dedupe all of the link data, I run that deduped list through URL Profiler. By doing so, I receive a boatload of data back, including anchor text, target url, classification of links, whether it’s nofollowed, header response codes, the root domain extracted for me, etc. The additional fields of data you receive from URL Profiler are extremely helpful while analyzing links.

Also, before crawling your links, you can add domains to a whitelist, blacklist, and you can import your disavow file (to focus the crawl on what you need). Yes, more time-saving features.

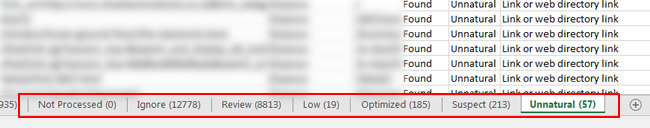

But it gets even better. URL Profiler breaks your links into specific worksheets by category (based on what it finds during the crawl). For example, you will find worksheets titled unnatural, review, ignore, none (no link found), etc. Note, I still believe you should manually analyze all links, since no system is perfect. But, the breakdown enables you to start with the riskiest links and work your way through the spreadsheet.

Bonus: Concatenation in Excel And The Last Mile For Disavow Files

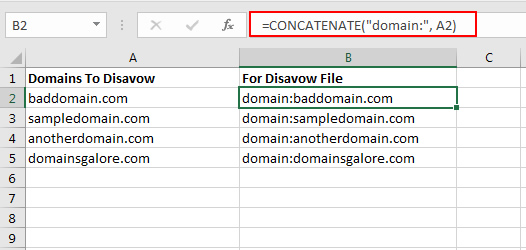

Last, but not least, let’s cover one of my favorite functions in Excel – concatenation. The concatenation function enables you to combine strings. For our purposes, you will inevitably want to add the domain operator (domain:) to the final list of domains you flag during your analysis. Then, the final list can be copied to your disavow file.

So instead of copying and pasting “domain:” before every domain you want to include, you could simply create a new column that will automatically do this for you. Let’s say you have a final list of domains to disavow in a worksheet (and they are in column A). In column B, add the following formula:

=CONCATENATE("domain:", A2)

That will prepend “domain:” to the domain name listed in column A. Then simply hover over the bottom-right corner of the cell containing your formula and double click. Now that formula will be copied to every row in that column (and you will have a list of domains to disavow). All you will have to do is copy and paste that column into your disavow file.
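For anyone keeping their final list outside of Excel, here is a small Python sketch of the same last-mile step. The function name `to_disavow_lines` and the sample domains in the usage note are hypothetical. It assumes you already have your flagged domains as a list of strings.

```python
def to_disavow_lines(domains):
    """Prefix each flagged domain with the domain: operator, skipping blanks."""
    lines = []
    for domain in domains:
        cleaned = domain.strip()
        if cleaned:
            lines.append("domain:" + cleaned)
    return lines
```

For example, `"\n".join(to_disavow_lines(["spammy-directory.example", "link-farm.example"]))` gives you one `domain:` entry per line, ready to paste into your disavow file.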

Summary – Save Time While Preparing For Penguin 4.0

Performing a deep link analysis is extremely time consuming. Therefore, it’s important to save time any way you can. In this post, I covered important tools, resources, and tips that can save SEOs time, from working in Excel to choosing link sources to using third party tools like URL Profiler. The more time you save, the more links you can get through. And the more links you can flag, the greater chance you have at keeping Penguin at bay. And that’s always a good thing.

GG

Do you think that Google might start punishing domains which appear in many disavow files from other webmasters? So, if they notice baddomain.com being consistently disavowed, they could discern that the site is probably garbage and demote it accordingly … And then subsequently lift penalties against other web sites which may have taken a hit because of baddomain’s garbage links but did not explicitly define baddomain.com in their disavow file? Wouldn’t that be fantastic?

Great question. John Mueller confirmed that “at the moment”, they don’t use disavowed domains against any website. You can watch the video here (at 20:35 in the video) -> https://www.youtube.com/watch?v=BAcEz_-ujCw&feature=share&t=20m35s

But again, he said “at the moment”, so anything is possible. For the spammiest sites, I don’t think Google needs any help. It’s pretty obvious. But for sites in the gray area, disavow data could lead to some very interesting findings for Google. :)

Thanks, Thanks, Thanks, Glenn. I am so glad I found your website searching for posts about Thin-Content yesterday. Your posts are amazing.

After reading your posts about Panda, I already deleted 20% (40 pages) of my small website and rewrote or added content to several others. Unfortunately, in the past, I sold paid links in articles. Is it safe to delete the paid links, but keep the good quality articles?

Furthermore, I went through my list of external links and checked them all with Marie Haynes tool. Is it right for me to disavow everything which she blacklists as: Possible SEO bookmark spam, spam link or low-quality article spam?

Also, a few years ago I created some so-called Web 2.0 Tier pages with thin content (mostly one or two 300-word pages) on websites like: wordpres.com, weebly.com, angelfire, tripod. Should I delete them all? I guess nuclear option and deleting everything is right?

I am still confused about all of this, but thanks for your awesome advice, I feel I am gaining the control of my small website back, bit by bit. Thank you a lot.

Hey, thanks. I’m glad my posts have been helpful! Regarding the articles where you sold paid text links, it’s really hard to say without actually seeing them. If they are high quality articles, then you can simply nofollow the links (or remove them). If the posts aren’t great quality and they were created for the sole purpose of linking out, then nuke the pages.

Regarding Marie’s blacklist, it’s helpful to check the tool when you are on the fence about a specific domain. If the domain is listed and Marie has flagged it, then there’s a really good chance you would want to follow her direction. But you should analyze your links with an understanding of your own niche. So you might have a different opinion based on data you have access to. But again, Marie has done a lot of link analysis, so her recommendations are strong.

Regarding the tier pages, if they were created for links, then nuke them. I would have to see them to give a solid recommendation, but it sounds like they should go. :) I hope that helps.