Google’s quality algorithms are always at work. Google’s John Mueller has explained a number of times that if you are seeing a decrease in rankings during algorithm updates, and over the long-term, then Google’s quality algorithms might not be convinced that your site is the best possible result for users. I would even go a step further and say that includes user experience.

In addition, John has explained several times that ALL pages indexed are taken into account when Google evaluates quality. So yes, every page that’s indexed counts towards your site’s “quality score”.

Here are some clips from John explaining this:

At 10:06 in the video:

At 25:20 in the video:

And here’s a quote from a blog post from Jennifer Slegg where she covers John explaining this in a webmaster hangout video.

In the post John explains:

“From our point of view, our quality algorithms do look at the website overall, so they do look at everything that’s indexed.”

In addition, Panda (which has changed since being incorporated into Google’s core ranking algorithm) is also on the hunt for sites with low-quality content. Panda now continually runs and slowly rolls out over time. It still requires a refresh, but does not roll out on one day. Last October, Gary Illyes was interviewed and explained that Panda evaluates quality for the entire site by looking at a vast amount of its pages. Then it will adjust rankings accordingly.

Here’s the quote from the interview with Gary (you can read Barry’s post and listen to the audio interview via that post):

“It measures the quality of a site pretty much by looking at the vast majority of the pages at least. But essentially allows us to take quality of the whole site into account when ranking pages from that particular site and adjust the ranking accordingly for the pages.”

So from what we’ve been told, Panda is still a site-level score, which then can impact specific pieces of content based on query. That means site-level quality is still evaluated, which also means that all content on the site is evaluated. And remember, John has said that ALL pages indexed are taken into account by Google’s quality algorithms.

I bring all of this up because Gary Illyes recently said at SMX East that removing low-quality or thin content shouldn’t help with Panda, and it never should have. So there’s definitely confusion on the subject, and many are taking Gary’s statement to mean that removing low-quality or thin content won’t help their situation from a quality standpoint. Or, that they need to boost all low-quality content on a site, even when that includes thousands, tens of thousands, or more urls.

Anyone who has worked on large-scale sites (1M+ urls indexed) knows that it’s nearly impossible to boost the content on thousands of thin pages, or more (at least in the short-term). And then there are times when you surface many urls that are blank, contain almost no content, are autogenerated, etc. In that situation, you would obviously want to remove all of that low-quality and thin content from the site. And you would do that for both users and SEO. Yes, BOTH.

Clarification From John Mueller AGAIN About Removing Low-Quality Content:

John Mueller held another webmaster hangout on Tuesday and I was able to submit a pretty detailed question about this situation. I was hoping John could clarify when it’s ok to nuke content, boost content, etc. John answered my question, and it was a great answer.

He explained that when you surface low-quality or thin content across your site, you have two options. First, you can boost content quality. He highly recommends doing that if you can, and he explained that Google’s Search engineers also confirm that’s the best approach. So if you have content that might be lacking, then definitely try to boost it. I totally agree with that.

Your second option applies when there is so much low-quality or thin content that it’s just not feasible to boost it. For example, if you find 20K thin urls with no real purpose. In that case, John explained it’s totally ok to nuke that content via 404s or by noindexing the urls. In addition, you could use a 410 header response (Gone) to signal to Google that the content is definitely being removed for good. That can speed up the process of having the content removed from the index. It’s not much quicker, but slightly quicker. John has explained that before as well.
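To make the removal options concrete, here is a minimal Python sketch of what each one implies at the HTTP level. The function name `removal_response` and the `mode` labels are hypothetical and only used for illustration. The `X-Robots-Tag: noindex` response header is used here as the header-based counterpart to a meta robots noindex tag. This is a sketch under those assumptions, not a description of how any particular server or CMS is set up.

```python
from http import HTTPStatus


def removal_response(mode: str) -> tuple[int, dict[str, str]]:
    """Return (status code, extra headers) for a thin URL.

    `mode` is a hypothetical label chosen for this sketch:
      "gone"      - content removed for good (410)
      "not_found" - content removed (404)
      "noindex"   - page stays live for users but is kept out of the index
    """
    if mode == "gone":
        return HTTPStatus.GONE.value, {}
    if mode == "not_found":
        return HTTPStatus.NOT_FOUND.value, {}
    if mode == "noindex":
        # The page still returns 200; the header asks search engines not to index it.
        return HTTPStatus.OK.value, {"X-Robots-Tag": "noindex"}
    raise ValueError(f"unknown mode: {mode!r}")
```

In practice you would wire something like this into whatever serves your pages, so that each url you have decided to prune returns the status code or header that matches your decision.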

Here is John’s response (at 6:21 in the video):

Note, it was on Halloween, hence the wig. :)

Seeing The Forest Through The Trees – Pruning Content For Users And For SEO:

Removing low-quality content from your site is NOT just for SEO purposes. It’s for users and for SEO. You should always look at your site through the lens of users (just read my posts about Google’s quality updates to learn how a negative user experience can lead to horrible drops in traffic when Google refreshes its quality algorithms.)

Well, how do you think users feel when they search, click a result, and then land on a thin article that doesn’t meet their needs? Or worse, hit a page with thin or low-quality content and aggressive ads? Maybe there are more ads than content and the content didn’t meet their needs. That combination is the kiss of death.

They probably won’t be so thrilled about your site. And Google can pick up user happiness in aggregate. And if that looks horrible over time, then you will probably not fare well when Google refreshes its quality algorithms down the line.

So it’s ultra-important that you provide the best content and user experience as searchers click a result and reach your site. That’s probably the most important reason to maintain “quality indexation”. More on that soon, but my core point is that index management is important for users and not just some weird SEO tactic.

Below, I’ll quickly cover the age-old problem of dealing with low-quality content. And I’ll also provide some examples of how increasing your quality by managing “quality indexation” can positively impact a site SEO-wise.

Nuking Bad Content – An Age-Old Problem

Way back in web history, there was an algorithm named Panda. It was mean, tough, and you could turn to stone by looking into its eyes. OK, that’s a bit dramatic, but I often call old-school Panda “medieval Panda” based on how severe the hits were.

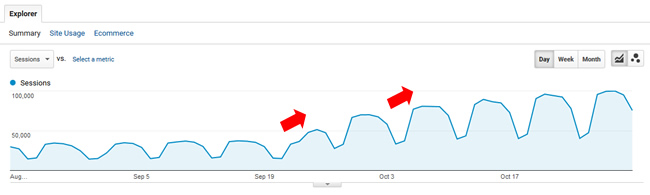

For example, the worst hit I’ve ever seen was 91%. Yes, the site lost 91% of its traffic overnight.

Here’s what a big-time Panda hit looked like. And there were many like this when medieval Panda rolled out:

The Decision Matrix From 2014 – That Works

When helping companies with Panda, you were always looking for low-quality content, thin content, etc. On larger-scale victims (1M+ pages indexed), it wasn’t unusual to find thousands or tens of thousands of low-quality pages. And when you found that type of thin content on a site that’s been hammered by Panda, you would explain to a client that they need to deal with it, and soon.

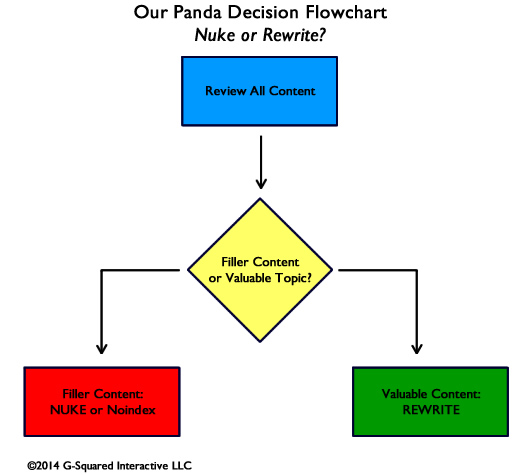

That’s when I came up with a very simple decision matrix. If you can boost the content, then do that. If you can’t improve it, but it’s fine for users to find once they are on the site, then noindex it. And if it’s ok to remove for good, then 404 it.

Here is the decision matrix from my older Panda posts (and it’s still relevant today):

I called this “managing quality indexation” and it’s extremely important for keeping the highest quality content in Google’s index. And when you do that, you can steer clear of getting hit by Google’s quality algorithms, and Panda.

So, it was never just about nuking content. It was about making sure users find what they need, ensuring it’s high-quality and that it meets or exceeds user expectations, and making sure Google only indexes your highest quality content. You can think of it as index management as well.

Therefore, boost low-quality content where you can, nuke where you can’t (404 or 410), and keep on the site with noindex if it’s ok for users once they are on the site. This is about IMPROVING QUALITY OVERALL. It’s not a trick. It’s about crafting the best possible site for users. And that can help SEO-wise.
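If it helps to see the decision matrix as logic, here is a small Python sketch. The `Page` fields and the `decide` function are hypothetical names made up for this example, and the two yes/no judgments still have to come from a human review of each url (or group of urls). It simply encodes boost, noindex, or 404 in the order described above.

```python
from dataclasses import dataclass


@dataclass
class Page:
    url: str
    can_improve: bool      # is it feasible to boost this content?
    useful_on_site: bool   # is it fine for users to find once they are on the site?


def decide(page: Page) -> str:
    """Apply the decision matrix: boost first, then noindex, otherwise remove."""
    if page.can_improve:
        return "boost"
    if page.useful_on_site:
        return "noindex"
    return "404"  # or 410 if the content is gone for good


# Hypothetical urls, purely for illustration.
pages = [
    Page("/guides/thin-but-fixable", can_improve=True, useful_on_site=True),
    Page("/search?q=widgets", can_improve=False, useful_on_site=True),
    Page("/autogenerated/blank-123", can_improve=False, useful_on_site=False),
]
for p in pages:
    print(p.url, "->", decide(p))
```

The order of the checks matters: boosting always wins when it’s feasible, and removal is the last resort.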

It’s Never Just Nuking Content, It’s Improving Quality Overall

There’s also another important point I wanted to make. When I help clients that have been negatively impacted by major algorithm updates focused on quality, we NEVER just nuke content. There’s a full remediation plan that takes many factors into account, from improving content quality, to removing UX barriers, to cutting down aggressive, disruptive, or deceptive advertising, and more.

Therefore, I’m not saying that JUST nuking content will result in some amazing turnaround for a site. It’s about improving quality overall. And by the way, Google’s John Mueller has explained this before. He said if you have been impacted by quality updates, then you should look to significantly improve quality over the long-term.

Here’s a video of John explaining that (at 39:33 in the video):

And What Can This All Lead To? Some examples:

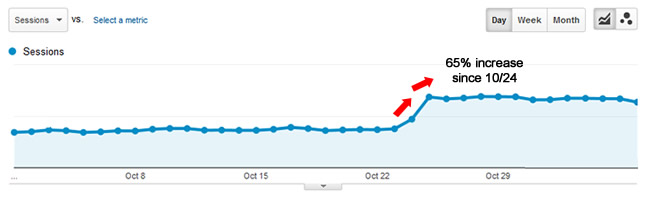

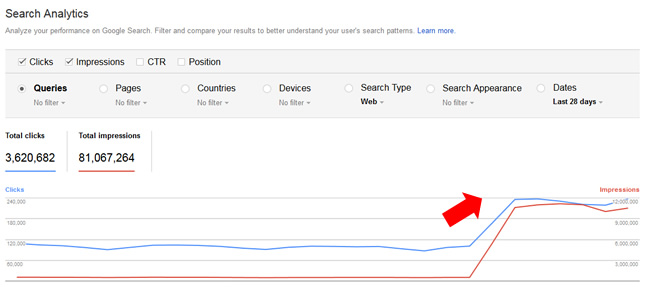

Below, you can see trending for sites that worked hard on improving quality overall, including improving their index management (making sure only their highest-quality content is indexed). Note, they didn’t just nuke content… They fixed technical SEO problems, published more high-quality content, but they also removed a large chunk of low-quality or thin content. Some of the sites removed significant amounts of low-quality content (tens or even hundreds of thousands of urls in total). They are large-scale sites with millions of pages indexed.

And there are many more examples that fit into this situation. Always look to improve quality indexation by boosting content quality AND removing low-quality or thin content. If you do, great things can happen.

My Advice, Which Hasn’t Changed At All (nor should it)

After reading this post, I hope you take a few important points away. First, you should focus on “quality indexation”. Google’s quality algorithms take all pages indexed into account when evaluating quality for a site. So make sure your best content is indexed. From a low-quality or thin content standpoint, you should either improve, noindex, or 404 the content. That’s based on how much there is, if it’s worthwhile to improve, if it’s valuable to users once they visit your site, etc. You can read the section earlier for more information on the decision matrix.

In closing, don’t be afraid to remove low-quality or thin content from your site. And no, you DON’T need to boost every piece of low-quality content found on your site. That’s nearly impossible for large-scale sites. Instead, you can noindex or 404 that content. Google’s John Mueller even said that’s a viable strategy.

So fire away. :)

GG

Interesting read, thank you very much! Perhaps this is a super stupid question, in which case my apologies, but what about pages that are part of the navigational structure of a site that are semi-automatically created by the CMS (in my case WordPress)? Is a tag page which only features a link to one single article a low quality page or does Google recognize this is a common type of page that’s part of the navigation? If not, should I de-index these kinds of pages until there are multiple articles with the same tag?

Thanks Sabrina. I’m glad my post was helpful. And that’s a great question. Tag and category pages are often great candidates for using the meta robots tag with noindex, follow. Then Google won’t index the pages, but will follow the links to your blog posts. Those are really the pages you want indexed (and want Google to focus on). So using the meta robots tag there would be a great way to go. I hope that helps.
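For anyone who wants to see what that looks like, here is a tiny Python sketch that picks the meta robots tag by page type. The function name `robots_meta` and the page type labels are hypothetical, and this is not how WordPress or any specific plugin implements it. It just shows the tag that would go in the head of a tag or category page.

```python
def robots_meta(page_type: str) -> str:
    """Return the meta robots tag for a page type (labels are hypothetical)."""
    if page_type in {"tag", "category"}:
        # Keep the page out of the index, but let crawlers follow its links to posts.
        return '<meta name="robots" content="noindex, follow">'
    # No tag needed for other pages: indexing and following links is the default.
    return ""


print(robots_meta("tag"))
```

Blog posts get no tag at all in this sketch, since those are the pages you want indexed.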

Definitely! Thanks so much for your (quick!) reply.

An extremely helpful post, Glenn. In our blog we have a bunch of old low-quality posts (after 6 years, we now know how to create high-quality content so much better than before), and we feel that we need to revisit all those posts and make decisions about what to do with them. This post is a great guide to know how to deal with that, so thank you for that!

Hey, thanks Franpu. I appreciate it. I’m glad my post was helpful. I know there’s a lot of confusion about what to do with low-quality or thin content once surfaced, so I figured I would write up a post with information from Google, my advice based on helping many companies deal with this situation, etc. Definitely boost if you can, but don’t be afraid to remove if you need to. Focus on having your best content indexed, since every page will be evaluated from a quality standpoint.

Thank you Glenn for this great read.

I’ve cross-referenced logs and analytics data and found that on some websites, some pages got visits but were not crawled by Googlebot. So they are not that interesting to Google, but collectively bring some traffic.

Noindexing those pages will cut the traffic they get, and in the short term I can’t improve their content.

So the question is, do you think that the overall quality improvement can exceed the traffic loss from de-indexing the thin content?

Thanks Victor. I’m glad you found my post valuable. That’s an interesting situation. To help with understanding what should be indexed, it’s always important to ask yourself a few simple questions:

Why would the page be indexed? And what would users be looking for that this post could help them with?

If there is unique and valuable content that can help users searching for solutions, then definitely have it indexed. If it can’t, but can help other users not coming from Search, then use the meta robots tag with noindex. And if it’s just low quality or ultra-thin and shouldn’t be on the site, then 404 it. I hope that helps.