{Update July 2022: I just published my post about the May 2022 broad core update with five micro-case studies covering drops and surges.}

On Monday, May 4, 2020, Google pushed another broad core update. Google only releases these updates three to four times per year. They are huge, global updates that can have a significant impact on sites across categories and countries. Although the update can take about two weeks to fully roll out, you can usually begin to see impact within a few days. And we saw impact even quicker with this update. For example, we saw big swings in rankings and traffic on May 5th, just one day after the update rolled out.

In this post, I’ll first cover some key points related to core updates, since I’m hearing a lot of confusion from site owners about certain topics. These points are important for site owners to understand for several reasons and I’ll cover those below. After going through those topics, I’ll cover four case studies that provide some valuable lessons about core updates, about the need for continual monitoring and improvement, and what happens when you don’t nip SEO problems in the bud. Above all, the cases emphasize the complexity of Google’s broad core updates.

The four cases include a recovery from a previous core update, a site seeing (mostly) long-term wins during core updates, a massive drop during the May core update, and then a recovery from a non-core update during the May 2020 core update.

Clearing Up Some Confusion About Core Updates

When core updates roll out, it can be very confusing and stressful for site owners. And you can see that confusion in many of the questions that are being asked across the web, along with the questions that are sent to me privately (via email, Twitter direct messages, Facebook messages, LinkedIn, etc.)

I’ve covered core updates many times in my previous posts, so those posts are always a good place to learn more about how broad core updates work. That said, I’ll organize some key points below that site owners should be aware of in order to make sound decisions about how to move forward.

1. There’s Never One Smoking Gun, There’s Typically A Battery Of Them

If you’ve read my previous posts about core updates, then you probably know this one already. With core updates, Google is taking many factors into account over an extended period of time. Based on what Google has said many times, and what I have seen in the field while helping companies that have been impacted, there’s never one or two problems that cause negative impact. Instead, there’s usually a battery of problems across the site. In addition, Google always pushes relevance adjustments, which aren’t necessarily a bad thing. More about that soon.

Regarding problems that could be causing negative impact, they span content quality, user experience, aggressive, disruptive, or deceptive advertising, technical SEO problems that cause quality problems, and more. In addition, Google is always looking to surface the most authoritative content written by experts in a niche. And that’s especially true for what Google calls “Your Money or Your Life” (YMYL) content. So on top of what I already mentioned, there could be several issues related to expertise, authoritativeness, and trust (E-A-T) which is heavily covered in Google’s quality rater guidelines and in their blog post about core updates.

Based on what I just explained, it’s not uncommon to surface many issues on a site that’s been negatively impacted, and across a number of categories. That’s why I recommend using “The Kitchen Sink” approach to remediation, which involves surfacing all potential problems on a site and fixing as many as you possibly can (all of them if that’s possible). I’ll cover this in the case studies later in this post.

2. Relevance Adjustments

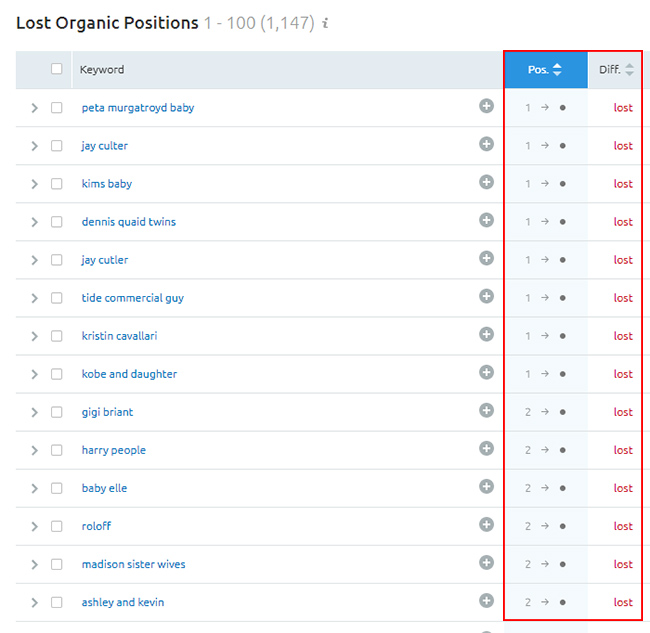

Beyond the problems I listed above, there are always relevance adjustments during core updates. That’s when rankings can drop when content just isn’t as relevant anymore for a query. As an example, you can often see this happen on news publisher sites that might have an article ranking for super-competitive head terms for a given subject (like a person’s name, a company name, movie, TV show, event, etc.)

As time goes on, that article might not be as relevant anymore and rankings drop. Those types of adjustments aren’t necessarily a bad thing. It’s just important to distinguish between relevance adjustments and when you have major problems throughout a site. And you might have a combination of both (many sites often do).

For example, see the ranking changes for head terms below for a news site:

3. “Millions of baby algorithms”

Gary Illyes from Google once explained that its core ranking algorithm is made up of “millions of baby algorithms working together to output a score.” I love that quote and it makes complete sense. This is also why you shouldn’t focus on just one or two factors. You should look at your site holistically and surface all potential problems… and then fix them all if that’s possible. That’s also why I recommend running user studies through the lens of core updates. You can learn a lot about your site when you have real people provide real feedback based on traversing your site.

4. Recovery From Core Updates

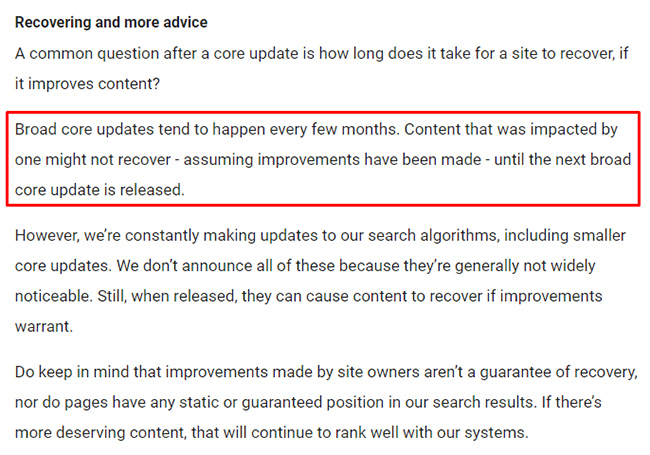

This is ultra-important to understand and it’s something Google has even confirmed in its post about core updates. If you have been negatively impacted by a core update, you (mostly) cannot see recovery from that until another core update. In addition, you will only see recovery if you significantly improve the site over the long-term. If you haven’t done enough to improve the site overall, you might have to wait several updates to see an increase as you keep improving the site. And since core updates are typically separated by 3-4 months, that means you might need to wait a while.

Here is a section from Google’s blog post about core updates:

One of the reasons it’s important to understand how this works is because some companies are implementing short-term testing of changes, and then rolling those changes back if they don’t see positive movement. That will not work, since you won’t know if those changes are helping UNTIL ANOTHER CORE UPDATE ROLLS OUT. Sure, you might see subtle changes as Google pushes many smaller updates over time, but you won’t typically see massive changes until another core update rolls out.

That means you can be spinning your wheels by testing certain things, and maybe rolling back the right changes because you don’t see short-term gains. I recommend implementing the right changes for your users and site and keeping them in place for the long-term. Significantly improve your site, your content, the user experience, and more.

5. Recent Changes Will Not Be Reflected In Core Updates

If you recently implemented a lot of changes before a core update rolled out, and either dropped or didn’t see positive impact, it’s important to understand that recent changes will often not be reflected in core updates. For example, rolling out big changes 2-3 weeks before a core update rolls out will typically not be reflected.

Google is doing a ton of evaluation over the long-term for core updates, so making a recent change before a core update rolls out is usually not the reason you saw a big drop, or even a big gain. Google’s John Mueller has explained this before. Below is my tweet about this with a link to a video of John explaining it.

So again, make the right changes and keep them in place for the long-term. It’s also worth noting that Google’s Paul Haahr, a lead ranking engineer at Google, explained to me at the Google Webmaster Conference in Mountain View that Google does a lot of evaluation in between core updates (and it can be a bottleneck that inhibits them from rolling out broad core updates more often). He also said they could potentially decouple certain algorithms from broad core updates and roll those out at a different time, but we are still looking at 3-4 updates per year in the short-term.

With those five points out of the way, let’s move on to the case studies I mentioned earlier.

May 2020 Core Update – Four Case Studies, Four Lessons

Below, I’m going to cover four cases based on sites that were impacted by Google’s May 2020 core update. Each is a unique case, with unique learnings, and my hope is site owners can learn something from each one. I could write a book about each one, but I’ll try to keep each case as concise as possible.

I’ll cover a recovery from a previous core update, a site that has (mostly) surged over time during several core updates, a major drop during the May 2020 update, and then a recovery from a non-core update during the May 2020 core update. Again, I think the cases underscore how complex core updates are, how many factors are involved, etc.

But before we jump in, there are two points I want to quickly cover. First, I wanted to thank my clients for letting me document their cases in this post. They are blinded case studies, but my clients were nice enough to let me explain more about each situation, provide data from GA and GSC, and more.

Second, I need to provide my typical disclaimer about broad core updates.

I do not work for Google. I don’t have access to its core ranking algorithm. I have been to Google Headquarters in both California and New York, and it was tempting to put a John Mueller or Martin Splitt mask on to try and speak with the search engineers, but I figured that wouldn’t end up very well. :)

Case 1 – Recovery From The March 2019 Core Update

The first case is a site that provides a unique service in the health/medical niche. The site got hammered during the March 2019 core update and dropped by 40% overnight. Like many, the site owners were extremely confused about why they dropped.

Once I started digging into the site, there were many problems I surfaced in my audit (and those problems spanned a number of topics). Remember what I said about “millions of baby algorithms” and the complexity of core updates?

Note, some of what I’ll cover below would yield an indirect effect, while other changes could yield more of a direct effect (especially when combined together). But again, the focus was to improve the site overall, and across content quality, user experience, technical SEO, and more. Google has explained many times they are looking at many factors for core updates and I think this case demonstrates that well.

User Experience

First, the site had several key UX issues, including having giant calls to action pushing down the main content throughout the site. That situation was bad on desktop, but even worse on mobile. When viewing the content on a mobile device, the entire viewport was filled with a lead capture form. I also surfaced key navigation problems on mobile which inhibited users from traversing the site via the site’s global navigation. I can’t even imagine how frustrating that could be for users.

A lead form like this one filled the viewport, above the main content:

Content Quality and Structure

Content-wise, I definitely surfaced some thin content across the site, which the site owners handled pretty quickly. This wasn’t a big problem, but was worth handling for sure. The site also combined some important content on one page that should have been on multiple pages. So instead of having specific pages that each covered a certain topic, those topics were all covered on one page trying to explain it all (while visibly revealing that content via UI elements). They (mostly) fixed that and now provide each topic on its own page.

Also, my client has worked hard to go back through a lot of their core content and update it, when needed (refreshing the content and enhancing it). In addition, they are now “on the pulse” of their target market and have brought in various departments that interact directly with the users of the site. That helps them build content that addresses current needs in a thorough way. Needless to say, this is extremely important when it comes to meeting or exceeding user expectations via high-quality content.

AMP, your first impression from Search

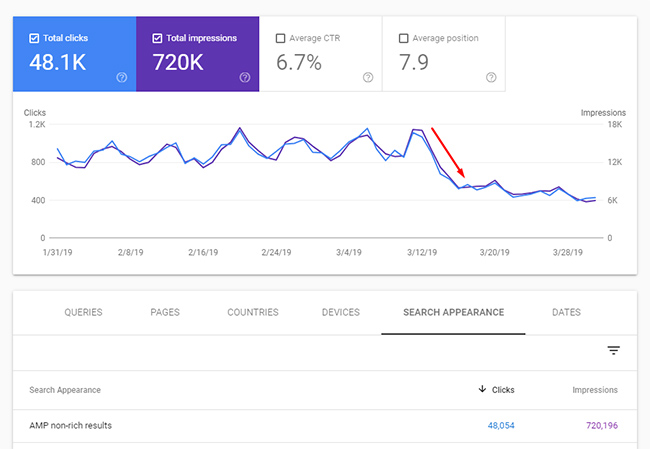

When checking GSC, you could see that many users were visiting AMP urls from the search results, so it was super-important to review those pages (usability-wise, content-wise, parity-wise, etc.) Unfortunately, the CMS limited what the site could do AMP-wise and the pages were literally barebones versions of the responsive pages. The site owners did what they could to improve that experience, although it’s still not perfect. But overall, it’s a stronger user experience (and at least has stronger branding).

When filtering by AMP in Google Search Console (GSC), you can see a significant number of users from Search visiting AMP urls. For many, this was their first impression of the site:

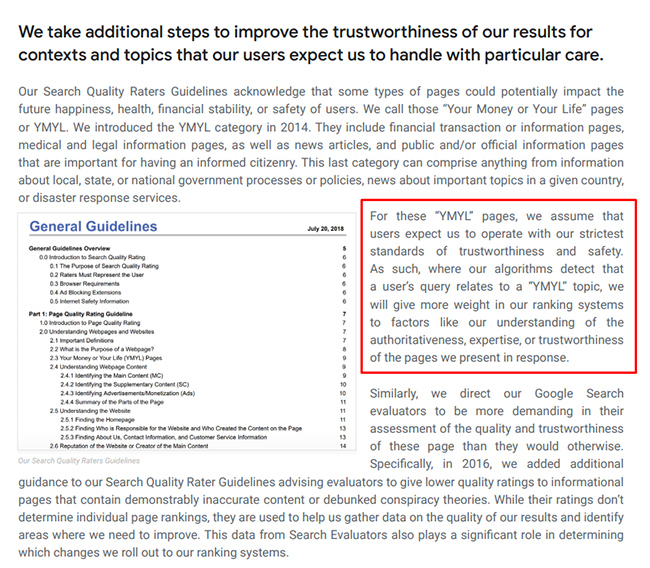

YMYL & Authority

Next, the site is in the health/medical niche so it’s categorized as “Your money or your life” (YMYL). Any site that can “impact a person’s future happiness, health, financial stability, or safety” is held to a higher standard. Since this site publishes health and medical content, and provides a service tied to the vertical, making sure that content is written by expert-level authors was extremely important.

And beyond having expert authors produce high-quality content, making sure users (and Google) know who the authors are, and understand their experience and background, was an important change. When conducting user studies, I’ve watched, and listened to, real users comment about this. The site owners focused on making a number of key changes on this front.

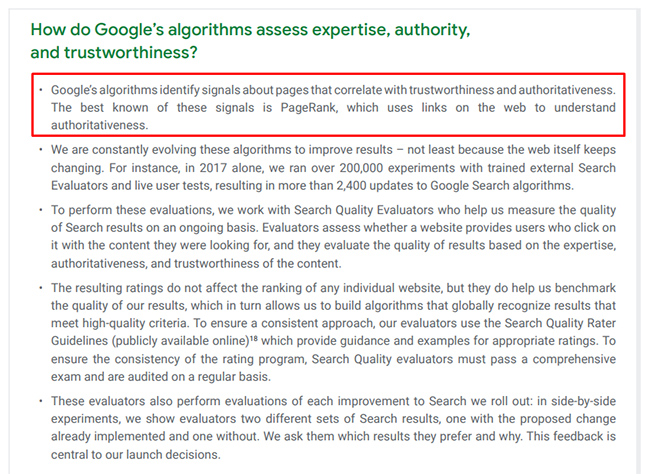

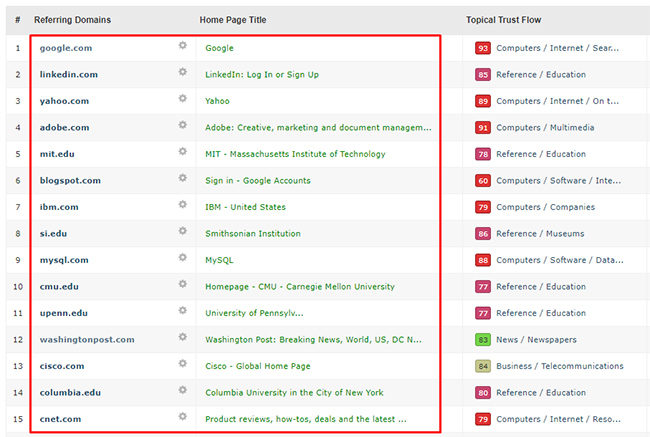

From an authority standpoint, Google has explained that for assessing “authority”, the best known signal they use is PageRank (or links from across the web). So yes, the right links matter, and especially for YMYL content.

In addition, Marie Haynes asked Google’s Gary Illyes about E-A-T at Pubcon in 2018. Gary explained that it is largely about links and mentions from well-known sites. You can see Marie’s tweet below. So again, the right links matter.

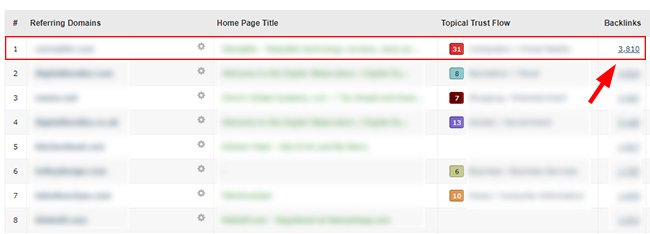

Unfortunately, the site just didn’t compare well against the competition when reviewing links and mentions from well-known sites. Actually, the overall link profile was weaker in general. I brought this up several times during the audit and explained this was not an easy or quick thing to fix. Over the past year, the site has naturally gained more links, and from some important sources. This clearly could have helped the situation, especially when combined with the other changes.

Technical SEO

There were a number of technical SEO problems as well, which spanned several categories. For example, the site’s internal linking structure was problematic, which was inhibiting key pages throughout the site from linking directly to other key pages (and that included problems with the homepage as well).

There were also canonical problems on the site, including a number of pages containing double canonical tags (each pointing to different urls). When Google sees multiple canonical tags, it can simply ignore those and select one on its own. That’s a dangerous game of “canonical roulette” to play with Google.
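To make the double canonical problem concrete, here is a minimal Python sketch of how you might flag pages that contain more than one canonical tag pointing at different urls. It only uses the standard library. The function names and the example.com urls are hypothetical and are not from the audit itself, and it only looks at the HTML you hand it (not canonicals set via HTTP headers or injected by JavaScript).

```python
from html.parser import HTMLParser


class CanonicalCollector(HTMLParser):
    """Collects the href of every <link rel="canonical"> tag in a page."""

    def __init__(self):
        super().__init__()
        self.canonicals = []

    def handle_starttag(self, tag, attrs):
        if tag != "link":
            return
        attr_map = dict(attrs)
        # rel can hold several space-separated values, so split before checking.
        rel_values = (attr_map.get("rel") or "").lower().split()
        if "canonical" in rel_values and attr_map.get("href"):
            self.canonicals.append(attr_map["href"].strip())


def find_canonicals(html):
    """Return every canonical url found in the HTML, in document order."""
    parser = CanonicalCollector()
    parser.feed(html)
    parser.close()
    return parser.canonicals


def has_conflicting_canonicals(html):
    """True when the page declares more than one distinct canonical url."""
    return len(set(find_canonicals(html))) > 1


# Hypothetical page with two canonical tags pointing at different urls.
sample_html = """
<html><head>
<link rel="canonical" href="https://example.com/page-a">
<link rel="canonical" href="https://example.com/page-b">
</head><body></body></html>
"""

print(find_canonicals(sample_html))
print(has_conflicting_canonicals(sample_html))
```

Running something like this across a crawl export is one way to find every page playing “canonical roulette” so the templates can be fixed.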

Last, but not least, the site owners had a full-on cage match with their robots.txt file. When starting the audit, I noticed that some important pages were being blocked from crawling. You definitely don’t want to block Google from crawling important pages, so my client removed those directives quickly. But later in the engagement, I noticed that the old robots.txt file was back! My client immediately dug in to find out what was going on, to add the new robots.txt file back, etc. Note, I’ve written several times about robots.txt issues, and this was just another case of bad things happening. Anyway, it’s just worth noting that they struggled with this problem over time. The issue has been fixed and the new file is currently running.
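Since the old robots.txt file came back without anyone noticing, a simple recurring check can help. Below is a rough Python sketch that tests a list of important urls against a robots.txt file using the standard library’s `urllib.robotparser`. The sample file, the urls, and the `blocked_urls` function name are all hypothetical. Also note that Python’s parser does not match Google’s robots.txt handling exactly (for example, wildcard handling differs), so treat this as a first-pass alert and confirm anything it flags in Google’s own tools.

```python
from urllib.robotparser import RobotFileParser


def blocked_urls(robots_txt, urls, user_agent="Googlebot"):
    """Return the urls that the given robots.txt content blocks for user_agent."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return [url for url in urls if not parser.can_fetch(user_agent, url)]


# Hypothetical "old" robots.txt that blocks an important section of the site.
sample_robots = """User-agent: *
Disallow: /services/
Disallow: /tmp/
"""

# Hypothetical list of urls that should always be crawlable.
important_urls = [
    "https://example.com/services/overview",
    "https://example.com/blog/post",
]

for url in blocked_urls(sample_robots, important_urls):
    print("Blocked from crawling:", url)
```

If you run a check like this on a schedule against the live file, a reverted robots.txt shows up as soon as one of your important urls lands in the blocked list.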

The Waiting Game

As additional core updates rolled out after the March 2019 update, the site would only gain or drop marginally. This wasn’t necessarily surprising since there were a lot of changes implemented from May through September and Google would need to see those changes over the long-term in order for the site to recover.

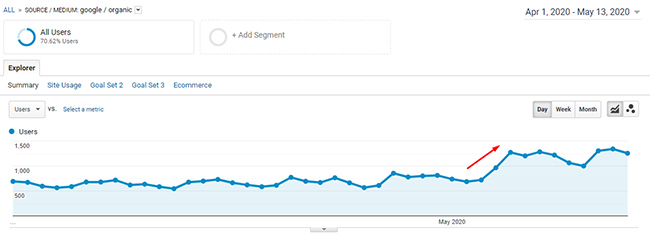

Finally, during the May 2020 core update, the site surged. It’s up 53% since the update rolled out, which is great to see. It’s not back 100% to where it was before the March 2019 update, but it’s pretty close.
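It’s worth quickly walking through the math, since percentage drops and percentage gains are not symmetrical. The sketch below is a rough illustration only. It assumes the 53% gain is measured from roughly the same level the site fell to after the 40% drop (which is an approximation, since the site moved marginally during the updates in between). The function names are my own for this example.

```python
def fraction_of_baseline(drop_pct, gain_pct):
    """Traffic after a drop and a later gain, as a fraction of the original level."""
    return (1 - drop_pct / 100) * (1 + gain_pct / 100)


def gain_needed_to_recover(drop_pct):
    """Percentage gain required to get back to the original level after a drop."""
    return (1 / (1 - drop_pct / 100) - 1) * 100


# 40% drop in March 2019, then a 53% gain during the May 2020 update.
# Assumption: the gain is measured from roughly the post-drop level.
recovered = fraction_of_baseline(40, 53)
print(f"Share of pre-drop traffic: {recovered:.1%}")
print(f"Gain needed for a full recovery: {gain_needed_to_recover(40):.1f}%")
```

Under that assumption, a 40% drop followed by a 53% gain lands at about 92% of the original level, and a full recovery from a 40% drop would take a gain of roughly 67%.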

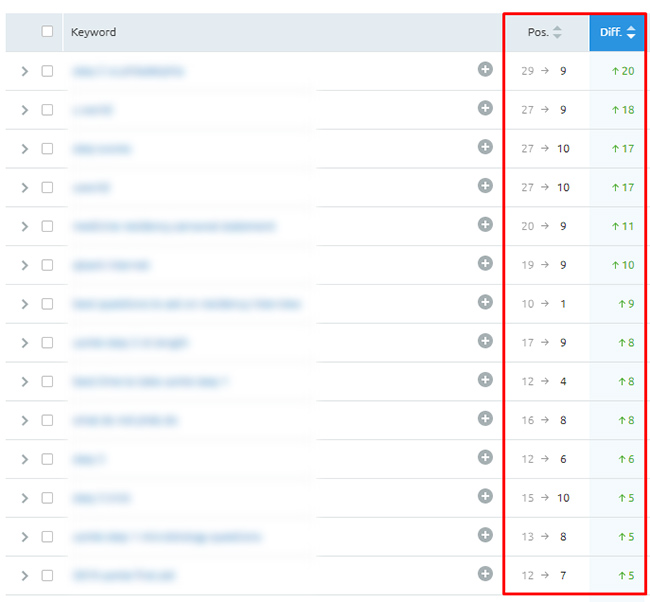

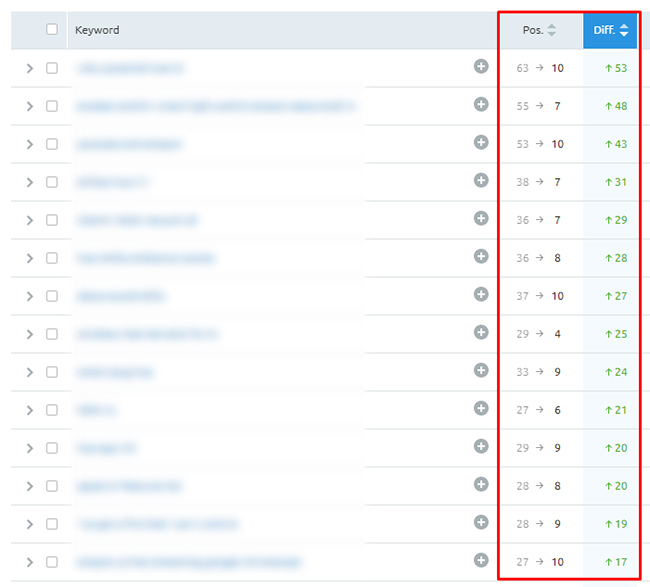

Here is a snapshot of some rankings increases for the site following the May 2020 core update. There were nice gains across many different keywords:

Case 2 – From Medieval Panda to Core Updates and Beyond

The next case I’ll cover is a long-term client of mine that has done extremely well over the years after getting hit hard by medieval Panda. Since then, they have worked hard to improve the site overall, but also to continually audit the site through the lens of core updates. It’s a large-scale, complex site in the news vertical.

The site has seen nice gains during previous core updates, but has also seen some relevance adjustments during others (with some drops). The relevance adjustments make sense as older content simply isn’t as relevant for broad queries as it once was. That’s totally fine from my standpoint (and theirs) and the main focus is to maintain strong SEO hygiene over the long-term.

Again, it’s a large-scale site with many moving parts. There are hundreds of new urls published every day, the site has millions of pages indexed, and there is an international component as well.

During audits, a number of issues tend to get nipped in the bud, which helps stop those issues from becoming bigger problems. I’ll cover some things below that have been addressed as audits are conducted several times per year.

Maintaining Strong Quality Indexation

Even though the site primarily publishes high-quality content, it has always had to deal with some content that’s lower-quality or thin. So, it’s always important to surface those lower-quality urls that slip through the cracks. Remember, this is a complex site publishing many new urls per day, so it’s not simple from a quality control perspective.

Here is an example of a page with just a paragraph that didn’t cover the subject matter thoroughly. Surfacing and handling these types of pages is critically important for this site.

Technical SEO issues that can cause quality problems

Since it’s a complex site, there are times that technical SEO problems pop up, and could roll out to many pages (thousands, tens of thousands, or even more). It’s important to continually analyze the site to surface those and fix them as quickly as possible. For example, canonical problems, render problems, meta robots tag issues, robots.txt issues, performance problems, and more.

Also, with a lot of content published per day, and based on how this site works, there is always the risk of multiple urls being published containing the same, or similar, content without the correct canonical tags set up. Over time, this can yield a lot of content splitting power across multiple urls that contain the same exact content. This can dilute the power of any given piece of content, so it’s important to catch that quickly and rectify it. Remember, it’s a huge site.

In addition, I surfaced a number of urls where canonical tags were pointing at urls that don’t resolve with 200s. So those canonical urls were 404s, redirects, etc. It ends up there was a coding issue where the urls in rel canonical were malformed. That’s never a good thing, especially on a large-scale site with millions of pages indexed.
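As an illustration of that kind of check, here is a minimal Python sketch that takes rows from a crawl (page url, canonical url, and the status code the canonical url returned) and flags canonicals that are malformed or don’t return a 200. The row format, function names, and example.com urls are assumptions for this example, and the status codes would come from whatever crawler you already use.

```python
from urllib.parse import urlsplit


def is_well_formed(url):
    """Basic sanity check: absolute http(s) url with a host and no spaces."""
    try:
        parts = urlsplit(url)
    except ValueError:
        return False
    return parts.scheme in ("http", "https") and bool(parts.netloc) and " " not in url


def flag_bad_canonicals(rows):
    """rows: iterable of (page_url, canonical_url, canonical_status_code)."""
    problems = []
    for page_url, canonical_url, status in rows:
        if not is_well_formed(canonical_url):
            problems.append((page_url, canonical_url, "malformed"))
        elif status != 200:
            problems.append((page_url, canonical_url, f"status {status}"))
    return problems


# Hypothetical crawl rows. The status is None when the url could not be requested.
crawl_rows = [
    ("https://example.com/a?ref=1", "https://example.com/a", 200),
    ("https://example.com/b", "https//example.com/b", None),
    ("https://example.com/c", "https://example.com/old-c", 301),
    ("https://example.com/d", "https://example.com/gone", 404),
]

for page, canonical, reason in flag_bad_canonicals(crawl_rows):
    print(f"{page} -> {canonical} ({reason})")
```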

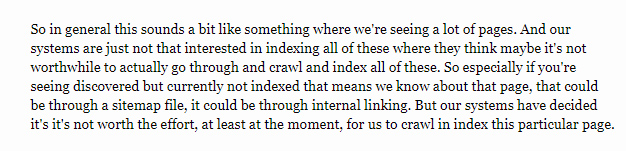

Also, there were tens of thousands of urls listed as “Discovered, not indexed”, which as John Mueller has explained, can signal quality problems or even crawl budget problems. Analyzing those urls, finding patterns, and addressing those issues was important.
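One simple way to start finding patterns in a large url export is to group the urls by site section. The Python sketch below counts urls by their first path segment. It assumes you have exported the urls to a plain list, and the function names and sample urls are hypothetical. On a real site you would probably also group by template, query parameters, or publish date.

```python
from collections import Counter
from urllib.parse import urlsplit


def first_segment(url):
    """Return the first path segment of a url, or "(root)" for the homepage."""
    segments = [part for part in urlsplit(url).path.split("/") if part]
    return segments[0] if segments else "(root)"


def count_by_section(urls):
    """Count how many urls fall under each top-level section of the site."""
    return Counter(first_segment(url) for url in urls)


# Hypothetical export of urls reported as "Discovered, not indexed".
exported_urls = [
    "https://example.com/tag/a",
    "https://example.com/tag/b",
    "https://example.com/tag/c",
    "https://example.com/author/x",
    "https://example.com/news/2020/story",
]

for section, total in count_by_section(exported_urls).most_common():
    print(section, total)
```

If one section accounts for most of the urls, that’s usually the first place to dig in.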

Google’s John Mueller about “Discovered, not indexed”:

As you can see, there are many things being surfaced during audits that are conducted throughout the year (even beyond what I’ve covered here today). For this site, maintaining strong SEO hygiene has helped it increase in visibility over time, while seeing surges during several core updates (including the May 2020 core update).

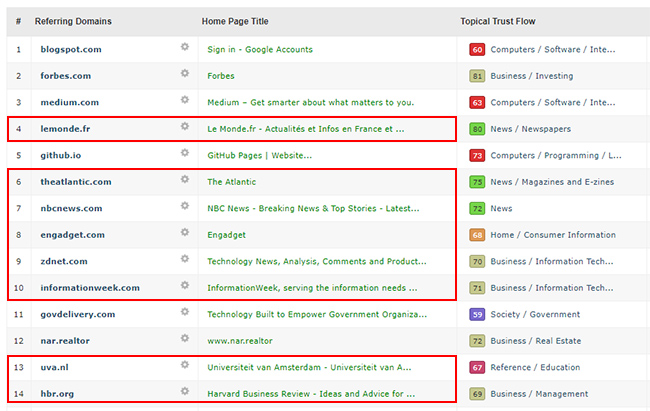

Authority – The importance of A in E-A-T

Beyond content and technical SEO, the A in E-A-T is very important (and I covered that earlier in the post). This site is definitely seen as an authoritative source of news. Their content is often referenced from some of the most powerful sites on the web. I’m sure this is helping the site with core updates, especially for news coverage.

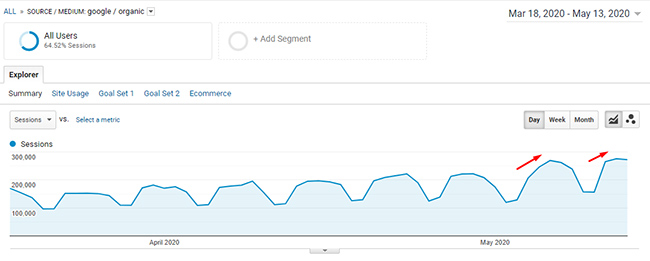

Based on the May update, the site is up 35% accounting for an additional 40-60K sessions per day from Google organic. This is after surging during the September 2019 update.

Case 3 – The Ghost of Fred And A Reminder To Continually Analyze

Next up is a site that has continually battled major algorithm updates over time, has recovered from several, including a massive surge during Fred, but just got hit hard during the May 2020 update. It’s in a tough niche, since it contains the same, or very similar, content to many other sites. I helped the site in the past and have been in close contact with the site owners since then. The site hasn’t been audited in quite some time and they quickly reached out to me after seeing a significant drop in rankings and traffic based on the May core update.

Tough Niche and The Need To Clearly Differentiate Your Site

Google’s John Mueller has covered the topic of having the same, or similar, content as many other sites several times over the years. He has explained that you need to differentiate your site as much as possible from the rest. If not, it’s very hard for Google to determine which site should rank well over the others.

Here is my tweet about the subject with a link to a video of John Mueller explaining more about the situation:

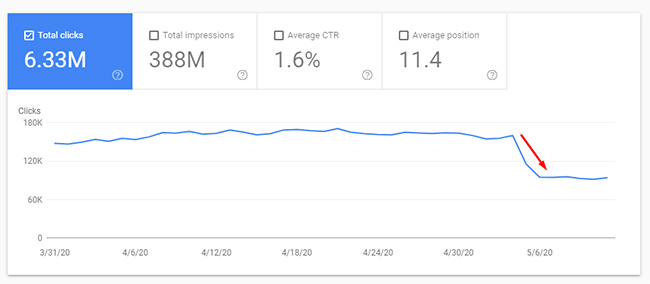

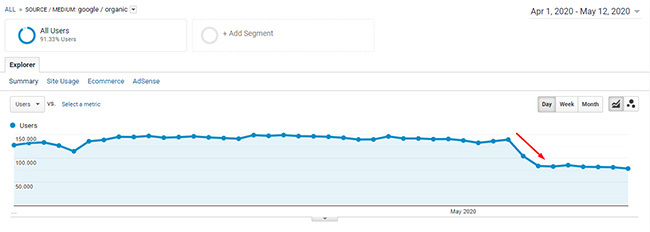

This is a case where the site has not been audited on a regular basis and a number of important problems have crept in over time. The result during the May core update was disastrous. Google organic traffic is down 40% since the update rolled out.

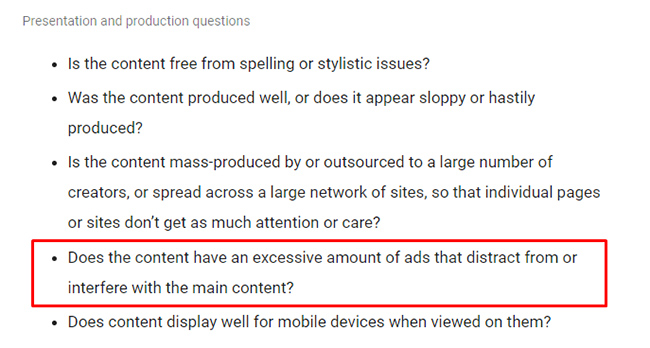

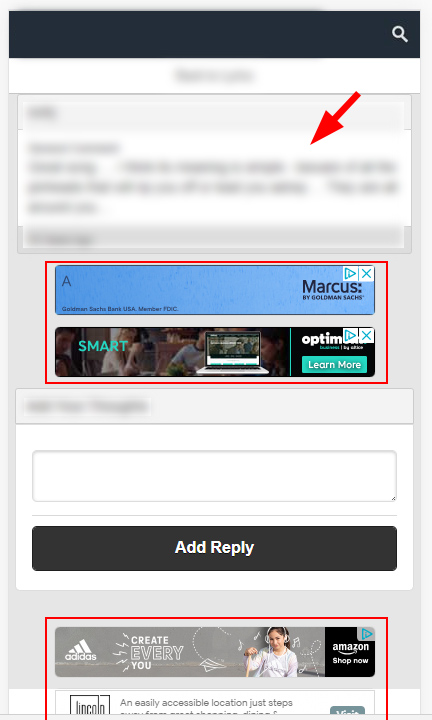

Aggressive and Disruptive Advertising

This is a problem that the site has struggled with over the years and it’s pretty aggressive again. The aggressive and disruptive advertising situation inhibits the user experience across both desktop and mobile. Ads are pushing the main content down the page, sometimes double ads are delivered in certain sections of the page, some are auto-playing video, and more. I’ve written many times about the danger of aggressive monetization tactics, and even Google has explained this in their blog post about core updates.

Quality Indexation

From a quality indexation standpoint, there is a lot of thin content across the site based on the niche. For example, thousands of pages that contain very little content, or even no content on some pages. In addition, there are also thousands of pages that shouldn’t be indexed at all that are currently being indexed. They aren’t the canonical versions of the pages, but are still being indexed. Those should definitely be nuked.
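To surface candidates for review at scale, a rough word-count pass can help. The Python sketch below strips tags and flags pages whose visible text falls under a threshold. The 150-word threshold is an arbitrary assumption for this example (Google does not publish a word-count cutoff, and short pages are not automatically low-quality), and the function names and sample pages are hypothetical. Use it to build a list for manual review, not as a verdict.

```python
from html.parser import HTMLParser


class TextExtractor(HTMLParser):
    """Collects text while skipping script, style, and noscript content."""

    SKIPPED = {"script", "style", "noscript"}

    def __init__(self):
        super().__init__()
        self._skip_depth = 0
        self.chunks = []

    def handle_starttag(self, tag, attrs):
        if tag in self.SKIPPED:
            self._skip_depth += 1

    def handle_endtag(self, tag):
        if tag in self.SKIPPED and self._skip_depth > 0:
            self._skip_depth -= 1

    def handle_data(self, data):
        if self._skip_depth == 0:
            self.chunks.append(data)


def word_count(html):
    """Count whitespace-separated words in the text content of the HTML."""
    parser = TextExtractor()
    parser.feed(html)
    parser.close()
    return len(" ".join(parser.chunks).split())


def flag_thin_pages(pages, min_words=150):
    """pages: dict of url -> html. Returns urls under min_words (assumed cutoff)."""
    return sorted(url for url, html in pages.items() if word_count(html) < min_words)


# Hypothetical pages: one with real content, one nearly empty, one empty.
sample_pages = {
    "https://example.com/full": "<p>" + "word " * 200 + "</p>",
    "https://example.com/thin": "<h1>Title</h1><p>Just one short line.</p>",
    "https://example.com/empty": "<script>var x = 1;</script>",
}

print(flag_thin_pages(sample_pages))
```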

Here are some examples of thin content from the site. There are thousands of pages like this across the site:

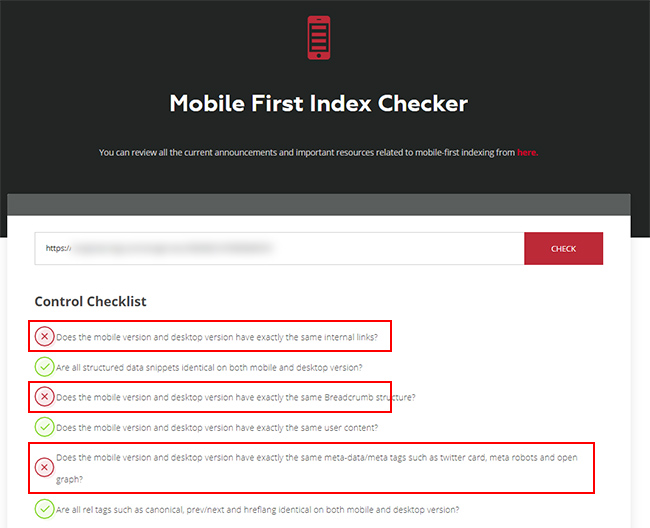

Separate Mobile URLs – Lack Of Parity

In addition, there are separate mobile urls and those pages do not match the desktop pages across several key elements including links, navigation, and some content. As you can guess, the site has not been moved to mobile-first indexing yet and most of their traffic is via mobile.
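A basic parity check can be scripted as well. The Python sketch below compares the links found in the desktop version of a page with the links found in its separate mobile version and lists what is missing on mobile. It compares url paths only, since separate mobile urls usually sit on a different hostname. That choice, the function names, and the sample HTML are all assumptions for this example, and it doesn’t cover parity for content, structured data, or meta tags.

```python
from html.parser import HTMLParser
from urllib.parse import urlsplit


class LinkCollector(HTMLParser):
    """Collects the path of every <a href> found in a page."""

    def __init__(self):
        super().__init__()
        self.paths = set()

    def handle_starttag(self, tag, attrs):
        if tag != "a":
            return
        href = dict(attrs).get("href")
        if href:
            # Compare by path so m.example.com and www.example.com links match.
            self.paths.add(urlsplit(href.strip()).path or "/")


def extract_link_paths(html):
    """Return the set of link paths found in the HTML."""
    parser = LinkCollector()
    parser.feed(html)
    parser.close()
    return parser.paths


def links_missing_on_mobile(desktop_html, mobile_html):
    """Paths linked from the desktop page but not from the mobile page."""
    return sorted(extract_link_paths(desktop_html) - extract_link_paths(mobile_html))


# Hypothetical desktop and mobile versions of the same page.
desktop_page = """
<nav><a href="https://www.example.com/category/a">A</a>
<a href="https://www.example.com/category/b">B</a></nav>
<a href="https://www.example.com/related/post">Related</a>
"""
mobile_page = """
<nav><a href="https://m.example.com/category/a">A</a></nav>
"""

print(links_missing_on_mobile(desktop_page, mobile_page))
```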

So, the site has an aggressive ad situation mixed with mobile problems on a site that contains the same, or very similar, content as many other sites on the web. Then add a thin content problem and that makes the situation even worse. It’s not a great recipe.

The site owners are already working on changes, which is great. The tough part is they will have to wait for another core update to see recovery (or possibly multiple core updates).

Case 4 – Recovery From A Non-Core Update During The May 2020 Core Update

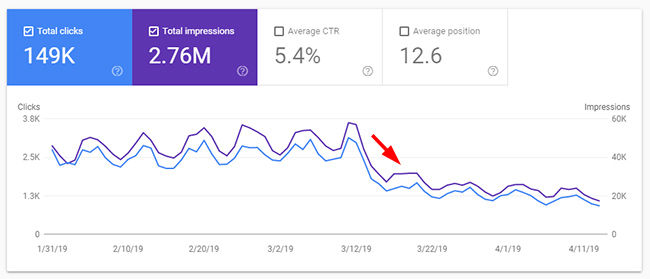

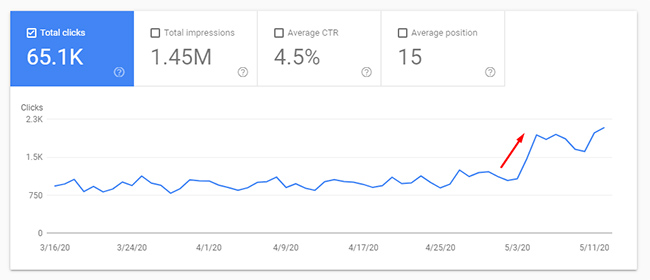

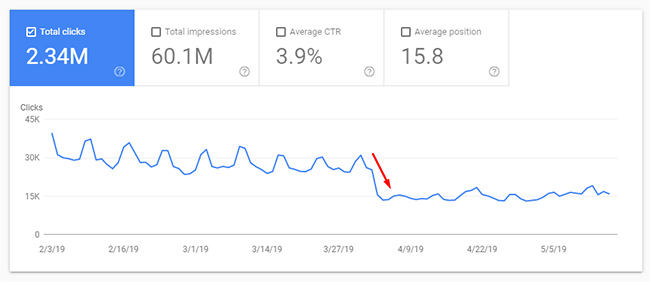

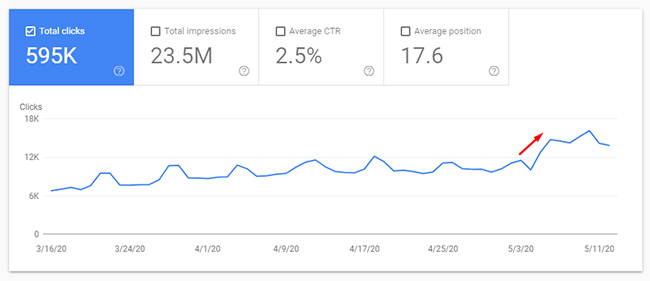

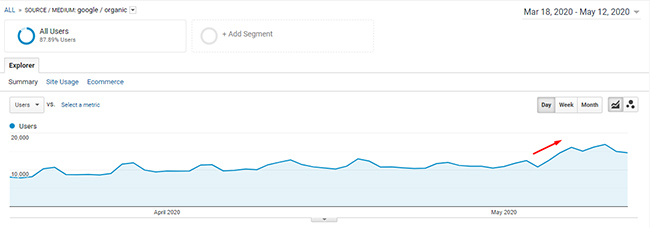

The last case I’ll cover is the most interesting one in my opinion. The site got smoked by an unconfirmed update on April 2, 2019, and when I say “smoked”, I mean it was hit really, really hard… Check the trending below from GSC and GA:

It’s an affiliate site that got blindsided and dropped by a whopping 44% overnight. The site owners reached out to me soon after, but I couldn’t start helping them until the summer of 2019. That April update wasn’t a big one overall (there weren’t many sites impacted across the web), but there were some I found (with very similar extreme drops). Also, some of those were affiliate sites as well. Just an interesting side note.

First, the site now produces high-quality, and helpful, content about the products they are covering. So, if you checked much of the site’s current content, you would definitely say it’s high-quality content. But the devil is in the details, which is why it’s always important to heavily, and thoroughly, analyze a site. That’s when you can start to surface problems that might not be apparent at first glance.

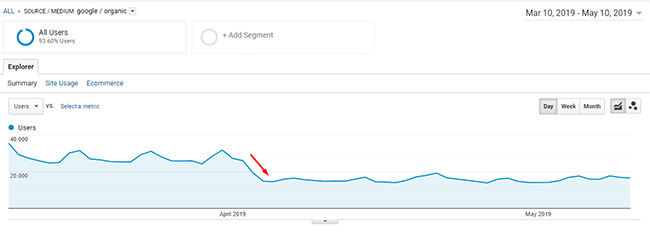

Aggressive Affiliate Setup

First, it was hard to ignore the aggressive affiliate situation on the site. The pages had large and disruptive affiliate modules woven throughout each page. And some were located at the top, pushing the main content down the page (especially on mobile).

I asked my client more about the affiliate situation, and some interesting bits of information came from that conversation. For example, it ends up they implemented a much more aggressive affiliate setup in January, just a few months before the April drop. Therefore, the site made a clear move from an acceptable user experience to an aggressive and disruptive one (in my opinion).

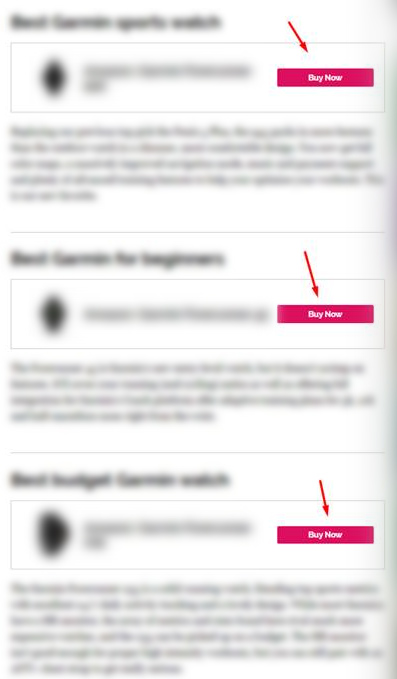

Lower-Quality/Thin Content

Next, I did surface pages that were thin or lower-quality. This wasn’t a huge problem for the site, but it was definitely worth addressing. As Google has explained before, every page indexed is taken into account when evaluating quality.

For example, there were some articles that were only a paragraph or two in length that didn’t thoroughly cover the subject. Also, Google’s John Mueller has explained several times that affiliate sites are fine from Google’s standpoint SEO-wise, but affiliate sites with low-quality or thin content are NOT fine (and that’s usually the problem when they experience drops in traffic).

Here is a thin article I surfaced during the audit:

Cutting (Redirect) Ties and Killing Mutual Links

I can’t explain too much on this front, but there is another site they own that covers a different vertical. There were thousands of links from that sister site to this one and many were followed. There were also redirects set up from a number of older urls on that site to the one I’m covering here. Also, both sites were hit on 4/2/19. Yep, it was an interesting case for this company.

So, the site owners finally made the decision to remove all ties from that second site to this one. All links and redirects were removed during the engagement. Hard to say how much this impacted the situation, but this site is surging during the May core update, and the other site has increased as well. It’s just an interesting side note.

Example of links from the sister site to this one. All were nuked, including all redirects from the sister site to urls on this site:

Nuked Comments

Since user comments are seen as part of the overall page content, they can drag quality down if they aren’t heavily moderated and high-quality. That’s why it’s important to know how Google is handling your comments from a rendering and indexing standpoint. Based on the current state of commenting on the site, in addition to technical problems with the comments, the site owners decided to nuke all comments from all pages.

E-A-T

The site has naturally built some very strong links over time, which again, can help greatly with building authority. Over the past year, they have continued to build links and mentions from well-known sites. Like the other examples above, I’m sure this has helped their situation.

Also, the site has strong writers that cover the niche, so making sure users, and Google, understood who was writing the content was important. Several changes were implemented on that front.

And beyond what I mentioned already, I was surfacing a number of technical SEO problems along the way. The site owners were fixing those quickly as I surfaced them.

I can’t cover everything that was surfaced and fixed, but I think you get the picture. There were a number of important problems that were riddling the site. One of my emails to the site owners summed up the situation nicely (written during the engagement). I’ve included that paragraph below. It’s important to understand that there’s never one smoking gun. Instead, there’s typically a battery of them. This was a great example of that:

“It could very well be multiple issues working together that could be causing problems. E.g. aggressive affiliate marketing + thin content + technical SEO issues causing quality issues + everything else I’ve sent along. And… then what I haven’t sent yet. I’m not done analyzing the site.”

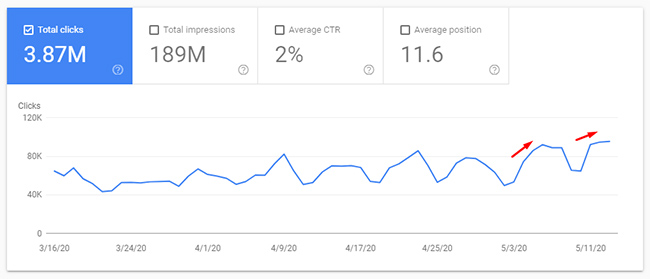

So the site owners moved to fix as much as they could over time. They definitely implemented many of the changes I recommended during the engagement. Since the site had been impacted by a non-core update on April 2, 2019, we were eager to see how it was impacted during future core updates. Then the May core update rolled out and the site started to surge. It was great to see.

The site is up 36% since the update started rolling out and their rankings have shot up for many keywords across categories. And that includes gaining a number of featured snippets (which can happen when you are rewarded during a core update). They aren’t back to where they were before April of 2019, but they will take a 36% increase for sure. :)

I have provided GSC and GA data below, in addition to a snapshot of rankings that increased after the update:

Core Updates: Some Final Tips and Recommendations

- “Millions of baby algorithms” – Remember, Google’s Gary Illyes explained that its core ranking algorithm is made up of “millions of baby algorithms” working together to output a score. Don’t try and look for that one thing that’s causing a problem. Surface all potential problems and fix them all, if possible. Use the “kitchen sink” approach to remediation that I’ve covered many times.

- Objectively analyze your site. And if you can, run a user study through the lens of core updates. I wrote a case study about that last year that explained how it works, what you can learn, etc. And don’t just have a few people close to you go through the site (like your spouse, kids, or coworkers). Have objective third-parties review the site and give real feedback. That’s how you can improve.

- Understand the key points about core updates that I covered earlier regarding recovery, recent changes not impacting core updates, Google looking to see long-term, significant improvement, and more. This is definitely not a “band-aid” situation. You need to dig deep and tackle big issues on the site from content to UX to advertising to technical SEO, and more.

- Google’s Documentation – Read Google’s blog post about core updates and then read Google’s quality rater guidelines (QRG) several times. The blog post contains a lot of good information about core updates directly from Google, while the QRG contains 168 pages of SEO gold. The QRG doesn’t map one to one with Google’s algorithms, but it sure gives you some amazing pieces of information about what Google deems low versus high-quality.

- Don’t roll back changes too fast. I explained earlier that you can only recover from a core update during a subsequent core update (as confirmed by Google and based on what I’ve seen many times while helping companies). Implement the right changes for your users and site, and keep them in place over the long-term. If not, you might roll back the right changes after not seeing short-term gains (and miss the long-term gains you could have seen during future core updates).

Summary – Core updates are complex.

I hope it was helpful to read through the four case studies I covered in this post. I think they demonstrate the various ways in which a core update can impact a site, the types of problems that can potentially cause negative impact, and what sites can do to recover.

And above all, the cases demonstrate how complex Google’s core updates are. So, if you have been negatively impacted by the May 2020 core update, I would begin to objectively analyze the site soon. And then work to significantly improve the site over the long-term. That’s exactly what Google wants to see. Good luck.

GG