{Updated on April 18, 2016 to cover the latest changes in Google Search Console (GSC).}

Any marketer focused on SEO will tell you that it’s sometimes frustrating to wait for Googlebot to recrawl an updated page. The reason is simple: until Googlebot recrawls the page, the old content will show up in the search results. For example, imagine someone added content that shouldn’t be on a page, and Google has already indexed that new content. Since it’s an important page, you don’t want to take the entire page down. In a situation like this, you would typically update the page, resubmit your XML sitemap, and hope Googlebot stops by soon. As you can guess, that doesn’t make anyone involved with the update very happy.

For some companies, Googlebot visits their website multiple times per day. For others, it can take much longer for a page to be recrawled. So how can you make sure that a recently updated page gets into Google’s index as quickly as possible? Google has you covered. Google Search Console (GSC) includes a tool called Fetch as Google that you can use for exactly this purpose. Let’s explore Fetch as Google in greater detail below.

Fetch as Google and Submit to Index

If you aren’t using Google Search Console (GSC), you should be. It’s an incredible resource offered by Google that enables webmasters to receive data directly from Google about their verified websites. Google Search Console also includes a number of valuable tools for diagnosing website issues. One of the tools is called Fetch as Google.

The primary purpose of Fetch as Google is to submit a URL and test how Google crawls and renders the page. This can help you diagnose issues with that URL. For example, is Googlebot not seeing the right content, or is the wrong header response code being returned? You can also use Fetch and Render to see how Googlebot actually renders the content (much as a typical browser would). This is extremely important, especially for understanding how Google handles your content on mobile devices.
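If you want a rough first look at the response code before opening Search Console, a short script can request the page and report the status it gets back. The Python sketch below is only an approximation and not a substitute for Fetch as Google: it runs from your own machine rather than from Google’s crawlers, it does not render the page, and the `check_status` helper is hypothetical. The Googlebot user-agent string is an assumption; check Google’s crawler documentation for the current value.

```python
import urllib.error
import urllib.request

# Assumed desktop Googlebot user-agent string; verify against Google's docs.
GOOGLEBOT_UA = (
    "Mozilla/5.0 (compatible; Googlebot/2.1; +http://www.google.com/bot.html)"
)


def check_status(url: str, user_agent: str = GOOGLEBOT_UA, timeout: float = 10.0):
    """Hypothetical helper: return (status_code, final_url) for a GET request.

    urllib follows redirects automatically, so final_url shows where the
    request ended up. Error responses (404, 500, ...) are returned, not raised.
    """
    request = urllib.request.Request(url, headers={"User-Agent": user_agent})
    try:
        with urllib.request.urlopen(request, timeout=timeout) as response:
            return response.status, response.geturl()
    except urllib.error.HTTPError as err:
        # HTTPError carries the status code of the error response.
        return err.code, err.geturl()
```

For example, `check_status("https://www.example.com/updated-page/")` would tell you whether the page answers with a 200 or with something unexpected such as a 404 or a redirect, which is worth fixing before you submit the page to the index.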

But those aren’t the only uses for Fetch as Google. Google also lets you submit the URL to its index right from the tool itself. You can submit up to 500 URLs per month via Fetch as Google, which should be sufficient for most websites. This is a great solution when you have updated a webpage and want that page refreshed in Google’s index as quickly as possible. In addition, Google provides an option for submitting the URL and its direct links to the index. This submits not only the page at hand, but also the other pages that URL links to. You can only do this 10 times per month, so make sure you need it before you use it!
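Since the two submission options have very different limits, it can help to keep track of how many you have used if several people on a team share one Search Console property. The Python sketch below is a hypothetical bookkeeping helper, not part of Search Console: Google enforces the quotas on its side, the limits are the ones stated in this post and may change, and treating the quota as a calendar month is an assumption.

```python
from collections import Counter
from dataclasses import dataclass, field

# Limits as described in this post (April 2016); Google may change them.
MONTHLY_LIMITS = {"url_only": 500, "url_and_direct_links": 10}


@dataclass
class SubmissionLog:
    """Hypothetical tracker for Submit to Index usage per calendar month."""

    used: Counter = field(default_factory=Counter)

    def remaining(self, month: str, mode: str) -> int:
        # Submissions still available for this month ("YYYY-MM") and mode.
        return MONTHLY_LIMITS[mode] - self.used[(month, mode)]

    def record(self, month: str, mode: str) -> None:
        # Refuse to record a submission once the assumed limit is reached.
        if self.remaining(month, mode) <= 0:
            raise RuntimeError(f"Monthly quota for {mode!r} used up in {month}")
        self.used[(month, mode)] += 1
```

Calling `log.record("2016-04", "url_and_direct_links")` before each “URL and its direct links” submission makes it obvious when only a few of those 10 requests are left for the month.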

Let’s go through the process of using Fetch as Google to submit a recently updated page to Google’s index. I’ll walk you step by step through the process below.

How to Use Fetch as Google to Submit a Recently Updated Page to Google’s Index

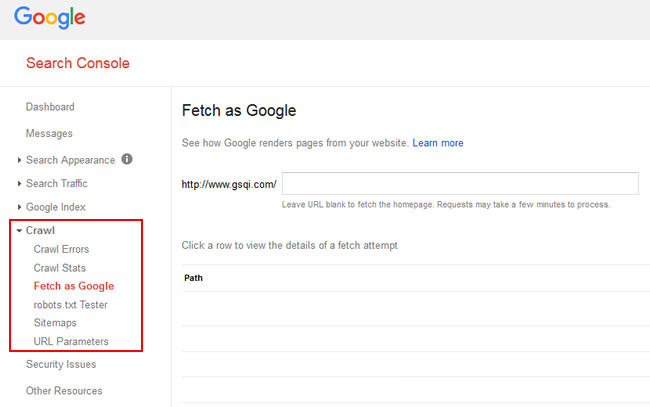

1. Access Google Search Console and Find “Fetch as Google”

You need a verified website in Google Search Console in order to use Fetch as Google. Sign into Google Search Console, select the website you want to work on, and expand the “Crawl” section in the left-side navigation. Then click the link for “Fetch as Google”.

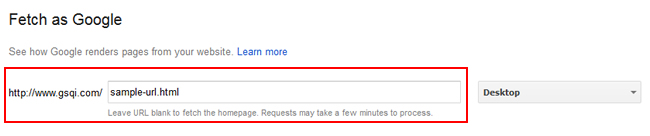

2. Enter the URL to Fetch

You will see a text field that begins with your domain name. This is where you add the URL of the page you want submitted to Google’s index. Enter the URL and leave the default Googlebot type set to “Desktop”, which uses Google’s standard web crawler (versus one of its mobile crawlers). Then click “Fetch”.

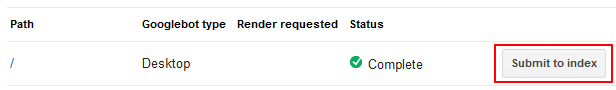

3. Submit to Index

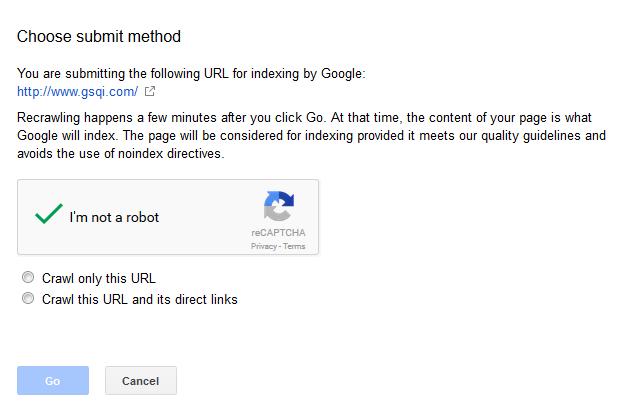

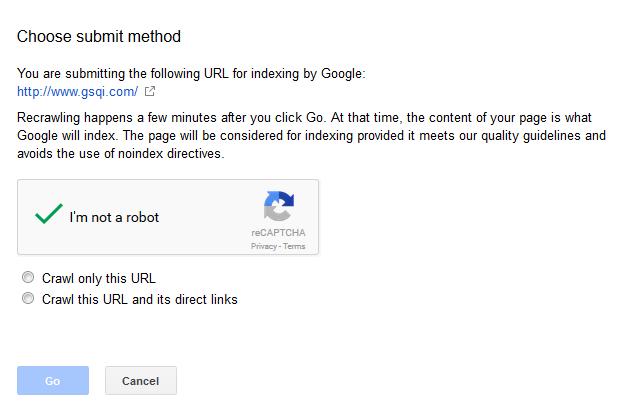

Once you click Fetch, Google will fetch the page and provide the results below. At this point, you can view the status of the fetch and click through that status to learn more. But, you’ll notice another option next to the status field that says, “Submit to index”. Clicking that link brings up a dialog box asking if you want just the url submitted or the url and its direct links. Select the option you want and then click “Go”. Note, you will also have to click the captcha confirming you are human. Google added that in late 2015 based on automated abuse it was seeing from some webmasters.

A Successful Fetch:

The Submit to Index Dialog Box:

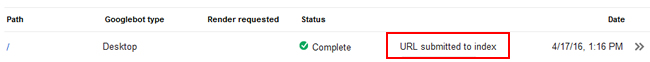

4. Submit to Index Complete

Once you click “Go”, Google will display a message confirming that your URL has been submitted to the index.

That’s it! You just successfully submitted an updated page to Google’s index.

Note that this doesn’t mean the page will be updated in the index immediately. It can take a little time, but I’ve seen it happen pretty quickly (sometimes in just a few hours). The update might not happen as quickly for every website, but it should still be quicker than waiting for Googlebot to recrawl your site. I would bank on a day or two before you see the new page in Google’s cache (and the updated content reflected in the search results).

Expedite Updates Using Fetch as Google

Let’s face it, nobody likes waiting, especially when you have updated content that you want indexed by Google! If you have a page that’s been recently updated, I recommend using Fetch as Google to get it refreshed in the index as quickly as possible. It’s easy to use, fast, and can also submit the URLs linked from the page at hand. Go ahead, try it out today.

GG