It can be extremely frustrating to experience a slow decline in rankings and organic search traffic. Could it be an algorithm update, technical SEO problems, or something else? The fact of the matter is that any number of serious problems could be causing the drop. And unless you can isolate the problem, it’s hard to rectify. When there’s confusion, it’s easy to implement changes that either have no impact at all or make the situation worse. It’s not good, to say the least.

The Call About A Decline in Google Traffic + A Quick Health Check

After my last Search Engine Land column about robots.txt problems and a slow decline in organic search traffic, a company reached out to me about a similar decline. They just couldn’t understand what was going on. They explained that they were not SEO-savvy, that they had made some site changes over the past twelve months, and that they simply had no idea why their rankings had dropped.

As you can probably guess, when I heard “site changes,” I decided to do a quick health check of their top landing pages from organic search. Similar to the case study I wrote on Search Engine Land, it’s important to ensure the top landing pages from organic search still resolve properly and aren’t experiencing technical or content-related problems.

But First, A Clean Search History (Algo-wise)

One of the first things I do when helping a new client is perform a search history analysis. I wrote an entire post about it on Search Engine Land, and I highly recommend checking it out. In a nutshell, I match Google organic search trending with both confirmed and unconfirmed algorithm updates. It helps everyone involved better understand the impact over time and which algorithms could be causing problems. It’s a relatively quick deliverable, but it sets the stage for a deep audit and analysis.

I was specifically looking to see whether the drop in traffic lined up with any algorithm updates from 2015. As it turns out, the search history analysis did not reveal any major hits during the various algorithm updates I have tracked. So the decline wasn’t from Panda, Phantom 2, the mobile-friendly update, or any of the quality updates that rolled out in 2015. That finding, along with the “site changes” comment from earlier, led me to think there could be technical SEO problems on the site. My next step was to perform the quick health check mentioned earlier.
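A search history analysis is mostly visual, but its core comparison can be sketched in code. This is a minimal sketch, assuming you have daily organic sessions as a date → sessions mapping and your own list of update names and dates (the updates and traffic in the usage are made up). It compares average traffic in the week starting on each update date with the week before and flags drops beyond a threshold; the 7-day window and -15% threshold are arbitrary assumptions. A slow decline like the one in this case will usually not trip it, which is itself a hint to look elsewhere.

```python
from __future__ import annotations

from datetime import date, timedelta
from statistics import mean


def pct_change_around(traffic: dict[date, int], pivot: date, window: int = 7) -> float | None:
    """Percent change in mean daily sessions: `window` days from `pivot` vs. the `window` days before it."""
    before = [traffic[d] for d in (pivot - timedelta(days=i) for i in range(1, window + 1)) if d in traffic]
    after = [traffic[d] for d in (pivot + timedelta(days=i) for i in range(window)) if d in traffic]
    if not before or not after or mean(before) == 0:
        return None  # not enough data on one side of the date
    return (mean(after) - mean(before)) / mean(before) * 100


def flag_update_hits(traffic, updates, window=7, threshold=-15.0):
    """Return (name, date, pct_change) for each update followed by a drop at or below `threshold` percent."""
    hits = []
    for name, day in updates:
        pct = pct_change_around(traffic, day, window)
        if pct is not None and pct <= threshold:
            hits.append((name, day, round(pct, 1)))
    return hits


# Usage with made-up data: flat traffic that falls 30% on day 15.
start = date(2015, 1, 1)
traffic = {start + timedelta(days=i): (1000 if i < 15 else 700) for i in range(30)}
updates = [("Hypothetical update A", start + timedelta(days=5)),
           ("Hypothetical update B", start + timedelta(days=15))]
print(flag_update_hits(traffic, updates))
```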

Crawling Top Landing Pages From Organic Search – Bingo

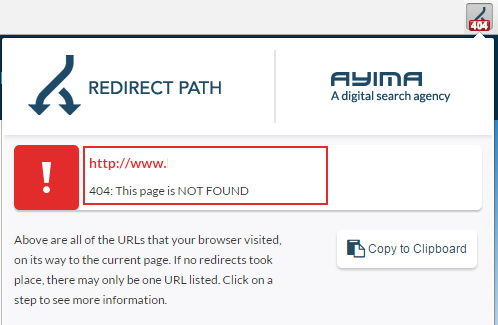

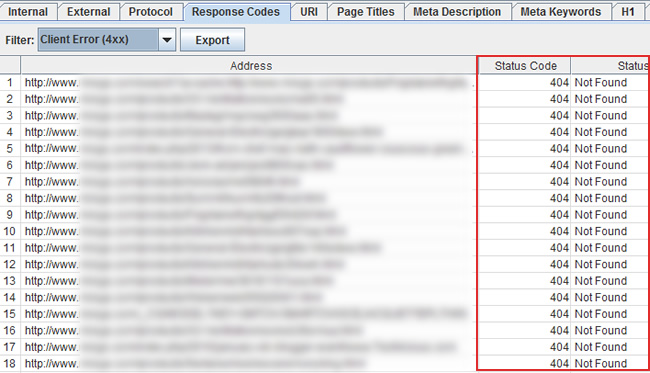

So I quickly jumped into Google Search Console, Google Analytics, and Bing Webmaster Tools to check out the top landing pages from organic search (from before the drop started). After manually checking a few dozen, I noticed some strange things. Some resolved fine, while others had various issues: 404s, 500s, title and content issues, a few weird redirects, and other miscellaneous problems. And that was from checking just thirty to forty urls.

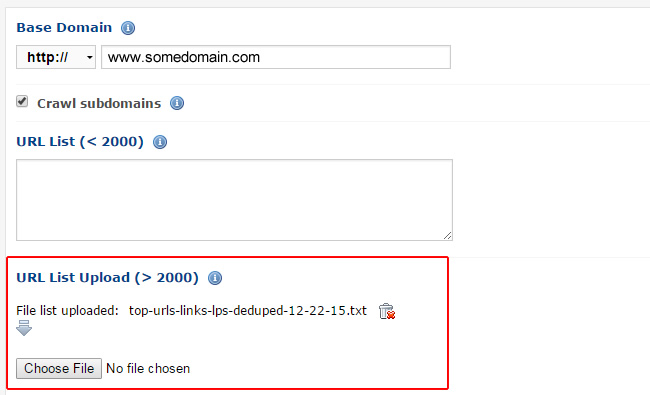

It was time for a crawl. Pulling landing page data from GSC, GA, and BWT yielded about 12K deduped urls. I scheduled two crawls of those urls and went back to checking urls manually. I had a feeling that checking all of the urls in bulk would yield some answers.
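Merging and deduplicating the GSC, GA, and BWT exports is easy to script. This is a minimal sketch, assuming each export has already been reduced to a list of absolute URLs. The normalization rules (lowercasing the scheme and host, dropping #fragments, treating an empty path as "/") are assumptions; paths and query strings are left untouched because they can be case-sensitive.

```python
from urllib.parse import urlsplit, urlunsplit


def normalize(url: str) -> str:
    """Lowercase scheme and host, drop the #fragment, and use "/" for an empty path."""
    parts = urlsplit(url.strip())
    return urlunsplit((parts.scheme.lower(), parts.netloc.lower(), parts.path or "/", parts.query, ""))


def merge_and_dedupe(*sources: list[str]) -> list[str]:
    """Combine URL lists (e.g. GA, GSC, BWT), keeping first-seen order and dropping duplicates."""
    seen: dict[str, None] = {}  # a dict preserves insertion order, unlike a set
    for source in sources:
        for url in source:
            if url.strip():
                seen.setdefault(normalize(url), None)
    return list(seen)


ga = ["https://www.example.com/a", "https://WWW.example.com/a#top"]
gsc = ["https://www.example.com/b", "https://www.example.com/a"]
bwt = ["https://www.example.com/b", "https://www.example.com"]
urls = merge_and_dedupe(ga, gsc, bwt)
print(len(urls), urls)  # this list is what gets uploaded to the crawler
```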

When A Health Check and Quick Crawl Yield Pure Gold

Once the crawl completed, it was clear why the site was experiencing a drop in organic search traffic. Many of the pages that were once ranking were not resolving properly. Just like I found during the spot checks I performed earlier, the same problems were showing up in bulk now.

For example, I surfaced 404s, 500s, pages literally without content, weird redirects to unrelated pages, etc. And when pages don’t resolve properly, they have little chance of maintaining strong rankings over time. That led to the decline the company was experiencing.

I sent my findings over, and my client is now working with their technical team to better understand how this happened. Some of the problems look like human error, while others were related to their CMS. The combination led to many pages not resolving properly, and that led to a drop in rankings and organic search traffic. It’s a great example of how a search history analysis, a health check, and a quick crawl of top landing pages can yield important SEO findings.

I don’t know if the company can recover all of the lost rankings and traffic at this point, but uncovering the core problem is a great start. They now have a smoking gun (which you don’t always have SEO-wise). Let’s face it, you need to know what’s plaguing the site in order to rectify those problems. And only then can you begin to recover rankings and traffic.

Now It’s Your Turn To Gather, Crawl, Freak and Fix

Some of you might be reading this post and finding the story about a decline in traffic over time extremely familiar. If that’s the case, then I highly recommend following the approach I took with this case and running a quick health check of top landing pages. Those urls may be fine on your site, but they might not be. It’s always smart to run a scan like this from time to time (especially when you see a drop in traffic).

I recommend starting with a search history analysis, identifying any drops that line up with algorithm updates, and documenting your findings. After that, gather and crawl your top landing pages from organic search (including those from before the drop started). I have provided a numbered list below of the steps involved.

How To Run A Health Check of Top Landing Pages:

1. Gather Your Intel

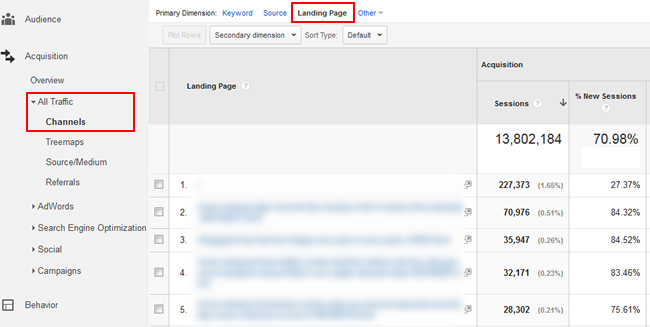

Head over to Google Analytics and export all landing pages from organic search. I wouldn’t limit this to Google organic, but you can if you want. To get all landing pages from organic search (without sampling), open the Channels report, click Organic Search, and then set the primary dimension to Landing Page.
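One wrinkle: GA reports landing pages as paths (for example `/blog/post/`) rather than full URLs, so they need the hostname added before they can be crawled. This sketch assumes a CSV export with a `Landing Page` column, possibly preceded by `#` comment lines; adjust the column name and the origin (`https://www.example.com` is a placeholder) to match your own export. Rows that aren’t paths, such as `(not set)` or a totals row, are skipped.

```python
import csv
from urllib.parse import urljoin


def ga_landing_pages_to_urls(csv_text: str, origin: str, column: str = "Landing Page") -> list[str]:
    """Turn landing-page paths from a GA CSV export into absolute URLs on `origin`."""
    # Assumption: comment lines start with '#'; blank lines are dropped so the header row comes first.
    lines = [line for line in csv_text.splitlines() if line.strip() and not line.startswith("#")]
    urls = []
    for row in csv.DictReader(lines):
        path = (row.get(column) or "").strip()
        if path.startswith("/"):  # skips "(not set)", totals rows, and other non-path values
            urls.append(urljoin(origin, path))
    return urls


export = """# ----------------------------------------
# Landing pages (example export)
# ----------------------------------------

Landing Page,Sessions
/,120
/blog/post-1?ref=x,45
(not set),3
,168
"""
print(ga_landing_pages_to_urls(export, "https://www.example.com"))
```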

2. Fire Up Google Search Console (GSC) and Export Top Landing Pages

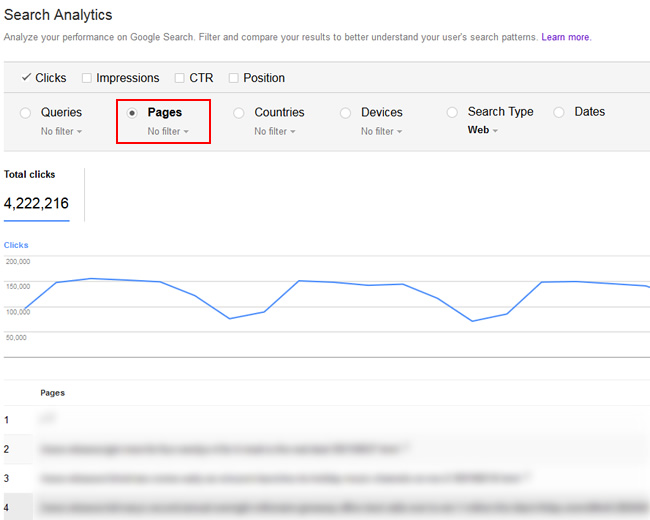

Note, you can only export the top 1,000 urls for the site via the UI. That said, there’s a workaround. If you filter the report while grouping by Pages, you can isolate specific urls or directories on your site, and when you export that filtered report, it will contain just the filtered data. So you can get many more than 1,000 urls, but it involves some manual work.
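Stitching the filtered exports back together, and spotting which filters still hit the cap, can be sketched as follows. It assumes you have saved one list of URLs per filter (the directory names in the usage are hypothetical) and treats any export that returns 1,000 rows as probably truncated, meaning that filter should be split into narrower ones.

```python
EXPORT_CAP = 1_000  # rows per UI export, per the limit described above


def merge_filtered_exports(exports: dict[str, list[str]], cap: int = EXPORT_CAP) -> tuple[list[str], list[str]]:
    """Merge per-filter exports; return (unique URLs, filters whose export likely hit the cap)."""
    merged: list[str] = []
    seen: set[str] = set()
    needs_split = []
    for filter_value, urls in exports.items():
        if len(urls) >= cap:
            needs_split.append(filter_value)  # probably truncated: narrow this filter further
        for url in urls:
            if url not in seen:
                seen.add(url)
                merged.append(url)
    return merged, needs_split


exports = {
    "/blog/": ["https://www.example.com/blog/a", "https://www.example.com/blog/b"],
    "/products/": [f"https://www.example.com/products/{i}" for i in range(1_000)],
    "/about": ["https://www.example.com/about", "https://www.example.com/blog/a"],
}
urls, to_split = merge_filtered_exports(exports)
print(len(urls), to_split)
```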

3. Don’t Forget About Bing Webmaster Tools

Yes, there is such a thing! You can export all top landing pages receiving impressions and clicks from Bing, and you can set the timeframe to any dates within the past six months (which is more than the three months GSC gives you).

4. Choose Your Crawler

I use both DeepCrawl and Screaming Frog extensively. DeepCrawl is an amazing enterprise-level crawler and can handle large sites and many urls very well. Note, I’m on the customer advisory board for DeepCrawl. I also use Screaming Frog for smaller websites and for surgical crawls. It’s common for me to use both tools when analyzing a website. Each tool enables you to upload a list of urls to crawl. And that’s what you want to do here after gathering your intel. Then crawl away…
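DeepCrawl and Screaming Frog handle this far better at scale, but the core of a list-mode crawl is simple to sketch, which helps clarify what those tools report. This minimal Python version requests each URL once with a configurable user-agent, deliberately does not follow redirects (so a 301 or 302 is recorded rather than silently resolved), and pauses between requests. The user-agent string and one-second delay are placeholder assumptions, and `crawl_list` accepts any fetch function so it can be tried offline.

```python
import time
import urllib.error
import urllib.request


class NoRedirect(urllib.request.HTTPRedirectHandler):
    """Stop urllib from following redirects so 3xx responses are recorded as-is."""

    def redirect_request(self, req, fp, code, msg, headers, newurl):
        return None  # urllib then raises HTTPError carrying the 3xx code and headers


_OPENER = urllib.request.build_opener(NoRedirect)


def fetch(url: str, user_agent: str = "health-check-sketch/0.1", timeout: float = 15.0):
    """Return (status, headers, body) for one URL; status is None if the request failed outright."""
    req = urllib.request.Request(url, headers={"User-Agent": user_agent})
    try:
        with _OPENER.open(req, timeout=timeout) as resp:
            return resp.status, dict(resp.headers), resp.read().decode("utf-8", "replace")
    except urllib.error.HTTPError as err:  # 3xx (not followed), 4xx and 5xx land here
        return err.code, dict(err.headers), err.read().decode("utf-8", "replace")
    except OSError:  # DNS failures, refused connections, timeouts (URLError is an OSError)
        return None, {}, ""


def crawl_list(urls, fetcher=fetch, delay: float = 1.0):
    """Fetch every URL in `urls` once, in order, pausing `delay` seconds between requests."""
    results = {}
    for i, url in enumerate(urls):
        if i and delay:
            time.sleep(delay)
        results[url] = fetcher(url)
    return results
```

Passing a different `user_agent` to `fetch` (via a small wrapper used as `fetcher`) is how the same list could be re-crawled as another client.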

5. Analyze The Crawl

Once the crawl completes, dig into the data to understand how those urls are resolving. There are too many possible findings to cover them all here, but below is a list of some important things you can surface. You might be surprised by what you find (for better or worse).

- 404s

- 500s

- Faulty Redirects

- Noindexed Pages

- Pages Noindexed Via the X-Robots-Tag (sent in the HTTP response header)

- Titles and Metadata Changed or Removed

- Primary Content Removed or Broken (resulting in extremely thin content or user engagement problems)

- Robots.txt Blocking URLs From Being Crawled

- Canonicalization Problems

- And more…
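Several checks from the list above can be approximated from a raw fetch result. This is a minimal sketch, assuming you have each URL’s status code, response headers, and HTML body (which most crawlers can export, or a simple script can collect). It uses Python’s standard `html.parser` and `urllib.robotparser`. The issue labels are illustrative, redirects are flagged for manual review rather than judged, and thin content or subtler canonical problems still need a human eye.

```python
from html.parser import HTMLParser
from urllib.parse import urljoin
from urllib.robotparser import RobotFileParser


class HeadScanner(HTMLParser):
    """Collect the <title> text, meta robots directives, and rel=canonical href."""

    def __init__(self):
        super().__init__()
        self.title, self.robots, self.canonical = "", "", None
        self._in_title = False

    def handle_starttag(self, tag, attrs):
        a = {name: (value or "") for name, value in attrs}  # html.parser lowercases names
        if tag == "title":
            self._in_title = True
        elif tag == "meta" and a.get("name", "").lower() in ("robots", "googlebot"):
            self.robots += " " + a.get("content", "").lower()
        elif tag == "link" and "canonical" in a.get("rel", "").lower().split():
            self.canonical = a.get("href")

    def handle_endtag(self, tag):
        if tag == "title":
            self._in_title = False

    def handle_data(self, data):
        if self._in_title:
            self.title += data


def classify(url, status, headers, body):
    """Return issue labels for one crawled URL (an empty list means nothing was flagged)."""
    if status is None:
        return ["fetch failed"]
    issues = []
    hdrs = {k.lower(): v for k, v in headers.items()}  # header names are case-insensitive
    if 300 <= status < 400:
        issues.append(f"redirect {status} -> {urljoin(url, hdrs.get('location', ''))}")
    elif 400 <= status < 500:
        issues.append(f"client error {status}")
    elif status >= 500:
        issues.append(f"server error {status}")
    if "noindex" in hdrs.get("x-robots-tag", "").lower():
        issues.append("noindex via X-Robots-Tag")
    if status == 200:
        scan = HeadScanner()
        scan.feed(body)
        if "noindex" in scan.robots:
            issues.append("noindex via meta robots")
        if not scan.title.strip():
            issues.append("missing title")
        if scan.canonical and urljoin(url, scan.canonical) != url:
            issues.append(f"canonical -> {urljoin(url, scan.canonical)}")
    return issues


def blocked_by_robots(robots_txt: str, url: str, user_agent: str = "Googlebot") -> bool:
    """True if the given robots.txt content disallows `url` for `user_agent`."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    parser.modified()  # ensure can_fetch() treats the rules as loaded (it denies everything until then)
    return not parser.can_fetch(user_agent, url)
```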

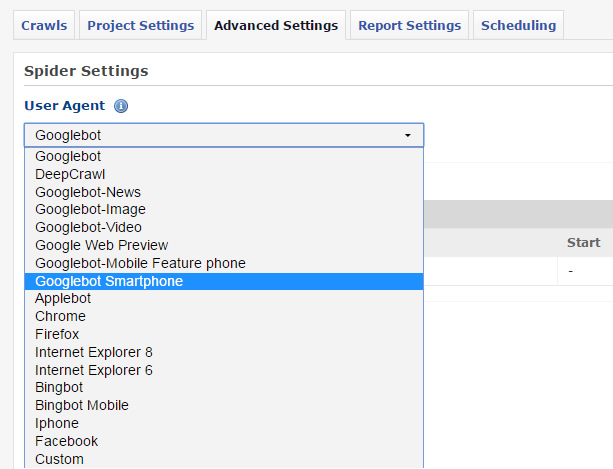

Bonus: Think you’re done? Not so fast. Go Mobile…

Remember, we live in a mobile world. And Google has a crawler focused on smartphones called Googlebot for Smartphones. So I highly recommend running a second crawl that uses Googlebot for Smartphones as the user agent. You might find that your site is handling mobile visitors differently than you thought… For example, you could find faulty redirects, 500s, content problems, etc.
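Once both crawls are done, the interesting part is where they disagree. A minimal sketch, assuming each crawl has been reduced to a mapping of URL → (status code, redirect target or None); the example data, including the `m.example.com` redirect, is made up. Run the second crawl with the Googlebot for Smartphones user-agent string as Google currently documents it, since that string has changed over time.

```python
def diff_crawls(desktop: dict, mobile: dict) -> list[tuple]:
    """List (url, desktop_result, smartphone_result) for every URL whose results differ."""
    diffs = []
    for url in sorted(desktop.keys() | mobile.keys()):
        d, m = desktop.get(url), mobile.get(url)  # None means the URL is missing from that crawl
        if d != m:
            diffs.append((url, d, m))
    return diffs


desktop = {
    "https://www.example.com/a": (200, None),
    "https://www.example.com/b": (200, None),
}
mobile = {
    "https://www.example.com/a": (200, None),
    "https://www.example.com/b": (302, "https://m.example.com/"),  # e.g. a page sent to the mobile homepage
}
for url, d, m in diff_crawls(desktop, mobile):
    print(f"{url}: desktop={d} smartphone={m}")
```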

Summary – You Won’t Know Until You Pull The Trigger

It’s smart to run a health check periodically to ensure your top landing pages from organic search are resolving properly. And I would not wait until you see a decline in traffic to go through the process listed above. I would try to check your urls monthly, or at least every other month. By doing so, you can pick up potential problems before they cause a drop in rankings and traffic. The good news is that you can start today. Go ahead and gather your intel and run a health check. You never know what you are going to find. Crawl away.
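For a recurring check, the most useful output is what changed since last time. A minimal sketch, assuming you keep each run’s results as a mapping of URL → list of issue labels (however your crawler or script labels them; the labels below are illustrative). It reports only issues that are new since the previous run, so a URL that starts returning a 404 this month stands out.

```python
def new_issues(previous: dict[str, list[str]], current: dict[str, list[str]]) -> dict[str, list[str]]:
    """Map each URL to the issues present in `current` that were absent from `previous`."""
    report = {}
    for url, issues in current.items():
        added = sorted(set(issues) - set(previous.get(url, [])))
        if added:
            report[url] = added
    return report


last_month = {"https://www.example.com/a": [], "https://www.example.com/b": ["missing title"]}
this_month = {
    "https://www.example.com/a": ["client error 404"],
    "https://www.example.com/b": ["missing title"],
    "https://www.example.com/c": ["server error 500"],
}
print(new_issues(last_month, this_month))
```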

GG

Great Synopsis Glenn!!

We do employ tools like Screaming Frog, Moz crawlers, and reports from Google Search Console, but never thought of Bing Webmaster Tools. Thanks for that hat tip. Do you also find a data mismatch when analyzing landing page data from GA and Google Search Console? I find it too often. I trust the Search Console data. Is that ok?

Thanks Amit! I’m glad you found my post helpful. Regarding your question, that’s why I combine urls from different sources. Then I dedupe. That’s a great way to ensure you are getting as many urls as possible. So, GA, GSC, and BWT typically cover what you need. :)

Very informative article, Glenn! I’m just wondering how often you recommend doing such checks.

Thanks Olivier! I’m glad you enjoyed my post. I recommend performing a health check like this once per month (or at least every other month). It’s a strong way to nip SEO problems in the bud. I hope that helps.