I’ve written about Murphy’s Law for SEO before, and it’s scary as heck, especially for large-scale websites with many moving parts. Murphy’s Law is an old adage that says, “Anything that can go wrong, will go wrong.” Applied to SEO, that means no matter how much you plan and prep for large-scale changes, there’s a good chance that something will go wrong.

And when SEOs are implementing major changes across complex sites, whether that be CMS migrations, domain name changes, https migrations, etc., you can bet Murphy will be hanging around. You just need to be prepared for Murphy to pay a visit.

I recently helped a company move to https, and overall, it was a clean migration. I’ve helped this company with a number of SEO projects over the past several years, and I was very familiar with their site, content, and technical SEO setup. The site was doing extremely well SEO-wise leading up to the migration, so we definitely wanted to make sure the move to https went as smoothly as possible.

We prepared heavily leading up to the migration so we could all be confident when finally pulling the trigger. When a site moves to https, there are a number of checks I perform before, during, and after the migration, including several crawls to ensure everything is OK. In addition, my client is SEO-savvy and was checking the site from their end as well.

But remember our friend Murphy? Read on.

Site Commands Are Useless, Or Are They?

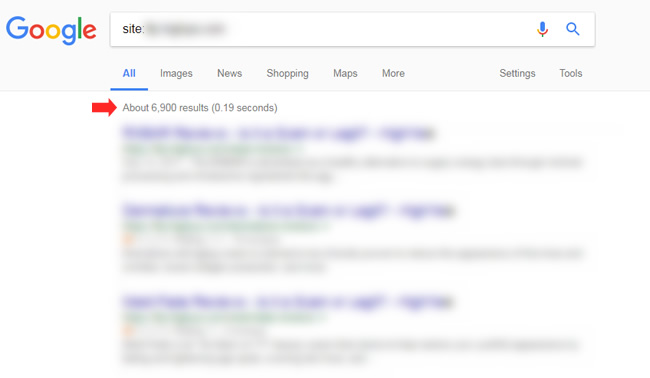

As we were tracking some of the normal volatility you can see during a migration, my client was checking indexation across some important urls. While running some site commands, they noticed a rogue subdomain showing up with copies of the core website’s content. When I quickly checked that subdomain (via a site command), it showed close to 7K pages indexed.

It seems that when they pulled the trigger and moved to https, Murphy came to pay a visit. A subdomain that had never existed suddenly appeared, and for some reason, it was duplicating every page from the core site.

Luckily, we were relatively early into the migration, so we were able to start tackling the problem quickly. We clearly wanted to get those urls out of Google’s index as quickly as possible and then handle the subdomain properly. Below, I’ll document what we did to deal with a rogue subdomain containing a lot of duplicate content.

Indexation, Rankings, and Traffic

One of the first things I told my client was to add that rogue subdomain to Google Search Console (GSC). That would enable us to see reporting directly from Google for that subdomain, including indexation, search analytics data, crawl errors, etc. It would also enable us to submit xml sitemaps and use the Remove URLs Tool if we thought that would be helpful. They quickly set up the GSC property and we waited for data to come in.

While we waited for data to arrive in GSC, I told my client to make sure all of the urls across the rogue subdomain returned 404s. They did that pretty quickly, so the urls were only live for a relatively short period of time, but that was long enough for thousands of them to be indexed.

Once data arrived in GSC for the subdomain, we were able to see exactly what we were dealing with. There were 7,479 urls indexed according to Index Status reporting in GSC.

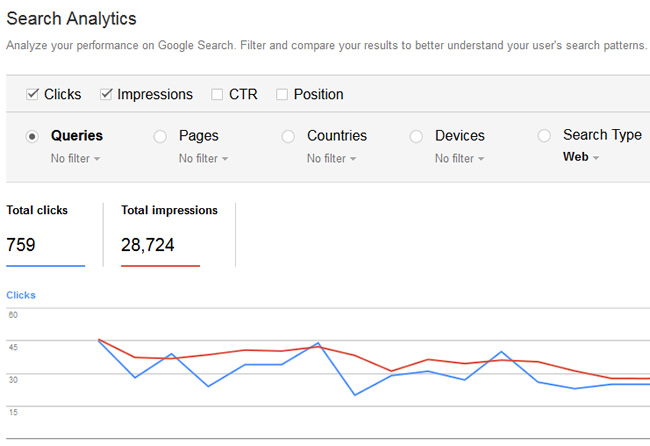

In addition, some of the urls were actually ranking for queries and driving clicks. We saw 759 clicks and 28,724 impressions once the urls were indexed. Note that this was a small percentage of Google organic traffic compared to the core website, but it’s clearly not a good thing when a rogue subdomain is driving impressions and clicks.

So we had a problem on our hands. Below, I’ll document the steps we took to remove the rogue subdomain from the Google search results while also working to have the pages removed from the index long-term. We also wanted to make sure that any subdomain urls that were ranking in the SERPs were correctly switched to the core site urls.

How To Remove Rogue URLs From Google’s Index:

1. 404 (or 410) all of the pages.

I mentioned this earlier, but I told my client to make sure all urls on the subdomain were returning 404s. And yes, you can use 410s if you want. John Mueller has explained in the past that a 410 could speed up removal from the index “a tiny bit”.

Here’s a video of John explaining that:
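How you return the 404s or 410s depends on your stack, and the client’s platform isn’t described here. As one illustration, assuming a Python WSGI application, here is a minimal sketch of a middleware that answers every request for the rogue host with 410 Gone and passes all other hosts through to the real application. The function name `gone_for_host`, the host `rogue.example.com`, and the optional `exempt_paths` (for files such as a sitemap that must stay reachable) are hypothetical.

```python
from typing import Callable, FrozenSet, Iterable

WSGIApp = Callable[[dict, Callable], Iterable[bytes]]


def gone_for_host(app: WSGIApp, rogue_host: str,
                  exempt_paths: FrozenSet[str] = frozenset()) -> WSGIApp:
    """Wrap a WSGI app so requests for rogue_host get 410 Gone.

    rogue_host is a hypothetical name such as "rogue.example.com".
    exempt_paths lets specific paths (e.g. a sitemap file) still reach the app.
    """
    rogue_host = rogue_host.lower()

    def middleware(environ: dict, start_response: Callable) -> Iterable[bytes]:
        # HTTP_HOST can carry a port, e.g. "rogue.example.com:443".
        host = environ.get("HTTP_HOST", "").split(":")[0].lower()
        path = environ.get("PATH_INFO", "/")
        if host == rogue_host and path not in exempt_paths:
            body = b"410 Gone"
            start_response("410 Gone", [
                ("Content-Type", "text/plain"),
                ("Content-Length", str(len(body))),
            ])
            return [body]
        # Every other host (and any exempt path) is served by the real app.
        return app(environ, start_response)

    return middleware
```

Matching on the Host header leaves the core site untouched, which matters when the rogue subdomain is served by the same application as the main site. Swapping `"410 Gone"` for `"404 Not Found"` gives the 404 variant.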

2. Verify the property in Google Search Console (GSC) – for several reasons.

We verified the subdomain in GSC so we could gain reporting directly from Google related to indexation, search traffic and impressions, xml sitemaps, crawl errors, and more. In addition, we would have access to the Remove URLs Tool, which can be very helpful for quickly removing urls from the Google search results (temporarily).

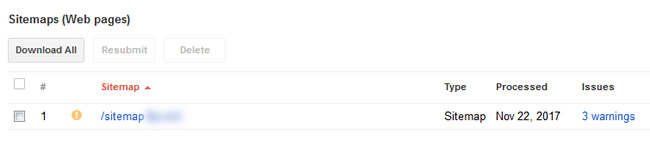

3. Create and submit an xml sitemap with all urls that are 404ing (via GSC).

We created an xml sitemap with all rogue urls and submitted that sitemap via GSC for the subdomain in question. That can help Google find the rogue urls that are now 404ing and hopefully get them out of the index quicker.

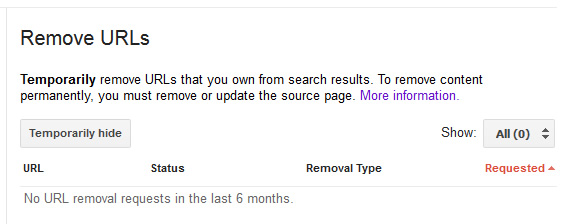
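A sitemap of 404ing urls is just a standard XML sitemap. The sketch below assumes you already have the rogue urls in a Python list (for example, from a crawl or a GSC export) and writes them out using only the standard library. The sitemaps.org protocol caps each sitemap file at 50,000 URLs, so the list is split across multiple documents if needed; 7,479 urls fit comfortably in one. The function name `build_sitemaps` is hypothetical.

```python
import xml.etree.ElementTree as ET
from typing import Iterable, List

SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"
MAX_URLS_PER_SITEMAP = 50_000  # per-file limit in the sitemaps.org protocol


def build_sitemaps(urls: Iterable[str]) -> List[bytes]:
    """Return one or more sitemap XML documents listing the given urls."""
    unique = list(dict.fromkeys(urls))  # drop duplicates, keep original order
    docs = []
    for start in range(0, len(unique), MAX_URLS_PER_SITEMAP):
        urlset = ET.Element("urlset", xmlns=SITEMAP_NS)
        for url in unique[start:start + MAX_URLS_PER_SITEMAP]:
            # ElementTree escapes characters such as "&" in query strings.
            ET.SubElement(ET.SubElement(urlset, "url"), "loc").text = url
        docs.append(ET.tostring(urlset, encoding="utf-8", xml_declaration=True))
    return docs
```

Each document can then be saved as a file and submitted in the subdomain’s GSC property. Note that the sitemap file itself has to stay reachable even while every page it lists returns a 404.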

4. Use the Remove URLs Tool in GSC for the new property.

In Google Search Console (GSC), there is a very powerful, yet dangerous, tool called the Remove URLs Tool. I find there’s a lot of confusion about how to use the tool, how it works, what will happen long-term, etc.

First, it’s important to know that it’s a temporary removal (90 days). Using the tool, you can temporarily remove urls from the Google SERPs (for example, when the urls contain sensitive or confidential information that shouldn’t be displayed in the search results). The temporary nature of the removal is probably the most common misconception: some site owners remove a url or a directory using the tool and then think it’s gone forever. That’s not the case. For the urls to be truly removed from the index, they also need to return a 404 or 410, or be noindexed. You can also put the content behind a login (requiring a username and password). Otherwise, the urls can reappear 90 days later.

Second, you can remove specific urls, directories, or entire sites by using the tool in GSC. And the Remove URLs Tool is case-sensitive and precise (character by character). So make sure you enter the exact url when using the tool, or it might not work the way you want it to. Also, to remove an entire site or subdomain, just leave the form blank and click the button labeled “Continue”. All of the urls for the root GSC property you are dealing with will be removed.

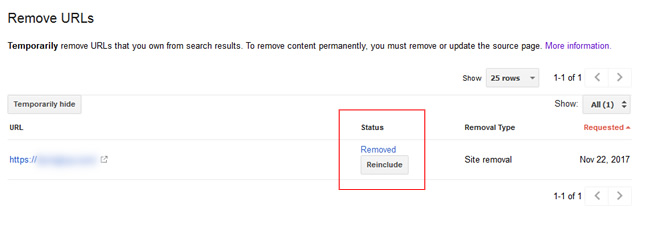

Since we were dealing with a rogue subdomain, we wanted to nuke all of the urls residing there. So we simply left the field blank for the subdomain in GSC and pushed the giant nuclear button. Below you can see the subdomain removal request based on using the Remove URLs Tool.

Important Note: Make sure you are working with the right GSC property or you can incorrectly remove your core website from the SERPs. Remember I said the tool can be dangerous?
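Because the tool matches character by character and a blank entry removes everything in the property, it can help to dry-run a request against a list of urls you already know about before clicking anything. The sketch below is a simplified local model, not Google’s implementation. It uses an exact, case-sensitive match for a single url, a case-sensitive prefix match for a directory, and every url in the property for a blank entry. It also refuses to run if the property’s host isn’t the host you meant to target. The function name, its parameters, and the host names are hypothetical.

```python
from typing import List, Optional
from urllib.parse import urlparse


def preview_removal(property_url: str, intended_host: str, entry: Optional[str],
                    kind: str, known_urls: List[str]) -> List[str]:
    """Approximate which known urls a removal request would hide.

    Simplified local model (an assumption, not Google's implementation):
      entry blank/None    -> every url in the property
      kind == "url"       -> exact, case-sensitive match
      kind == "directory" -> case-sensitive prefix match
    """
    # Guard against working in the wrong GSC property.
    if urlparse(property_url).hostname != intended_host.lower():
        raise ValueError(f"property {property_url!r} is not on {intended_host!r}")
    if entry and not entry.startswith(property_url):
        raise ValueError(f"{entry!r} is outside property {property_url!r}")

    in_property = [u for u in known_urls if u.startswith(property_url)]
    if not entry:
        return in_property
    if kind == "url":
        return [u for u in in_property if u == entry]
    if kind == "directory":
        return [u for u in in_property if u.startswith(entry)]
    raise ValueError(f"unknown kind {kind!r}")
```

If a preview ever includes a url on your core site, stop and recheck which property you are working in.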

How Fast Will The URLs Be Removed?

That’s a trick question, because there are two ways to think about it. First, how fast will the urls be removed from the SERPs? And second, how long will it take before Google truly deindexes the urls (for the long term)? The Remove URLs Tool can work very quickly. Google’s John Mueller has said it can take less than a day for the urls to be removed from the SERPs, but I’ve seen it work even faster.

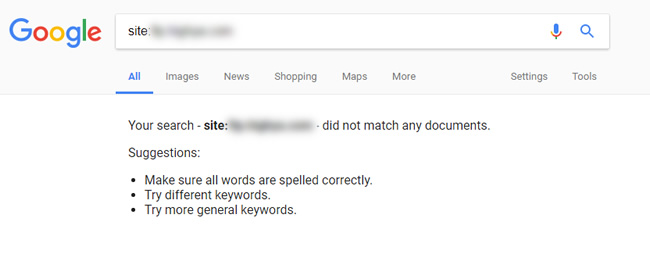

For this situation, it was just a few hours before a site command showed 0 results.

But remember, it’s a temporary removal. You still need to handle the urls on the site correctly for them to be removed over the long term. That means having the urls resolve with a 404 or 410, putting the content behind a login, or using the meta robots tag with “noindex”. If you do one of those things, the urls won’t reappear in Google’s index 90 days later.

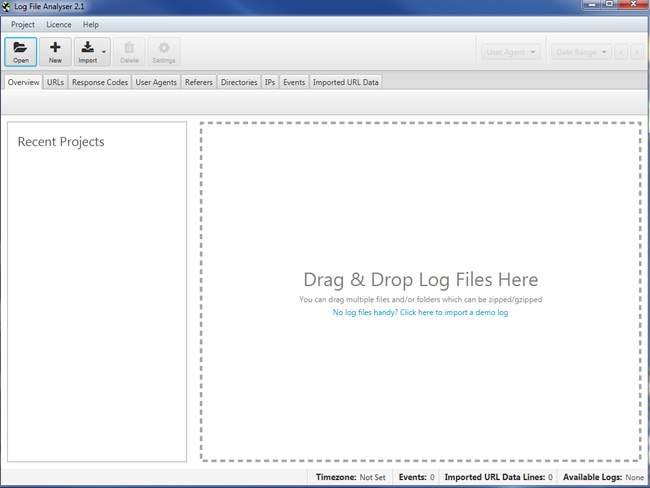
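The status code and noindex options can be checked on a fetched response. This minimal sketch takes a status code, the response headers, and the HTML body, and reports whether the url returns a 404 or 410 or carries `noindex` in an `X-Robots-Tag` header or in a `robots` or `googlebot` meta tag. It doesn’t cover the login option, and it uses a simple substring check for `noindex`. Keep in mind that Google can only see a `noindex` on pages it is allowed to crawl, so noindexed urls shouldn’t also be blocked in robots.txt. The names `RobotsMetaParser` and `stays_out_of_index` are hypothetical.

```python
from html.parser import HTMLParser
from typing import List, Mapping


class RobotsMetaParser(HTMLParser):
    """Collects content values of <meta name="robots"> and <meta name="googlebot">."""

    def __init__(self) -> None:
        super().__init__()
        self.directives: List[str] = []

    def handle_starttag(self, tag, attrs):
        # HTMLParser already lowercases tag and attribute names.
        if tag != "meta":
            return
        attr = {name: (value or "") for name, value in attrs}
        if attr.get("name", "").lower() in ("robots", "googlebot"):
            self.directives.append(attr.get("content", "").lower())


def stays_out_of_index(status: int, headers: Mapping[str, str], body: str) -> bool:
    """True if the response returns 404/410 or carries a noindex directive."""
    if status in (404, 410):
        return True
    # Header names are case-insensitive. This is a plain substring check and
    # doesn't parse user-agent-specific values like "otherbot: noindex".
    for name, value in headers.items():
        if name.lower() == "x-robots-tag" and "noindex" in value.lower():
            return True
    parser = RobotsMetaParser()
    parser.feed(body)
    parser.close()
    return any("noindex" in d for d in parser.directives)
```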

Bonus: Double Check Logs To Ensure The Pages Aren’t Being Visited Anymore

And for those of you who dig analyzing log files, you can check your logs to make sure traffic to the urls on the rogue subdomain drops off. You can use Screaming Frog Log File Analyzer to import your logs and check activity (and hopefully see it declining).
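If you’d rather script the check, here is a minimal sketch that assumes the rogue subdomain’s access log uses the common Apache/nginx combined log format. It counts Googlebot requests per day, broken down by status code, so you can watch the 404/410 hits and see them taper off over time. It matches on the user-agent string only. That string can be spoofed, and Google documents a reverse DNS check for verifying Googlebot when accuracy matters. The function name `googlebot_hits_per_day` is hypothetical.

```python
import re
from collections import Counter
from datetime import datetime
from typing import Dict, Iterable

# Combined Log Format (assumed):
# host ident user [time] "request" status bytes "referer" "user-agent"
LINE_RE = re.compile(
    r'^(\S+) \S+ \S+ \[([^\]]+)\] "[^"]*" (\d{3}) \S+ "[^"]*" "([^"]*)"'
)


def googlebot_hits_per_day(lines: Iterable[str]) -> Dict[str, Counter]:
    """Count Googlebot requests per day (ISO date), broken down by status code."""
    per_day: Dict[str, Counter] = {}
    for line in lines:
        match = LINE_RE.match(line)
        if not match:
            continue  # skip lines that aren't in the expected format
        _, timestamp, status, agent = match.groups()
        if "Googlebot" not in agent:
            continue
        day = datetime.strptime(timestamp, "%d/%b/%Y:%H:%M:%S %z").date().isoformat()
        per_day.setdefault(day, Counter())[status] += 1
    return dict(sorted(per_day.items()))
```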

Moving Forward: Monitoring The Problem Over The Long-Term

Once the above steps are completed, you shouldn’t just sit back with a frozen margarita in your hand gazing at Google Analytics. Instead, you should monitor the situation to make sure all is ok long-term. That includes reviewing the GSC reporting for the rogue subdomain, which can reveal several key things.

1. Make sure indexation drops.

Check the index status report and perform site commands to make sure the urls remain out of Google’s index. If you see them come back for some reason, make sure the urls are 404ing, properly noindexed, or behind a login. You should also make sure nobody reincluded the urls via the Remove URLs Tool in GSC.

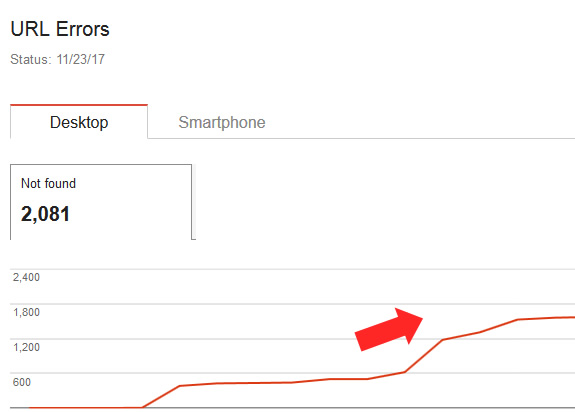
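To confirm the urls are still returning 404s, you can re-request the list of urls you put in the xml sitemap and flag anything that answers with a different status. This sketch uses the standard library’s `urllib` and doesn’t follow redirects, so a 301 is reported as 301 rather than as the target’s 200. It also accepts an injectable `fetch` function so it can be tested without the network. It only looks at status codes: if you chose noindex or a login instead, a 200 may be expected for those urls. The names `fetch_status` and `urls_not_gone` are hypothetical.

```python
import urllib.error
import urllib.request
from typing import Callable, Dict, Iterable


class _NoRedirect(urllib.request.HTTPRedirectHandler):
    def redirect_request(self, req, fp, code, msg, headers, newurl):
        return None  # surface 3xx responses instead of following them


_OPENER = urllib.request.build_opener(_NoRedirect)


def fetch_status(url: str, timeout: float = 10.0) -> int:
    """Return the HTTP status code for url (network errors such as DNS failures propagate)."""
    try:
        with _OPENER.open(url, timeout=timeout) as resp:
            return resp.status
    except urllib.error.HTTPError as err:
        return err.code  # 3xx/4xx/5xx responses arrive here


def urls_not_gone(urls: Iterable[str],
                  fetch: Callable[[str], int] = fetch_status) -> Dict[str, int]:
    """Return {url: status} for every url that does not answer 404 or 410."""
    problems = {}
    for url in urls:
        status = fetch(url)
        if status not in (404, 410):
            problems[url] = status
    return problems
```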

2. Make sure 404s show up in crawl errors.

Check the crawl errors reporting in GSC to make sure the urls are showing up as 404s. For the subdomain we were working on, you can see the rise in 404s after my client implemented the correct changes. If you don’t see 404s increasing, you might not be implementing 404s correctly across the site or subdomain.

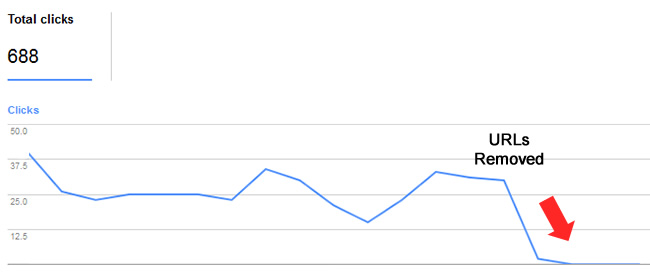

3. Make sure traffic drops off.

By checking the search analytics reporting in GSC, you can see impressions and clicks over time. Make sure they are dropping after you remove the urls from Google. If you don’t see a drop in impressions and clicks for the rogue urls, double-check the Remove URLs Tool and make sure the urls are indeed 404ing, noindexed, etc.
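To put numbers on the trend, you could export the subdomain’s daily search analytics data and compare the start and end of the period. This sketch assumes a CSV with hypothetical columns `Date` (YYYY-MM-DD), `Clicks`, and `Impressions`, so rename them to match your actual export. It returns the mean daily value of each metric for the first and last `window` days. The function name `compare_periods` is hypothetical.

```python
import csv
from statistics import mean
from typing import Dict, Iterable, Tuple


def compare_periods(lines: Iterable[str], window: int = 7) -> Dict[str, Tuple[float, float]]:
    """Mean daily Clicks/Impressions for the first and last `window` days.

    Assumes hypothetical CSV columns: Date (YYYY-MM-DD), Clicks, Impressions.
    """
    # ISO dates sort correctly as strings.
    rows = sorted(csv.DictReader(lines), key=lambda row: row["Date"])
    if len(rows) < 2 * window:
        raise ValueError("need at least two full windows of daily rows")
    result = {}
    for metric in ("Clicks", "Impressions"):
        first = mean(int(row[metric]) for row in rows[:window])
        last = mean(int(row[metric]) for row in rows[-window:])
        result[metric] = (first, last)
    return result
```

A `last` value well below `first` is what you want to see for both metrics.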

Summary – Removing URLs… and Showing Murphy The Exit Door

If you find yourself in a situation where a rogue subdomain gets indexed with duplicate content, then use all of the tools available to you to rectify the situation. And that includes the Remove URLs Tool in GSC. Unfortunately, when implementing large-scale changes on complex sites, site owners should understand that things can, and will, go wrong. Murphy’s Law for SEO is real and can definitely throw a wrench into your operation. My advice is to be ready for Murphy to show up, and then move quickly to kick him out.

GG

Nice description of a set of events that I’m sure many sites are suffering from without having any idea that it has happened! My other favourite is the dev site sitting on an agency domain that is older than the actual site and outranks it. I see a few of those – a massive slapped wrist for the design/build agency for not protecting their clients’ interests!

Thanks Simon. Yes, totally agree. The first step is knowing it happened! Then site owners need to move quickly to rectify the situation. The Remove URLs Tool can be very handy for the short-term removal. Then the urls need to be handled properly on the site (404, 410, noindex, or password protect).

And yes, staging servers getting indexed is another example of massive duplicate content on a rogue domain. Like you said, many sites probably don’t even know it’s happening. Not good.

Very good article @ Glenn

Hey, thanks Neeraj. I appreciate it. I know there’s a lot of confusion about the Remove URLs Tool and this was a great example of needing to use it! It’s also a good example of Murphy’s Law for SEO, which can be scary as heck. :)

One suggestion for point 1 – 404 or 410.

Usually, CMS platforms use a rewrite rule to send every request to index.php (or its equivalent in other languages), which handles all requests. So accessing http://www.example.com/news when there is no /news in the filesystem triggers this. The bad news is that you can remove the pages quickly in the backend, but all those 404 or 410 responses will keep coming, causing serious CPU/DB usage, because 404/410 pages can’t be cached efficiently. And if you do cache them, you can end up serving 200 OK, which can get you into other trouble.

So here are my recipes: you can add .htaccess rules to speed this up at the Apache level.

-=-=-=-

Redirect 410 /folder

-=-=-=-

RewriteEngine On

RewriteBase /

RewriteRule ^folder/ - [G]

-=-=-=-

Redirect 404 /folder

-=-=-=-

RewriteEngine On

RewriteBase /

RewriteRule ^folder/ - [L,R=404]

-=-=-=-

You can use either the Redirect or the RewriteRule version; both work perfectly. The first two return 410 and the last two return 404. Put them at the beginning of .htaccess so requests to that folder are never passed to the CMS.

PS: I forgot to mention that I use these recipes after a hack, when injected content was placed in folders. Once I clean the site, I add these rules, and those requests no longer mess up my Analytics or CPU.

Hi Peter. Definitely interesting, but I think I need to understand more about what you’re referring to. I’ll send you an email shortly! :)

Glenn, thanks for the detailed case study. Just used it to remove hundreds of rogue posts created by an RSS aggregator plugin. Now for the waiting and monitoring part. Thanks again.

That’s outstanding! I’m glad my post was helpful and that you could use this approach immediately. Definitely monitor the GSC property to make sure all is ok over the long-term. And you can go a step further and check your logs too (if you want another check). Let me know how it goes.

Great post Glenn. Dealt with a similar situation recently using the xml sitemap technique like you’ve suggested. Don’t know for sure if this sped up the process but we frequently updated the XML sitemap by removing the URLs as they were deindexed, the logic being that it would speed up getting the rest crawled and deindexed.

Thanks Will. I appreciate it! The xml sitemap technique can definitely speed up the process. John Mueller has explained that it can help Google find the 404s or 410s more quickly. And if there are urls you need removed from the SERPs quickly, the Remove URLs Tool can really help out. Were you able to use that, or was it just a matter of making sure the pages dropped from the index over time?