For site migrations, I’ve always said that Murphy’s Law is real. “Anything that can go wrong, will go wrong.” You can prepare like crazy, think you have everything nailed down, only to see a migration go sideways once it launches.

That’s also why I believe that when something does go wrong (which it will), it’s super-important to address those problems quickly and efficiently. If you can nip migration problems in the bud, you can keep them from becoming major issues that impact SEO. That’s why it’s important to prepare as much as you can, have all of the necessary intelligence in front of you while the migration goes live, and then move quickly to attack any problems that arise.

By the way, if you think you’re immune to site migration problems, then listen to the episode of Google’s Search Off The Record podcast where John Mueller, Gary Illyes, Cherry Prommawin, and Martin Splitt talk about the migration of the Webmaster Central site to the new Search Central site. It turns out they ran into several problems, just like any other site owner could, and had to move quickly to fix those issues. So, if it can happen to Google, it sure can happen to you. :)

Adding Google’s Crawl Stats Reporting To Your Site Migration Checklist:

There are plenty of checklists and tools out there to help with site migrations, including Google’s testing tools in GSC, third-party crawlers like Screaming Frog, DeepCrawl, and Sitebulb, site monitoring tools, log file analysis tools, and more.

And on the topic of log files, they provide the quickest way to understand how Google is crawling your site post-migration. You don’t need to wait for data to populate in a tool, you don’t have to guess how Google is treating urls, redirects, etc., and there are several log file analysis tools hungry to consume your logs.
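If you do manage to get your hands on the logs, even a few lines of code can give you a first look. Here’s a minimal Python sketch, assuming your server writes the common Apache/Nginx “combined” log format, that tallies the status codes returned to requests identifying as Googlebot. The regex and function name are my own illustration, not part of any tool mentioned above. Also keep in mind that user-agent strings can be spoofed, so Google recommends verifying Googlebot via a reverse DNS lookup before fully trusting the numbers.

```python
import re
from collections import Counter

# Apache/Nginx "combined" log format. This is an assumption:
# adjust the pattern if your server writes a different layout.
LOG_LINE = re.compile(
    r'(?P<ip>\S+) \S+ \S+ \[(?P<time>[^\]]+)\] '
    r'"(?P<method>\S+) (?P<path>\S+) [^"]*" '
    r'(?P<status>\d{3}) \S+ "[^"]*" "(?P<agent>[^"]*)"'
)


def googlebot_status_counts(lines):
    """Count HTTP status codes for requests whose user agent mentions Googlebot."""
    counts = Counter()
    for line in lines:
        match = LOG_LINE.match(line)
        # Lines that don't match the assumed format are skipped silently.
        if match and "Googlebot" in match.group("agent"):
            counts[match.group("status")] += 1
    return counts
```

You could run it over a post-migration log with something like `googlebot_status_counts(open("access.log"))` (the file name is hypothetical). A pile of 404s on the old domain is an early warning sign.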

But there’s a catch… trying to get log files is like attempting to complete a mission as Tom Cruise in one of his great Mission Impossible movies. If getting log files were a scene in Mission Impossible, I can hear Tom now:

“Wait, so we have to scuba dive under a bridge heavily guarded by troops, climb a 200-story building in our underwear (in 20-degree weather), use elaborate yoga moves to dodge a scattered laser security system, steal the ancient lamp of Mueller which is protected by special forces, hack into a computer system protected by six layers of ciphers, download the log files, and then parachute off the building back into the water, only to scuba dive back under the bridge to safety? No problem… hold my coffee.”

OK, it’s not that bad, but any SEO who has tried to get log files from a client knows how frustrating the process can be. The files are huge, they often don’t seem to be owned by any one group or person in a company, and some companies don’t even keep logs for more than a few days (if that). So, it’s no easy feat to get hold of them.

What’s an SEO to do?

Meet The New Crawl Stats Report in GSC: A (Pretty) Good Proxy For Log Files

In November 2020, Google launched the new Crawl Stats reporting in GSC. The reporting is outstanding, and it was a huge improvement over the previous version. The new reporting provides a boatload of data based on Google crawling your site. I won’t go through all of the reports and data in this post, but you can check out the documentation to learn more about each of the report sections.

I’m going to cover what Google considers “site moves with url changes”, which covers domain name changes and url migrations. I’ll focus on domain name changes, but you can absolutely use the new Crawl Stats reporting to troubleshoot url migrations as well.

For domain name changes, you can view crawl stats reporting for both the domain you are moving to and the domain you are moving from. So, using the crawl stats reporting can supplement your current migration checks and enable you to see how Google is handling the migration at the source (the old domain).

And for url migrations, you can also surface problems that Google is experiencing post-migration. It’s not as clear as a domain name change, since you can’t isolate the crawl stats reporting by domain, but it can still help you surface issues based on bulk-changing urls.

Note: There is a delay in the Crawl Stats reporting.

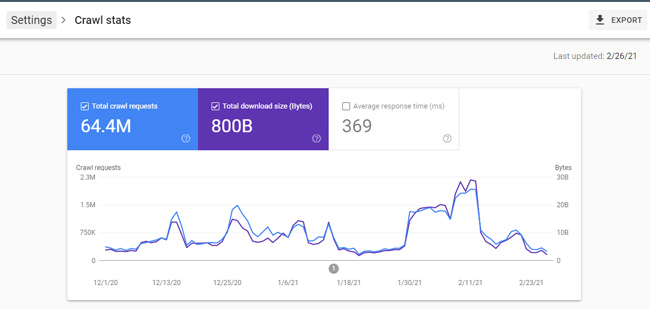

The Crawl Stats reporting lags by a few days, so log files are still important if you want a real-time view of how Google is handling a site migration. The reporting updates daily, but from what I have seen it lags by 3-4 days. In the screenshot, you can see the report was last updated on 2/26/21, but today is 3/2/21.

How to identify problems with domain name changes and url migrations using the Crawl Stats reporting in GSC:

As mentioned above, for domain name changes, you can analyze the Crawl Stats reporting for the domain name you are moving from, and the domain name you are moving to. Below, I’ll cover some of the ways you can use the reporting to surface potential issues.

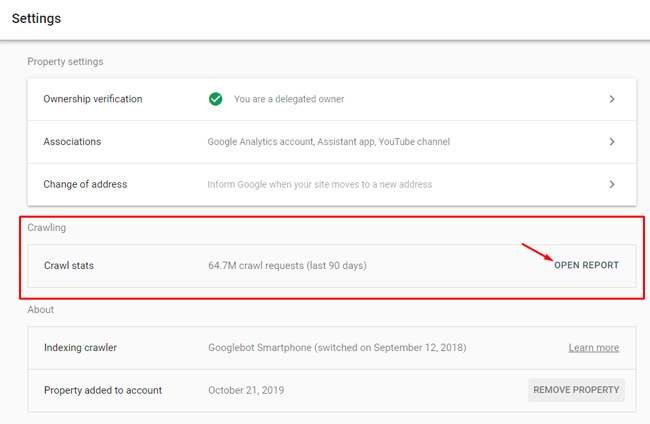

How To Find The New Crawl Stats Report in Google Search Console (GSC):

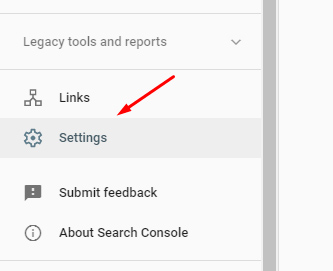

First, I know there’s some confusion about where the new Crawl Stats report is located. You will not find the report in the left-side navigation in GSC. Instead, you first need to click “Settings”, find the Crawling section of the page which contains top-level crawl stats, and then click “Open Report” to view the full Crawl Stats reporting.

Now that you’ve found the Crawl Stats reporting, here are some of the things you can find when analyzing and troubleshooting a site migration.

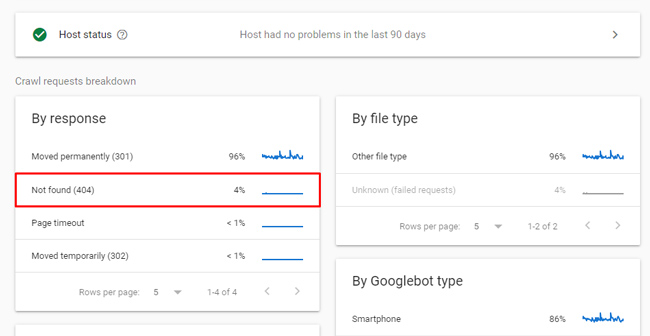

404s and Broken Redirects:

The Crawl Stats reporting for the domain you are moving from will list urls that Google is crawling that end up as 404s. All urls during a domain name change should map to their equivalent url on the new domain (via 301 redirects). By analyzing the source domain name that’s part of the migration, you can view urls that Googlebot is coming across that end up as 404s. And that can help you find gaps in your 301 redirection plan.
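Once GSC surfaces 404s on the old domain, it helps to check your whole redirect plan against what the server actually returns. Below is a rough Python sketch of that check, assuming your plan is a simple mapping of old url to new url (a hypothetical format; yours may live in a spreadsheet). It requests each old url without following redirects and flags anything that isn’t a 301 to the planned destination. The `fetch` parameter is there so you can swap in a different HTTP client or a stub; the helper names are my own.

```python
from urllib.error import HTTPError
from urllib.parse import urljoin
from urllib.request import HTTPRedirectHandler, build_opener


class _NoRedirect(HTTPRedirectHandler):
    """Tell urllib not to follow redirects so the first hop can be inspected."""

    def redirect_request(self, req, fp, code, msg, headers, newurl):
        return None


def first_hop(url, timeout=10):
    """Return (status, Location header) for one request, without following redirects."""
    opener = build_opener(_NoRedirect)
    try:
        with opener.open(url, timeout=timeout) as resp:
            return resp.status, resp.headers.get("Location")
    except HTTPError as err:  # unfollowed 3xx responses, plus 4xx/5xx, arrive here
        return err.code, err.headers.get("Location")


def audit_redirects(redirect_map, fetch=first_hop):
    """Check every old URL in a redirect plan against what the server returns.

    redirect_map: dict of old URL -> planned new URL (hypothetical format).
    fetch: callable(url) -> (status, location); swap in a stub for testing.
    Returns a list of (old_url, problem) tuples; empty means no gaps found.
    """
    problems = []
    for old_url, expected in redirect_map.items():
        try:
            status, location = fetch(old_url)
        except OSError as err:  # DNS failures, refused connections, timeouts
            problems.append((old_url, f"request failed: {err}"))
            continue
        if status != 301:
            problems.append((old_url, f"expected a 301, got {status}"))
            continue
        # Location headers may be relative, so resolve them against the old URL.
        target = urljoin(old_url, location or "")
        if target != expected:
            problems.append((old_url, f"redirects to {target}, expected {expected}"))
    return problems
```

The comparison is an exact string match, so decide up front how you want to treat trailing slashes or tracking parameters before reading too much into the output.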

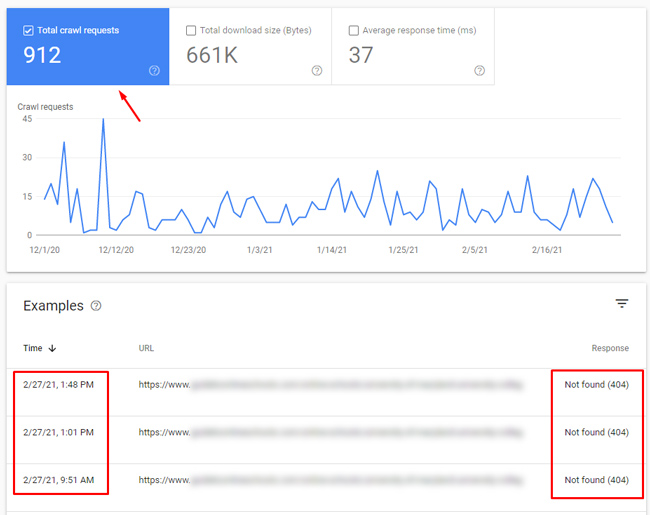

For example, here is the reporting for a site that went through a domain name change. 4% of the crawl requests were ending up as 404s when most of the urls should have been redirecting to urls that return a 200 status code on the new domain.

If you click into that report, you can see a sample of the top 1,000 urls with that issue and you can inspect the urls as well:

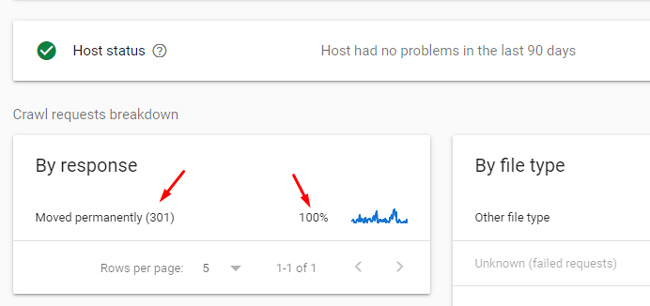

And here is what it should look like. 100% of the requests are 301 redirecting to the equivalent urls on the new domain:

Important (and often confusing) note: GSC reports on the destination url, so a 404 showing up for the old domain name could actually be a redirect to the new domain name where the new url 404s. In other words, the 404 is actually on the new domain, but it shows up in the reporting for the old domain name. That’s extremely important to understand with GSC overall, and it can cause confusion while analyzing the reporting. I tweeted about this in January with regard to the Coverage reporting.
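To figure out which domain a “404 on the old domain” really lives on, you can trace the redirect chain hop by hop. Here’s a small Python sketch of that idea. It uses `http.client`, which never follows redirects on its own, and sends HEAD requests; that’s an assumption worth double-checking, since a few servers answer HEAD differently than GET. The function names are hypothetical.

```python
import http.client
from urllib.parse import urljoin, urlsplit

REDIRECT_CODES = {301, 302, 303, 307, 308}


def head_once(url, timeout=10):
    """Send a single HEAD request and return (status, Location header)."""
    parts = urlsplit(url)
    if parts.scheme == "https":
        conn = http.client.HTTPSConnection(parts.netloc, timeout=timeout)
    else:
        conn = http.client.HTTPConnection(parts.netloc, timeout=timeout)
    path = parts.path or "/"
    if parts.query:
        path += "?" + parts.query
    try:
        conn.request("HEAD", path)
        response = conn.getresponse()
        return response.status, response.getheader("Location")
    finally:
        conn.close()


def trace_redirects(url, fetch=head_once, max_hops=10):
    """Follow a redirect chain and return [(url, status), ...].

    The last entry is the final destination of the chain.
    """
    chain = []
    for _ in range(max_hops):
        status, location = fetch(url)
        chain.append((url, status))
        if status not in REDIRECT_CODES or not location:
            return chain
        url = urljoin(url, location)
    raise RuntimeError(f"more than {max_hops} redirects starting at {chain[0][0]}")
```

If a chain starts with a 301 on the old domain and ends with a 404 on the new one, the fix belongs on the new domain (or in the redirect target), not in the old domain’s redirect rules.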

Image Search: Googlebot Image

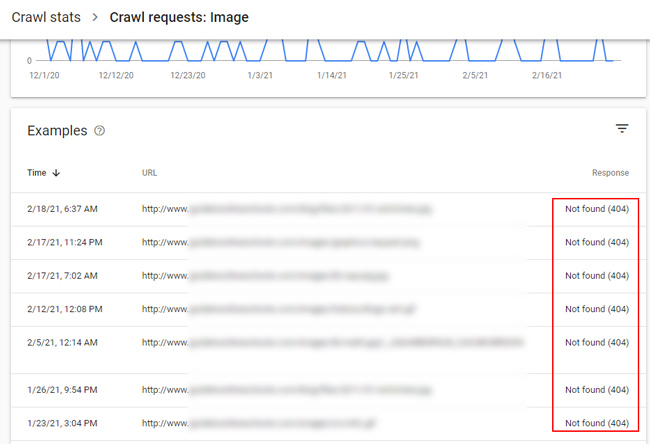

If image search is important for your business, you will definitely want to review the “By Googlebot type” section of the reporting. You will see a listing for “Image”. You can click into that reporting to see the urls Googlebot Image is crawling. If you see 404s, 5XX, etc., then make sure you jump on those issues quickly. You should see plenty of 301s if you redirected images properly during the migration (which you should). I covered that in my Mythbusting video with Google’s Martin Splitt about site migrations. The video can be seen later in this post.
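If you also have log files for the old domain, you can cross-check what the “Image” reporting shows. This Python sketch assumes the combined log format again and simply lists image requests from Googlebot-Image that did not receive a 301. The regex and function name are illustrative, not from any particular tool.

```python
import re

# Combined log format (an assumption; adapt the pattern to your server's layout).
LOG_LINE = re.compile(
    r'\S+ \S+ \S+ \[[^\]]+\] "\S+ (?P<path>\S+) [^"]*" '
    r'(?P<status>\d{3}) \S+ "[^"]*" "(?P<agent>[^"]*)"'
)


def image_hits_not_redirected(lines):
    """Return (path, status) for Googlebot-Image requests that did not get a 301."""
    flagged = []
    for line in lines:
        match = LOG_LINE.match(line)
        if not match or "Googlebot-Image" not in match.group("agent"):
            continue  # unparseable line, or not Googlebot-Image
        if match.group("status") != "301":
            flagged.append((match.group("path"), match.group("status")))
    return flagged
```

On the old domain, every image request from Googlebot-Image should ideally be a 301, so anything this returns is worth a look.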

As you can see below, Googlebot Image is coming across 404s as well. This is from the site that went through a domain name change.

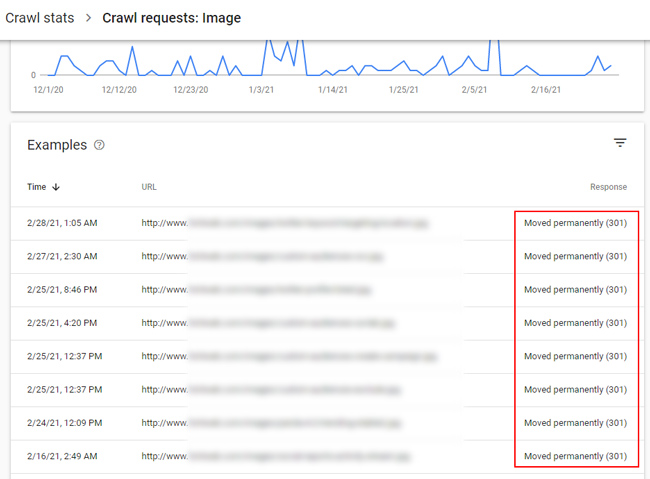

This is what you should see. Notice how the Googlebot Image requests all properly 301 redirect to the images on the new domain:

Robots.txt issues:

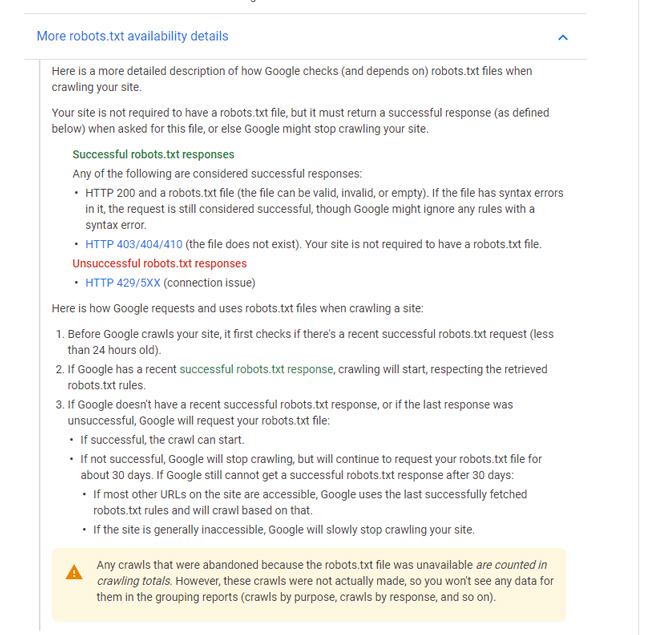

In the host issues section, you can see if Googlebot is having problems accessing the robots.txt file for the domain(s) involved. If Google cannot successfully fetch the robots.txt file (a fetch counts as successful when the file returns a 200 or a 403/404/410), then it will not crawl the site at that time. Google will check back later to see if it can fetch the robots.txt file, and if it can, crawling will resume. You can read more details about how this is handled on Google’s support page (or in the screenshot below). Note, you can 404 a robots.txt file and that’s absolutely fine. This is about Google having problems fetching the file (i.e., Google seeing a 429 or 5XX).

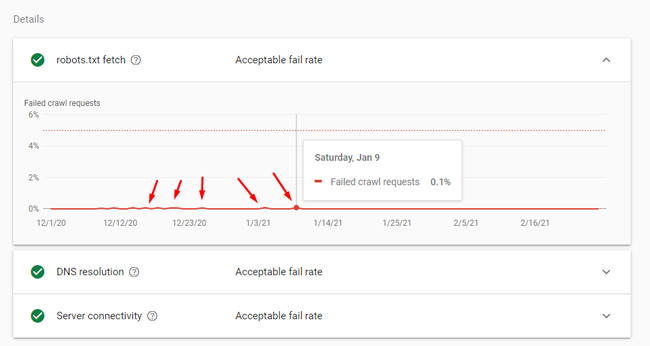

And here is what it can look like in GSC’s Crawl Stats reporting. Although this falls under an “acceptable fail rate”, I would still check why the robots.txt fetch is failing at all:

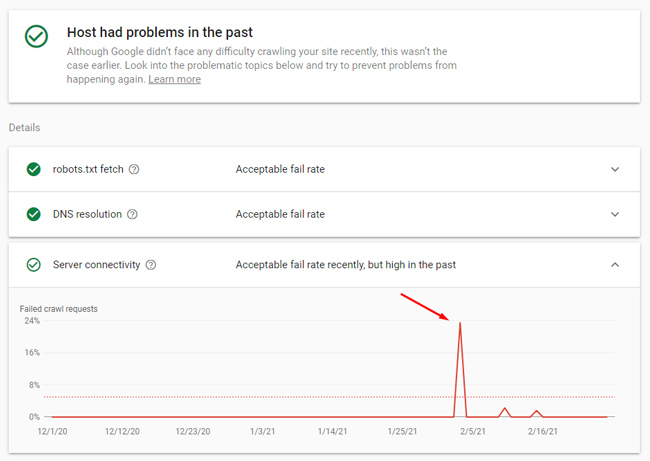
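To spot-check robots.txt yourself for each domain involved, here’s a quick Python sketch. The classification follows the simplified behavior described above (200 is fine, 4xx means “no robots.txt”, 429/5XX or no response means Google holds off crawling); Google’s documentation covers more nuance, such as how redirects and long-running errors are handled. Note that `urlopen` follows redirects, so the status reported is the final one. The function names and the example origin are hypothetical.

```python
from urllib.error import HTTPError
from urllib.request import urlopen


def classify_robots_status(status):
    """Map a robots.txt HTTP status to the (simplified) crawl outcome described above."""
    if status is None or status == 429 or 500 <= status <= 599:
        return "fetch failed: Google holds off crawling and retries later"
    if 200 <= status <= 299:
        return "ok: the rules are parsed and applied"
    if 400 <= status <= 499:
        return "no robots.txt: crawling proceeds without restrictions"
    return f"unexpected status {status}"


def check_robots(origin, timeout=10):
    """Fetch <origin>/robots.txt and return (status, classification)."""
    try:
        with urlopen(origin.rstrip("/") + "/robots.txt", timeout=timeout) as resp:
            status = resp.status
    except HTTPError as err:  # 4xx/5xx responses raise HTTPError in urllib
        status = err.code
    except OSError:  # DNS failures, refused connections, timeouts
        status = None
    return status, classify_robots_status(status)
```

For example, `check_robots("https://old.example")` for the old domain and the same call for the new one. A single successful check doesn’t rule out intermittent failures, which is exactly what the Crawl Stats fail rate captures.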

Other host issues: DNS Resolution and Server Connectivity:

Along the same lines, you can see if there are other host-level issues going on. The host reporting also contains DNS resolution errors and server connectivity problems. You obviously want to make sure Google can successfully resolve your hostname and connect to your site.

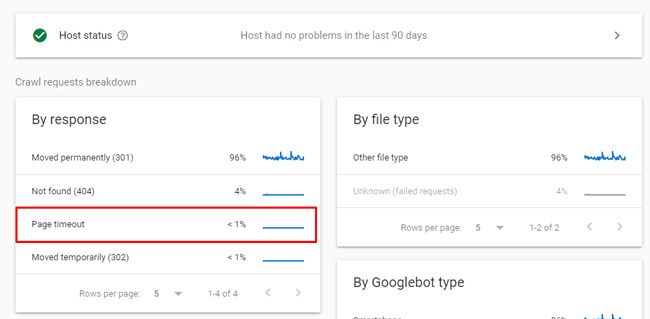
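If the host reporting flags DNS or connectivity problems, a basic check from your own machine can help confirm whether the problem is reproducible. This Python sketch resolves a hostname and then opens a plain TCP connection to it. It’s only a rough approximation: it runs from your network, not Google’s, so a clean result doesn’t guarantee Googlebot sees the same thing. The function name and result keys are my own.

```python
import socket


def check_host(hostname, port=443, timeout=5):
    """Check DNS resolution and TCP connectivity for a hostname."""
    result = {"hostname": hostname, "dns_ok": False, "addresses": [],
              "connect_ok": False, "error": None}
    try:
        infos = socket.getaddrinfo(hostname, port, type=socket.SOCK_STREAM)
    except OSError as err:  # socket.gaierror is a subclass of OSError
        result["error"] = f"DNS resolution failed: {err}"
        return result
    result["dns_ok"] = True
    result["addresses"] = sorted({info[4][0] for info in infos})
    try:
        with socket.create_connection((hostname, port), timeout=timeout):
            result["connect_ok"] = True
    except OSError as err:  # refused, unreachable, or timed out
        result["error"] = f"connection failed: {err}"
    return result
```

Running it for both the old and new hostnames (e.g. `check_host("www.new.example")`, a hypothetical host) is a quick sanity check before digging into server logs or DNS provider dashboards.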

Performance Problems:

The reporting will also show pages that are timing out for some reason, so keep an eye on that report. You will find that in the “By response” section.
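When you have a list of urls from that section (or from your own crawl), you can time them yourself to see whether the slowness is reproducible. Here’s a simple Python sketch that requests each url, flags timeouts, and flags responses slower than a threshold. The 5-second threshold and 10-second timeout are arbitrary defaults I picked for illustration, not values Google uses, and the `fetch` parameter lets you swap in another HTTP client.

```python
import socket
import time
from urllib.error import HTTPError, URLError
from urllib.request import urlopen

TIMEOUT_ERRORS = (TimeoutError, socket.timeout)  # the same class on Python 3.10+


def fetch_status(url, timeout):
    """Default fetcher: GET the URL, read the body, and return the status code."""
    with urlopen(url, timeout=timeout) as response:
        response.read()  # include the body download in the timing
        return response.status


def find_slow_pages(urls, threshold=5.0, timeout=10.0, fetch=fetch_status):
    """Return (url, note) pairs for pages that time out or exceed threshold seconds."""
    flagged = []
    for url in urls:
        start = time.perf_counter()
        try:
            fetch(url, timeout)
        except HTTPError:
            pass  # the server answered (e.g. with a 404), so the timing still counts
        except TIMEOUT_ERRORS:
            flagged.append((url, "timed out"))
            continue
        except URLError as err:
            if isinstance(err.reason, TIMEOUT_ERRORS):
                flagged.append((url, "timed out"))
            else:
                flagged.append((url, f"failed: {err.reason}"))
            continue
        elapsed = time.perf_counter() - start
        if elapsed > threshold:
            flagged.append((url, f"took {elapsed:.1f}s"))
    return flagged
```

Timing from a single location is noisy, so I’d treat anything it flags as a lead to investigate rather than proof of a performance problem.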

Subdomain Issues:

Hopefully you identified all subdomains that were in use before you pulled the trigger on the domain name change. But if you didn’t, you can see Crawl Stats reporting per subdomain that Google is crawling. The catch is that you need a domain property set up in GSC for the domain you are moving from (unless you had those subdomains verified and set up already in GSC).

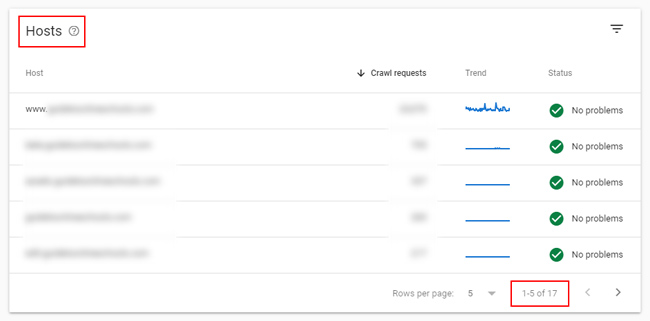

If you did have them set up, you could view the crawl stats reporting for those subdomains separately. Domain properties make this easier, since all subdomains being crawled by Google (the top 20 over the past 90 days) will be shown in the Hosts report in the Crawl Stats reporting.

Below, you can see that the crawl stats reporting shows 17 different subdomains with crawl requests over the past 90 days.

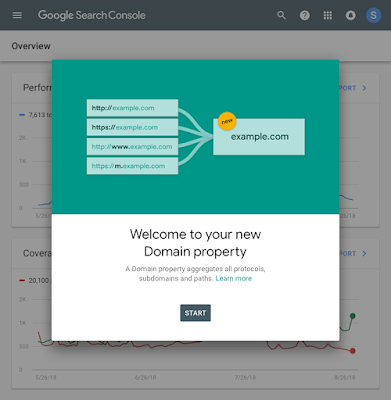
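If you want a second source for that subdomain list, you can build a rough inventory from urls you already have (log files, crawler exports, and so on). This Python sketch counts urls per hostname under a given registered domain, so you can compare the result against the hosts your redirect plan covers. The function name and the example domains are hypothetical.

```python
from collections import Counter
from urllib.parse import urlsplit


def hosts_under(urls, registered_domain):
    """Count URLs per hostname belonging to registered_domain, subdomains included."""
    domain = registered_domain.lower().strip(".")
    counts = Counter()
    for url in urls:
        host = urlsplit(url).hostname or ""  # .hostname is already lowercased
        # Require a dot boundary so "notold.example" doesn't match "old.example".
        if host == domain or host.endswith("." + domain):
            counts[host] += 1
    return counts
```

Any hostname that shows up here but isn’t in your redirect plan is a candidate gap.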

Note, I always recommend having a domain property set up. It’s amazing how many companies have not done this yet… If you haven’t, I would do that today. It doesn’t take long to set up and it covers all protocols and subdomains.

Crawl Stats For Site Migrations: Final Tips And Recommendations

The Crawl Stats reporting can help site owners and SEOs get closer to log file analysis, when gaining those logs might be tough. Although there’s a lag in the data populating (3-4 days), the Crawl Stats reporting can sure help surface problems during domain name changes and url migrations. And the quicker you can nip those problems in the bud, the less chance they become bigger issues SEO-wise.

Here are some final tips and recommendations:

- Set up domain properties for each of the domains involved in the migration (if changing domain names). This will give you access to all subdomains in the Crawl Stats reporting.

- Once data starts populating in the Crawl Stats reporting post-migration, dig into the domain you are moving from. You might see a number of issues there based on what I explained earlier. For example, 404s, performance issues, robots.txt problems, and more.

- Nail the redirection plan. If you see gaps and problems with your 301 redirects, move quickly to rectify those problems. Nip those problems in the bud.

- Check for host-level problems (like robots.txt fetch issues, DNS resolution issues, and server connectivity problems). Your redirection plan doesn’t matter if Google can’t successfully connect to your site.

- Look for pages that are timing out. This would show up in the “By response” section of the reporting. If you see that, dig into those problems to see why the pages are timing out. Again, move quickly to address performance issues.

- Don’t forget your images! Make sure to 301 redirect your images and then check the section labeled “By Googlebot type”. Then check the “Image” reporting to see how Googlebot Image is crawling your content.

More About Site Migrations: Mythbusting Video

If you are interested in site migrations, then you should check out the Mythbusting video I shot with Google’s Martin Splitt. In the video, we cover a number of important topics including domain name changes, url migrations, redirecting images, when a site should revert a migration, site merges, the Change of Address Tool in GSC, and more.

Summary – GSC’s Crawl Stats as a proxy for log files.

After reading this post, I hope you see the power in adding Google’s Crawl Stats reporting to your site migration checklist. The reporting provides a boatload of great information based on Google crawling your site post-migration. I’ve found it extremely useful when helping companies monitor and troubleshoot domain name changes, url migrations, and more. And remember, Murphy’s Law is real for site migrations. Things will go wrong… which is OK. The important part is how quickly you handle and rectify those problems.

GG