Last month I wrote a post covering a number of real-world mobile problems I surfaced on sites using separate mobile urls (like m-dot subdomains). With Google moving to a mobile-first index, it’s extremely important to make sure your mobile urls contain the same content, structured data, canonical tags, hreflang tags, etc. as your desktop urls. Since Google will use the mobile urls and content for ranking purposes once the switch to m-first happens, you can end up with ranking issues and a drop in Google organic search traffic if your mobile urls are inadequate. And that’s never a good thing for sites looking to grow.

Now, if a site is responsive or using dynamic serving, it should be fine. But for sites using separate mobile urls (like an m-dot subdomain), there could be many gremlins lurking around. That post covered actual problems I uncovered while helping companies prepare for Google’s mobile-first index. From botched switchboard tags to broken canonicals to double canonicals to rel alternate being delivered via the header response, companies could run into a number of problems that are tough to detect with the naked eye.
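For reference, here’s what a correct switchboard setup boils down to: the desktop page points to its m-dot equivalent with a rel=alternate tag that includes a media attribute, and the m-dot page points back to the desktop url with a single rel=canonical tag. Below is a minimal Python sketch (standard library only) that checks one desktop/m-dot pair of HTML strings for those two annotations, including the double canonical problem. The helper names and example.com urls are hypothetical, and the sketch only reads tags in the HTML, so annotations delivered via the header response would not be caught.

```python
from html.parser import HTMLParser


class LinkTagCollector(HTMLParser):
    """Collects the attributes of every <link> tag in a document."""

    def __init__(self):
        super().__init__()
        self.links = []

    def handle_starttag(self, tag, attrs):
        if tag == "link":
            # attrs arrives as (name, value) pairs; valueless attributes have value None
            self.links.append({name: (value or "") for name, value in attrs})


def link_tags(html):
    collector = LinkTagCollector()
    collector.feed(html)
    collector.close()
    return collector.links


def check_switchboard(desktop_url, desktop_html, mobile_url, mobile_html):
    """Hypothetical helper: list switchboard problems for one desktop/m-dot pair."""
    problems = []
    # Desktop side: rel="alternate" with a media attribute pointing at the m-dot url
    alternates = [
        link for link in link_tags(desktop_html)
        if link.get("rel", "").lower() == "alternate" and link.get("media")
    ]
    if not any(link.get("href") == mobile_url for link in alternates):
        problems.append("desktop page lacks a media rel=alternate pointing to the m-dot url")
    # Mobile side: exactly one rel="canonical" pointing back at the desktop url
    canonicals = [
        link for link in link_tags(mobile_html)
        if link.get("rel", "").lower() == "canonical"
    ]
    if len(canonicals) != 1:
        problems.append(f"m-dot page has {len(canonicals)} canonical tags (expected 1)")
    elif canonicals[0].get("href") != desktop_url:
        problems.append("m-dot canonical does not point to the desktop url")
    return problems


desktop_html = ('<link rel="alternate" media="only screen and (max-width: 640px)" '
                'href="https://m.example.com/page-1">')
mobile_html = ('<link rel="canonical" href="https://www.example.com/page-1">'
               '<link rel="canonical" href="https://m.example.com/page-1">')
print(check_switchboard("https://www.example.com/page-1", desktop_html,
                        "https://m.example.com/page-1", mobile_html))
# ['m-dot page has 2 canonical tags (expected 1)']
```

The media query shown is the common example from Google’s separate-urls guidance; your site may use a different breakpoint, which is why the sketch only requires that a media attribute be present.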

As part of my final recommendations in that post, I explained that it’s important to crawl your site as both Googlebot desktop and Googlebot for Smartphones to make sure the mobile crawl doesn’t surface many problems that aren’t present in the desktop crawl. It’s a great way to check your setup from both a desktop and mobile perspective. But I didn’t mention one tool that can really help sites using separate mobile urls, and I’m going to cover it in this post. If you are using separate mobile urls, I think you’re going to dig it. Read on.

Comparing Desktop to Mobile Crawls (with the help of DeepCrawl)

You can definitely crawl your desktop urls and mobile urls and dig in manually. That’s a smart thing to do. But comparing larger-scale sites using this method can get tough. Wouldn’t it be great if a crawling tool could help by comparing the two crawls for you? Then you could surface problems faster and spend your time digging into those problems versus scanning thousands of urls.

Enter DeepCrawl’s test site feature, which you can hack to compare against your mobile subdomain.
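To make the idea concrete, here’s a rough Python sketch of what “comparing two crawls” means at its simplest. It assumes each crawl has been exported as a mapping of url to HTTP status code (a hypothetical format, not DeepCrawl’s export) and that the m-dot mirrors the desktop paths, so urls are lined up by path and query string rather than by host.

```python
from urllib.parse import urlsplit


def path_key(url):
    """Key a url by path plus query so www and m-dot urls line up."""
    parts = urlsplit(url)
    return parts.path + ("?" + parts.query if parts.query else "")


def compare_crawls(desktop, mobile):
    """desktop/mobile: url -> HTTP status (hypothetical export format)."""
    d = {path_key(url): status for url, status in desktop.items()}
    m = {path_key(url): status for url, status in mobile.items()}
    return {
        "only_desktop": sorted(d.keys() - m.keys()),
        "only_mobile": sorted(m.keys() - d.keys()),
        # Paths crawled on both sites but answered with different status codes
        "status_changed": sorted(
            (path, d[path], m[path]) for path in d.keys() & m.keys() if d[path] != m[path]
        ),
    }


desktop = {"https://www.example.com/": 200, "https://www.example.com/shoes": 200,
           "https://www.example.com/about": 200}
mobile = {"https://m.example.com/": 200, "https://m.example.com/shoes": 404}
print(compare_crawls(desktop, mobile))
# {'only_desktop': ['/about'], 'only_mobile': [], 'status_changed': [('/shoes', 200, 404)]}
```

Keying by path is the simplest way to pair urls. If your m-dot uses different url patterns, you would need an explicit desktop-to-mobile mapping (for example, one built from the switchboard tags) instead.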

Hacking DeepCrawl’s Test Site Feature To Compare Mobile Versus Desktop URLs

If you’ve read my previous posts about audits, migrations, algorithm updates and more, then you have come across many mentions of DeepCrawl. It’s one of my favorite SEO tools and has been for a long time. Actually, I love it so much that I’m on the customer advisory board and have been a CAB member since the board launched a few years ago.

In DeepCrawl, there’s an option for crawling a test site. It’s been part of DeepCrawl for a long time and it’s a great way for SEOs to crawl and analyze a site while it’s in staging. Not only will it crawl a test site, but it will compare the test site to the production site. And if you’re following along with what I mentioned above about testing m-dots, then you might see where this is headed. :)

Although your m-dot is not a test site, you can hack DeepCrawl into thinking it’s a test site. Then it will crawl the mobile subdomain and compare it to the previous crawl (which can be a crawl of your desktop site). This technique was covered by Jon Myers in his presentation about preparing for Google’s mobile-first index. You should check out his presentation on SlideShare if you haven’t yet. And if you’re running a site that employs m-dots, then a lightbulb probably appeared above your head. Below, I’m going to explain how to set this up via DeepCrawl. Then I’ll cover some of the problems you can surface once the crawls have completed.

How to set up DeepCrawl to compare desktop and m-dot urls:

In order for DeepCrawl to compare separate mobile urls to desktop urls, you need an initial crawl of your desktop pages and then a second crawl of your “test site”, which will be your m-dot subdomain. Follow the steps below to set this up.

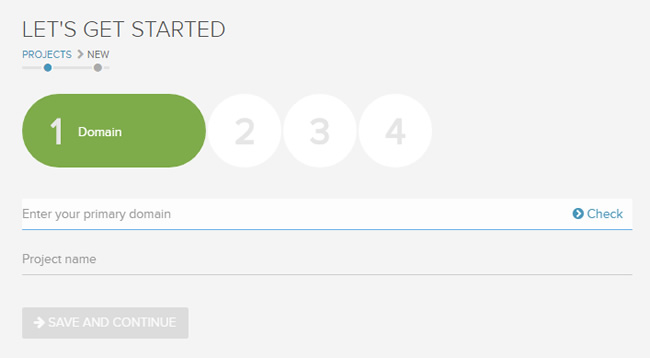

1. Set up a new crawl, enter your desktop domain and give the crawl a unique name.

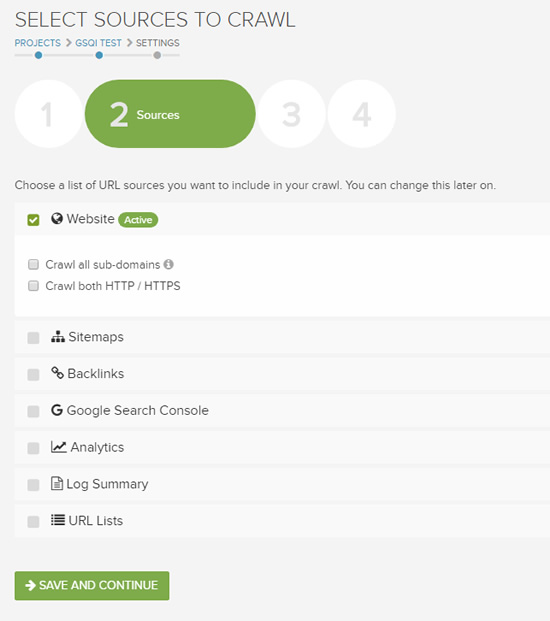

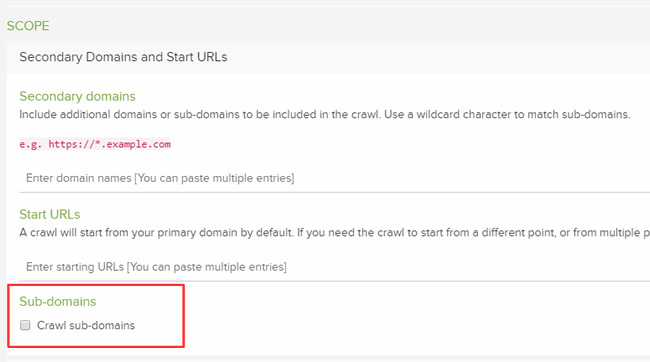

2. Check the box for “Website” crawl and do not check the box for “Crawl all subdomains”.

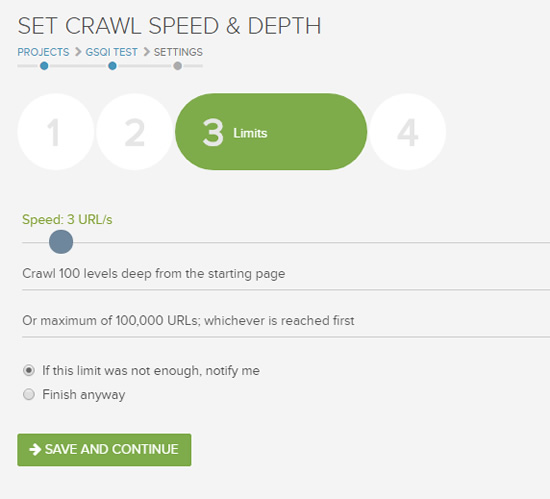

3. Set the maximum number of urls to crawl. I recommend crawling as many urls as you can (unless it’s a massive site). You want to make sure you are comparing apples to apples, so it’s best to get a significant sample from the site. For many sites, this won’t be a problem. If you have a large-scale site (greater than a few hundred thousand pages indexed), then you can craft strategies for crawling site sections, certain directories, etc.

4. Next, click “Advanced Settings”, which is marked with a gear icon. Again, make sure “Crawl subdomains” is not checked. We want to focus on the desktop site.

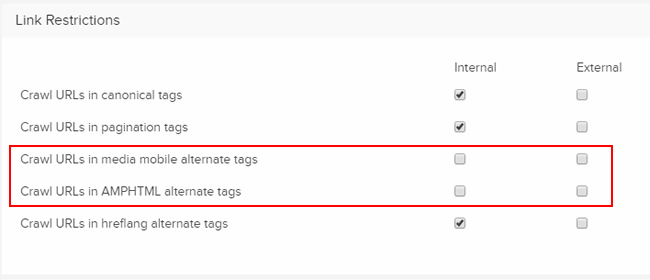

5. Under “Link Restrictions”, uncheck “Crawl URLs in media mobile alternate tags” and “Crawl URLs in AMPHTML alternate tags”. Again, we want the first crawl to be focused on our desktop urls.

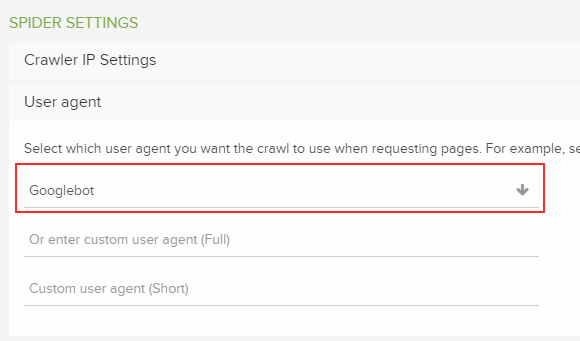

6. Under “Spider Settings”, select Googlebot as the user-agent for the first crawl. In the second crawl, we’ll use Googlebot for Smartphones.

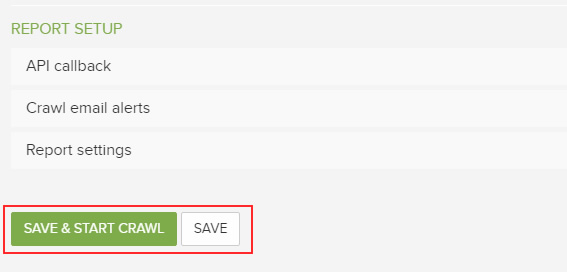

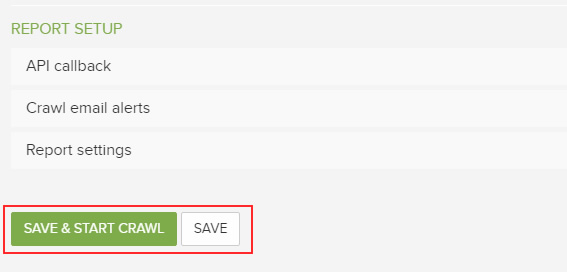

7. Then click “Save and Start Crawl” to begin the crawl of your desktop urls.

8. {Optional} While the crawl is running, you can relax and sip a frozen drink while reading my tweets about SEO. :)
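If it helps to see what the first crawl’s settings amount to, here’s a hypothetical Python sketch (not how DeepCrawl works internally): identify as Googlebot desktop and keep only urls on the exact desktop host, so m-dot alternates and other subdomains are skipped. The user-agent string is one of the Googlebot desktop strings Google documents; check Google’s crawler documentation for the current list. Hostnames are examples, and the requests are built but never sent.

```python
from urllib.parse import urlsplit
from urllib.request import Request

# One of the Googlebot desktop user-agent strings Google documents
# (check Google's crawler documentation for the current list).
GOOGLEBOT_DESKTOP_UA = "Mozilla/5.0 (compatible; Googlebot/2.1; +http://www.google.com/bot.html)"


def in_desktop_scope(url, desktop_host):
    """Mirror "don't crawl subdomains": only the exact desktop host is in scope."""
    return urlsplit(url).hostname == desktop_host


def desktop_request(url):
    """Build (but don't send) a request that identifies as Googlebot desktop."""
    return Request(url, headers={"User-Agent": GOOGLEBOT_DESKTOP_UA})


discovered = [
    "https://www.example.com/shoes",
    "https://m.example.com/shoes",    # mobile alternate: skipped in crawl one
    "https://blog.example.com/post",  # other subdomain: skipped
]
queue = [desktop_request(u) for u in discovered if in_desktop_scope(u, "www.example.com")]
print([r.full_url for r in queue])  # ['https://www.example.com/shoes']
```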

Crawl Two: Setting Up A Crawl Of Your Separate Mobile URLs (m-dot)

Once the first crawl completes, you’ll be ready to launch the second crawl (which will crawl your m-dot subdomain and enable DeepCrawl to compare the two crawls). Follow the instructions below to set this up:

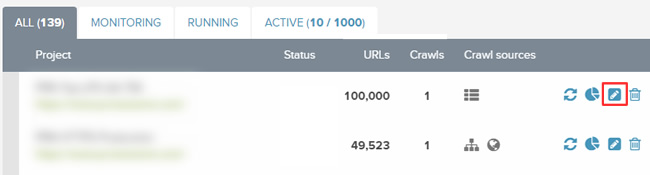

1. From the main projects screen in DeepCrawl, click the edit icon (a small pencil icon). This will enable you to edit the settings so you can correctly crawl your mobile subdomain.

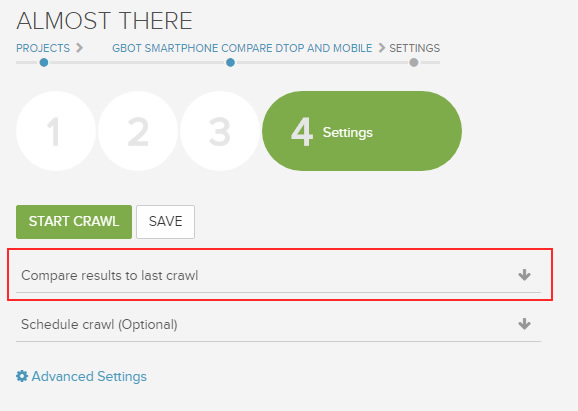

2. Jump to the “Settings” screen, which is the fourth screen when editing your crawl settings. First, make sure “Compare to last crawl” is selected. The first crawl you launch won’t have a previous crawl to compare against, and that’s normal. But the second crawl you launch should be compared to the first (basically comparing m-dot to desktop). Note, you can always select a specific crawl to compare to in case you launched additional crawls of your m-dot and wanted to compare to a previous crawl of desktop or m-dot.

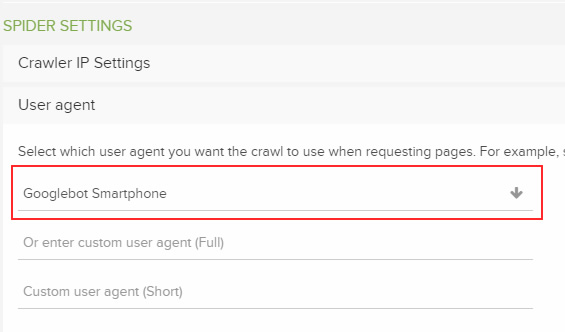

3. Click “Advanced Settings” (fourth screen) again and jump down to “Spider Settings”. Select Googlebot for Smartphones as the user-agent for this crawl.

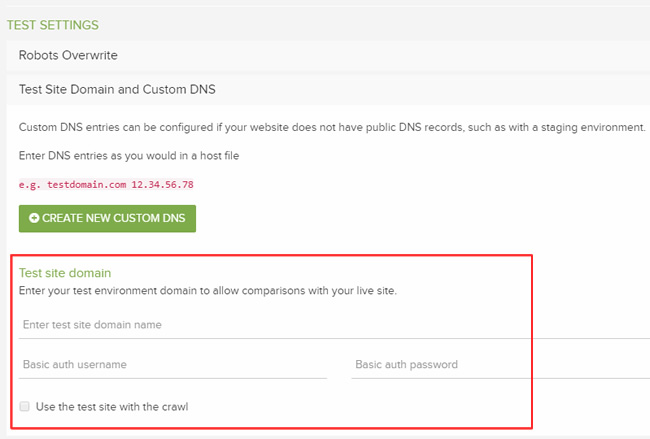

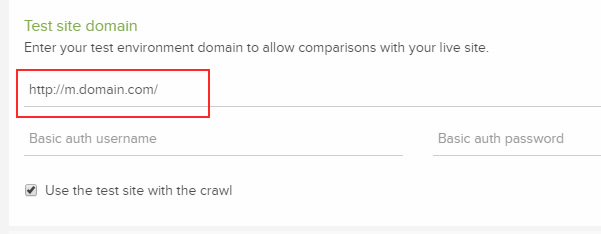

4. Next, jump down to Test Settings and select “Test Site Domain and Custom DNS”. Under “Test site domain”, you should enter your m-dot subdomain on the first line. And since this isn’t a real test server (remember, we’re hacking this feature to compare the m-dot to desktop), you don’t need to enter anything in the Basic auth username or Basic auth password fields. Also, make sure “Use the test site with the crawl” is checked.

5. That’s all you need to change in order for DeepCrawl to crawl your m-dot and then compare it to the previous desktop crawl. Click “Save and Start Crawl” to begin the crawl of your m-dot.

6. {Optional} Finish your frozen drink from earlier and read more tweets from me about SEO on Twitter. :)
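Conceptually, the second crawl swaps the desktop host for the “test site domain” and identifies as Googlebot for Smartphones. Here’s a rough Python sketch of that idea, again not DeepCrawl’s code. It assumes the m-dot uses the same paths as desktop, and the user-agent follows the pattern in Google’s crawler documentation, where W.X.Y.Z is Google’s placeholder for the current Chrome version.

```python
from urllib.parse import urlsplit, urlunsplit
from urllib.request import Request

# Googlebot for Smartphones user-agent pattern from Google's crawler docs;
# "W.X.Y.Z" is Google's placeholder for the current Chrome version.
GOOGLEBOT_SMARTPHONE_UA = (
    "Mozilla/5.0 (Linux; Android 6.0.1; Nexus 5X Build/MMB29P) "
    "AppleWebKit/537.36 (KHTML, like Gecko) Chrome/W.X.Y.Z Mobile Safari/537.36 "
    "(compatible; Googlebot/2.1; +http://www.google.com/bot.html)"
)


def to_test_domain(url, test_host):
    """Swap the host for the test site domain, keeping scheme, path and query."""
    parts = urlsplit(url)
    return urlunsplit((parts.scheme, test_host, parts.path, parts.query, parts.fragment))


def mobile_request(desktop_url, mdot_host):
    """Build (but don't send) the m-dot request as Googlebot for Smartphones."""
    return Request(to_test_domain(desktop_url, mdot_host),
                   headers={"User-Agent": GOOGLEBOT_SMARTPHONE_UA})


req = mobile_request("https://www.example.com/shoes?color=red", "m.example.com")
print(req.full_url)  # https://m.example.com/shoes?color=red
```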

Examples of problems you can surface when comparing m-dot to desktop:

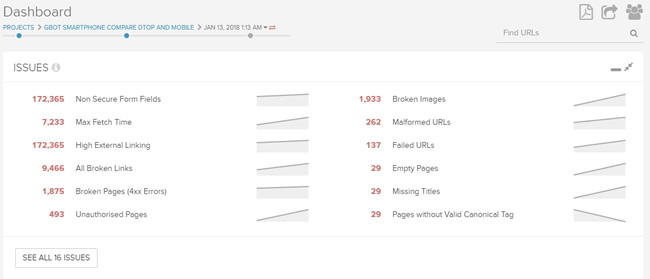

Once the mobile crawl finishes, you’re ready to dig into the data and surface gaps between your desktop and mobile sites. The dashboard view will show you the number of top issues surfaced overall while also showing a trending line for each problem compared to the previous crawl.

When employing separate mobile urls, you need to watch out for major gaps and differences between your desktop site and mobile site. That includes content differences, canonical problems, internal linking gaps, crawl errors, hreflang problems, structured data issues, and more. You should review all of the major categories in DeepCrawl to make sure you have a solid view of how the crawls compare. Below, I’ll cover a few examples of what you can find.

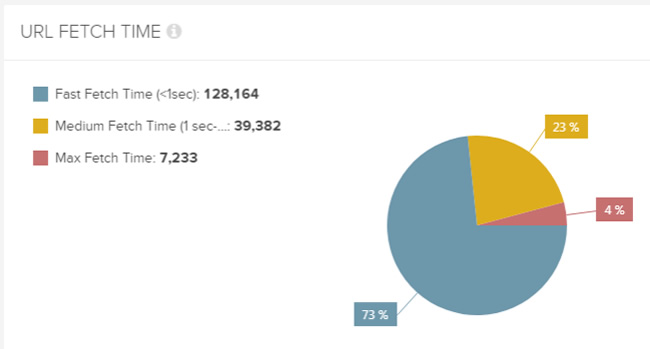

Max Fetch Time (Good timing based on Google’s latest announcement, pun intended.)

In the screenshot below, you can see a major difference between desktop and mobile in the number of urls that took a long time to load. There were over 7K urls with performance problems, including some urls taking up to 15 seconds to load.

Google just announced that mobile page speed will be a ranking factor as of July 2018, so this handy report can help you uncover mobile urls that might have performance problems.
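The underlying check is simple enough to sketch. Below is a minimal Python example that lists urls at or above a fetch-time threshold in each crawl, slowest first. The timings are made-up sample values and the 3-second threshold is hypothetical, so set it to match whatever cut-off your crawler reports against.

```python
def slow_urls(fetch_times, threshold_s):
    """Return (url, seconds) pairs at or above the threshold, slowest first."""
    slow = [(url, t) for url, t in fetch_times.items() if t >= threshold_s]
    return sorted(slow, key=lambda pair: pair[1], reverse=True)


# Made-up sample timings (seconds) for illustration only
desktop_times = {"https://www.example.com/": 0.8, "https://www.example.com/shoes": 1.2}
mobile_times = {"https://m.example.com/": 2.9, "https://m.example.com/shoes": 15.0}

THRESHOLD_S = 3.0  # hypothetical cut-off
print(len(slow_urls(desktop_times, THRESHOLD_S)), len(slow_urls(mobile_times, THRESHOLD_S)))  # 0 1
print(slow_urls(mobile_times, THRESHOLD_S))  # [('https://m.example.com/shoes', 15.0)]
```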

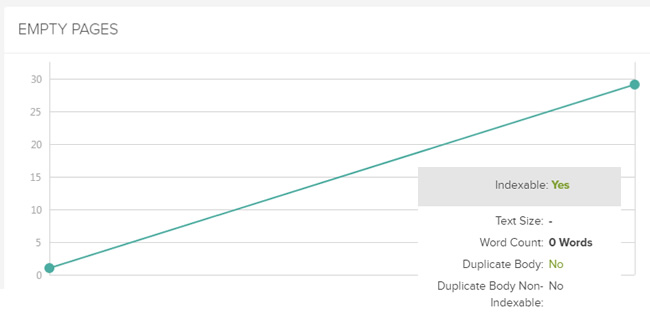

Thin content and empty pages

You might find more thin content or even empty pages in the mobile crawl compared to desktop. With Google’s mobile-first index, mobile pages will be used for rankings (once your site is switched to the m-first index). Therefore, you don’t want a situation where the desktop urls contain high-quality content, but some of the mobile urls are thin or even blank.
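As a rough illustration, you can compare visible word counts for a desktop page and its m-dot equivalent. The Python sketch below skips script and style content using the standard library’s HTML parser and flags an m-dot page that is empty or has less than half the desktop word count. The 50% ratio is an arbitrary, hypothetical cut-off, and word counts are only a proxy for content parity.

```python
from html.parser import HTMLParser


class TextExtractor(HTMLParser):
    """Collects text that sits outside <script> and <style> elements."""

    def __init__(self):
        super().__init__()
        self.skip_depth = 0
        self.chunks = []

    def handle_starttag(self, tag, attrs):
        if tag in ("script", "style"):
            self.skip_depth += 1

    def handle_endtag(self, tag):
        if tag in ("script", "style") and self.skip_depth:
            self.skip_depth -= 1

    def handle_data(self, data):
        if not self.skip_depth:
            self.chunks.append(data)


def word_count(html):
    extractor = TextExtractor()
    extractor.feed(html)
    extractor.close()
    return len(" ".join(extractor.chunks).split())


def thin_on_mobile(desktop_html, mobile_html, min_ratio=0.5):
    """Hypothetical rule: an empty m-dot page, or one below min_ratio of desktop words."""
    d, m = word_count(desktop_html), word_count(mobile_html)
    return m == 0 or (d > 0 and m / d < min_ratio)


desktop_html = ("<html><body><h1>Red running shoes</h1>"
                "<p>Lightweight, breathable and built for long runs.</p></body></html>")
mobile_html = "<html><body><script>var x = 1;</script></body></html>"
print(word_count(desktop_html), word_count(mobile_html))  # 10 0
print(thin_on_mobile(desktop_html, mobile_html))  # True
```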

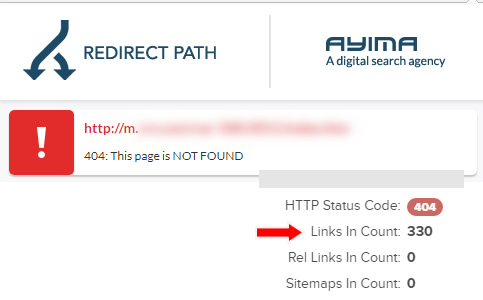

404s (Page Not Found)

You might find a spike in 404s on the mobile site, which can obviously cause big problems SEO-wise (if the pages should resolve with 200s and contain content matching the desktop alternatives). In this example, there’s definitely an increase in 404s on the m-dot, and the company will need to rectify the problems soon (as the mobile-first index approaches). Combine this report with the broken links report and you can hunt down which pages contain links to the 404s.
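Here’s a minimal Python sketch of that combination: given the status codes from a crawl and the links found on each page (both as plain dictionaries, a hypothetical format), it lists every 404 along with the pages linking to it.

```python
from collections import defaultdict


def pages_linking_to_404s(statuses, links):
    """statuses: url -> HTTP status; links: source url -> list of linked urls."""
    broken = defaultdict(set)
    for source, targets in links.items():
        for target in targets:
            if statuses.get(target) == 404:
                broken[target].add(source)
    return {url: sorted(sources) for url, sources in broken.items()}


statuses = {
    "https://m.example.com/": 200,
    "https://m.example.com/shoes": 404,
    "https://m.example.com/boots": 200,
}
links = {
    "https://m.example.com/": ["https://m.example.com/shoes", "https://m.example.com/boots"],
    "https://m.example.com/boots": ["https://m.example.com/shoes"],
}
print(pages_linking_to_404s(statuses, links))
# {'https://m.example.com/shoes': ['https://m.example.com/', 'https://m.example.com/boots']}
```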

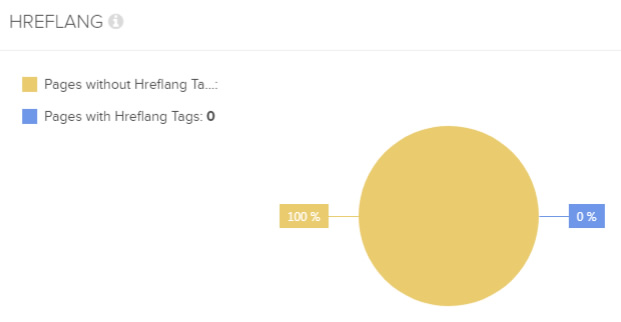

Pages Without hreflang

For sites providing content in multiple languages, hreflang is a great solution. It enables you to tell Google which urls provide the content in which languages. Then Google can match up the correct page with the correct user based on language. When using hreflang, many site owners provide a “cluster” of alternate urls in the head of the document. You can also supply hreflang via sitemaps and via the header response, but many provide the tags directly in the code. If those tags are missing on your mobile urls, then hreflang will not work as expected. In the screenshot below, you can see a spike in pages without hreflang. There’s clearly a problem with the site publishing hreflang tags on the m-dot.
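A minimal Python sketch of that check: collect the hreflang annotations from a desktop page and its m-dot equivalent, then list the language codes that exist in the desktop cluster but are missing on mobile. It only reads link tags in the HTML (not sitemaps or the header response), and the urls are examples.

```python
from html.parser import HTMLParser


class HreflangCollector(HTMLParser):
    """Collects hreflang -> href from <link rel="alternate" hreflang="..."> tags."""

    def __init__(self):
        super().__init__()
        self.hreflang = {}

    def handle_starttag(self, tag, attrs):
        if tag != "link":
            return
        attr = {name: (value or "") for name, value in attrs}
        if attr.get("rel", "").lower() == "alternate" and attr.get("hreflang"):
            self.hreflang[attr["hreflang"].lower()] = attr.get("href", "")


def hreflang_tags(html):
    collector = HreflangCollector()
    collector.feed(html)
    collector.close()
    return collector.hreflang


def missing_on_mobile(desktop_html, mobile_html):
    """Language codes in the desktop hreflang cluster that the m-dot page lacks."""
    return sorted(hreflang_tags(desktop_html).keys() - hreflang_tags(mobile_html).keys())


desktop_html = ('<link rel="alternate" hreflang="en-us" href="https://www.example.com/us/">'
                '<link rel="alternate" hreflang="de-de" href="https://www.example.com/de/">')
mobile_html = '<link rel="alternate" hreflang="en-us" href="https://m.example.com/us/">'
print(missing_on_mobile(desktop_html, mobile_html))  # ['de-de']
```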

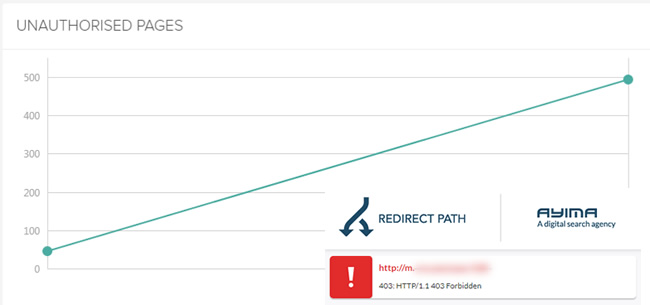

403s Spike on Mobile (Forbidden)

Well, that’s not good. You can clearly see a spike in 403s on the mobile site for some reason, and you would want to dig in and find out why that’s happening. That’s a sinister SEO problem that can definitely hurt rankings. I’ve seen some pages return 403s that load perfectly (so the urls are returning 403s, but they look like 200s).
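One way to catch those “403s that look like 200s” at scale is to flag 403 responses that still carry a substantial amount of content. The Python sketch below assumes you have the status code and word count for each crawled url; the CrawledPage type and the 100-word cut-off are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class CrawledPage:
    """Hypothetical record for one crawled url."""
    url: str
    status: int
    word_count: int


def suspicious_403s(pages, min_words=100):
    """403 responses that still render real content (hypothetical min_words cut-off)."""
    return [p.url for p in pages if p.status == 403 and p.word_count >= min_words]


pages = [
    CrawledPage("https://m.example.com/shoes", 403, 850),
    CrawledPage("https://m.example.com/admin", 403, 12),
    CrawledPage("https://m.example.com/boots", 200, 640),
]
print(suspicious_403s(pages))  # ['https://m.example.com/shoes']
```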

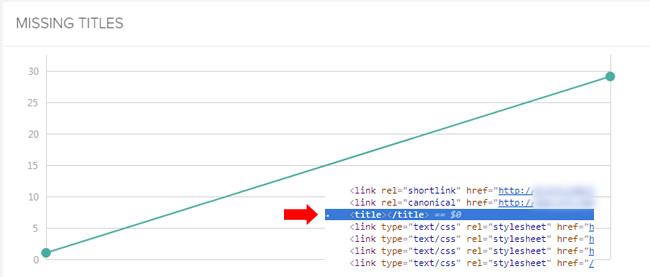

Missing Titles

When using separate mobile urls, it’s easy for some site owners to pay less attention to the m-dot, for example by not properly optimizing each mobile page. In the screenshot below, you can see a spike in missing title tags (where the title tags are completely missing from the page or empty). Again, when your site gets switched to the mobile-first index, the mobile urls and content will be used for ranking purposes. You don’t want many urls without title tags when that happens.
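For a quick spot check outside of a crawler, here’s a minimal Python sketch that classifies a page’s title tag as missing, empty, or present, using only the standard library. The class and function names are hypothetical.

```python
from html.parser import HTMLParser


class TitleParser(HTMLParser):
    """Captures the text of the first <title> element, if any."""

    def __init__(self):
        super().__init__()
        self.in_title = False
        self.title = None  # stays None when the page has no <title> element

    def handle_starttag(self, tag, attrs):
        if tag == "title" and self.title is None:
            self.in_title = True
            self.title = ""

    def handle_endtag(self, tag):
        if tag == "title":
            self.in_title = False

    def handle_data(self, data):
        if self.in_title:
            self.title += data


def title_problem(html):
    """Return 'missing', 'empty', or None when the page has a usable title."""
    parser = TitleParser()
    parser.feed(html)
    parser.close()
    if parser.title is None:
        return "missing"
    if not parser.title.strip():
        return "empty"
    return None


for page in ["<head><title>Red Shoes | Example</title></head>",
             "<head><title> </title></head>",
             "<head></head>"]:
    print(title_problem(page))  # None, then empty, then missing
```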

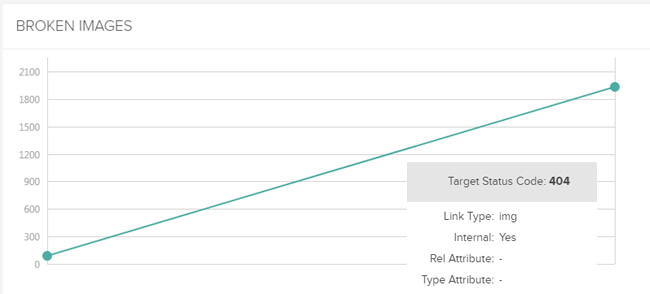

Broken Images

When comparing m-dot to desktop, you might also find problems with the images on your site. Again, many site owners neglect their m-dots as they focus on desktop. Below, you can see a spike in broken images on the mobile subdomain. Broken images could obviously impact image search, while also hurting the user experience. It’s hard to have confidence in a site when the page looks broken. And it’s hard for images to rank in image search if they return 404s.
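Here’s a minimal Python sketch of the broken image check: pull the img src values from a page, resolve relative paths against the page url, and flag any image the crawl recorded with a 4xx or 5xx status. The status mapping is a hypothetical crawl export, and images that were never crawled are not flagged.

```python
from html.parser import HTMLParser
from urllib.parse import urljoin


class ImageCollector(HTMLParser):
    """Collects the src attribute of every <img> tag."""

    def __init__(self):
        super().__init__()
        self.srcs = []

    def handle_starttag(self, tag, attrs):
        if tag == "img":
            src = dict(attrs).get("src")
            if src:
                self.srcs.append(src)


def broken_images(page_url, html, statuses):
    """Image urls on a page that the crawl recorded with a 4xx/5xx status.

    statuses maps url -> HTTP status (hypothetical crawl export); images
    missing from it were never crawled and are not flagged.
    """
    collector = ImageCollector()
    collector.feed(html)
    collector.close()
    resolved = [urljoin(page_url, src) for src in collector.srcs]
    return [url for url in resolved if statuses.get(url, 0) >= 400]


html = '<img src="/img/red-shoe.jpg" alt=""><img src="https://cdn.example.com/boot.jpg">'
statuses = {"https://m.example.com/img/red-shoe.jpg": 404, "https://cdn.example.com/boot.jpg": 200}
print(broken_images("https://m.example.com/shoes", html, statuses))
# ['https://m.example.com/img/red-shoe.jpg']
```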

I think you get the picture. As you can see, DeepCrawl can surface many issues when comparing m-dots to desktop sites (and many of those issues could be invisible to the naked eye). When you crawl at scale, you have a better shot at picking up those issues, which can help you fix important problems before your site is switched to Google’s mobile-first index.

Summary: Hacking is not always a bad thing (when DeepCrawl is involved)

The process I documented in this post covers a smart way to hack the “test site” feature in DeepCrawl to compare separate mobile urls to desktop. Given Google’s mobile-first index, and Google’s announcement about mobile page speed becoming a ranking factor, it’s important to compare your mobile and desktop sites now (and not after the fact). If you compare them now, you can nip problems in the bud (which can help the site in the short-term and after Google’s changes go live). So hack away. :)

GG