A few weeks ago, I was contacted by a small business owner about my SEO services. And what started out as a simple check of a website turned into an interesting case study about hidden SEO dangers. The company has been in business for a long time (30+ years), and the owner was looking to boost the site’s SEO performance over the long term. From the email and voicemail I received, it sounded like they were struggling to rank well across important target queries and wanted to address that ASAP. I also knew they were running AdWords to provide air cover for SEO (which is smart, but definitely not a long-term plan for their business).

Unfortunately, my schedule has been crazy and I knew I couldn’t take them on as a longer-term client. But, I still wanted to quickly check out their website to get a better feel for what was going on. And it took me about three minutes to notice a massive problem (one that is killing their efforts to rank for many queries). And that’s a shame because they probably should rank for those keywords based on their history, services, content, etc.

Surfacing a Giant SEO Problem

As I browsed the site, I noticed they had a good amount of content for a small business. The site had a professional design, it was relatively clean from a layout perspective, and provided strong content about their business, their history, news about the organization, the services they provided, and more.

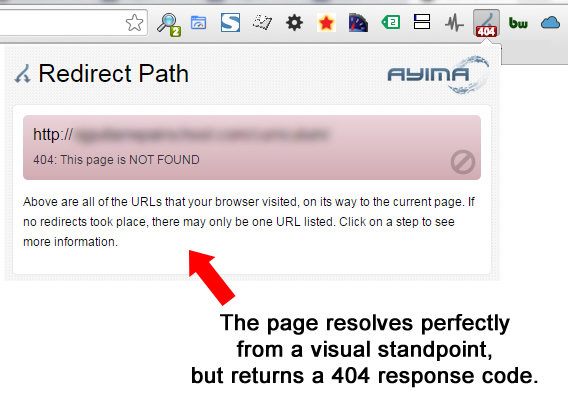

But then it hit me. Actually, it was staring me right in the face. I noticed a small 404 icon when hitting one of their service pages (via the Redirect Path Chrome extension). OK, so that’s odd… The page renders fine, the content and design show up perfectly, but the page 404s (returning a Page Not Found error). It’s like the opposite of a soft 404, which is where the page looks like a 404 but actually returns a 200 code. In this situation, the page looks like a 200 but returns a 404 instead. I guess you can call it a “soft 200”.

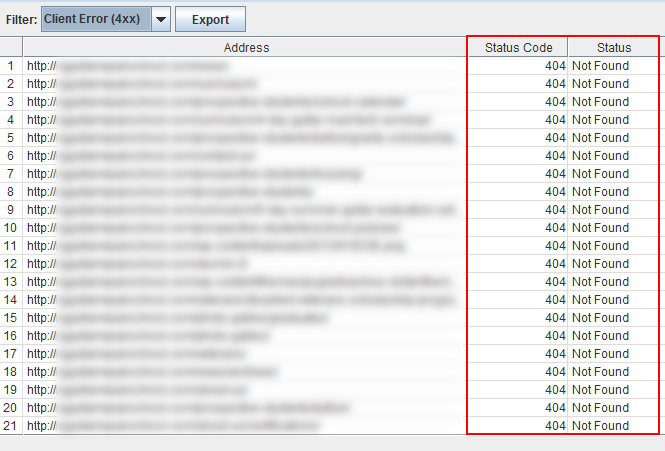

So I started to visit other pages on the site and more 404 header response codes followed. Actually, almost every single page on the site was throwing a 404 header response code. Holy cow, the initial 404 was just the tip of the iceberg.

After seeing 404s pop up all over the site, I quickly decided to crawl the website via Screaming Frog. I wanted to see how widespread the problem was. And it turns out that my initial assessment was spot on. Almost every page on the site returned a 404 header response code. The only pages that didn’t were the homepage and some PDFs. But every other page, including the services pages, news pages, about page, contact, etc., returned a 404.

If you’re familiar with SEO, then you know how this problem can impact a website. But for those of you unfamiliar with 404s and how they impact SEO, I’ll provide a quick rundown next. Then I’ll jump back to the story.

What is a 404 Header Response Code?

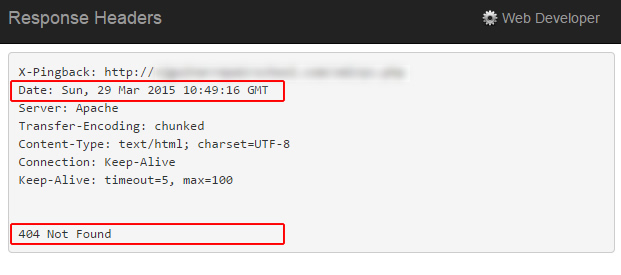

Every time a webpage is requested, the server will return a header response code (also known as an HTTP status code). There are many that can be returned, but there are some standard codes you’ll come across. For example, 200 means the page returned OK, 301 means permanent redirect, 302 is a temporary redirect, 403 is forbidden, 404 means page not found, and 500 is an internal server error.
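If you want to see the standard names for the codes mentioned above, here is a minimal sketch in Python. It relies on the `HTTPStatus` enum from Python’s standard library. The `describe` helper is just a name I picked for illustration.

```python
from http import HTTPStatus


def describe(code: int) -> str:
    """Return the standard reason phrase for an HTTP status code."""
    return HTTPStatus(code).phrase


# Print the codes covered in this post alongside their standard phrases.
for code in (200, 301, 302, 403, 404, 500):
    print(code, describe(code))
```

Note that the official phrase for a 302 is “Found”, even though most SEOs refer to it as a temporary redirect.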

Header response codes are extremely important to understand for SEO. If you want a webpage indexed, then you definitely want it to return a 200 response code (which again, means OK, the request has succeeded). But if the page returns a 404, then that tells the engines that the page was not found and that it should be removed from the index. Yes, read that last line again. 404s basically inform Google and Bing that the page is gone and that it can be removed from each respective index. That means it will have no shot of ranking for target keywords.

And from an inbound links perspective, 404s are a killer. If a page 404s, then it cannot benefit from any inbound links pointing at the url. And the domain itself cannot benefit either (at an aggregate level). So 404s will get urls removed from Google’s index and can hamper your link equity (at the url level and at the domain level). Not good, to say the least.

Side Note: Checking Response Codes

Based on what I’ve explained, some of you reading this post might be wondering how to easily check your header response codes. And you definitely should. I won’t cover the process in detail in this post, but I will point you in the right direction. There are several tools to choose from and I’ll include a few below.

You could Fetch as Google in Google Webmaster Tools to check the response sent to Googlebot (which includes the header response code). You can also use a browser plugin like Web Developer Tools or Redirect Path to quickly check header response codes on a url by url basis.
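If you’d rather not install anything, a few lines of Python can perform the same kind of url by url check. The sketch below is only a rough starting point: the function names and the user agent string are my own placeholders, and it uses the standard library’s `urllib`, which follows redirects automatically (so a 301 will show up as the status of the final page, not as a 301).

```python
import urllib.error
import urllib.request


def fetch_status(url: str, timeout: float = 10.0) -> int:
    """Return the final HTTP status code for a url (redirects are followed)."""
    request = urllib.request.Request(
        url,
        # Placeholder user agent for this sketch, not a real crawler name.
        headers={"User-Agent": "status-check-sketch/0.1"},
    )
    try:
        with urllib.request.urlopen(request, timeout=timeout) as response:
            return response.status
    except urllib.error.HTTPError as err:
        # urllib raises on 4xx/5xx responses; the code is on the exception.
        return err.code


def find_non_200(urls, fetch=fetch_status) -> dict:
    """Map each url that does not return a 200 to the status it returned."""
    problems = {}
    for url in urls:
        status = fetch(url)
        if status != 200:
            problems[url] = status
    return problems
```

You would call `find_non_200` with a short list of your own urls (for example, your homepage and a few service pages) and review anything it returns. It does not discover links on its own, so it works for a handful of pages but is not a replacement for a crawler.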

Fetch as Google and browser plugins are great, but they only let you process one url at a time. But what if you wanted to check your entire site in one shot? For situations like that, you could use a tool that crawls an entire website (or sections of a site). For example, you could use Xenu or Screaming Frog for small to medium sized sites and then a tool like Deep Crawl for larger-scale sites. All three will return a boatload of information about your pages, including the header response codes. Now back to the case study.

Dangerous, But Invisible to the Naked Eye

Remember, the entire site was returning 404 header response codes, other than the homepage and a few pdfs. But this 404 situation was sinister since the webpages looked like they resolved ok. You didn’t see a standard 404 page, but instead, you saw the actual page and content. But, the pages were actually 404ing and not being indexed. Like I said, it was a sinister problem.

Based on what I just explained, you could tell why an SMB owner would be baffled and simply not understand why their website wasn’t ranking well. They could see their site, their content, the various pages resolving, but they couldn’t see the underlying problem. Header response codes are hidden to the naked eye, and most people don’t even realize they are being returned at all. But the response code returned is critically important for how the search engines process your webpages.

My Response – “You’re At SEO Defcon 2”

This was a tough situation for me. I absolutely wanted to help the business longer-term, but couldn’t based on my schedule. Still, I wanted to make sure they understood the problem I came across while quickly checking out their website.

So I crafted a quick email explaining that I couldn’t help them at this time, but that I found a big problem on their site. As quickly and concisely as I could, I explained the 404 situation, provided a few screenshots, and explained they should get in touch with their designer, developer, or hosting provider to rectify the situation ASAP. That means ensuring their webpages return the proper header response codes. Basically, I told them that if their webpages should be indexed, then they should return a 200 header response code and not the 404s being returned now.

I hit “Send” and the ball was in their court.

Their Response – “We hear you and we’re on the right track – we think.”

I heard back from the business owner who explained they started working with someone to rectify the problem. They clearly didn’t know this was going on and they were hoping to have the situation fixed soon.

But as of today, the problem is still there. The site still returns 404 header response codes on almost every page. That’s unfortunate, since again, the pages returning a 404 have no chance at all of ranking in search and cannot help them from a link equity standpoint. The pages aren’t indexed and the site is basically telling Google and Bing to not index any of the core pages on the site.

I’m going to keep an eye on the situation to see when the changes take hold. And I hope that’s soon. It’s a great example of how hidden technical dangers can destroy SEO.

Opening Up The Site – How Will The Engines Respond?

My hope is that when the pages return the proper response codes, Google and Bing will begin indexing the pages and ranking them appropriately. And that will help on several levels. The website can drive more prospective customers via organic search, while the business can probably pull back on AdWords spend. And the site can grow its power from an inbound link standpoint as well, once the pages are being indexed properly.

But as I often say about SEO, it’s all about the execution. If they don’t implement the necessary changes, then their situation will remain as-is. I’ll try and update this post if the situation improves.

Summary – Know Your Header Response Codes

Although hidden to the naked eye, header response codes are critically important for SEO. The right codes will enable the engines to properly crawl and index your webpages, while the wrong codes could lead to SEO disaster. I recommend checking your site today (via both manual checks and a crawl). You might find you’re in the clear with 200s, but you also might find some sinister 404s. So check now.

GG

Very interesting, and something that people without a technical background might not know about. Any idea why the pages were 404’d? Do you think it’s a CMS issue or just a bad chunk of code hidden somewhere?

Also, thank you for the recommendations for browser extensions. I can really benefit from them.

Hard to say without digging in. My guess is that it’s a CMS issue. I would think it’s a straight-forward problem to rectify, but the problem is still there today. I’ll try and follow up with them to see how things are going, what the core problem was, etc.

And no problem regarding the tools. The plugins are great for quick checks, while the crawl tools are awesome for checking an entire site (or a group of urls in bulk). Thanks for the comment!

I’ve got BeamUsUp for crawling which has the advantage of costing nothing, but the disadvantage of being terribly slow. Screaming Frog seems to be the industry standard so I may have to look into upgrading.

I’ve seen the same thing several times in the Google Webmaster Help Forum – I’ve learned to take it another step further and use the Googlebot user agent when auditing sites because (although rare) I’ve seen 200 header for users and 404 for Googlebot. I don’t know how you would accomplish such a feat but it’s possible.

Great point Rick. That could be happening too… and that’s even more sinister. My hope is that more SMBs start checking their pages via the tools listed above (and nip problems like this in the bud). I’ll try and update this post if the situation changes.
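For anyone who wants to try the user agent check Rick describes, here is a rough sketch in Python. It requests the same url twice, once with a browser-style user agent and once with Googlebot’s published user agent string, and flags any difference in status codes. The function names and the browser string are my own placeholders. Keep in mind that this only surfaces behavior keyed on the user agent header. A server that treats Googlebot differently based on IP address would not be caught this way.

```python
import urllib.error
import urllib.request

# Example browser-style user agent (placeholder; any current browser string works).
BROWSER_UA = (
    "Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 "
    "(KHTML, like Gecko) Chrome/120.0 Safari/537.36"
)
# Googlebot's published desktop user agent token.
GOOGLEBOT_UA = "Mozilla/5.0 (compatible; Googlebot/2.1; +http://www.google.com/bot.html)"


def status_for(url: str, user_agent: str, timeout: float = 10.0) -> int:
    """Return the final HTTP status code for url when sent with user_agent."""
    request = urllib.request.Request(url, headers={"User-Agent": user_agent})
    try:
        with urllib.request.urlopen(request, timeout=timeout) as response:
            return response.status
    except urllib.error.HTTPError as err:
        # 4xx/5xx responses arrive as exceptions; the code is still available.
        return err.code


def compare_user_agents(url: str, fetch=status_for):
    """Return the status per user agent and whether the two differ."""
    statuses = {
        "browser": fetch(url, BROWSER_UA),
        "googlebot": fetch(url, GOOGLEBOT_UA),
    }
    return statuses, statuses["browser"] != statuses["googlebot"]
```

If the second value comes back `True` for a page, that page is worth a closer look with Fetch as Google.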

This is really interesting, I use Screaming Frog on a regular basis for my sites but I’ll be checking out those plugins too. I hope the site in question gets the problem resolved!

Thanks Jay. I hope they get it resolved soon too! I’ll definitely try and update this post if the situation changes. And hopefully that’s good news. :)

Wow, now that’s something I’ve never come across. Really interesting, though. I use Screaming Frog and the Redirect Path plugin, but I would have been utterly perplexed if I’d stumbled onto this issue without having read this first, so thanks for sharing. I’m curious to know for sure if it was a CMS issue… and how they rectified it. I’ll watch for updates…

Right, it’s a big problem that lies hidden to the naked eye. Very dangerous for sure. I’ll let you know what I find, but I’m a step closer to understanding what’s going on. I’ll update the post when I know for sure.

Great read Glenn, thank you. What do you think of the approach in cases when a page is deliberately taken down for redirecting visitors to a new page – Of course a 301 would be best in this case, but the desired signal to send to Google would be “hey, this page isn’t here anymore, so we are just showing this other arbitrary page to visitors so that they don’t have to see a 404 message. (but this is not a 301 because the arbitrary page is not intended as a replacement to the pages being taken down)” Should a 404 get sent but then redirect the visitor to the new page? That appears to result in a soft 404 in WMT. Does that make sense?

If you can send users to a new page for the content at hand, or to an extremely relevant page, then a 301 would work well. If you don’t have an opportunity to do that, then I would 404 the page. And if there are a good number of high quality links to that original page, then I would try and retain the url if possible (by updating the content, rewriting it, etc.). I hope that helps.

This is really unusual! I haven’t seen this rare issue with my site yet, but yesterday while reading a blog post, I noticed that my little Redirect Path extension was showing a red marked 404. This post explains just that, but it didn’t completely give the answer. Is there a workaround for such cases if it ever happens to my site in the future? What do you think Glenn?

Depends on the site and the CMS. Could be a simple fix in certain situations. I would check with the dev team first, then the hosting provider. Between the two, you should be able to get the problem fixed quickly. I’ll try and post an update here soon about this case, since they did fix the problem. More to come. :)