If you’ve read my previous posts, then you already know that SEO audits are a core service of mine. I still believe they are the most powerful deliverable in all of SEO. So when prospective clients call me for help, I’m quick to start checking some of their stats while we’re on the phone. As crazy as it sounds, there are times when even a few quick checks can reveal major SEO problems. And that’s exactly what happened recently while I was on the phone with a small business owner who was wondering why SEO wasn’t working for them.

While performing audits, I’ve found some really scary problems, the kind that spark nightmares for SEOs. But what I witnessed recently had me falling out of my seat (literally). It was a robots.txt issue that was causing serious problems SEO-wise, and I can tell you that I’ve never seen one like it. It was so bad, and so over the top, that I’m adding it as an example to my SEO Bootcamp training (so attendees never implement something like that on their own websites).

Although robots.txt is a simple file that sits at the root of your website, it can still cause serious SEO problems. The scary-as-heck robots.txt file I mentioned earlier left a small business website with only one page indexed and all other pages blocked (including its core services pages). In addition, the file was so riddled with problems that I’m wondering if Googlebot and Bingbot are so offended by the directives that they don’t even crawl the site. Yes, it was that bad.

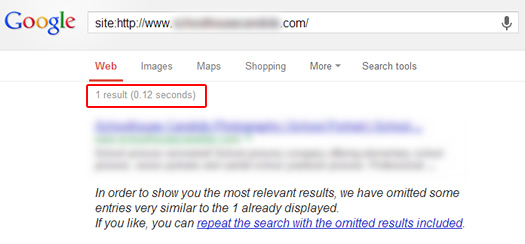

Site command revealing only one page indexed:

The SMB SEO Problem

Many small-to-medium-sized businesses are skeptical of SEO companies. And I don’t blame them. There are some crazy stories out there, from SEO scams, to SEOs who don’t deliver, to companies that outsource all of their SEO work to less-experienced third parties. That leads many SMBs to handle SEO themselves (which is okay if they know what they are doing). But when business owners aren’t familiar with SEO best practices, advanced-level SEO work, etc., they can get themselves in trouble. And that’s exactly what happened in this situation.

5K Lines of SEO Hell

As you can imagine, the site I mentioned earlier is not performing well in search. The business owner didn’t know where to begin with SEO and was asking for my assistance (to set a strong foundation that they could build upon). That’s a smart move, and I picked up on the robots.txt issue within five minutes of our call. I ran a quick site: command in Google to see how many pages were indexed, and only one page showed up. Then I checked the site’s robots.txt file and saw the problems. And they were really big problems. Like 5K+ lines of problems. That’s right, over 5K lines of directives were included in this small business website’s robots.txt file.

Keep in mind that most small businesses might have five or six lines of directives (at most). Actually, some have just two or three lines. Even the most complex websites I’ve worked on had fewer than 100 directives. So seeing an SMB website with 5K+ lines shocked me, to say the least.

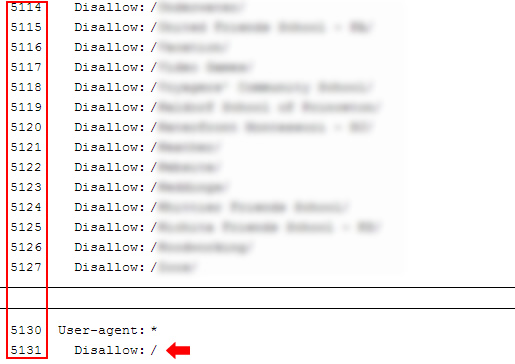

Excessive and redundant directives in a robots.txt file:

Yes, the SMB Owner Was Shocked Too

The business owner was not happy to hear what was going on, but still had no idea what I was talking about. I sent them a link to their robots.txt file so they could see what I was referring to. I also explained that their web designer or developer should fix it immediately. As of now, the file is still there. Again, this is one of the core problems when small businesses handle their own SEO: serious problems like this can linger, sometimes for a long time.

What’s Wrong with the File?

So, if you’re familiar with SEO and robots.txt files, you are probably wondering what exactly was included in this file, and why a small business would need 5K+ lines of directives. Well, they obviously don’t need 5K+ lines, and the file was most likely generated by either the CMS they are using or their hosting provider. It’s hard to tell, since I wasn’t involved when the site was developed.

The file contains a boatload of disallow directives for almost every single directory on the site. Those directives were replicated for a number of specific bots as well. Then it ends with a global disallow directive (blocking all engines). So the file goes to great lengths to disallow every bot from hundreds of directories, but then issues a “disallow all” (which would cover every bot anyway).

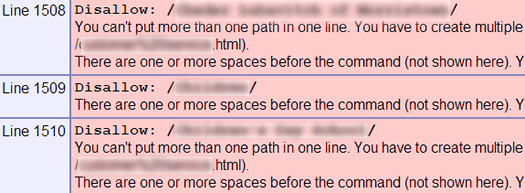

Errors in a robots.txt file:

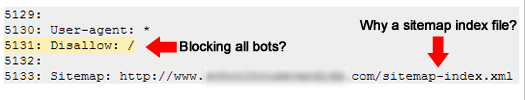
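To see why a file like this is so hard to reason about, here is a minimal sketch using Python’s standard-library `urllib.robotparser`. The file contents are a hypothetical, drastically shortened stand-in (not the client’s actual file), and `www.example.com` and the directory names are placeholders. It repeats the same directory rules for one named bot and for all bots, then adds a catch-all `Disallow: /`. One thing worth knowing: a crawler that has its own `User-agent` group follows that group instead of the `*` group, so copies like these can produce results that are hard to predict by eye. Also note that this parser applies the first matching rule in file order, while Google uses the most specific match, so treat it as a quick sanity check rather than an exact model of any search engine.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical, heavily shortened stand-in for the file described above:
# directory rules copied for a named bot and for all bots, then "Disallow: /".
MESSY_ROBOTS_TXT = """\
User-agent: Googlebot
Disallow: /services/
Disallow: /about/

User-agent: *
Disallow: /services/
Disallow: /about/
Disallow: /
"""


def build_parser(robots_txt: str) -> RobotFileParser:
    """Parse robots.txt text directly, without fetching anything over the network."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser


parser = build_parser(MESSY_ROBOTS_TXT)
for agent in ("Googlebot", "Bingbot"):
    for path in ("/", "/services/", "/contact/"):
        allowed = parser.can_fetch(agent, "https://www.example.com" + path)
        print(f"{agent:<10} {path:<11} {'allowed' if allowed else 'blocked'}")
```

With this mock, Bingbot has no group of its own, falls back to the `*` group, and is blocked everywhere; in that group the directory rules are pure redundancy because `Disallow: /` already covers them. Googlebot, on the other hand, follows only its own group, so it is blocked from `/services/` but not from `/` or `/contact/`.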

Sitemap Index File Problems

But it gets worse. The final line is a sitemap directive! Yes, the file blocks everything, and then tries to feed the engines an XML sitemap that should contain all URLs on the site. But the site is actually using a sitemap index file, which is typically used to list multiple sitemap files (if you need more than one). Remember, this is a small business website, so it really shouldn’t need more than one XML sitemap. When I checked the sitemap index file, it contained only one XML sitemap file (which makes no sense)! If you only have one sitemap file, then why use a sitemap index file? And that one XML sitemap only contains one URL! Again, this underscores the point that the site has created an overly complex setup for something that should be simple (for most small business websites).

Wrong use of a sitemap index file:
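If you want to check this on your own site, a short script can list what a sitemap index actually points to. The sketch below uses Python’s `xml.etree.ElementTree` and the namespace defined by the sitemaps.org protocol. The XML snippets are hypothetical examples that mirror the situation described above (an index with a single child sitemap that lists a single URL), not the client’s real files, and the URLs are placeholders.

```python
import xml.etree.ElementTree as ET

# Namespace used by the sitemaps.org protocol for both index files and sitemaps.
NS = {"sm": "http://www.sitemaps.org/schemas/sitemap/0.9"}

# Hypothetical sitemap index that points to exactly one child sitemap
# (XML declarations omitted for brevity).
SITEMAP_INDEX_XML = """<sitemapindex xmlns="http://www.sitemaps.org/schemas/sitemap/0.9">
  <sitemap><loc>https://www.example.com/sitemap-1.xml</loc></sitemap>
</sitemapindex>"""

# Hypothetical child sitemap that lists exactly one URL.
SITEMAP_XML = """<urlset xmlns="http://www.sitemaps.org/schemas/sitemap/0.9">
  <url><loc>https://www.example.com/</loc></url>
</urlset>"""


def locs(xml_text: str, entry_tag: str) -> list[str]:
    """Return the <loc> values under each <entry_tag> element ('sitemap' or 'url')."""
    root = ET.fromstring(xml_text)
    return [loc.text.strip() for loc in root.findall(f"sm:{entry_tag}/sm:loc", NS)]


children = locs(SITEMAP_INDEX_XML, "sitemap")
pages = locs(SITEMAP_XML, "url")
print(f"Index lists {len(children)} sitemap(s); the sitemap lists {len(pages)} URL(s).")
if len(children) == 1:
    print("Only one child sitemap: pointing robots.txt at it directly would be simpler.")
```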

So let me sum this up for you. The robots.txt file:

- Blocks all search engines from crawling all content on the site.

- Is overly complex by blocking each directory for each bot under the sun.

- Contains malformed directives (close to a thousand of them).

- Provides autodiscovery for a sitemap index file that contains only one XML sitemap, with only one URL listed! And every URL on the site is blocked by robots.txt anyway!
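As a rough illustration of what "malformed" can mean, here is a hypothetical Python lint sketch. It is not a full RFC 9309 validator: the set of recognized fields (`KNOWN_FIELDS`) and the checks (missing colon, unknown field name, an `Allow`/`Disallow` rule that appears before any `User-agent` line or whose path doesn’t start with `/` or `*`) are assumptions about what typically goes wrong, and the `SAMPLE` file is made up for the example.

```python
# Hypothetical set of fields this sketch treats as valid; real crawlers differ.
KNOWN_FIELDS = {"user-agent", "allow", "disallow", "sitemap", "crawl-delay"}


def find_suspect_lines(robots_txt: str) -> list[tuple[int, str, str]]:
    """Return (line number, original line, reason) for lines that look malformed."""
    problems = []
    seen_user_agent = False
    for lineno, raw in enumerate(robots_txt.splitlines(), start=1):
        line = raw.split("#", 1)[0].strip()  # drop comments and surrounding whitespace
        if not line:
            continue
        if ":" not in line:
            problems.append((lineno, raw, "missing ':' separator"))
            continue
        field, value = (part.strip() for part in line.split(":", 1))
        field = field.lower()
        if field not in KNOWN_FIELDS:
            problems.append((lineno, raw, f"unknown field {field!r}"))
        elif field == "user-agent":
            seen_user_agent = True
        elif field in ("allow", "disallow"):
            if not seen_user_agent:
                problems.append((lineno, raw, "rule appears before any User-agent line"))
            elif value and not value.startswith(("/", "*")):
                problems.append((lineno, raw, "path should start with '/' or '*'"))
    return problems


SAMPLE = """\
Disallow: /tmp/
User-agent: *
Disalow: /private/
Disallow /drafts/
Disallow: services/
Sitemap: https://www.example.com/sitemap.xml
"""

for lineno, raw, reason in find_suspect_lines(SAMPLE):
    print(f"line {lineno}: {raw!r} -> {reason}")
```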

What To Do – Get the basics down, then scale.

Based on the audits I’ve performed and the businesses I’ve helped, here is what I think small businesses should do with their robots.txt files:

- Keep it simple. Don’t just take it from me; listen to Google. Only add directives when absolutely needed. If you are unsure what a directive will do, don’t add it.

- Test your robots.txt file. You can use Google Webmaster Tools to test your robots.txt file to ensure it blocks what you need it to block (and that it doesn’t block what you want crawled). You can also use some online tools to test your robots.txt file. You can read another one of my posts to learn about how one of those tools saved a client’s robots.txt file.

- Add autodiscovery (a Sitemap line in robots.txt) to make sure your clean XML sitemap can be found automatically by the engines. And only use a sitemap index file if you are using more than one XML sitemap.

- And if you’re a small business owner and have no idea what I’m talking about in the previous bullets, have an SEO audit completed. It is one of the most powerful deliverables in all of SEO and can definitely help you get things in order.
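Here is a minimal sketch of the "keep it simple, then test it" advice, again using Python’s `urllib.robotparser`. The robots.txt contents, the `/admin/` directory, the page paths, and `www.example.com` are hypothetical placeholders; swap in your own. It checks that the pages you care about are crawlable, that the one area you meant to block is blocked, and that a `Sitemap` line is present for autodiscovery. `site_maps()` requires Python 3.8 or newer, and this parser’s matching rules are simpler than Google’s, so treat it as a quick sanity check alongside the search engines’ own tools.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical small-business robots.txt: one blocked area plus sitemap autodiscovery.
SIMPLE_ROBOTS_TXT = """\
User-agent: *
Disallow: /admin/

Sitemap: https://www.example.com/sitemap.xml
"""


def check_robots(robots_txt, must_crawl, must_block, agent="Googlebot",
                 base="https://www.example.com"):
    """Return (wrongly blocked paths, wrongly allowed paths, declared sitemaps)."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    wrongly_blocked = [p for p in must_crawl if not parser.can_fetch(agent, base + p)]
    wrongly_allowed = [p for p in must_block if parser.can_fetch(agent, base + p)]
    sitemaps = parser.site_maps() or []  # site_maps() returns None when no Sitemap lines exist
    return wrongly_blocked, wrongly_allowed, sitemaps


blocked, allowed, sitemaps = check_robots(
    SIMPLE_ROBOTS_TXT,
    must_crawl=["/", "/services/", "/contact/"],
    must_block=["/admin/"],
)
print("Wrongly blocked:", blocked)
print("Wrongly allowed:", allowed)
print("Sitemaps found:", sitemaps)
```

Running the same check against a file that contains a blanket `Disallow: /` for all bots would immediately report every core page as wrongly blocked.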

Summary – Avoid 5K Line Robots.txt Files

If there’s one thing you should take away from this post, it’s that the basics are really important SEO-wise. Unfortunately, the small business with the 5K+ line robots.txt file could write blog posts until the cows come home and it wouldn’t matter. They could get a thousand likes and tweets, and it would only help them in the short term. That’s because they are blocking every file on their website from being crawled. Instead, you should develop a solid SEO foundation and then build upon it.

Nobody needs a 5K line robots.txt file, not even the most complex sites on the web. Remember, keep it simple and then scale.

GG

But how were they showing up for one result on Google if they were blocked by robots.txt?

The robots.txt file was such a mess that it doesn’t shock me the engines were confused. In theory, that page should not have been crawled. That said, there were thousands of lines of directives, and the bots could absolutely get confused about what to crawl and what not to crawl. Also keep in mind that robots.txt controls crawling, not indexing, so a blocked URL can still end up in the index if other pages link to it. Either way, only one page was indexed, so the site was still in grave condition SEO-wise.

Is it possible to disallow, for example, the Ahrefs bots?

Yes, you can follow the instructions here -> https://ahrefs.com/robot/
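For reference, the usual pattern is a group addressed to Ahrefs’ crawler, which identifies itself with the `AhrefsBot` token (check the Ahrefs page above for the current details). The sketch below parses a hypothetical robots.txt with Python’s `urllib.robotparser` to confirm that it blocks AhrefsBot while leaving other crawlers, such as Googlebot, unaffected; `www.example.com` is a placeholder.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt: block AhrefsBot everywhere, allow everyone else.
# (An empty "Disallow:" means nothing is disallowed for that group.)
ROBOTS_TXT = """\
User-agent: AhrefsBot
Disallow: /

User-agent: *
Disallow:
"""

parser = RobotFileParser()
parser.parse(ROBOTS_TXT.splitlines())

for agent in ("AhrefsBot", "Googlebot"):
    allowed = parser.can_fetch(agent, "https://www.example.com/services/")
    print(f"{agent}: {'allowed' if allowed else 'blocked'}")
```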

My website URL is blocked, as shown in the screenshot. Could you help me?