Last month John Shehata from NewzDash published a blog post documenting a study covering the impact of syndication on news publishers. For example, when a publisher syndicates articles to syndication partners, which site ranks, and what does that look like across Google surfaces (Search, Google News, etc.)?

The results confirmed what many have seen in the SERPs over time while working at, or helping, news publishers. Google can often rank the syndication partner versus the original source, even when the syndicated content on partner sites is correctly canonicalized to the original source.

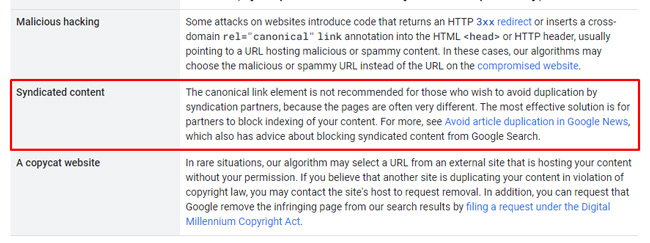

And as a reminder, Google updated its documentation about canonicalization in May of 2023 and revised its recommendation for syndicated content. Google now fully recommends that syndication partners noindex news publisher content if the publisher doesn’t want to compete with that syndication partner in Search. Google explained that rel canonical isn’t sufficient since the original page and the page located on the syndication partner website can often be different (when you take the entire page into account, including the boilerplate, other supplementary content, etc.). Therefore, Google’s systems can presumably have a hard time determining that it’s the same article being syndicated and then rank the wrong version, or even both. More on that situation soon when I cover the case study…

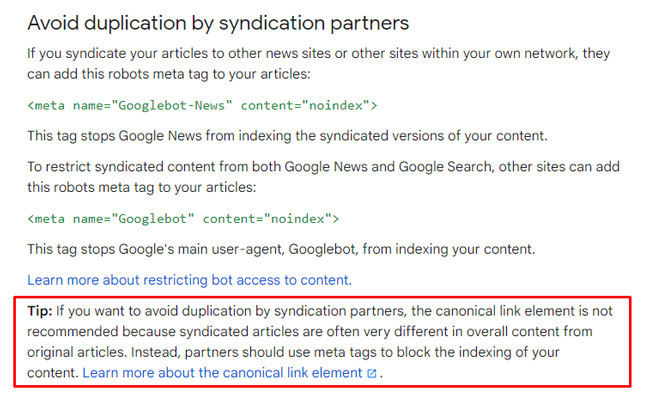

And here is information from Google’s documentation for news publishers about avoiding duplication problems in Google News with syndicated content:

Previously, Google said you could use rel canonical pointing to the original source, while also providing a link back to the original source, which should have helped its systems determine the canonical url (and original source). And to be fair to Google, they did also explain in the past that you could noindex the content to avoid problems. But as anyone working with news publishers understands, asking syndication partners to noindex that content is a tough request to get approved. I won’t bog down this post by covering that topic, but most syndication partners actually want to rank for the content (so they are unlikely to noindex the syndicated content they are consuming).

Your conversations with them might look like this:

The Case Study: A clear example of news publisher syndication problems.

OK, so we know Google recommends noindexing content on the syndication partner website rather than relying on rel canonical as a solution. But what does all of this actually look like in the SERPs? How bad is the situation when the content isn’t noindexed? And does it impact all Google surfaces like Search, Top Stories, the News tab in Search, Google News, and Discover?

Well, I decided to dig in for a client that heavily syndicates content to partner websites. They have done so for a long time, but never really understood the true impact. After I sent along the study from NewzDash, we had a call with several people from across the organization. It was clear everyone wanted to know how much visibility they were losing by syndicating content, where they were losing that visibility, whether that was also impacting indexing of content, and more. So as a first step, I decided to craft a system to start capturing data that could help identify potential syndication problems. I’ll cover that next.

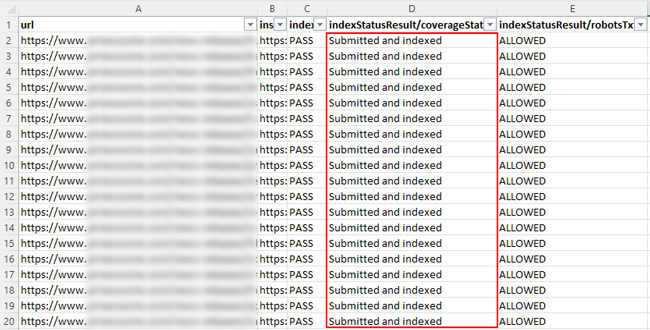

The Test: Checking 3K recently published urls that are also being syndicated to partners.

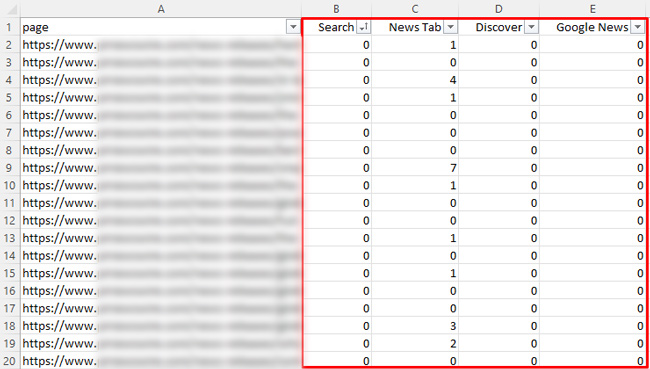

I took a step back and began mapping out a system for tracking the syndication situation the best I could based on Google’s APIs (including the Search Console API and the URL Inspection API). My goal was to understand how Google was handling the latest three thousand urls published from a visibility standpoint, indexing standpoint, and performance standpoint across Google surfaces (Search, Top Stories, the News tab in Search, and Discover).

Here is the system I mapped out:

- Export the latest three thousand urls based on the Google News sitemap.

- Run the urls through the URL Inspection API to check indexing in bulk (to identify any type of indexing issue, like Google choosing the syndication partner as the canonical versus the original source). If the pages weren’t indexed, then they clearly wouldn’t rank…

- Then check performance data for each URL in bulk via the Search Console API. That included data for Search, the News tab in Search, Google News, and Discover.

- Based on that data, identify indexed urls with no performance data (or very little) as candidates for syndication problems. If the urls had no impressions or clicks, then maybe a syndication partner was ranking versus my client.

- Spot-check the SERPs to see how Google was handling the urls from a ranking perspective across surfaces.
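To make the first step of that system more concrete, here is a minimal sketch of pulling the most recent urls out of a Google News sitemap. This is not the exact script used for the case study. The function name `latest_urls_from_news_sitemap`, the sample sitemap, and the example.com urls are all made up for illustration, and the sketch assumes the sitemap uses the standard sitemap and Google News namespaces with ISO 8601 publication dates.

```python
import xml.etree.ElementTree as ET
from datetime import datetime, timezone

# Standard sitemap namespace plus the Google News sitemap extension namespace.
NS = {
    "sm": "http://www.sitemaps.org/schemas/sitemap/0.9",
    "news": "http://www.google.com/schemas/sitemap-news/0.9",
}


def _parse_date(value):
    """Parse an ISO 8601 date; treat values without an offset as UTC (an assumption)."""
    parsed = datetime.fromisoformat(value.replace("Z", "+00:00"))
    return parsed if parsed.tzinfo else parsed.replace(tzinfo=timezone.utc)


def latest_urls_from_news_sitemap(xml_text, limit=3000):
    """Return up to `limit` urls from a news sitemap, newest first."""
    root = ET.fromstring(xml_text)
    entries = []
    for url_el in root.findall("sm:url", NS):
        loc = url_el.findtext("sm:loc", default="", namespaces=NS).strip()
        published = url_el.findtext(
            "news:news/news:publication_date", default="", namespaces=NS
        ).strip()
        if loc and published:
            entries.append((_parse_date(published), loc))
    entries.sort(key=lambda entry: entry[0], reverse=True)
    return [loc for _, loc in entries[:limit]]


# Hypothetical sitemap content, for illustration only.
SAMPLE_SITEMAP = """
<urlset xmlns="http://www.sitemaps.org/schemas/sitemap/0.9"
        xmlns:news="http://www.google.com/schemas/sitemap-news/0.9">
  <url>
    <loc>https://www.example.com/news/story-a</loc>
    <news:news><news:publication_date>2023-11-01T08:00:00+00:00</news:publication_date></news:news>
  </url>
  <url>
    <loc>https://www.example.com/news/story-b</loc>
    <news:news><news:publication_date>2023-11-03T09:30:00Z</news:publication_date></news:news>
  </url>
  <url>
    <loc>https://www.example.com/news/story-c</loc>
    <news:news><news:publication_date>2023-11-02</news:publication_date></news:news>
  </url>
</urlset>
""".strip()

if __name__ == "__main__":
    for url in latest_urls_from_news_sitemap(SAMPLE_SITEMAP, limit=2):
        print(url)
```

The resulting list is what you would then feed into the URL Inspection API and Search Console API steps. In practice you would fetch the sitemap over HTTP and possibly walk a sitemap index first, which this sketch leaves out.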

No Rhyme or Reason: What I found disturbed me even more than I thought it would.

First was the indexing check across three thousand urls, which went very well. Almost all of the urls were indexed by Google. And there were no examples of Google incorrectly choosing syndication partners as the canonical. That was great and surprised me a bit. I thought I would see that for at least some of the urls.
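For anyone wanting to run a similar check, here is a rough sketch of how the indexing results could be summarized once you have the URL Inspection API responses saved. It assumes each response has the `inspectionResult.indexStatusResult` object with `verdict`, `coverageState`, and `googleCanonical` fields. The helper name `summarize_inspection` and the sample responses are hypothetical, and comparing hostnames is just one simple way to spot Google choosing a syndication partner as the canonical.

```python
from urllib.parse import urlsplit


def summarize_inspection(inspected_url, response):
    """Summarize one saved URL Inspection API response for a url.

    Assumes a verdict of "PASS" means the url is indexed, and flags cases
    where the Google-selected canonical lives on a different hostname.
    """
    status = response.get("inspectionResult", {}).get("indexStatusResult", {})
    google_canonical = status.get("googleCanonical")
    own_host = urlsplit(inspected_url).hostname
    canonical_host = urlsplit(google_canonical).hostname if google_canonical else None
    return {
        "url": inspected_url,
        "indexed": status.get("verdict") == "PASS",
        "coverage_state": status.get("coverageState"),
        "google_canonical": google_canonical,
        # True when Google picked a canonical on another site (e.g. a partner).
        "canonical_on_other_host": canonical_host is not None
        and canonical_host != own_host,
    }


# Hypothetical responses, for illustration only (not data from the case study).
SAMPLE_RESPONSES = {
    "https://www.example.com/news/story-a": {
        "inspectionResult": {
            "indexStatusResult": {
                "verdict": "PASS",
                "coverageState": "Submitted and indexed",
                "googleCanonical": "https://www.example.com/news/story-a",
            }
        }
    },
    "https://www.example.com/news/story-b": {
        "inspectionResult": {
            "indexStatusResult": {
                "verdict": "NEUTRAL",
                "coverageState": "Duplicate, Google chose different canonical than user",
                "googleCanonical": "https://partner.example.net/story-b",
            }
        }
    },
}

if __name__ == "__main__":
    for url, response in SAMPLE_RESPONSES.items():
        print(summarize_inspection(url, response))
```

Note that a strict hostname comparison would also flag www versus non-www differences on your own site, so you may want to normalize hostnames before comparing.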

Next, I exported performance data in bulk for the latest three thousand urls. Once exported, I was able to isolate urls with very little, or no, performance data across surfaces. These were great candidates for potential syndication problems. In other words, if the content yielded no impressions or clicks, then maybe a syndication partner was ranking versus my client.
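Here is a minimal sketch of that isolation step, assuming you already have the indexed urls and the exported Search Console API rows grouped by surface. The rows follow the shape the Search Analytics query returns when you use the page dimension (`keys`, `clicks`, `impressions`). The surface labels, the function name `flag_low_visibility`, the threshold, and the sample numbers are all assumptions for illustration and not the client's data.

```python
from collections import defaultdict


def flag_low_visibility(indexed_urls, rows_by_surface, max_impressions=0):
    """Return indexed urls whose total impressions across surfaces are at or
    below `max_impressions`, as candidates for manual SERP spot-checks."""
    totals = defaultdict(lambda: {"clicks": 0, "impressions": 0})
    for rows in rows_by_surface.values():
        for row in rows:
            page = row["keys"][0]  # page dimension is assumed to come first
            totals[page]["clicks"] += row.get("clicks", 0)
            totals[page]["impressions"] += row.get("impressions", 0)

    flagged = []
    for url in indexed_urls:
        # Urls missing from the export had no recorded performance data.
        stats = totals.get(url, {"clicks": 0, "impressions": 0})
        if stats["impressions"] <= max_impressions:
            flagged.append({"url": url, **stats})
    return flagged


# Hypothetical export, keyed by surface label, for illustration only.
SAMPLE_INDEXED = [
    "https://www.example.com/news/story-a",
    "https://www.example.com/news/story-b",
    "https://www.example.com/news/story-c",
]
SAMPLE_ROWS = {
    "web": [
        {"keys": ["https://www.example.com/news/story-a"], "clicks": 10, "impressions": 120},
    ],
    "news": [
        {"keys": ["https://www.example.com/news/story-b"], "clicks": 0, "impressions": 2},
    ],
    "discover": [
        {"keys": ["https://www.example.com/news/story-a"], "clicks": 4, "impressions": 40},
    ],
}

if __name__ == "__main__":
    for item in flag_low_visibility(SAMPLE_INDEXED, SAMPLE_ROWS, max_impressions=5):
        print(item)
```

A flagged url is only a candidate. Low impressions can have plenty of other causes, which is why the next step is checking the SERPs by hand.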

And then I started spot-checking the SERPs. After checking a number of queries based on the list of urls that were flagged, there was no rhyme or reason why Google was surfacing my client’s urls versus the syndication partners (or vice versa). And to complicate things even more, sometimes both urls ranked in Top Stories, Search, etc. And then there were times one ranked in Top Stories while the other ranked in Search. And the same went for the News tab in Search and Google News. It was a mess…

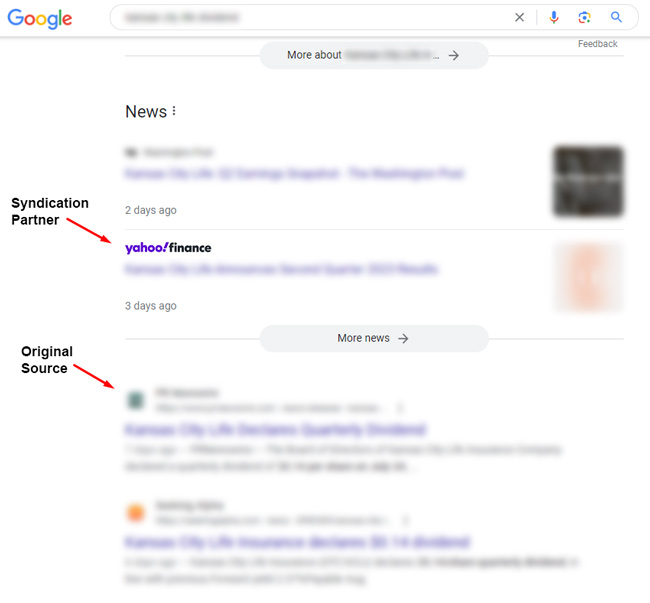

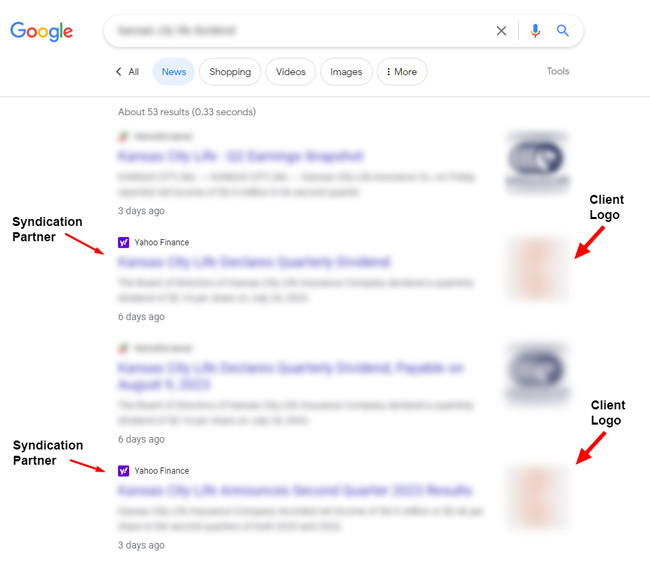

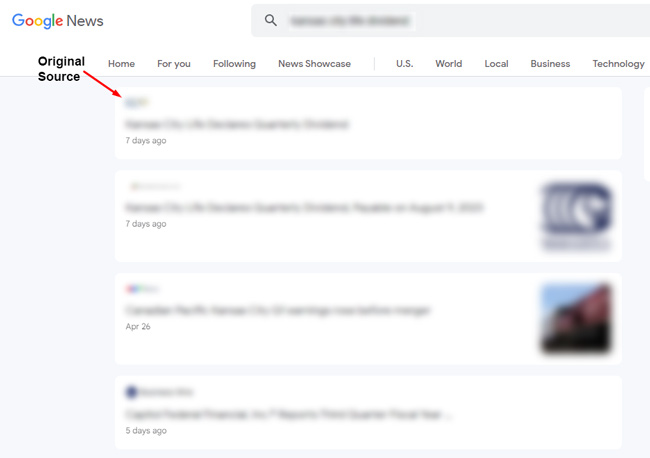

I’ll provide a quick example below so you can see the syndication mess. Note, I had to blur the SERPs heavily in the following screenshots, but I wanted to provide an example of what I found. Again, there was no rhyme or reason why this was happening. Based on this example, and what I saw across other examples I checked, I can understand why Google is saying to noindex the urls downstream on syndication partners. If not, any of this could happen.

First, here is an example of Yahoo Finance ranking in Top Stories while the original ranks in Search right below it:

Next, Yahoo News ranks twice in the News tab in Search (which is an important surface for my client), while the original source is nowhere to be found. And my client’s logo is shown for the syndicated content. How nice…

And then in Google News, the original source ranks and syndication partners are nowhere to be found:

As you can see, the situation is a mess… and good luck trying to track this on a regular basis. And the lost visibility across thousands of pages per month could really add up… It’s hard to determine the exact number of lost impressions and clicks, but it can be huge for large news publishers.

Discover: The Personalized Black Hole

And regarding Discover, it’s tough to track lost visibility there since the feed is personalized and you can’t possibly see what every other person is seeing in their own feed. But you might find examples in the wild of syndication partners ranking there versus your own content. Below is an example I found recently of Yahoo Finance ranking in Discover for an Insider Monkey article. Note, Insider Monkey is not a client and not the site I’m covering in the case study, but it’s a good example of what can happen in Discover. And if this is happening a lot, the site could be losing a ton of traffic…

Here is Yahoo Finance ranking in Discover:

And here is the original article on Insider Monkey (but it’s in a slideshow format). This example shows how Google can see the pages are different, which can cause problems understanding that they are the same article:

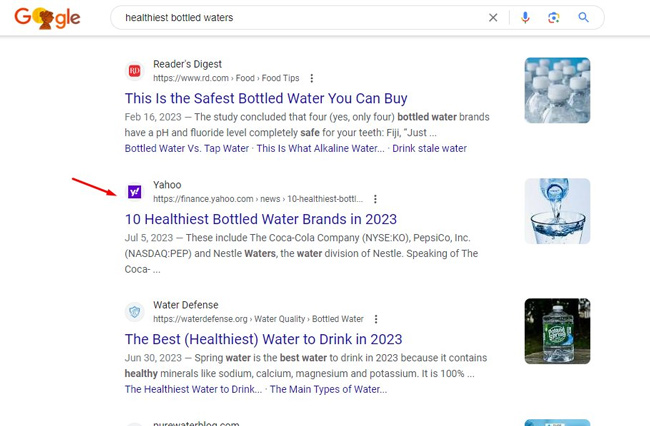

And here is Yahoo Finance ranking #2 for the target keyword in the core SERPs. So the syndication partner is ranking above the original in the search results:

Key points and recommendations for news publishers dealing with syndication problems:

- First, try to understand indexing and visibility problems the best you can. Use an approach like I mapped out to at least get a feel for how bad the problem is. Google’s APIs are your friends here and you can bulk process many urls in a short period of time.

- Weigh the risks and benefits of syndicating content to partners. Is the additional visibility across partners worth losing visibility in Search, Top Stories, the News tab in Search, Google News and Discover? Remember, this could also mean a loss of powerful links… For example, if the syndication partner ranks, and other sites link to those articles, you are losing those links.

- If needed, talk with syndication partners about potentially noindexing the syndicated content. This will probably NOT go well… Again, they often want to rank to get that traffic. But you never know… some might be ok with noindexing the urls.

- Understand Discover is tough to track, so you might be losing more traffic there than you think (and maybe a lot). You might catch some syndication problems there in the wild, but you cannot simply go there and find syndication issues easily (like you can with Search, Top Stories, the News tab, and Google News).

- Tools like Semrush and NewzDash can help fill the gaps from a rank tracking perspective. And NewzDash focuses on news publishers, so that could be a valuable tool in your tracking arsenal. Semrush could help with Search and Top Stories. Again, try to get a solid feel for visibility problems due to syndicating content.

Summary – Syndication problems for news publishers might be worse than you think.

If you are syndicating content, then I recommend trying to get an understanding of what’s going on in the SERPs (and across Google surfaces). And then form a plan of attack for dealing with the situation. That might include keeping things as-is, or it might drive changes to your syndication strategy. But the first step is gaining some visibility of the situation (pun intended). Good luck.

GG