Update: June 5, 2025

Google just updated its documentation for SafeSearch and there are some interesting changes and new information there. The new documentation covers allowing Google to fetch your video content, how to handle age gates, changes to how to group explicit content, and more.

—————

For many site owners and SEOs, SafeSearch can be a confusing topic. It’s not always clear how SafeSearch works, what filtering looks like in action, or how it can impact traffic from Google organic search. That’s especially the case for sites that walk a fine line between providing non-explicit and explicit content (at least according to Google’s algorithms).

I’ve worked on several cases recently where SafeSearch came into play. Site content was being filtered for users with SafeSearch active, which was impacting the visibility of content in the SERPs and subsequent traffic to the site.

But these weren’t porn sites being filtered… far from it, actually. Instead, the sites were publishers that write about a range of topics within their niche. And sometimes those articles include content and images that trip the SafeSearch filter. When that happens, those URLs and images are filtered out of the search results, yielding lower traffic levels from Google.

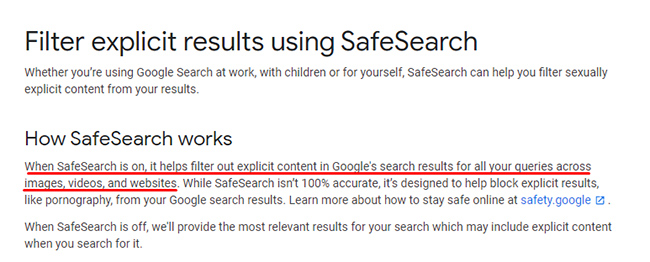

And to clarify, filtering applies to images, videos, and websites, so it’s not just about filtering in the standard organic results. It impacts your media as well.

This is why it’s a smart idea for publishers to become as knowledgeable as possible about Google’s SafeSearch feature, how it works, how to test if you’re being actively filtered, and how to test new content to future-proof your site.

That’s because if you trip the filter, traffic could drop to various degrees depending on how the filter is applied. For example, is SafeSearch just impacting certain areas of the site or the entire site? It depends on your setup, your content, and how far your content crosses the line.

Also, while helping companies deal with SafeSearch filtering, I’ve received many questions about how to test content to see how close it is to tripping the SafeSearch filter. And if you do trip the filter, how do you know you are being filtered? What if you are being filtered incorrectly? Can you reach out to Google to let them know that’s happening?

These are all important questions and I’ll be covering a number of these topics in this post.

Images and Text, Not Just Explicit Photos

A lot of people don’t know this, but Matt Cutts was tasked with creating the first version of SafeSearch. He explained more about this during a recent interview with Barry Schwartz (at 12:08 in the video). In that interview, Matt explained that Google initially used text to understand what should be filtered. Since the first iteration of SafeSearch was in about 2000, Google didn’t have machine learning algorithms to help identify pornographic images. Therefore, it was mostly text-based.

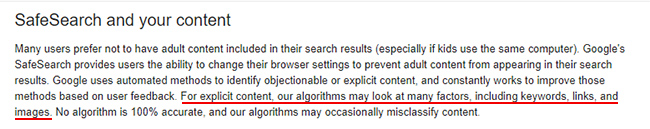

SafeSearch is undoubtedly much more sophisticated now and Google has explained it does use machine learning to detect explicit content that should be filtered. More about that soon, but I wanted to make sure you understood that both text and images are taken into account. Also, there’s a SafeSearch team at Google that is focused on the feature. That’s also important to understand in case you run into trouble, like if your site is incorrectly being filtered.

An older Google help document I surfaced, which is now removed, explains that SafeSearch looks at “keywords, links, and images” to determine what should be filtered. So again, it’s not just images.

Tripping The SafeSearch Filter – What Can Happen?

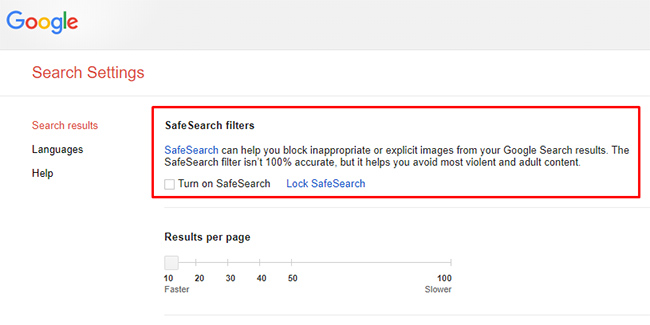

When a site trips the SafeSearch filter, Google will filter some (or all) of your pages for users that have SafeSearch active (again, across images, video, and websites). SafeSearch is an option in your search preferences, so some people turn it on manually. For others, it might be turned on at a higher level, by a school administrator, an IT department, or even a parent managing a child’s computer.

If this happens, then your pages won’t appear like they normally would for certain queries leading to your site. They will be filtered out. Poof, gone. There’s no communication from Google about this, like a message in GSC (at least that I know of). Instead, your pages will just be filtered in the SERPs.

As you can guess, some sites can experience lower traffic levels depending on their target demographic. For example, if your audience includes people that have SafeSearch turned on, then the filter can cause problems traffic-wise. If most users that visit the site don’t have SafeSearch active, then you might not even know you are being filtered. It’s tricky that way.

But let’s face it, “tricky” is a four-letter word in SEO. As a site owner or SEO, you definitely want to know if your content is being filtered by SafeSearch. You don’t want any surprises down the line, like ghostly traffic drops that are hard to figure out. That can be very frustrating. I’ll explain how to check for SafeSearch filtering soon, but first a note about site structure and adult metadata.

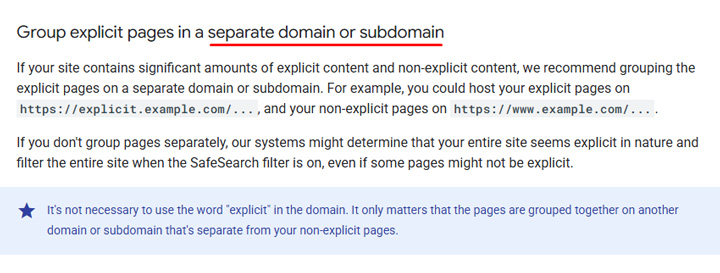

How Site Structure Can Help Google Focus Its SafeSearch Filtering

With the latest update to its SafeSearch documentation, Google now explains that you can help its algorithms understand which sections contain adult content by using a separate domain or subdomain. It previously explained you could place that content in a separate directory, but now says ‘domain’ or ‘subdomain’. That’s a pretty big change for sites containing explicit content.

If you do that, then Google’s algorithms can determine that the entire site shouldn’t be filtered, but just the specific domain or subdomain. That can save you down the line, so I highly recommend trying to organize your content that way if you need to publish adult content for some reason (or content that Google believes is explicit).

Now, you might be wondering what exactly constitutes “adult content”. That’s a tricky one… since it’s determined algorithmically. I’ll cover ways to understand how your content is being viewed by SafeSearch later in this post.

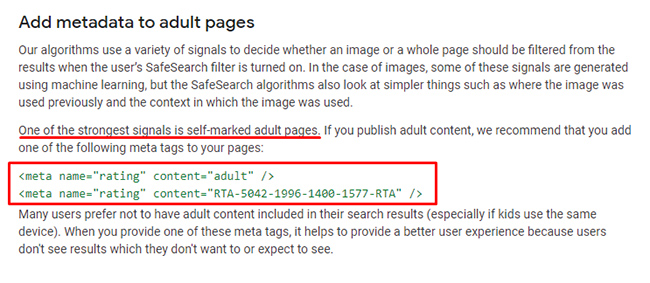

Adult Meta Tags

Google also explains that the best way to let them know that adult content is on your page is to use adult metadata. They say it’s the “strongest signal” you can provide about this. There are two meta tags you can use in your code to accomplish this (you only need to include one): `<meta name="rating" content="adult">` or `<meta name="rating" content="RTA-5042-1996-1400-1577-RTA">`.
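If you want to confirm which templates actually output one of these tags, a quick check can help. Here’s a minimal Python sketch, using only the standard library’s `html.parser`, that reports whether a page’s HTML contains a rating meta tag with either of the two values Google documents (`adult` or the RTA label). The names `RatingMetaParser` and `has_adult_rating` are my own hypothetical helpers, and the code only inspects HTML you pass in; it doesn’t fetch anything.

```python
from html.parser import HTMLParser

# The two rating values Google documents for self-labeling adult pages
# (compared case-insensitively).
ADULT_RATING_VALUES = {"adult", "rta-5042-1996-1400-1577-rta"}


class RatingMetaParser(HTMLParser):
    """Collects the content of every <meta name="rating"> tag in a page."""

    def __init__(self) -> None:
        super().__init__()
        self.ratings: list = []

    def handle_starttag(self, tag: str, attrs: list) -> None:
        # html.parser lowercases tag and attribute names; values keep their case.
        if tag != "meta":
            return
        attributes = {name: (value or "") for name, value in attrs}
        if attributes.get("name", "").strip().lower() == "rating":
            self.ratings.append(attributes.get("content", "").strip().lower())


def has_adult_rating(html: str) -> bool:
    """Return True if the HTML self-labels as adult via a rating meta tag."""
    parser = RatingMetaParser()
    parser.feed(html)
    parser.close()
    return any(value in ADULT_RATING_VALUES for value in parser.ratings)


if __name__ == "__main__":
    page = '<html><head><meta name="rating" content="adult"></head></html>'
    print(has_adult_rating(page))  # prints True
```

You could run this against the rendered HTML of a few representative URLs per section to make sure the tag appears where you intend it to (and nowhere else).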

How To Check For SafeSearch Filtering – Tripping The Filter

Below, I’ll cover several ways to check whether your content is actively being filtered by SafeSearch. These are after-the-fact checks, meaning you are already being filtered. After covering these methods, I’ll explain how to conduct some preventative work to make sure you stay out of the danger zone, i.e., testing content you want to publish to see how Google’s SafeSearch views it.

Before we begin, I find it’s easier to check for SafeSearch filtering with two browsers open. One will have SafeSearch on and the other off. And if you have dual monitors, it’s great to have one browser up on each display.

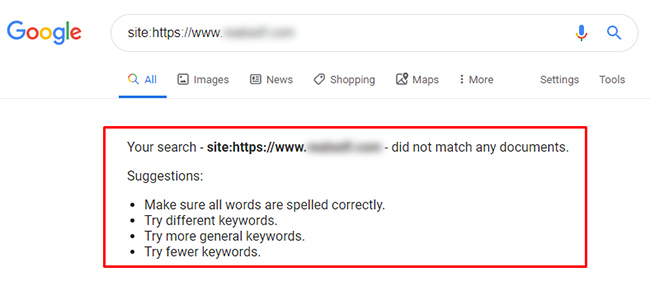

1. Perform a site query with SafeSearch active

First, fire up the browser with SafeSearch on and run some site: queries (including at the directory level). In extreme cases, you will see 0 results returned when your entire site is flagged and filtered.

For sites not completely filtered, I’ve found site queries to be a little sketchy. Sometimes they show a site being partially filtered and sometimes they don’t… So use this as a quick way to check for filtering, but don’t 100% rely on it. Again, for sites being completely filtered by SafeSearch, this can be helpful. For partial filtering, this process can be unreliable.

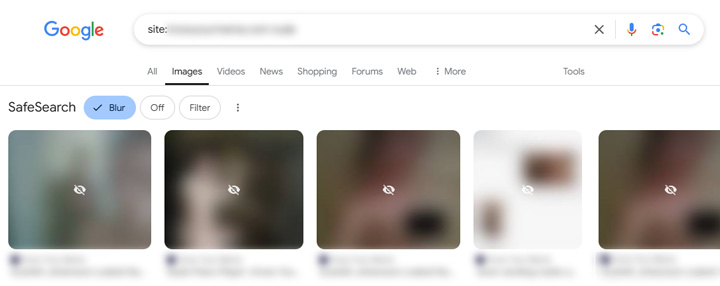

1a. Site queries in *image search* and look for blurring:

Since Google began blurring SafeSearch-flagged images by default in 2023, you can hop over to the Images tab (or Google Images) and perform site queries there. I recommend adding keywords to the site query to see if any images come back blurred. Then you can follow those images back to their webpages and start to determine whether those pages are being filtered by SafeSearch.
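If you run these checks often, it can help to generate the paired URLs rather than toggling settings each time. Below is a small Python sketch that builds matching Google search URLs for a site: query, one with SafeSearch on and one with it off, optionally for the Images tab. It assumes Google still honors the `safe=active`, `safe=off`, and `tbm=isch` URL parameters (an assumption on my part; Google could change them at any time), and a SafeSearch setting locked by your account, school, or network may override them, so treat the two-browser setup as the ground truth. The function name `safesearch_pair` is hypothetical.

```python
from urllib.parse import urlencode

SEARCH_URL = "https://www.google.com/search"


def safesearch_pair(site: str, keywords: str = "", images: bool = False) -> dict:
    """Build matching Google search URLs with SafeSearch on and off.

    site: a domain or domain plus directory, e.g. "example.com/directory/".
    keywords: optional terms appended to the site: query.
    images: if True, target the Images tab.
    The safe= and tbm= parameters are assumptions about Google's URL
    conventions and may stop working if Google changes them.
    """
    query = f"site:{site} {keywords}".strip()
    urls = {}
    for label, safe_value in (("on", "active"), ("off", "off")):
        params = {"q": query, "safe": safe_value}
        if images:
            params["tbm"] = "isch"
        urls[label] = f"{SEARCH_URL}?{urlencode(params)}"
    return urls


if __name__ == "__main__":
    for label, url in safesearch_pair("example.com/directory/", "keyword", images=True).items():
        print(f"SafeSearch {label}: {url}")
```

Open the "on" URL in one browser and the "off" URL in the other, then compare what comes back (and what comes back blurred).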

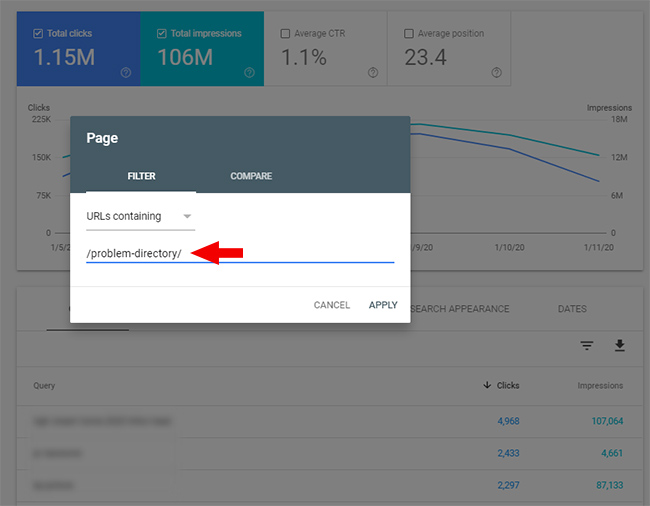

2. Identify at-risk queries and perform actual searches

The best way to check for SafeSearch filtering is to start searching using the two-browser setup I mentioned earlier. First, go to GSC and export a bunch of queries leading to your site. Find ones that would be considered risky from a SafeSearch perspective (either based on the query itself or the content you have at the destination landing pages). And then start searching.
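To speed up the triage step, you can filter a GSC query export against a list of terms you consider risky for your niche. Here’s a minimal Python sketch. The term list is purely hypothetical, and the `Top queries` column name is an assumption about the export’s header, so adjust it if your file differs. It uses simple case-insensitive substring matching, so expect some noise that you’ll need to review by hand.

```python
import csv
import io

# Hypothetical starter list; replace it with terms that are risky in your niche.
RISKY_TERMS = ["nude", "lingerie", "bikini", "sexy"]


def risky_queries(csv_text: str, terms: list, column: str = "Top queries") -> list:
    """Return queries from a GSC Performance export that contain any risky term.

    column is the name of the query column; "Top queries" is an assumption
    about the export's header, so pass a different name if yours differs.
    Matching is a case-insensitive substring check, so expect some noise.
    """
    lowered_terms = [term.lower() for term in terms]
    hits = []
    for row in csv.DictReader(io.StringIO(csv_text)):
        query = (row.get(column) or "").strip()
        if query and any(term in query.lower() for term in lowered_terms):
            hits.append(query)
    return hits


if __name__ == "__main__":
    sample = (
        "Top queries,Clicks,Impressions,CTR,Position\n"
        "best hiking boots,10,100,10%,3.2\n"
        "bikini hiking trip,5,80,6.25%,4.1\n"
    )
    print(risky_queries(sample, RISKY_TERMS))
```

For a real export, read the file with `encoding="utf-8-sig"` so a leading byte-order mark (if present) doesn’t end up glued to the first column name.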

Enter a query in the browser with SafeSearch off, check your rankings, and then perform the same search in the browser with SafeSearch on. You might be surprised by what you find. You might see major gaps in the SERPs where your pages used to rank. If that’s the case, yep, you’ve tripped the filter.

I just did this for a client and found many queries that yielded SafeSearch filtering for them. And the filtering was focused on one specific directory (which made complete sense considering how they have the site structured).

Remember what I said earlier about the importance of URL structure for SafeSearch? Well, they luckily house all risky content in one directory. That’s the good news. The bad news is that there’s a lot of non-explicit content there as well… So, certain pages are being filtered by SafeSearch, but at least it’s not the entire site being filtered.

Repeat for more queries and identify which queries and landing pages are being filtered. By analyzing more of this data, you can start to get a feel for what crosses the line content-wise. That’s a good segue to the next section of this post… how to catch problematic content before it gets filtered.
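Once you’ve recorded which of your URLs show up for each query in both browsers, a few lines of Python can list the pages that disappear with SafeSearch on and group them by top-level directory. That’s how a pattern like my client’s single-directory filtering becomes visible. This is a hypothetical helper that works on URLs you copy down by hand; it doesn’t fetch or scrape any results.

```python
from collections import Counter
from urllib.parse import urlparse


def is_own_url(url: str, domain: str) -> bool:
    """True if the URL's host is the domain or one of its subdomains."""
    host = urlparse(url).hostname or ""
    return host == domain or host.endswith("." + domain)


def filtered_pages(results_off: list, results_on: list, domain: str) -> list:
    """Your URLs that appear with SafeSearch off but are missing with it on."""
    seen_with_filter = set(results_on)
    return [url for url in results_off
            if is_own_url(url, domain) and url not in seen_with_filter]


def top_directory(url: str) -> str:
    """First path segment as a directory, or "/" for root-level pages."""
    path = urlparse(url).path
    segments = [s for s in path.split("/") if s]
    # A single segment counts as a directory only if the path ends with "/".
    if len(segments) > 1 or (segments and path.endswith("/")):
        return f"/{segments[0]}/"
    return "/"


def filtered_by_directory(observations: list, domain: str) -> Counter:
    """Count unique filtered pages per top-level directory.

    observations: one (results_off, results_on) pair of URL lists per query,
    recorded by hand from the two browsers.
    """
    filtered = set()
    for results_off, results_on in observations:
        filtered.update(filtered_pages(results_off, results_on, domain))
    return Counter(top_directory(url) for url in filtered)
```

If most of the count lands in one directory (or subdomain), that’s a strong hint about where the filter is being applied and where to focus your content review.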

How far is too far?… Enter Google’s Vision API

Up to this point, I’ve covered how to check if you’re actively being filtered, but what about performing some preventative work BEFORE you get filtered? This is a natural question for any company that has gotten caught in the filter. They normally want to future-proof their site and they don’t want Google categorizing the site as adult (or a site that houses some adult content).

Well, Google has you partially covered, pun intended. :) Enter Google’s Vision API, which is pretty amazing. It uses machine learning to automatically identify and categorize images. You can read more about the Vision API on the site, but I’ll stick to how we can use it based on this post.

One of the features of the Vision API is that it can be used to detect explicit content via SafeSearch. It can identify adult content, violence, and more. It also uses OCR to detect text in the image, so it’s not purely about the visual content of the photo.

Also, if you have development resources, then you can tap into the API at scale to check your images using SafeSearch. If you don’t have development resources, and you’re not checking a lot of images on a regular basis, then you can test specific images right on the site. There’s an option to try the API for single images.
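For the at-scale route, here’s a minimal Python sketch that calls the Vision API’s REST endpoint (`images:annotate`) with the `SAFE_SEARCH_DETECTION` feature and returns the five SafeSearch likelihoods. It assumes you have a Google Cloud project with the Vision API enabled and an API key; key-based auth is used here only for brevity, and the official client libraries are an alternative. The helper names (`build_request`, `parse_response`, `safe_search`) are my own.

```python
import base64
import json
import urllib.request

VISION_ENDPOINT = "https://vision.googleapis.com/v1/images:annotate"
CATEGORIES = ("adult", "spoof", "medical", "violence", "racy")


def build_request(image_bytes: bytes) -> dict:
    """Request body asking only for SafeSearch detection on one image."""
    return {
        "requests": [{
            "image": {"content": base64.b64encode(image_bytes).decode("ascii")},
            "features": [{"type": "SAFE_SEARCH_DETECTION"}],
        }]
    }


def parse_response(payload: dict) -> dict:
    """Extract the five SafeSearch likelihoods (e.g. "VERY_LIKELY") from a response."""
    first = payload["responses"][0]
    if "error" in first:
        raise RuntimeError(first["error"].get("message", "Vision API error"))
    annotation = first.get("safeSearchAnnotation", {})
    return {category: annotation.get(category, "UNKNOWN") for category in CATEGORIES}


def safe_search(image_path: str, api_key: str) -> dict:
    """Send one local image to the Vision API and return its SafeSearch likelihoods."""
    with open(image_path, "rb") as image_file:
        body = json.dumps(build_request(image_file.read())).encode("utf-8")
    request = urllib.request.Request(
        f"{VISION_ENDPOINT}?key={api_key}",
        data=body,
        headers={"Content-Type": "application/json"},
        method="POST",
    )
    with urllib.request.urlopen(request) as response:
        return parse_response(json.load(response))
```

Calling `safe_search("draft-image.jpg", "YOUR_API_KEY")` returns a dict keyed by the same five categories you see in the demo, with likelihood values such as `VERY_UNLIKELY` or `LIKELY`.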

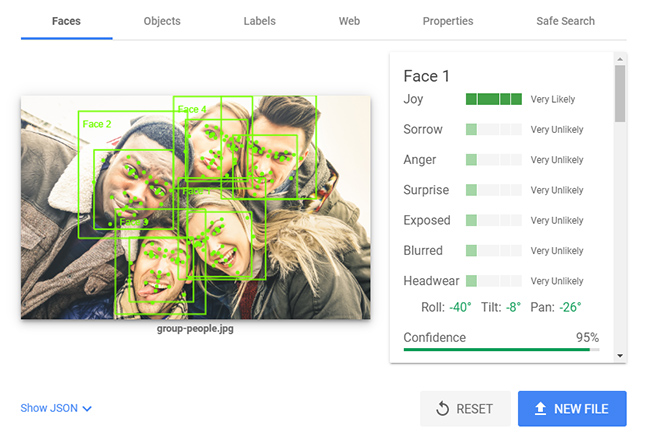

After dragging an image to the tool, or selecting one from your computer, Google’s Vision API will analyze that photo and return a boatload of information, including sections for faces, objects, labels, entities, webpages that contain the image, text, properties, and… SafeSearch.

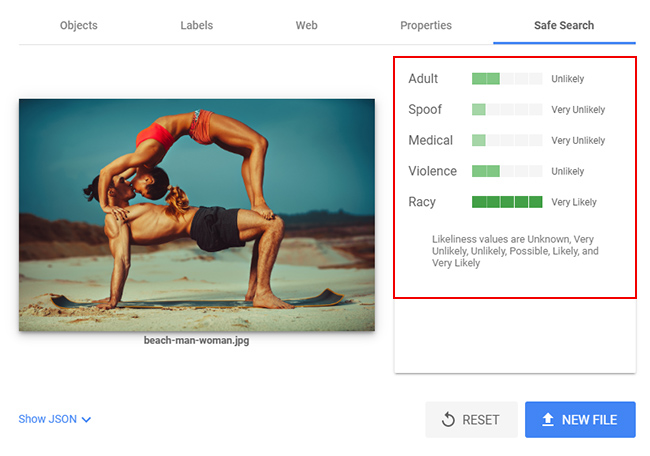

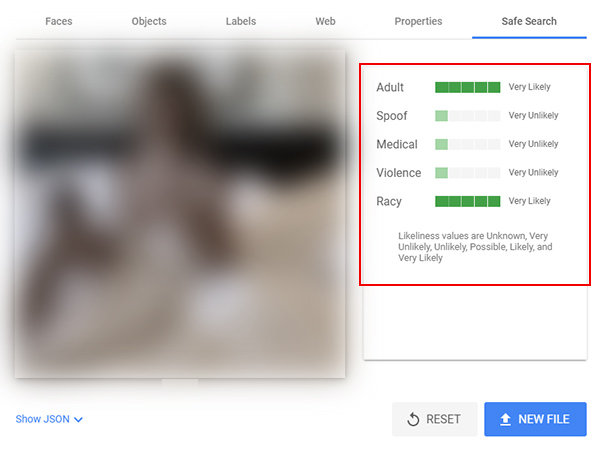

Yes, there’s a dedicated section for SafeSearch that provides the likelihood that the image is adult, spoof, medical, violence, or racy. It’s fascinating.

When testing various images using the API, you can start to get a feel for what crosses the line. Here are some examples of what the API returns for SafeSearch. The first returned the likelihood of being “racy”, but not really “adult”, while the second crossed the line and returned “very likely” for both “adult” and “racy”.

Note, although you’ll receive some great information from the API, it’s hard to tell exactly what will trip the SafeSearch filter. That said, this sure gives you a stronger view of how SafeSearch works. If you see “Very Likely” for both Adult and Racy, then that’s clearly an image you want to rethink (if you don’t want to trip SafeSearch). Also, you don’t want Google to begin to classify your site, or parts of your site, as having adult content.
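If you want to turn that rule of thumb into something your team can apply consistently, here’s a hypothetical Python triage function that takes the likelihood values the Vision API returns for an image. The thresholds are my own assumptions, not Google’s actual SafeSearch filtering criteria (which aren’t public): “Very Likely” for both Adult and Racy means rethink the image, and anything at “Possible” or above for either one gets a human review.

```python
# Vision API likelihood values, ordered from least to most likely.
LIKELIHOOD_ORDER = ["UNKNOWN", "VERY_UNLIKELY", "UNLIKELY", "POSSIBLE", "LIKELY", "VERY_LIKELY"]


def rank(value: str) -> int:
    """Position of a likelihood value; unrecognized values count as UNKNOWN."""
    return LIKELIHOOD_ORDER.index(value) if value in LIKELIHOOD_ORDER else 0


def triage(annotation: dict, review_at: str = "POSSIBLE") -> str:
    """Hypothetical editorial rule of thumb, not Google's filtering threshold.

    Returns "rethink" when adult and racy are both VERY_LIKELY, "review" when
    either reaches review_at or higher, and "ok" otherwise.
    """
    adult = rank(annotation.get("adult", "UNKNOWN"))
    racy = rank(annotation.get("racy", "UNKNOWN"))
    very_likely = rank("VERY_LIKELY")
    if adult == very_likely and racy == very_likely:
        return "rethink"
    if max(adult, racy) >= rank(review_at):
        return "review"
    return "ok"
```

With these defaults, an image like the first example above (racy, but not really adult) would most likely land in “review”, while the second would come back as “rethink”.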

False Positives, Notifying Google That Your Site Is Incorrectly Being Flagged

Machine learning algorithms aren’t perfect and there are times sites are incorrectly flagged as having adult content. If you experience this situation, then you should let Google know about it. In the past there was a form you could fill out to let Google know your site was incorrectly being flagged by SafeSearch.

Unfortunately, Google removed that form and now drives site owners to the webmaster forums to make their case. I’m not a big fan of that approach since site owners often don’t want to announce to the world that their site is being flagged by SafeSearch (especially if they don’t focus on adult content…)

So, the good news is that you can post in the forums and hopefully get your information in front of a Googler on the SafeSearch team. The bad news is that you have to tell the world about it, since the post is going to be public.

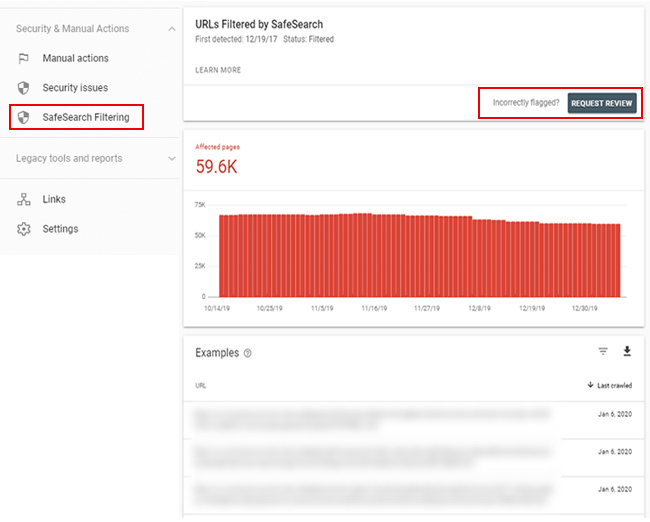

Feature Request: SafeSearch Report in Google Search Console Wanted!

After helping some companies with this situation, I think it would be great to have a notification in GSC or some type of report that lets site owners know if their content is being flagged by SafeSearch. And maybe have a “request review” button there in case the site, or sections of the site, are incorrectly being flagged. Here is what that can look like (hint, hint GSC team). :)

Closing Tips: SafeSearch, Tripping The Algo, and Future-proofing your site

As I explained earlier, it’s important to become as familiar as possible with SafeSearch if you provide content that could be classified as explicit (like adult content). Using the methods and tools listed in this post can help you determine if you are currently being filtered, and to understand if you might be filtered in the future. Below, I’ll cover some final tips and recommendations:

- Understand your current situation fully. If you think SafeSearch might be at play with your own site, perform thorough testing to see if you are being filtered. Then form a plan of attack if you are. Use the methods I listed earlier to test queries with SafeSearch off, and then on. Again, you might be surprised by what you find.

- If you provide adult content (or any type of content that might be deemed explicit), then start conversations with your marketing and dev teams about site structure. Remember, Google’s updated documentation explains that placing adult content on a separate domain or subdomain (it previously mentioned a separate directory) can help its algorithms understand where explicit content resides on your site, so SafeSearch filtering can be as granular as possible.

- Use adult meta tags to self-label pages that contain explicit content. Google explained this is the strongest signal you can send that your pages contain adult content.

- Identification-wise, SafeSearch takes both images and text into account. It’s not just the image content.

- If you are being filtered, then that filtering can happen for webpages, video, and images. It’s not just about filtering in the standard organic search results.

- Educate your editorial staff about SafeSearch and show them how to test images via Google’s Vision API. This can help protect your site down the line by having employees conduct preventative checks BEFORE that content is published.

- And if you believe your site is being incorrectly flagged by SafeSearch, you unfortunately can’t submit a form to tell Google like in the past. You must go to the webmaster forums to provide the details. That can hopefully get in front of a Googler on the SafeSearch team, but there are no guarantees.

Summary – Try To Be Safe With SafeSearch

I hope this post helped you gain a better understanding of Google’s SafeSearch feature, how to test your own site, and then how to conduct preventative checks. Don’t let some ghostly drop in traffic create a confusing situation for your company. Know when you’re being filtered and then form a plan for handling that situation. Good luck and safe searching :)

GG