{Update July 2022: I just published my post about the May 2022 broad core update. In my post, I cover five micro-case studies of drops and surges.}

In part one of my series on the December 2020 broad core update, I covered a number of important items: the rollout, timing, tremors and reversals, the impact I was seeing across verticals, and a number of important points for site owners to understand about broad core updates. If you haven’t read that post yet, I recommend doing that and then coming back to this post.

Those items spanned quality indexing, the importance of providing a strong user experience, avoiding hammering users with ads, technical SEO problems that cause quality problems, the A in E-A-T, machine learning in Search, and more. My first post will provide a strong foundation before jumping into the case studies I’ll cover below.

Three Case Studies, Three Interesting Lessons About Broad Core Updates

In this post, I’ll cover three case studies that underscore the complexity and nuance of broad core updates. Each is a unique situation and site owners (and SEOs) can learn a lot from what transpired with the sites I’ll be covering. I was planning on covering four cases, but this post was getting too long. I might cover that other case in another post in the future, though.

Remember, Google is evaluating many factors over time with broad core updates. It’s never about one or two things. Instead, there could be many things working together that cause significant impact. Google’s Gary Illyes once explained that Google’s core ranking algorithm is comprised of “millions of baby algorithms working together to output a score”. That’s why I recommend using a “kitchen sink” approach to remediation where you surface all potential problems impacting a site and fix them all (or as many as you can). The core point, pun intended, is to significantly improve the site overall.

It’s also important to understand that Google wants to see significant improvement over the long-term. Google’s John Mueller has explained this many times over the years, and it’s what I have seen while helping many companies deal with broad core updates. And the case studies below support that point as well.

In the case studies below, I’ll cover each site’s background leading up to the December broad core update, some of the important issues I surfaced while helping those companies, how those issues were addressed (or not), and what the outcome was when the December update rolled out. Again, my hope is that these cases will help site owners understand more about broad core updates, how to deal with negative impact, and the various factors that could be leading to that impact. Let’s jump in.

Below, I have provided a table of contents in case you want to jump to a specific part of this post:

- Case Study 1: Niche News Publisher – A Double Core Update Hit, But E-A-T Galore

- Case Study 2: Crushed By The December Update. It’s Like Google Doesn’t Even Know You Anymore

- Case Study 3: Overcoming “The Creep”

- Tips and recommendations for site owners.

Case Study 1: Niche News Publisher – A Double Core Update Hit, But E-A-T Galore

The first case I’m going to cover is a super interesting one (and I felt especially connected to it since I loved the site even before the site owner reached out to me!). It’s a news publisher focused on a very specific niche, and the site owner reached out to me after getting hit hard by the January 2020 core update. Again, I knew the site right away (since I had been reading it for years) and I was initially extremely surprised to hear the site had been hit hard.

Note, they contacted me in March of 2020, so months after the January update rolled out. Waiting to improve a site is a big risk site owners take and I’ll touch on that later in this case.

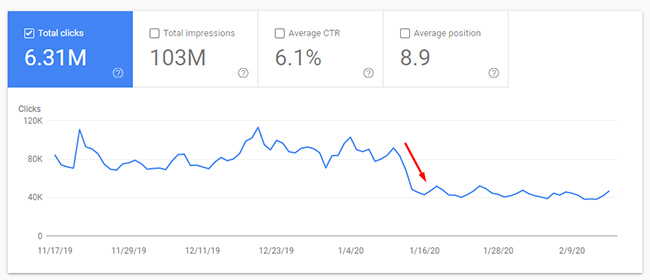

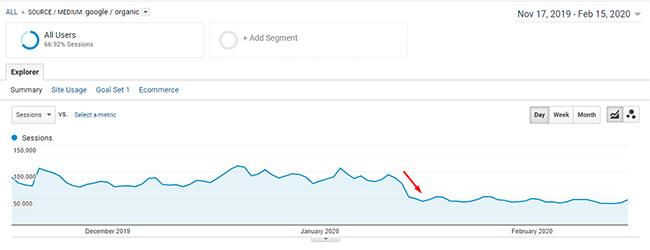

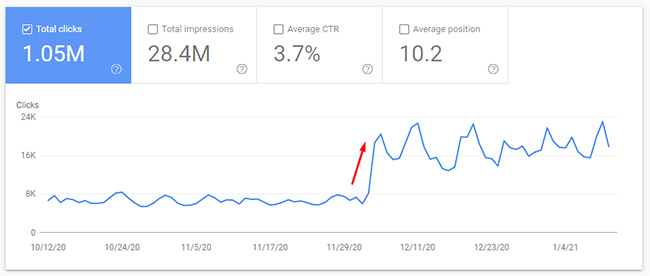

The site had lost 45% of its Google organic traffic overnight with the January 2020 broad core update:

I wish that was the only drop the site had to deal with in 2020, but it wasn’t. More about that soon.

Google News, Top Stories, and Discover:

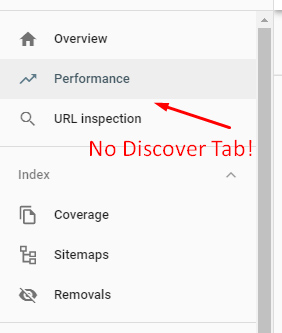

Based on how Google was viewing the site, it rarely showed up in Top Stories, almost never ranked highly in Google News, and had no Discover traffic at all. And I mean none… the Discover reporting didn’t even show up in GSC. That means it had no impressions or clicks over time. And remember, it’s arguably the top publisher in its niche.

E-A-T Galore:

The site, including its core writers, had E-A-T galore. That was definitely not the problem, not even close. It’s a well-known, well-respected leader in its niche. I would argue it’s the top news site in its niche and has broken some of the biggest stories in its category.

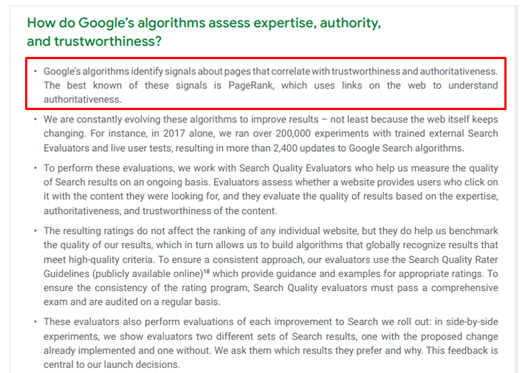

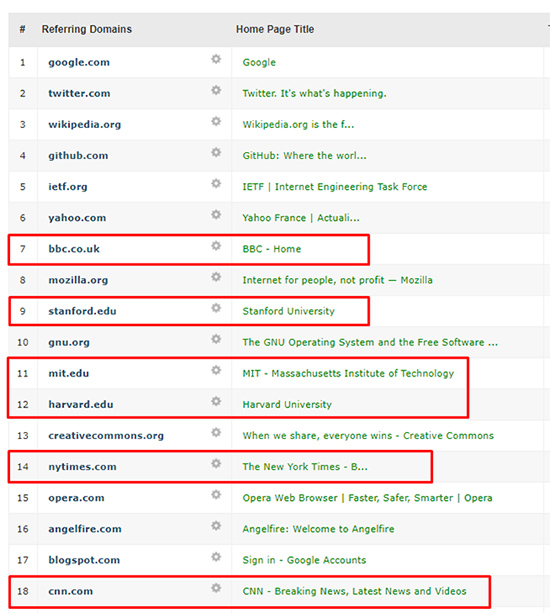

With regard to the A in E-A-T, which is authority, Google has explained that one of the best-known signals it uses is PageRank, or links from across the web. Google explained this in a whitepaper it released about fighting disinformation.

Also, Gary Illyes once explained that E-A-T was largely based on links and mentions from well-known sites. Note, I covered the A in E-A-T in my first post in this series (if you are interested in learning more about that topic). For this site, this was covered big-time. The site is absolutely an authority in its niche. The site has over 2M inbound links from over 26K referring sites. And a number of the sites linking to this news publisher are some of the most powerful and authoritative websites in the world.

So what was the problem? Why did the site take such a big hit? If you read part one of this series on the December 2020 broad core update, then you know that core updates are never about one thing. Google is evaluating many factors across a site and for an extended period of time. I always say there’s never one smoking gun, there’s typically a battery of them.

Therefore, I dug in the way I normally would in order to surface all potential issues that could be causing problems. That included auditing content quality, user experience, the advertising situation, technical SEO, and more. I clearly explained this process to my client and they were on board. They said they would clear a path, implement as many changes as possible, and as quickly as possible, in order to see recovery in the future.

Well, Hello May 2020 Broad Core Update, How Nice To Meet You…

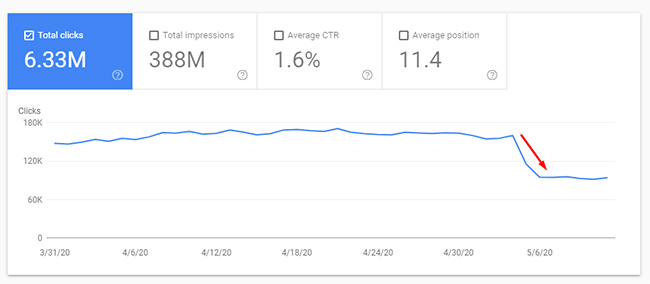

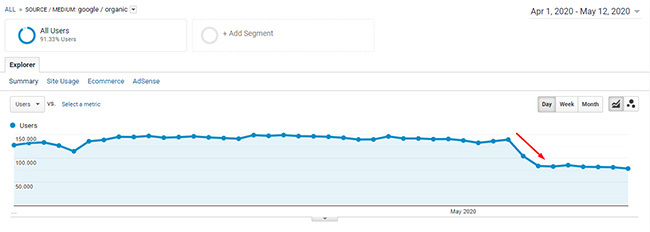

It’s also worth noting that as I was auditing the site, and my client was implementing changes, the May 2020 core update rolled out AND THEY GOT HIT AGAIN. This can absolutely happen if a site hasn’t improved significantly (or if a site chooses to not improve at all). In addition, even if you are implementing changes, it’s important to understand that recent changes would not be reflected in the next broad core update. I covered that several times in my previous posts, including both the May 2020 core update and part one about the December 2020 broad core update.

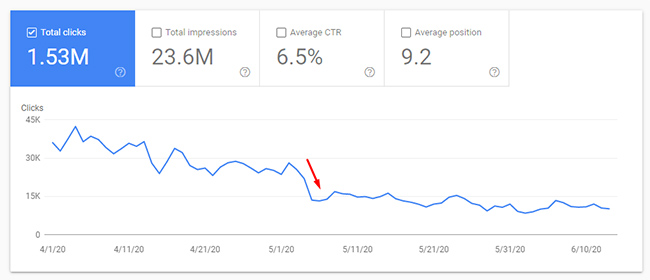

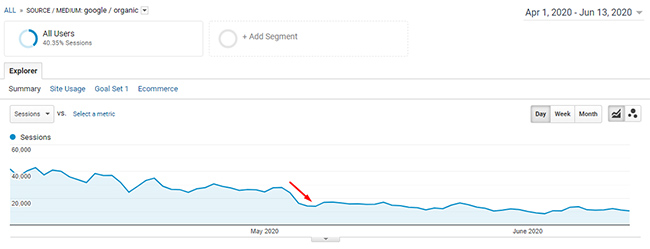

With the May 2020 core update, this news publisher dropped by an additional 51%. That’s on top of dropping 45% with the January core update. So they were down over 90% from where they once were:
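How two back-to-back drops combine depends on the baseline each percentage was measured against, and the figures above don’t spell that out. The following is a minimal sketch of the arithmetic under two hypothetical readings; the function name and both readings are my own illustration, not numbers taken from the site’s analytics.

```python
def cumulative_change(changes):
    """Compound a sequence of fractional changes (e.g. -0.45 for a 45% drop)."""
    remaining = 1.0
    for change in changes:
        remaining *= 1.0 + change
    return remaining - 1.0


# Reading 1 (assumption): the May drop is measured against the post-January level,
# so the two drops compound.
compounded = cumulative_change([-0.45, -0.51])

# Reading 2 (assumption): both drops are measured against the original baseline,
# so the two drops simply add up.
summed = -0.45 + -0.51

print(f"compounded: {compounded:.1%}, summed: {summed:.1%}")
```

Either way, the same helper makes it easy to see how much of a later surge is needed just to get back to the original level.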

Needless to say, my client felt defeated. They couldn’t believe they dropped even more. They were now receiving a trickle of traffic compared to what they used to. I explained that they should continue to make changes, since not enough had been implemented yet and the changes that were live hadn’t been in place over the long term.

I basically felt like Mickey in Rocky pushing his fighter to keep driving forward:

Needless to say, my client was eager to keep driving forward, implement big changes, improve the site significantly, etc. But, it’s important to know that you can get hit by multiple core updates in a row if Google isn’t digging your site. I have covered that before in blog posts and in my presentations on broad core updates.

Below, I’ll cover several of the core items I surfaced during the audit that could have been contributing to the drops the site experienced during two broad core updates. I can’t cover everything that was addressed during the engagement, but I will provide a number of important findings. And keep in mind, there are millions of baby algorithms working together to output a score. It’s not about one of the following items, but more about improving all of them (including other items not covered below).

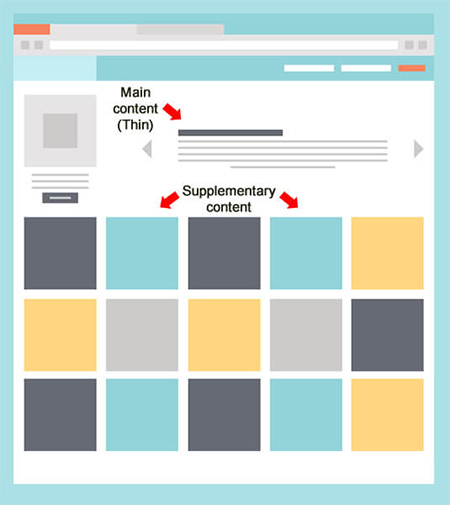

Lower-hanging fruit: Thin content, cruft:

With many news publishers, there’s a tendency for low-quality content to build up over time. They are typically larger, more complex sites and cruft can definitely build. And after years go by, some sites might have a lot of lower-quality content in pockets across the site.

Although this site produces some of the best content in its niche, I did surface a lot of cruft that had built up over time. I explained what “quality indexing” was to my client, which is making sure only high-quality content is indexed, while ensuring low-quality or thin content is not indexed. They understood the concept quickly and had no problem nuking thin and low-quality content from the site. Remember, Google is on record explaining that every page indexed is taken into account when evaluating quality.

Here is Google’s John Mueller explaining this:

Content quality (and strategy):

As I was analyzing content that dropped heavily during each update, I noticed something interesting. There were a number of round-up-like posts that were thin and somewhat misleading. So instead of creating one strong page that could be updated for the content at hand (which I can’t reveal), the site was publishing many thinner pages over time. This led to many pages on the site that were ranking and receiving traffic, but had no way to meet or exceed user expectations. This was a huge find in my opinion.

My client tackled this head-on and used the approach I recommended for creating one piece of content that can live over time and always contain the latest information, while archiving older pages when needed. This led to higher quality content that can meet or exceed user expectations, while also improving the “quality indexation” levels on the site overall. It was a win-win.

User Frustration and “Hell hath no fury like a user scorned”:

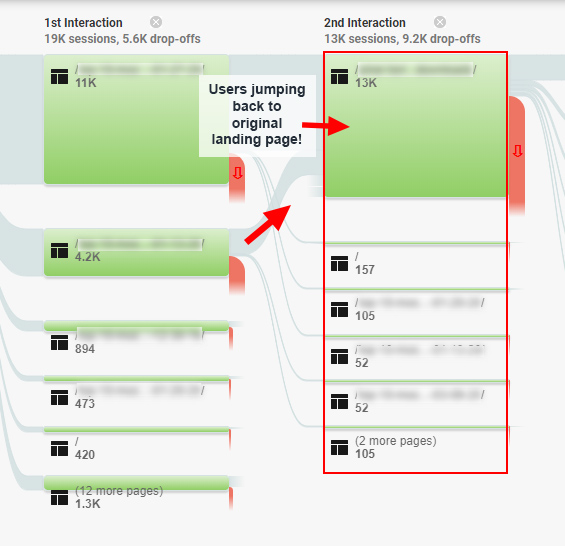

While continuing to analyze content that dropped on the site, I noticed some content that could easily confuse users (and it looked like it was confusing Google too). I can’t go too in-depth here, but let’s just say that users searching Google to accomplish a task were ending up on the site and on these pages. But the pages did not provide that functionality at all.

So users were visiting that page, then digging further into the site believing they could ultimately find what they were looking for. But they couldn’t find that information no matter where they turned, which led them back to the original landing page. And then using behavior flow in Google Analytics, I could see they were then trying other pages on the site in a never-ending journey. This is similar to the case study I wrote about the engagement trap and user frustration.

After explaining this to my client, and showing them behavior flow screenshots of what was happening, they nuked those pages. I also believe this was an important finding. Remember, “hell hath no fury like a user scorned”.

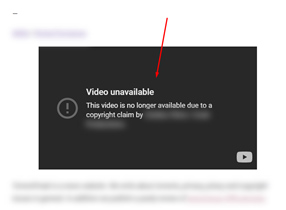

UX, Unavailable Videos, and Broken pages:

There are also times where news publishers push an article live and don’t revisit those pages (since they are working on the next big story). Unfortunately, those older pages can break for one reason or another. For example, I surfaced pages with either missing images or videos that weren’t available anymore. And when the videos were core to the article, that made the pages useless. There is no way a page can meet or exceed user expectations when the core element doesn’t even load.

So in an effort to enhance the user experience across the site, my client tackled these issues page by page. And if a page couldn’t meet or exceed user expectations anymore based on query, then the page was nuked.

Here was a third-party video used to support an article, but it was no longer available (and was actually removed due to a copyright claim against whoever uploaded the video to YouTube):

Sponsored links: Handle with care:

I’ll keep this one quick. The site has sponsors that are prominently displayed on the site with sponsored links. But, it wasn’t clear they were sponsors, so that required a clear disclaimer. Second, the links were followed. My client nofollowed all of those links and used rel sponsored as well. This was an easy fix, but the right one on several levels.
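A check like this can be scripted. Below is a minimal sketch, assuming you already know which domains are sponsors; the class name, the `sponsor.example` domain, and the sample markup are all hypothetical. It flags links to sponsor domains that carry neither `rel="sponsored"` nor `rel="nofollow"`.

```python
from html.parser import HTMLParser
from urllib.parse import urlparse


class SponsoredLinkAuditor(HTMLParser):
    """Collect links to sponsor domains that lack rel="sponsored" or rel="nofollow"."""

    def __init__(self, sponsor_domains):
        super().__init__()
        self.sponsor_domains = set(sponsor_domains)
        self.unqualified = []

    def handle_starttag(self, tag, attrs):
        if tag != "a":
            return
        attributes = dict(attrs)
        href = attributes.get("href") or ""
        host = urlparse(href).hostname or ""
        if host not in self.sponsor_domains:
            return
        # rel can hold several space-separated tokens, e.g. "sponsored nofollow".
        rel_tokens = set((attributes.get("rel") or "").lower().split())
        if not rel_tokens & {"sponsored", "nofollow"}:
            self.unqualified.append(href)


# Hypothetical page fragment: one followed sponsor link, one qualified, one unrelated.
page_html = """
<a href="https://sponsor.example/offer">Our sponsor</a>
<a href="https://sponsor.example/deal" rel="sponsored nofollow">Our sponsor</a>
<a href="https://news.example/story">Unrelated story</a>
"""

auditor = SponsoredLinkAuditor({"sponsor.example"})
auditor.feed(page_html)
print(auditor.unqualified)
```

The sketch only looks at exact hostnames, so subdomains of a sponsor would need to be listed separately or matched with a looser rule.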

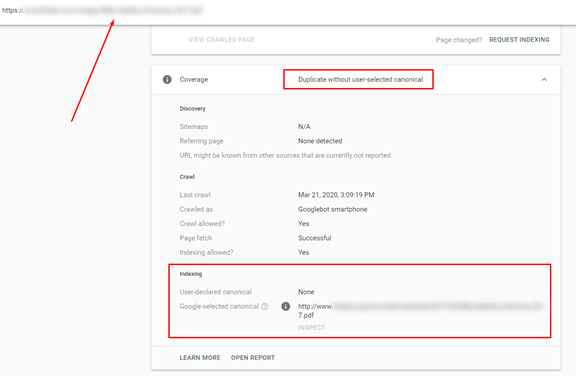

Google canonicalizing pages to third-party sites:

There was also a canonicalization issue where the publisher had uploaded a number of pdfs from other sites to support content from specific articles. In Google Search Console (GSC), you could clearly see that Google was canonicalizing all of those pdfs to pages on other sites (knowing where the original pdfs originated).

Having a few here and there isn’t a big deal, but you have to wonder what type of signal it sends to Google when many are seen as duplicate (and Google is choosing pages from third-party sites as the canonical urls). My client determined the pdfs were not essential to the articles and nuked many of them from the site.

In addition, there were a number of pages that had canonical tags pointing at urls that didn’t resolve with 200 codes. Some were 404ing, others were redirecting, etc. Rel canonical is a strong signal to Google about which pages should be indexed and ranking, so I always recommend having accurate canonical tags.
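To illustrate the canonical tag check, here is a rough sketch that pulls the canonical url out of a page and classifies the status code it returns. The names are hypothetical, and `fetch_status` is an assumed callable you would supply yourself (for example, a wrapper around an HTTP client that does not follow redirects); a plain dictionary stands in for live requests here.

```python
from html.parser import HTMLParser


class CanonicalFinder(HTMLParser):
    """Record the href of the first <link rel="canonical"> tag."""

    def __init__(self):
        super().__init__()
        self.canonical = None

    def handle_starttag(self, tag, attrs):
        attributes = dict(attrs)
        rel_tokens = (attributes.get("rel") or "").lower().split()
        if tag == "link" and "canonical" in rel_tokens and self.canonical is None:
            self.canonical = attributes.get("href")


def audit_canonical(page_html, fetch_status):
    """Return (canonical_url, verdict); fetch_status maps a url to an HTTP status code."""
    finder = CanonicalFinder()
    finder.feed(page_html)
    if not finder.canonical:
        return None, "missing"
    status = fetch_status(finder.canonical)
    if status is None:
        return finder.canonical, "unknown"
    if status == 200:
        return finder.canonical, "ok"
    if 300 <= status < 400:
        return finder.canonical, "redirects"
    return finder.canonical, f"error {status}"


# Hypothetical status codes standing in for live HTTP requests.
fake_statuses = {
    "https://news.example/story": 200,
    "https://news.example/old-story": 301,
    "https://news.example/gone": 404,
}

page = '<head><link rel="canonical" href="https://news.example/old-story"></head>'
print(audit_canonical(page, fake_statuses.get))
```

Anything other than an "ok" verdict is worth a closer look, since the canonical tag is then pointing Google at a url that doesn’t resolve cleanly.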

Here is an example of Google canonicalizing pdfs to urls on other sites:

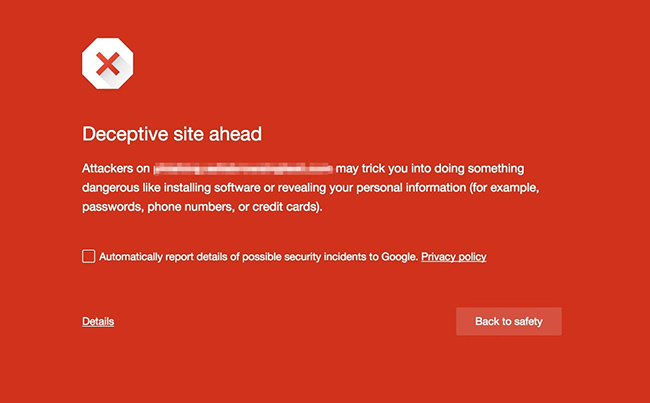

Downstream Sites and Maintaining User Happiness (and Security):

For larger-scale publishers, it’s easy to not revisit older articles over time, including checking the outbound links on those pages. And over time, you could be driving users to very strange (and risky) sites. I’ve always recommended auditing outbound links to make sure you aren’t putting your users, and essentially Google’s users, in a tough situation.

Based on the niche, this news publisher had a number of links that led to sketchy websites, and I would say even risky websites. It’s hard to say if those domains changed hands at some point, or if something else was going on, but the outbound link situation wasn’t great. My client worked on making sure outbound links were accurate, helpful, and didn’t put users at risk.
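A first pass at an outbound link audit can be as simple as grouping external links by domain, so a human can review the list for domains that look sketchy or may have changed hands. The sketch below is only an illustration; the class name, hostnames, and sample markup are hypothetical, and it doesn’t judge whether a domain is risky.

```python
from collections import Counter
from html.parser import HTMLParser
from urllib.parse import urlparse


class OutboundLinkCollector(HTMLParser):
    """Count links that point to hosts other than the site's own host."""

    def __init__(self, site_host):
        super().__init__()
        self.site_host = site_host
        self.external_hosts = Counter()

    def handle_starttag(self, tag, attrs):
        if tag != "a":
            return
        host = urlparse(dict(attrs).get("href") or "").hostname
        # Relative links have no hostname and are internal by definition.
        if host and host != self.site_host:
            self.external_hosts[host] += 1


# Hypothetical article markup with internal and external links.
article_html = """
<a href="/about">About</a>
<a href="https://news.example/archive">Archive</a>
<a href="https://old-partner.example/tool">Tool</a>
<a href="https://old-partner.example/download">Download</a>
<a href="https://docs.example/spec">Spec</a>
"""

collector = OutboundLinkCollector("news.example")
collector.feed(article_html)
for host, count in collector.external_hosts.most_common():
    print(host, count)
```

Running this across older articles gives a short list of downstream domains to check manually.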

Chrome was even showing a safe browsing warning when visiting some of the downstream sites:

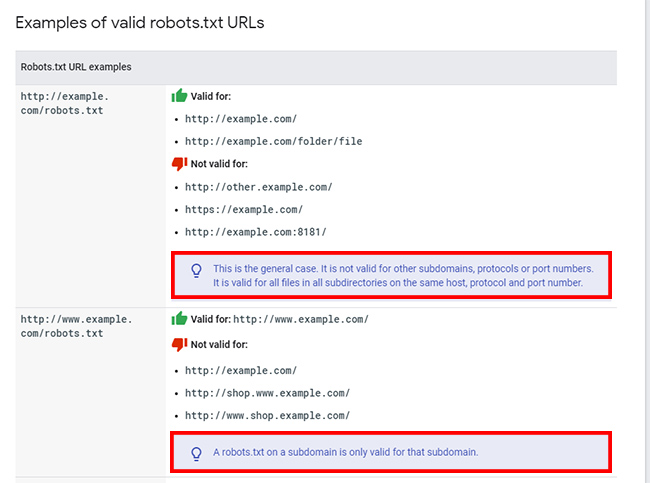

Search URLs and playing hide and seek with robots.txt:

When I began auditing the site, I quickly noticed that many internal search urls were showing up in the crawl data, in GSC, etc. Some were indexed, some were not, and the site’s robots.txt was supposed to be blocking them (or so I thought). It turned out there were many internal search urls triggered from outside the site that didn’t return any content. I saw thousands of these urls in the reporting.

But… the directory holding internal search urls was blocked by robots.txt. So what was going on?? Well, I wrote a post last year that covered how robots.txt files are handled per protocol and subdomain, and that’s exactly what was going on here. Basically, you can have multiple robots.txt files running at one time and the directives in each file can conflict. For this site, there were two files running on the same subdomain (one per protocol), and one blocked internal search and the other didn’t. My client cleaned this up pretty quickly.
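The conflict is easy to reproduce offline. Here is a minimal sketch using Python’s standard `urllib.robotparser`; the two file bodies and the `www.example.com` host are hypothetical stand-ins for what an http and an https version of the same subdomain might serve.

```python
from urllib.robotparser import RobotFileParser


def is_allowed(robots_txt, url, user_agent="Googlebot"):
    """Parse robots.txt content and report whether user_agent may fetch url."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser.can_fetch(user_agent, url)


# Hypothetical file contents: the https file blocks internal search, the http file does not.
robots_by_origin = {
    "https://www.example.com": "User-agent: *\nDisallow: /search/\n",
    "http://www.example.com": "User-agent: *\nDisallow:\n",
}

for origin, robots_txt in robots_by_origin.items():
    url = f"{origin}/search/?q=test"
    print(origin, "allowed" if is_allowed(robots_txt, url) else "blocked")
```

The same path gets a different answer depending on which protocol’s file applies, which is why it’s worth checking the robots.txt served on every protocol and subdomain variation.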

Beyond what I covered above, there were a number of other findings surfaced during the audit. I can’t cover everything here or the post would be huge. But just understand that they tackled many different problems across the site. Some major, some minor, but all with the goal in mind of improving the site significantly over the long-term.

Liftoff in December: 149% Surge

Months went by since the last broad core update in May and my client would email me periodically to see if I had any idea when the next update was coming. I explained there’s no set timeframe for broad core updates and that we would hopefully see one soon. I told them to keep driving forward.

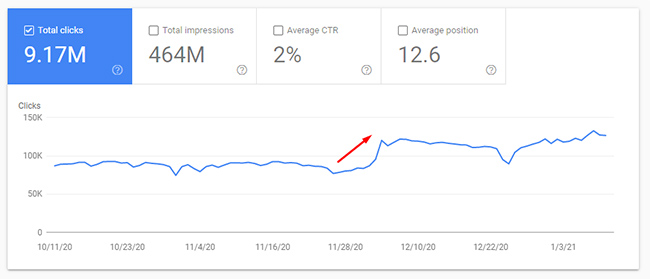

And then nearly seven months after the May update, the December 2020 broad core update rolled out. And it was a very, very good one for this news publisher. They surged almost immediately, and it was hockey stick growth. The site surged 149% with the update:

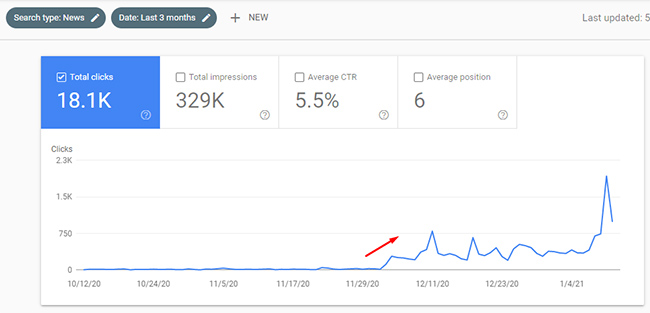

But that doesn’t even do the surge justice. Remember, they had almost no rankings and traffic from Google News, Top Stories, or Discover. After the update, they started ranking in Top Stories and in Google News more prominently. It was awesome to see. Here is the news tab in GSC showing the growth there:

Regarding Discover, that is still a mystery to me. They still have no Discover traffic at all. I believe it could be due to the niche they cover and not necessarily the site, but it’s hard to say for sure. So, this would be the one area that didn’t surge with the update.

Case Study 2: Crushed By The December Update: It’s Like Google Doesn’t Even Know You Anymore

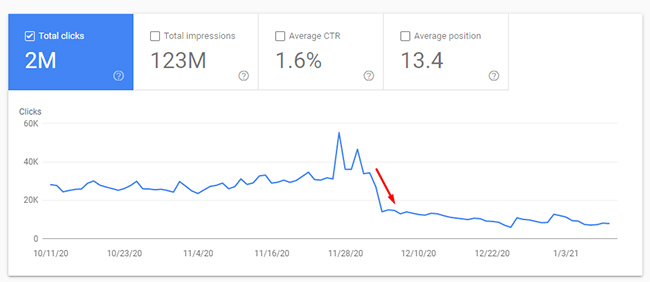

The next case study I’ll cover is a site that has seen its fair share of movement over the years (dating back to medieval Panda). It has been doing well recently, and even saw a nice surge during the May 2020 core update. But there was a growing, even insidious, problem that could have heavily contributed to the drop the site experienced with the December broad core update.

I can’t go into too much detail about the niche, but it’s an affiliate site with several types of content (each targeting a different user intent). The December update was not a good one for this site… It lost 57% of its Google organic traffic overnight starting on 12/3/20, and Google represents a majority of overall traffic. Needless to say, this is extremely problematic for the business.

I know the CEO of the company and he reached out quickly after the December update started rolling out. The drop, as you can see above, was dramatic. I had a call with the CEO, and as I learned more about their situation and setup, my “broad core update antenna” went up. I knew the site pretty well, and specific points he was making about what they had been doing recently had me concerned. I’ll cover what I can below. My hope is that they will be making big changes moving forward.

Swerving Out Of Your Lane: Major injection of thin content (and content not targeting their core competency):

I’ve mentioned the importance of “staying in your lane” several times over the years after seeing companies expand beyond their core competency and publish content that they either didn’t have the expertise to write about, or content that could confuse Google topicality-wise. For this site, the latter was my concern.

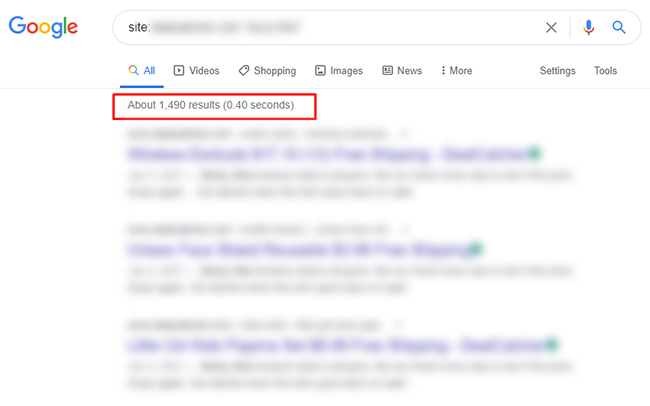

And in addition to potentially confusing Google’s algorithms about what the site’s core competency was, a lot of the new content was thin and lower quality. I’ve covered “quality indexing” many times in the past and this is exactly the type of content I would recommend keeping out of Google’s index. Unfortunately for this site, thousands of pages were added over time and Google was actually ranking some of it well. That’s a dangerous situation and could end very badly SEO-wise.

Before they knew it, a big shift had happened percentage-wise for the site from an indexing standpoint. Now there was more low-quality and thin content on the site than core content. And that core content is what got them to where they were SEO-wise (performing well). And in my opinion, Google is clearly not digging that shift. Again, the site dropped by 57% since the December broad core update rolled out.

To add insult to injury, many of those thin pages contained content that expired, yet the pages remained on the site and indexable. Google was seeing some of those pages as soft 404s, while others remained in the index. So, if users were searching Google and finding those pages, the content had no chance of meeting or exceeding user expectations. Again, this was an insidious problem in my opinion. And those thin pages were causing multiple problems from an SEO standpoint.

Here was a quick site query showing close to 1,500 urls indexed that held expired content:

Beyond those findings, there were also a number of other issues that I surfaced which should be fixed. Those included the use of popups on some versions of the pages (including on mobile), more thin content across other page types, urls categorized as “crawled, not indexed” that should be handled properly (which spanned several page types), and more.

I’m still in the middle of analyzing the site, so there will be more findings. But the core point with this case is to always maintain strong quality indexing levels, make sure you don’t tip the scales with lower quality content, always make sure you can meet or exceed user expectations, and make sure technical SEO problems don’t end up causing quality problems. I hope to report back about this site after subsequent broad core updates (based on the changes the site owner decides to implement).

Case Study 3: Overcoming “The Creep”

A large-scale site in a tough niche roars back with the December update:

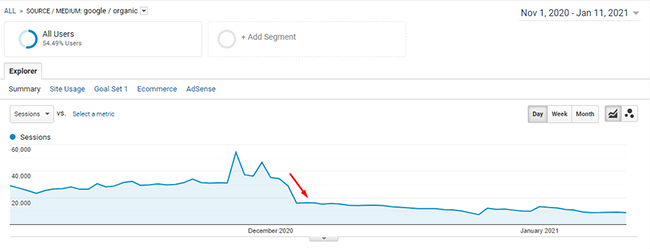

I can’t tell you how happy I am to be covering this recovery. This was actually one of the case studies in my post about the May 2020 core update, but as a big drop! As I mentioned in that post, many problems had crept back into the site over time. They had surged with previous major algorithm updates only to get hammered by the May 2020 core update. They dropped 41% overnight in early May.

Revisiting Core Problems: Bombarding Users With Ads

Leading up to the May broad core update, the ad situation had gotten much, much worse over time. It was extremely aggressive, disruptive, and even deceptive in some cases. This was not a new problem for the site since they had tackled aggressive ads previously (years ago). But like I said earlier, some bad problems crept back in over time.

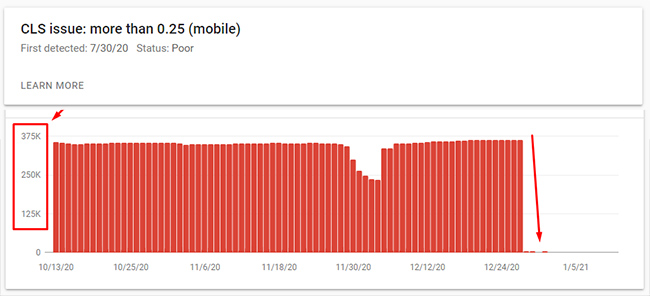

For some pages, there were 15-20 ads per page, organized in a way that was extremely disruptive to the user experience. After sending my findings through (including many screenshots of what I was seeing), the site owner moved quickly to tone down the ad situation. That resulted in a 50% reduction in ads per page in some situations.

Needless to say, this improved the user experience greatly on the site. And those aggressive ads were impacting performance as you can see in the Core Web Vitals reporting in GSC. CLS scores were not good. The number of urls with CLS problems is now down to 0 post-update (after the site owners worked on toning down the ad situation and improving performance overall). They also moved to AWS after the May update to improve performance for the site overall.

Large and Complex Site With Pockets of Thin Content:

In addition, there was also a thin content problem (which is common in this niche). This is also something that has been brought up in the past for this site. Once the pockets of thin content were uncovered, the site owner moved quickly to noindex or 404 as much of that content as they could (depending on the page type). This led to an increase in “quality indexing”, which is making sure only high-quality pages are indexed, while ensuring low-quality or thin content is not indexed. This is something I have written about many times and it can rear its ugly head easily on larger-scale and complex sites.

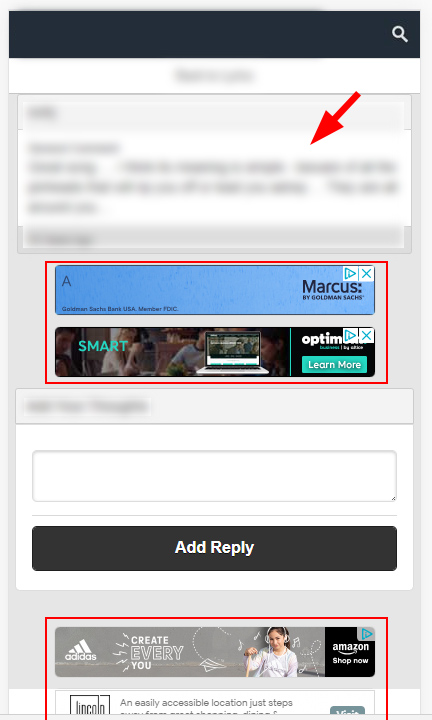

Here are some screenshots of thin content I was surfacing. Also, notice the ads + thin content in the second screenshot. That’s a lethal combination in my opinion:

The Power of Providing Unique Content For Sites With The Same, Or Very Similar Content:

In part one of this series on the December 2020 core update, I mentioned how sites with the same, or very similar content, need to differentiate their sites as much as possible. John Mueller has covered this several times in webmaster hangout videos and I have helped a number of companies deal with this situation.

For this site, I recommended adding some type of unique and valuable content beyond what every other site was providing. Once I ran through the reasons why they should consider this, along with sending John’s video covering the issue, the site owner brainstormed a few ideas and came up with a strong solution. I can’t explain what they did specifically, but they were able to add unique content in a prominent place on core pages that was helpful for users.

Percentage-wise, this didn’t impact a large percentage of pages on the site, but it did impact some of the most popular pages. I’m hoping they roll this out to more pages as time goes on.

Here is Google’s John Mueller explaining the importance of differentiating your content as much as you can:

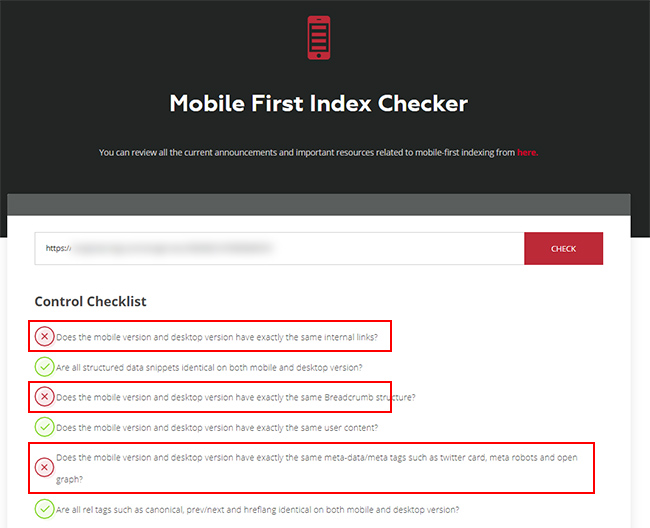

Technical Problems Causing Quality Problems: Mobile and Desktop Parity Issues

When digging into the site last May, I started noticing a problem with the mobile setup. This site still runs an m-dot subdomain holding the mobile pages, and I started checking for parity across mobile and desktop. What I found was alarming across some urls.

When switching to mobile, the urls were redirecting to the desktop version, but to a different url! This wasn’t site-wide and was just impacting certain urls, but needless to say, this was extremely problematic. Also, Google still hasn’t moved the site to mobile-first indexing based on the m-dot setup and the parity problems I discussed in my May 2020 case study post. For example, the pages lacked parity from a content standpoint, links standpoint, and more. So, Google’s systems are clearly having issues with moving the site to mobile-first indexing. And to clarify, there’s no ranking benefit to being moved to mobile-first indexing, but not being moved underscores the parity problems I just covered.

Since the desktop content is what’s indexed for this site, it’s hard to say how the weird redirect problem was impacting the site SEO-wise, but it’s clear this was impacting users. Once I communicated the problem to the site owner, they tackled the strange redirect problem quickly. But who knows how long that problem was in place, how many users that impacted, etc.
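A redirect parity check like this can be sketched in a few lines. The example below assumes the mobile and desktop versions share the same path and differ only by host, which may not hold for every m-dot setup; the hostnames, function names, and the observed redirect data are hypothetical (in practice the redirect targets would come from a crawl).

```python
from urllib.parse import urlsplit


def expected_desktop_url(mobile_url, mobile_host="m.example.com", desktop_host="www.example.com"):
    """Swap the m-dot host for the desktop host, keeping path and query unchanged."""
    parts = urlsplit(mobile_url)
    if parts.hostname != mobile_host:
        raise ValueError(f"not a mobile url: {mobile_url}")
    return parts._replace(netloc=desktop_host).geturl()


def find_mismatched_redirects(observed_redirects):
    """Return (mobile_url, expected, actual) for redirects landing on a different desktop url."""
    mismatches = []
    for mobile_url, actual in observed_redirects.items():
        expected = expected_desktop_url(mobile_url)
        if actual != expected:
            mismatches.append((mobile_url, expected, actual))
    return mismatches


# Hypothetical crawl output: mobile url -> url it redirected to.
observed = {
    "https://m.example.com/widgets/blue": "https://www.example.com/widgets/blue",
    "https://m.example.com/widgets/red": "https://www.example.com/gadgets/green",
}

for mobile_url, expected, actual in find_mismatched_redirects(observed):
    print(f"{mobile_url} -> {actual} (expected {expected})")
```

Only the second url is flagged, since it lands on a desktop page that doesn’t correspond to the mobile page the user requested.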

Beyond what I covered above, there were a number of other problems riddling the site (across several categories of issues). There were more quality problems, UX issues, technical problems causing quality problems, and more. Again, it’s a large-scale and complex site with many moving parts (and it’s in a tough niche). The site owner moved to make as many changes as possible.

And by the way, they still have a huge change in the works. As I mentioned earlier, they run an m-dot now (which is not optimal), but they have a responsive version of the site ready to launch soon! So theoretically, things will get even better over time usability-wise, site maintenance-wise, etc. Needless to say, I’m eager to see that go live.

Happy Holidays! The December Update Comes Roaring Through…

Since you can’t (typically) see a recovery in between broad core updates, the site owner fixed as much as they could and kept driving forward. The site owner was fully aware that they probably needed to wait for the next broad core update to see significant movement in the right direction and hoped for the best.

Well, the December update didn’t disappoint!

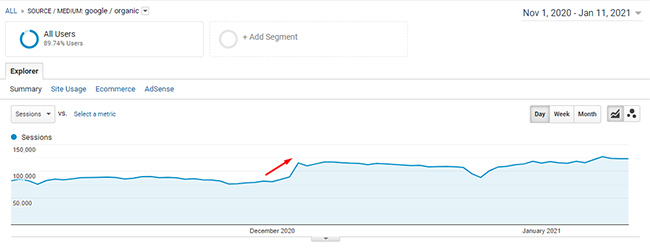

Within 24 hours of the rollout, the site absolutely started to surge. The site is up 40% since the December update. It’s been amazing to watch and it underscores how a “kitchen sink” approach to remediation worked well for this client.

Also, they are moving to a responsive design soon, which will alleviate parity problems across mobile and desktop and hopefully get them moved to mobile-first indexing soon. Again, there’s no ranking benefit to getting moved to mobile-first indexing, but it decreases the number of issues that can arise based on maintaining two pages for every one on the site. Also, mobile-first indexing will be enabled for all sites in March of 2021, so I’d love to see them with a solid setup and possibly moved before that date.

Moving Forward: Another List of Tips and Recommendations for Site Owners

As you can see via the case studies I covered, broad core updates are complex and nuanced. Google is evaluating many factors across a site and over the long-term. When a site is negatively impacted by a broad core update, there’s never going to be one thing a site can surface that’s causing the drop. Instead, it’s often a combination of issues that is dragging the site down.

Below, I’ll cover some final bullets for site owners that are dealing with negative impact from broad core updates.

- Relevancy adjustment? – First understand whether or not there was a major relevancy adjustment, which could be correct. For example, was your site ranking for queries it had no right ranking for? There’s not much you can do about that… But if it’s not a relevancy adjustment, then dig into your site to surface all potential issues that could be causing problems.

- The power of user studies – To understand how real users feel about your site, content, expertise, etc., run a user study through the lens of broad core updates. I’ve written a case study about doing this that you can review, Google recommends running user studies for this purpose, and it makes sense on multiple levels. I can’t tell you how powerful it can be to receive objective feedback from real users.

- Never one smoking gun – Avoid looking for one smoking gun. You won’t find it. Instead, objectively analyze your site and surface all potential problems that could be causing issues. Then form a plan of attack for fixing them all (or as many as you can).

- Recovery – Understand you (typically) cannot recover until another broad core update rolls out. I have covered this in previous posts and in my SMX Virtual presentation about broad core updates. So, don’t roll out the right changes only to roll them back after a few weeks. That’s not how this works. You will end up spinning your wheels.

- Read the Quality Rater Guidelines (QRG) – There’s a boatload of amazing information directly from Google about what they deem high versus low quality. The document is 175 pages of SEO gold.

- Recovery Without Remediation – Understand that some sites recover without performing any remediation. That can definitely happen since there are millions of baby algorithms working together to output a score. In my opinion, if you don’t significantly improve your site over the long-term, you leave your site susceptible to seeing negative impact down the line. That’s why you can see the yo-yo effect that some sites experience (as they sit in the gray area of Google’s algorithms). That’s a maddening place to live for site owners. I would try and improve as much as possible.

Summary – Google’s Broad Core Updates Are Complex and Nuanced

I hope you found the case studies I covered interesting. One thing is for sure, they underscore the complexity and nuance of Google’s broad core updates. If you have been negatively impacted by a broad core update, then I recommend reading several of my posts about core updates, forming a plan of attack for thoroughly analyzing your site through the lens of those updates, and then working to significantly improve your site over time. You can absolutely recover, but it takes time, hard work, and patience. Good luck.

GG


Read part one of this series on the December 2020 broad core update.