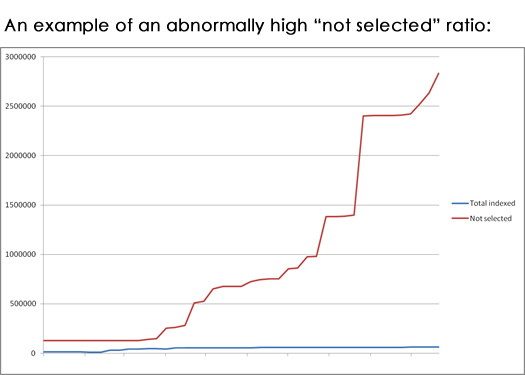

With the release of index status in Google Webmaster Tools, many webmasters are now asking why their “not selected” numbers are so high. They want to know whether those numbers are good, bad, or normal. Unfortunately, there’s no easy answer, since it depends on the site at hand. That said, comparing the number of “not selected” pages to the number of pages indexed is a good first step toward understanding whether a technical problem is causing a spike in pages categorized as “not selected”.

For example, if you have 200 pages indexed on your site and you see 350 categorized as “not selected”, that might be ok. But if you see 25K or more pages categorized as “not selected”, that should raise a red flag that something may not be right with the site. For example, is there a site structure issue creating thousands of variations of pages with extremely similar content (duplicate content)?
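To make that comparison concrete, here is a minimal Python sketch that computes the ratio from the two numbers shown in index status. The function names and the red-flag threshold of 10 are hypothetical and chosen only for illustration; there is no official cut-off, and what counts as normal depends on the site.

```python
def not_selected_ratio(indexed: int, not_selected: int) -> float:
    """Return how many 'not selected' URLs exist per indexed URL."""
    if indexed <= 0:
        raise ValueError("indexed must be a positive number of pages")
    return not_selected / indexed


# Hypothetical threshold for illustration only; tune it to the site at hand.
RED_FLAG_RATIO = 10.0


def needs_investigation(indexed: int, not_selected: int,
                        threshold: float = RED_FLAG_RATIO) -> bool:
    """Flag sites whose 'not selected' count dwarfs their indexed count."""
    return not_selected_ratio(indexed, not_selected) >= threshold


if __name__ == "__main__":
    # The two scenarios described above.
    print(not_selected_ratio(200, 350))       # 1.75
    print(not_selected_ratio(200, 25_000))    # 125.0
    print(needs_investigation(200, 350))      # False
    print(needs_investigation(200, 25_000))   # True
```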

A Recent Example of a Poor “Not Selected” Ratio

During SEO audits, I sometimes come across significant problems like the one mentioned above, and those problems can inhibit a company’s search efforts (to say the least). During a recent SEO audit, I came across a very interesting situation. Index status revealed an extremely high number of “not selected” pages compared to the number of pages indexed, so I dug into the site to find out why.

I found several issues contributing to the problem, so no single issue was pumping up the number. That said, the problem I’m going to cover today was creating thousands of duplicate pages without the site owner knowing it. The more pages I checked, the more duplicates I found. This is a problem that can easily slip through the cracks for many webmasters, especially when a small or medium-sized business handles all of its website development on its own. Below, I’m going to cover what I found and, more importantly, how you can avoid the problem in the first place.

The Danger of an Extra Character

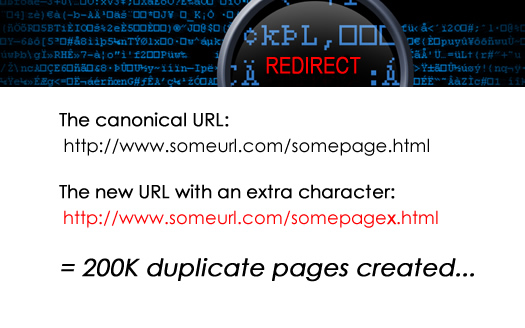

As I was analyzing the site manually and via a number of test crawls, I came across some URLs that contained an extra character. Specifically, the extra character was being appended to each canonical URL. All of those URLs were from one specific section of the site (which contained thousands of URLs). After digging into that section, I found that the problem affected almost every URL linked from a certain element within each page. So I homed in on that element to find out how the duplicate pages were being created. And by the way, that section of the site contains nearly 200K pages. Yes, this was a huge problem.

The Result – Duplicate Content Ad Infinitum

The core problem is that the extra character created a new URL, but that new URL was an exact duplicate of the canonical URL (the URL that should be resolving). And as you can guess, both versions could be accessed on the site. One part of the site linked to the canonical versions of the pages, while this problematic section linked to the duplicate versions.
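If you have a list of URLs from a test crawl, you can look for this pattern programmatically. The minimal Python sketch below assumes the extra character is appended to the end of the canonical URL (as in this case) and that both versions show up in the crawl; the function name, the example URLs, and the trailing `-` are hypothetical. It can also produce false positives (for example, `/page1` and `/page12`), so treat the output as a list to review by hand.

```python
from typing import Iterable, List, Tuple


def find_appended_char_duplicates(urls: Iterable[str]) -> List[Tuple[str, str]]:
    """Return (suspect, canonical) pairs where suspect == canonical + one character."""
    seen = set(urls)
    pairs = []
    for url in sorted(seen):
        # Drop the last character and check whether that shorter URL was also crawled.
        if len(url) > 1 and url[:-1] in seen:
            pairs.append((url, url[:-1]))
    return pairs


if __name__ == "__main__":
    crawled = [
        "https://www.example.com/widgets/blue",
        "https://www.example.com/widgets/blue-",  # hypothetical duplicate
        "https://www.example.com/widgets/red",
    ]
    for suspect, canonical in find_appended_char_duplicates(crawled):
        print(f"{suspect} duplicates {canonical}")
```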

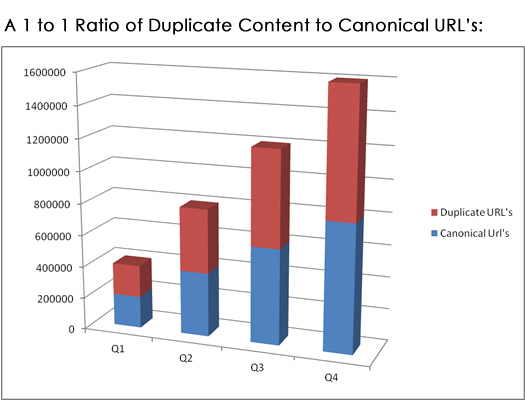

So, right off the bat, we are dealing with at least 200K duplicate pages. In addition, as more content is added to this section, more duplicate pages will be created over time (since the extra character is added to each URL). Also, the duplicate pages did not use the canonical URL tag, which didn’t help in this specific case. That said, I wouldn’t advocate using the canonical URL tag to fix this problem. Technical problems like this should be addressed at the code or structure level.
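To check whether a page declares a canonical URL at all, a crawl can extract the `rel="canonical"` link from each page’s HTML. Here is a minimal Python sketch using the standard library’s `html.parser`; the class name, helper name, and example URL are hypothetical. On the duplicate pages in this case, it would have returned `None`. Consistent with the point above, this is a diagnostic, not a fix.

```python
from html.parser import HTMLParser
from typing import Optional


class CanonicalFinder(HTMLParser):
    """Collects the href of the first <link rel="canonical"> on a page."""

    def __init__(self) -> None:
        super().__init__()
        self.canonical: Optional[str] = None

    def handle_starttag(self, tag, attrs):
        if tag != "link" or self.canonical is not None:
            return
        attr_map = dict(attrs)
        # rel may contain several space-separated tokens, so split before matching.
        rel_tokens = (attr_map.get("rel") or "").lower().split()
        if "canonical" in rel_tokens:
            self.canonical = attr_map.get("href")


def canonical_of(html: str) -> Optional[str]:
    """Return the canonical URL a page declares, or None if it declares none."""
    finder = CanonicalFinder()
    finder.feed(html)
    finder.close()
    return finder.canonical


if __name__ == "__main__":
    page = ('<html><head><link rel="canonical" '
            'href="https://www.example.com/widgets/blue"></head></html>')
    print(canonical_of(page))  # https://www.example.com/widgets/blue
    print(canonical_of("<html><head></head></html>"))  # None
```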

So, if this were left in place, the problem could generate an unlimited number of duplicate pages. If 500K pages ended up in that section, there would be 500K pages of duplicate content. Not good, so I dug deeper to find out exactly what was causing the problem.

The Root Problem – Faulty Redirects

Let’s face it, we all need to implement redirects at some point. And that introduces the possibility of a poor implementation, which can be catastrophic SEO-wise. It’s one of the reasons that website redesigns and CMS migrations are so risky. On that note, to learn how to avoid SEO disaster during a redesign or migration, you should check out my Search Engine Journal column on the subject.

Common redirect mistakes include using 302s instead of 301s, using meta refresh redirects, redirecting to the wrong pages, or having the redirect code bomb the URLs (mangle the destination URL). In this situation, we ran into the “bombing of URLs” problem. The redirects were faulty and pointed to URLs with an extra character.

The Solution – Fix the Redirect Code!

So, hundreds of thousands of duplicate pages were being generated, all due to one piece of redirect code on the server. The 301 redirects it produced simply added an extra character to the destination URL. That’s it. The fix will be implemented soon, and once the new redirect code is rolled out, the correct URLs will resolve.
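Once new redirect code is in place, it helps to verify each redirect without letting the client follow it, so you see exactly what the server returns. Here is a minimal Python sketch using the standard library’s `http.client`; the function names and URLs are hypothetical. It checks for a 301 (not a 302) and for a `Location` header that matches the expected destination exactly, and it calls out the extra-character case specifically. It assumes the server sends absolute URLs in `Location`; a relative value would be reported as a wrong destination.

```python
import http.client
from typing import List, Optional
from urllib.parse import urlsplit


def redirect_problems(status: int, location: Optional[str], expected: str) -> List[str]:
    """Compare one redirect response against the URL it should point to."""
    problems = []
    if status != 301:
        problems.append(f"expected a 301, got {status}")
    if location is None:
        problems.append("missing Location header")
    elif location != expected:
        # The failure mode from this audit: one extra character on the destination.
        if location.startswith(expected) and len(location) == len(expected) + 1:
            problems.append(f"extra character appended: {location}")
        else:
            problems.append(f"wrong destination: {location}")
    return problems


def check_redirect(url: str, expected: str, timeout: float = 10.0) -> List[str]:
    """Request `url` once (http.client never follows redirects) and validate it."""
    parts = urlsplit(url)
    conn_class = (http.client.HTTPSConnection if parts.scheme == "https"
                  else http.client.HTTPConnection)
    conn = conn_class(parts.netloc, timeout=timeout)
    try:
        path = parts.path or "/"
        if parts.query:
            path += "?" + parts.query
        conn.request("HEAD", path)
        response = conn.getresponse()
        return redirect_problems(response.status, response.getheader("Location"), expected)
    finally:
        conn.close()


if __name__ == "__main__":
    # Offline examples of what the checker reports.
    good = "https://www.example.com/widgets/blue"
    print(redirect_problems(301, good, good))        # []
    print(redirect_problems(301, good + "-", good))  # reports the extra character
    print(redirect_problems(302, good, good))        # reports the wrong status code
    # Against a local or test server you would call, e.g.:
    # check_redirect("http://localhost:8000/old-widgets/blue", good)
```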

This situation underscores the fact that even one small piece of code can have serious implications SEO-wise. Had this situation been left unchanged, it could have ended up generating an unlimited number of duplicate pages. Knowing the content on this site, my guess is the problem would have generated 500K-750K pages of duplicate content over the next 2-3 years.

How To Avoid This Situation

After reading this post, you might be scared that this could happen to you, or worse, that it’s happening right now. Below is a short list of things you can do to make sure it doesn’t. Of course, if you suspect you are already having problems, you should have an SEO audit performed.

- First, whenever you create redirects, make sure you have a system for testing them before they launch. You can do this in a number of ways, including on a local server or test server before releasing the final code to production. If you test the redirects thoroughly, you can nip serious problems in the bud.

- Second, make sure your XML sitemaps contain the canonical URLs for the pages at hand. Feeding Google and Bing the correct URLs can help them understand which ones should be considered canonical.

- Third, you should develop a strategy for using the canonical URL tag on the site. If the tag is present, it can help ensure that any duplicate pages pass their search equity to the canonical URLs. Note that I’m not saying you should leave a technical problem in place! Instead, I’m saying that having the canonical URL tag in place helps the engines pass any search equity to the correct pages on your site while you work out solutions to your technical problems.

- This final bullet assumes you are already experiencing problems with duplicate content caused by a technical problem. If you are, and you cannot determine what’s going on, then invest in a technical SEO audit. To me, audits provide the most bang for your SEO buck. They’re a great way to find out what’s truly going on with your site (beyond the problem I covered here).
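For the second bullet, a quick way to audit a sitemap is to compare its `<loc>` entries against the list of URLs you consider canonical. The minimal Python sketch below uses the standard library’s `xml.etree.ElementTree` and the sitemaps.org namespace; the function names and example URLs are hypothetical, and where the canonical list comes from (your CMS, a database export, etc.) is an assumption left to you.

```python
import xml.etree.ElementTree as ET
from typing import Iterable, List

# Namespace defined by the sitemaps.org protocol.
SITEMAP_NS = {"sm": "http://www.sitemaps.org/schemas/sitemap/0.9"}


def sitemap_locs(xml_text: str) -> List[str]:
    """Return every <loc> value listed under <url> in a sitemap."""
    root = ET.fromstring(xml_text)
    return [loc.text.strip() for loc in root.findall("sm:url/sm:loc", SITEMAP_NS)
            if loc.text]


def non_canonical_entries(xml_text: str, canonical_urls: Iterable[str]) -> List[str]:
    """Return sitemap URLs that are not in the canonical set."""
    canonical = set(canonical_urls)
    return [url for url in sitemap_locs(xml_text) if url not in canonical]


if __name__ == "__main__":
    sitemap = (
        '<urlset xmlns="http://www.sitemaps.org/schemas/sitemap/0.9">'
        "<url><loc>https://www.example.com/widgets/blue</loc></url>"
        "<url><loc>https://www.example.com/widgets/blue-</loc></url>"
        "</urlset>"
    )
    canonical = ["https://www.example.com/widgets/blue"]
    print(non_canonical_entries(sitemap, canonical))
    # ['https://www.example.com/widgets/blue-']
```

Any URL this reports is either a duplicate that should be dropped from the sitemap or a canonical page missing from your list, so each one is worth a look.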

Summary – Know Your Site

This case emphasizes something I’ve said a thousand times over the past few years. It’s incredibly important to have a sound site structure in order to perform at your highest level SEO-wise. Coding problems, site structure issues, flawed redirects, etc. can kill your SEO efforts. It’s one of the reasons that I believe SEO audits are critically important. They can catch all types of SEO issues and provide ways to remedy those problems. You know, like generating hundreds of thousands of duplicate pages. :)

GG